History of computers: A brief timeline

The history of computers began with primitive designs in the early 19th century and went on to change the world during the 20th century.


The history of computers goes back over 200 years. First theorized by mathematicians and entrepreneurs, mechanical calculating machines were designed and built during the 19th century to solve increasingly complex number-crunching challenges. Advances in technology enabled ever more complex computers by the early 20th century, and computers became larger and more powerful.

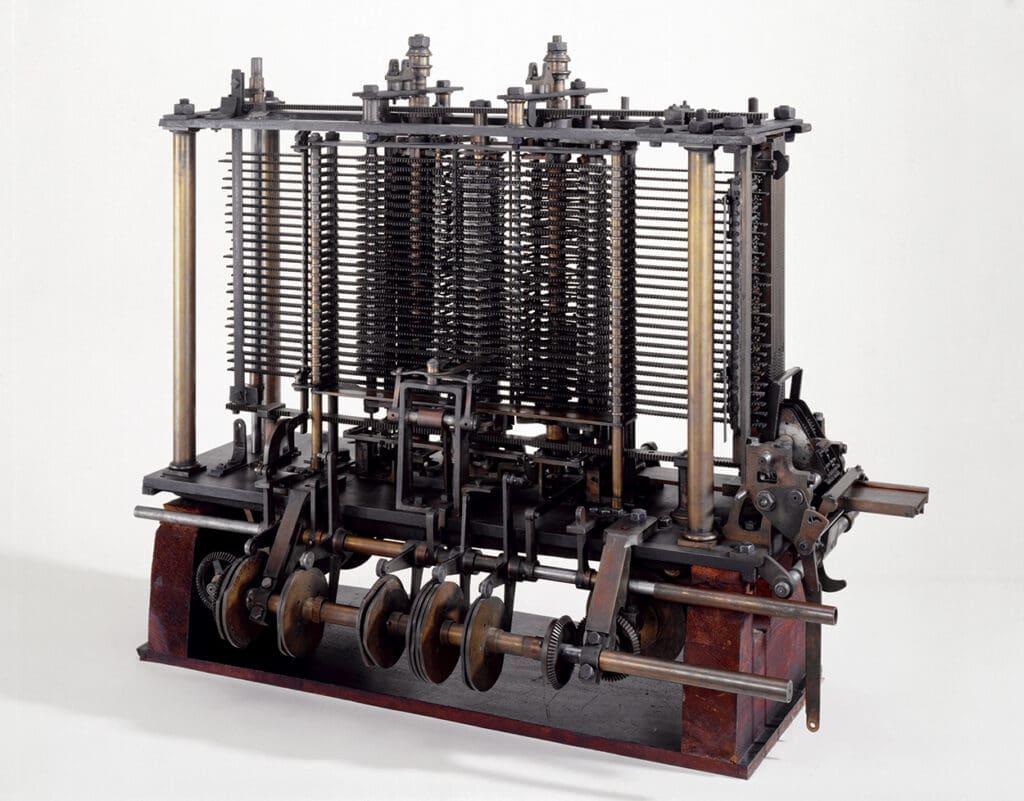

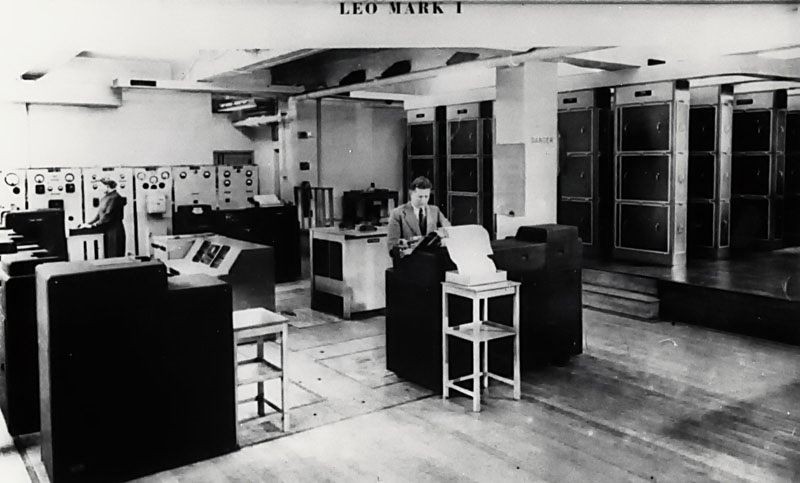

Today, computers are almost unrecognizable from designs of the 19th century, such as Charles Babbage's Analytical Engine, or even from the huge computers of the 20th century that occupied whole rooms, such as the Electronic Numerical Integrator and Computer (ENIAC).

Here's a brief history of computers, from their primitive number-crunching origins to the powerful modern-day machines that surf the Internet, run games and stream multimedia.

19th century

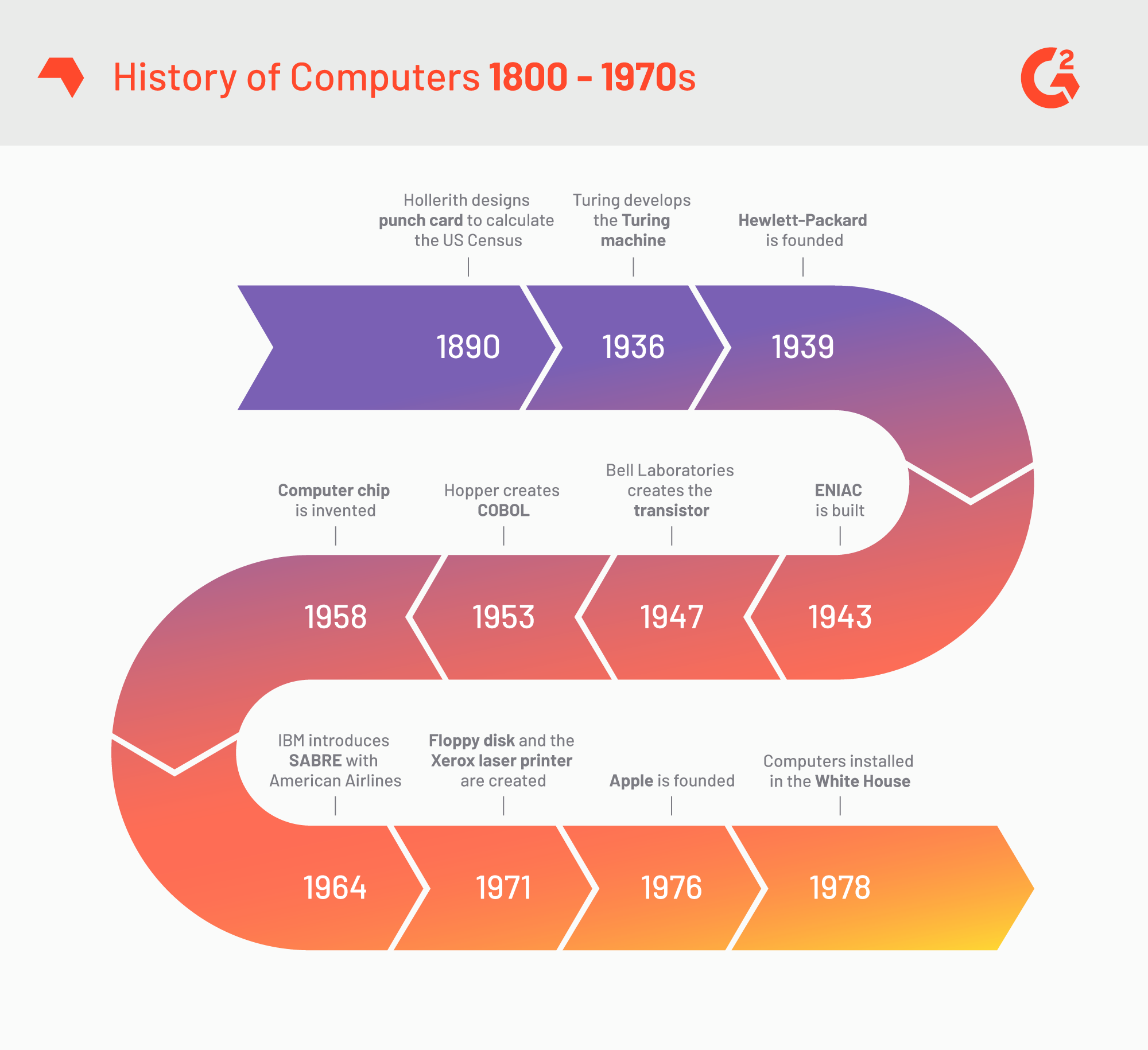

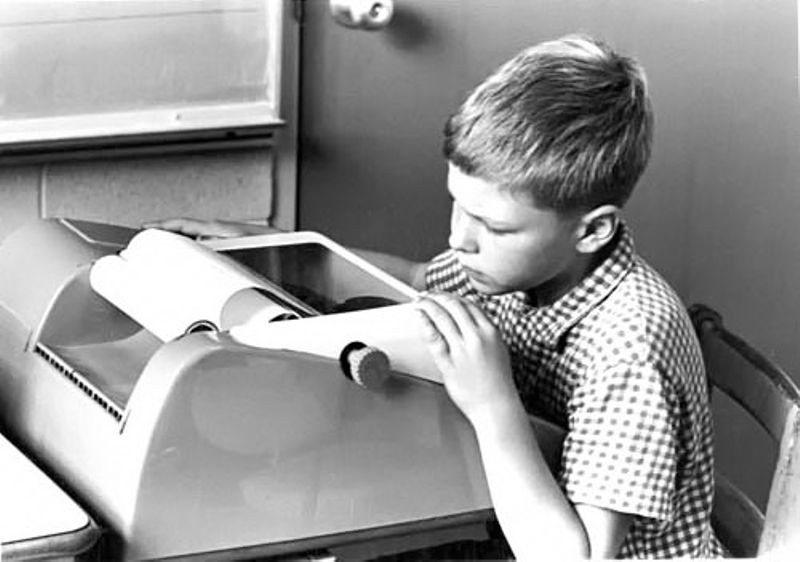

1801: Joseph Marie Jacquard, a French merchant and inventor, invents a loom that uses punched wooden cards to automatically weave fabric designs. Early computers would use similar punch cards.

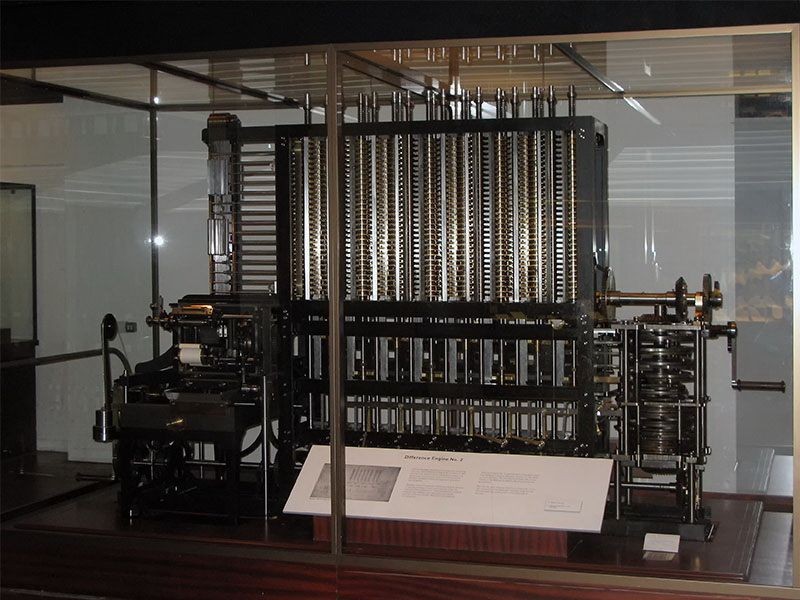

1821: English mathematician Charles Babbage conceives of a steam-driven calculating machine that would be able to compute tables of numbers. Funded by the British government, the project, called the "Difference Engine," fails due to the lack of technology at the time, according to the University of Minnesota.

1843: Ada Lovelace, an English mathematician and the daughter of poet Lord Byron, writes the world's first computer program. According to Anna Siffert, a professor of theoretical mathematics at the University of Münster in Germany, Lovelace writes the first program while translating a paper on Babbage's Analytical Engine from French into English. "She also provides her own comments on the text. Her annotations, simply called 'notes,' turn out to be three times as long as the actual transcript," Siffert wrote in an article for The Max Planck Society. "Lovelace also adds a step-by-step description for computation of Bernoulli numbers with Babbage's machine, basically an algorithm, which, in effect, makes her the world's first computer programmer." Bernoulli numbers are a sequence of rational numbers often used in computation.
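
To make the idea of a step-by-step procedure for Bernoulli numbers concrete, here is a minimal modern sketch in Python. It is not a reconstruction of Lovelace's own procedure for the Analytical Engine; it assumes the standard textbook recurrence (with the convention B_1 = -1/2), and the function name `bernoulli_numbers` is purely illustrative.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n_max):
    """Return [B_0, ..., B_n_max] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n_max + 1):
        # Textbook recurrence: sum_{k=0}^{m} C(m+1, k) * B_k = 0, solved for B_m.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


if __name__ == "__main__":
    for i, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{i} = {value}")
```

Exact fractions are used because the Bernoulli numbers are rational; floating-point arithmetic would accumulate rounding error as the index grows.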

1853: Swedish inventor Per Georg Scheutz and his son Edvard design the world's first printing calculator. The machine is significant for being the first to "compute tabular differences and print the results," according to Uta C. Merzbach's book, "Georg Scheutz and the First Printing Calculator" (Smithsonian Institution Press, 1977).

1890: Herman Hollerith designs a punch-card system to help calculate the 1890 U.S. Census. The machine saves the government several years of calculations, and the U.S. taxpayer approximately $5 million, according to Columbia University. Hollerith later establishes a company that will eventually become International Business Machines Corporation (IBM).

Early 20th century

1931: At the Massachusetts Institute of Technology (MIT), Vannevar Bush invents and builds the Differential Analyzer, the first large-scale automatic general-purpose mechanical analog computer, according to Stanford University.

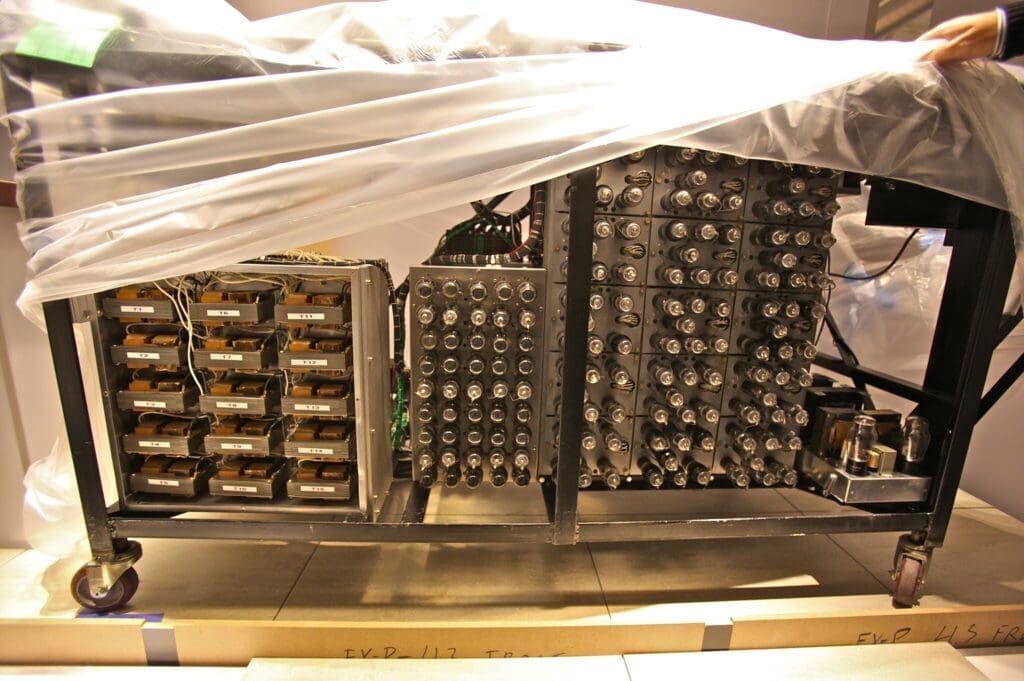

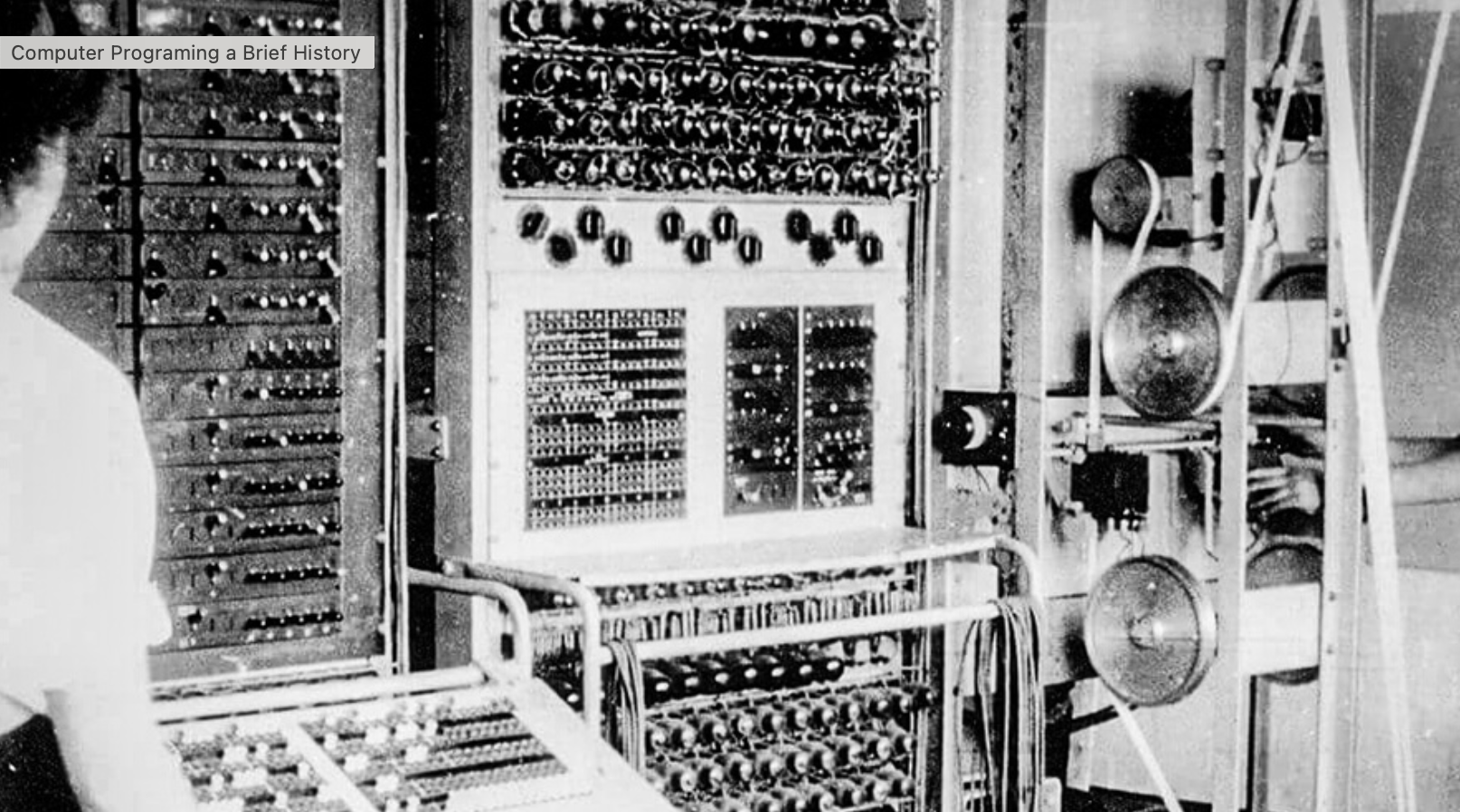

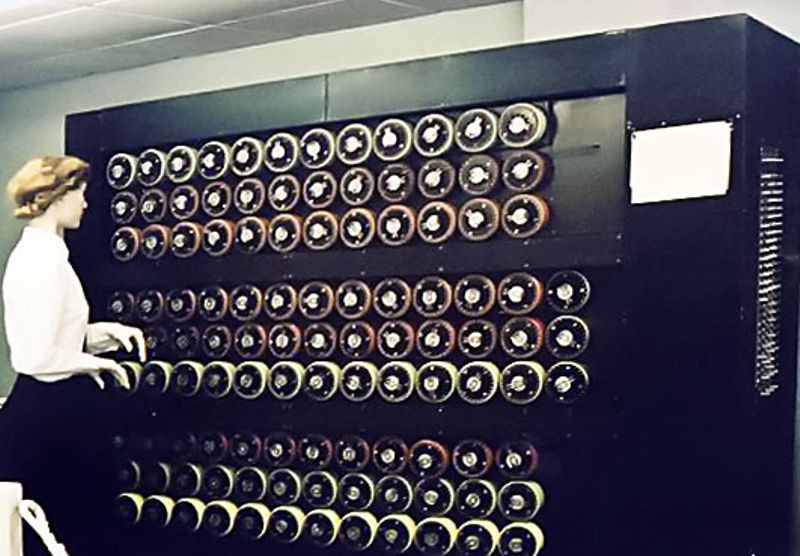

1936: Alan Turing, a British scientist and mathematician, presents the principle of a universal machine, later called the Turing machine, in a paper called "On Computable Numbers…," according to Chris Bernhardt's book "Turing's Vision" (The MIT Press, 2017). Turing machines are capable of computing anything that is computable. The central concept of the modern computer is based on his ideas. Turing is later involved in the development of the Turing-Welchman Bombe, an electro-mechanical device designed to decipher Nazi codes during World War II, according to the UK's National Museum of Computing.
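
The idea of a Turing machine (a head that reads and writes symbols on a tape according to a fixed table of rules) can be sketched in a few lines of Python. This is a toy illustration rather than Turing's own formulation; the names `run_turing_machine` and `INCREMENT_RULES`, the `_` blank symbol and the step limit are all assumptions made for the example. The rule table below adds one to a binary number.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=10_000):
    """Run a one-tape machine until it reaches the 'halt' state; return the tape."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# (state, symbol read) -> (symbol to write, head move, next state)
INCREMENT_RULES = {
    ("start", "0"): ("0", "R", "start"),  # scan right to the end of the number
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),  # passed the last digit, start adding
    ("carry", "1"): ("0", "L", "carry"),  # 1 + 1 = 0, carry continues left
    ("carry", "0"): ("1", "L", "halt"),   # absorb the carry and stop
    ("carry", "_"): ("1", "L", "halt"),   # number was all 1s, add a new digit
}

if __name__ == "__main__":
    print(run_turing_machine(INCREMENT_RULES, "1011"))
```

The step limit exists only so that a faulty rule table cannot loop forever in this sketch; an idealized Turing machine has no such bound.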

1937: John Vincent Atanasoff, a professor of physics and mathematics at Iowa State University, submits a grant proposal to build the first electric-only computer, without using gears, cams, belts or shafts.

1939: David Packard and Bill Hewlett found the Hewlett-Packard Company in Palo Alto, California. The pair decide the name of their new company by the toss of a coin, and Hewlett-Packard's first headquarters are in Packard's garage, according to MIT.

1941: German inventor and engineer Konrad Zuse completes his Z3 machine, the world's earliest digital computer, according to Gerard O'Regan's book "A Brief History of Computing" (Springer, 2021). The machine is destroyed during a bombing raid on Berlin during World War II. Zuse flees the German capital after the defeat of Nazi Germany and later releases the world's first commercial digital computer, the Z4, in 1950, according to O'Regan.

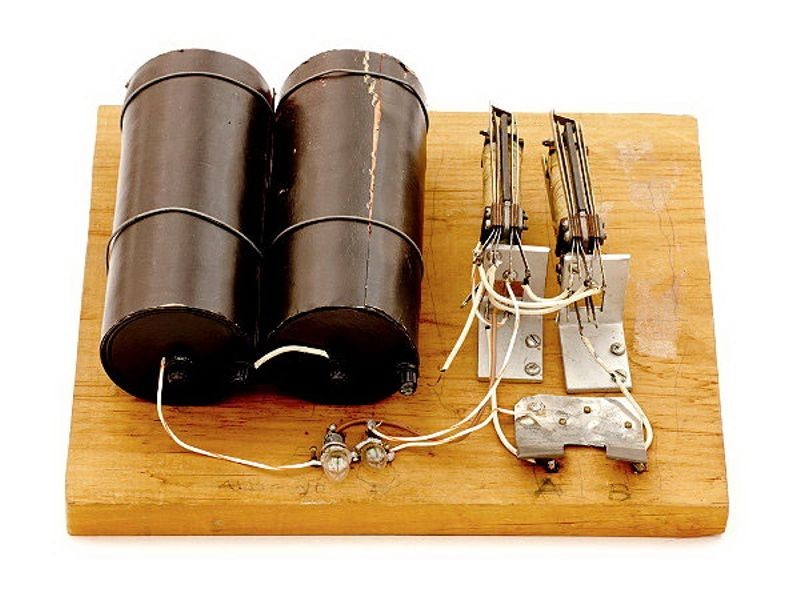

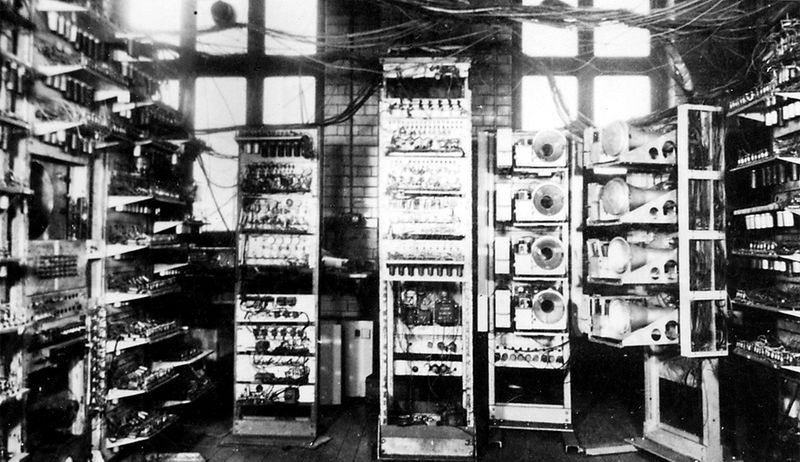

1941: Atanasoff and his graduate student, Clifford Berry, design the first digital electronic computer in the U.S., called the Atanasoff-Berry Computer (ABC). This marks the first time a computer is able to store information in its main memory, and the machine is capable of performing one operation every 15 seconds, according to the book "Birthing the Computer" (Cambridge Scholars Publishing, 2016).

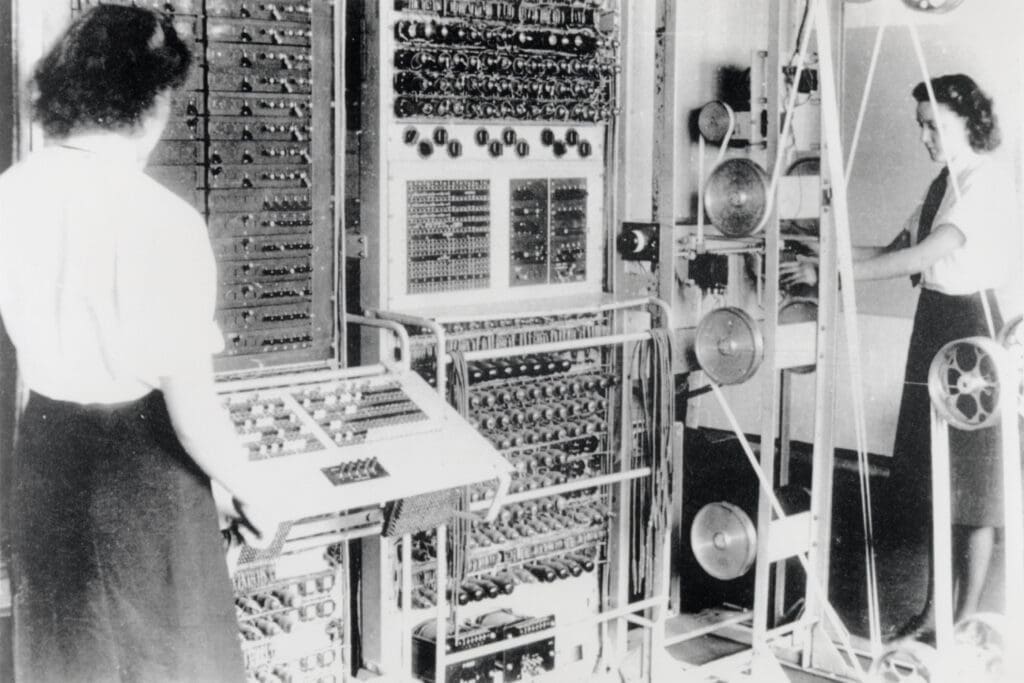

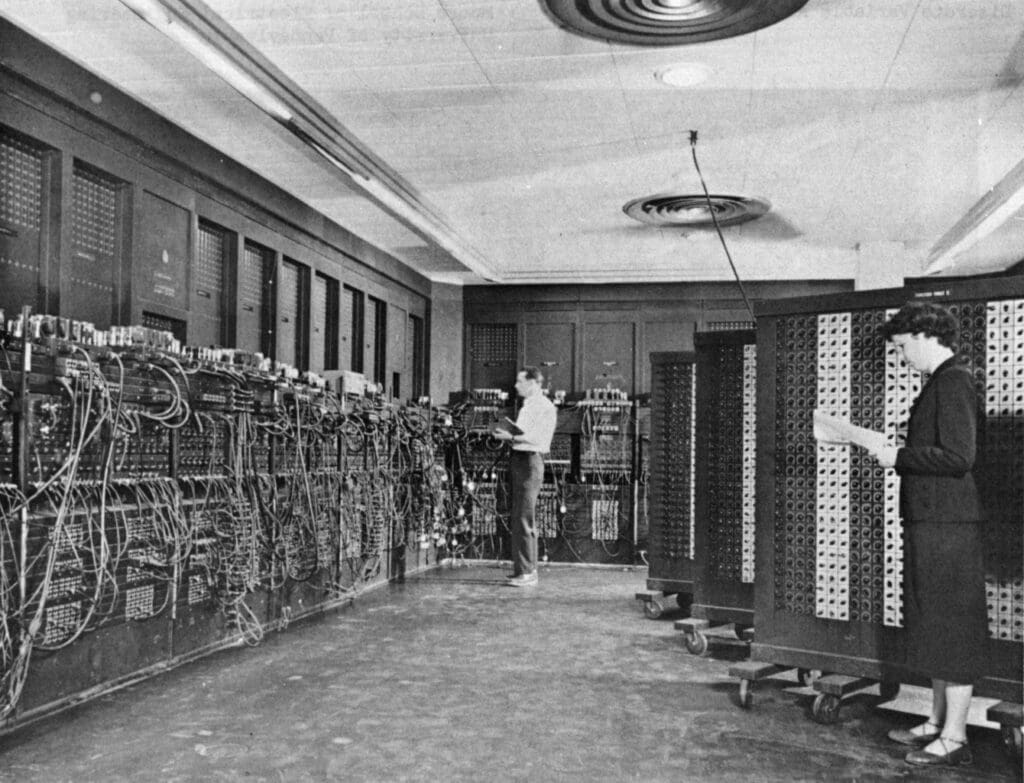

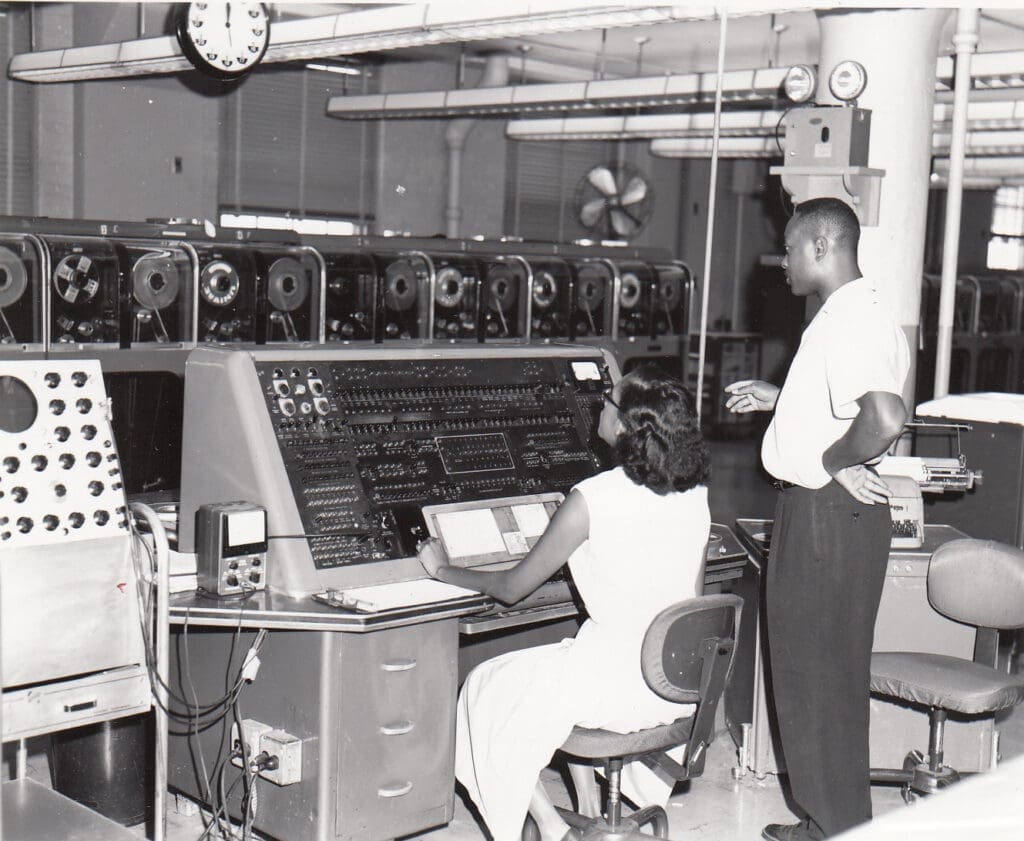

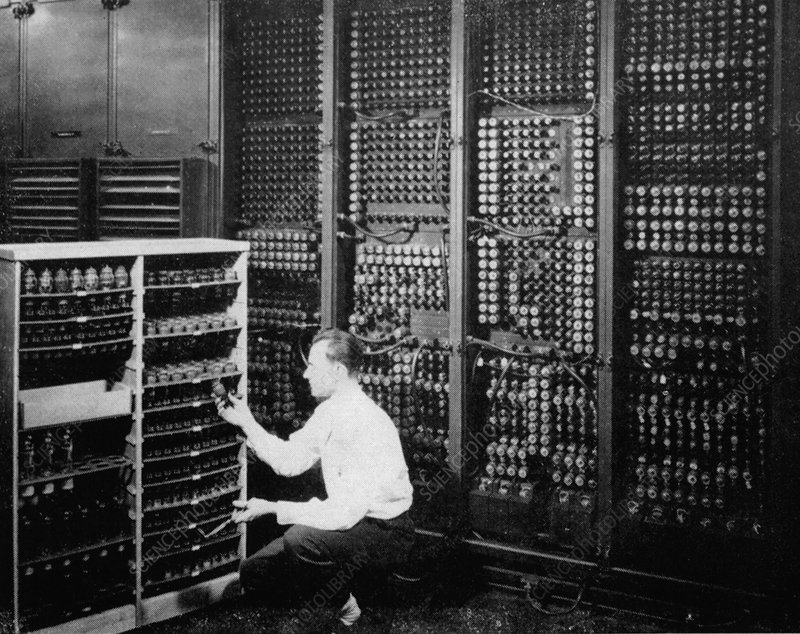

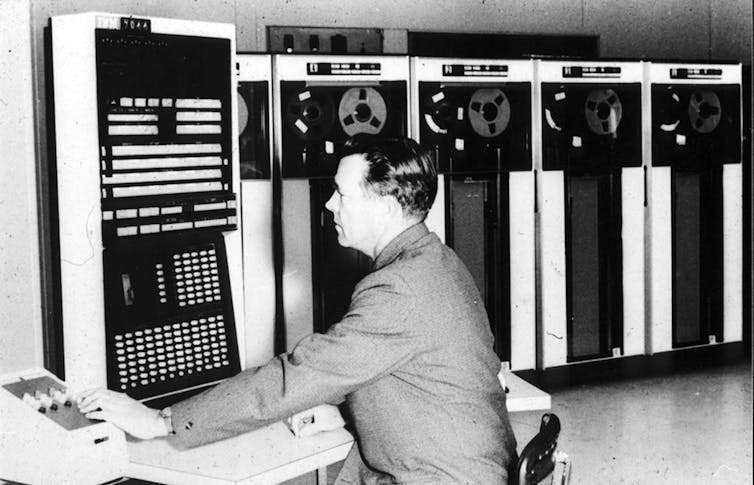

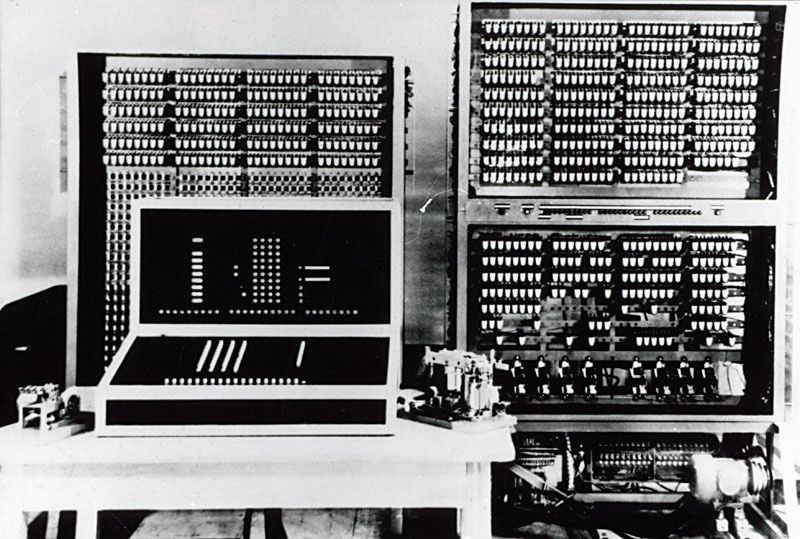

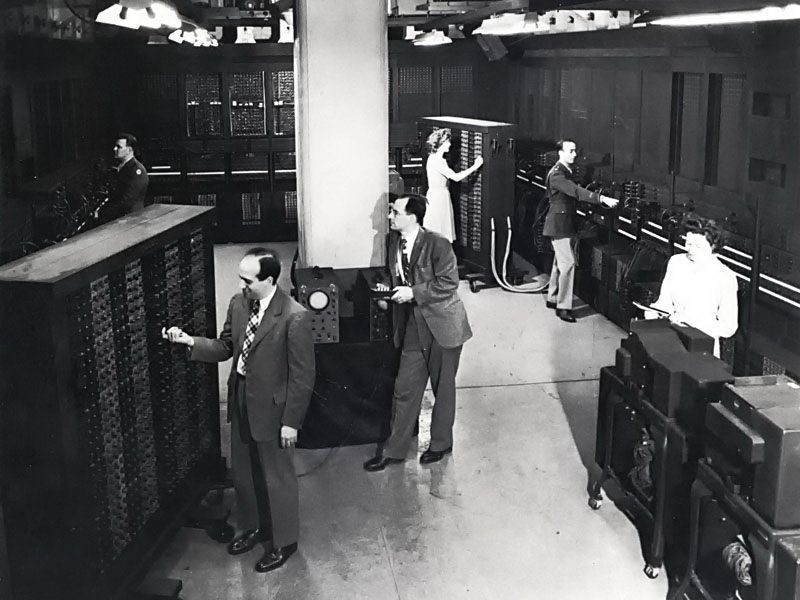

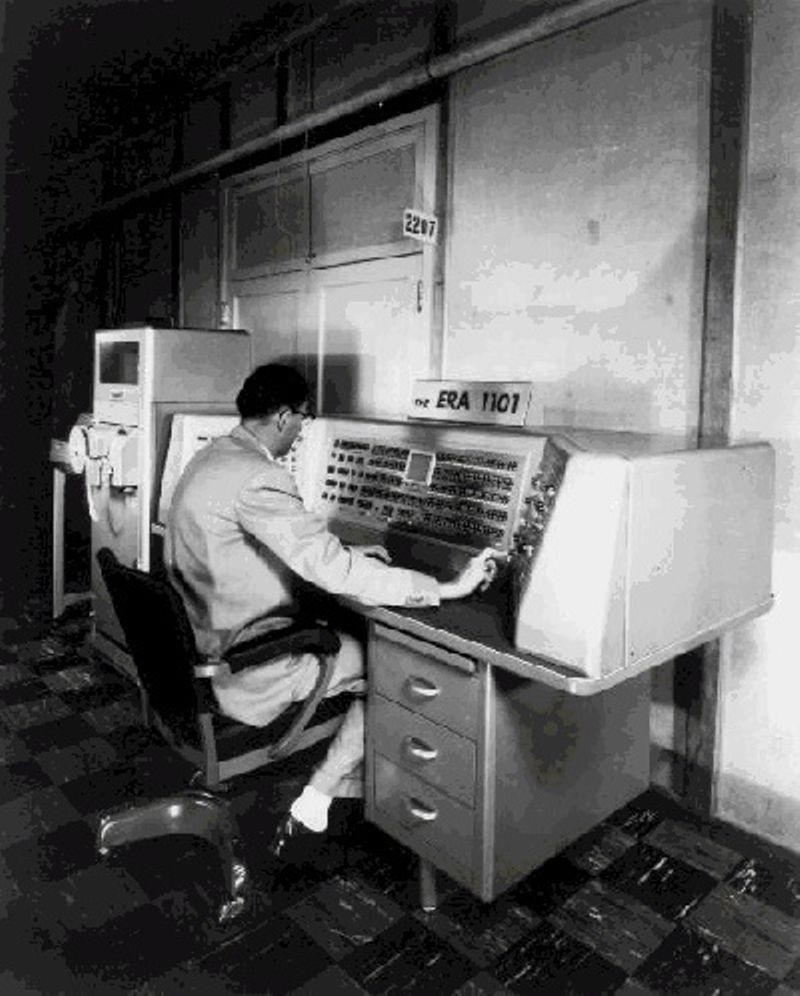

1945: Two professors at the University of Pennsylvania, John Mauchly and J. Presper Eckert, design and build the Electronic Numerical Integrator and Computer (ENIAC). The machine is the first "automatic, general-purpose, electronic, decimal, digital computer," according to Edwin D. Reilly's book "Milestones in Computer Science and Information Technology" (Greenwood Press, 2003).

1946: Mauchly and Eckert leave the University of Pennsylvania and receive funding from the Census Bureau to build the UNIVAC, the first commercial computer for business and government applications.

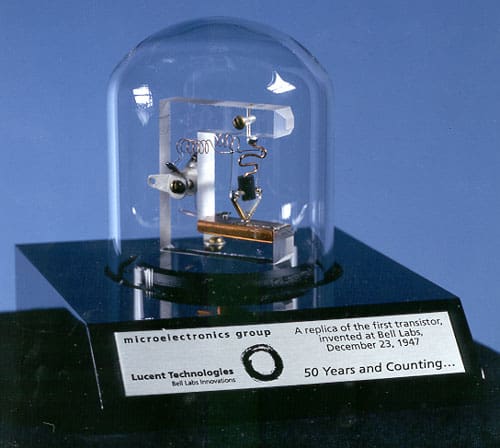

1947: William Shockley, John Bardeen and Walter Brattain of Bell Laboratories invent the transistor. They discover how to make an electric switch with solid materials and without the need for a vacuum.

1949: A team at the University of Cambridge develops the Electronic Delay Storage Automatic Calculator (EDSAC), "the first practical stored-program computer," according to O'Regan. "EDSAC ran its first program in May 1949 when it calculated a table of squares and a list of prime numbers," O'Regan wrote. In November 1949, scientists with the Council of Scientific and Industrial Research (CSIR), now called CSIRO, build Australia's first digital computer, called the Council for Scientific and Industrial Research Automatic Computer (CSIRAC). CSIRAC is the first digital computer in the world to play music, according to O'Regan.

Late 20th century

1953: Grace Hopper develops the first computer language, which eventually becomes known as COBOL, which stands for COmmon Business-Oriented Language, according to the National Museum of American History. Hopper is later dubbed the "First Lady of Software" in her posthumous Presidential Medal of Freedom citation. Thomas Johnson Watson Jr., son of IBM CEO Thomas Johnson Watson Sr., conceives the IBM 701 EDPM to help the United Nations keep tabs on Korea during the war.

1954: John Backus and his team of programmers at IBM publish a paper describing their newly created FORTRAN programming language, an acronym for FORmula TRANslation, according to MIT.

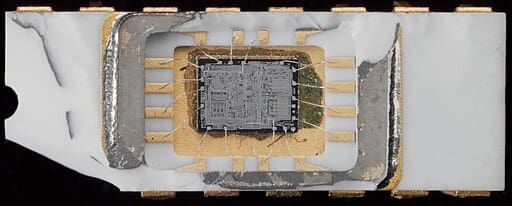

1958: Jack Kilby and Robert Noyce unveil the integrated circuit, known as the computer chip. Kilby is later awarded the Nobel Prize in Physics for his work.

1968: Douglas Engelbart reveals a prototype of the modern computer at the Fall Joint Computer Conference, San Francisco. His presentation, called "A Research Center for Augmenting Human Intellect," includes a live demonstration of his computer, including a mouse and a graphical user interface (GUI), according to the Doug Engelbart Institute. This marks the development of the computer from a specialized machine for academics to a technology that is more accessible to the general public.

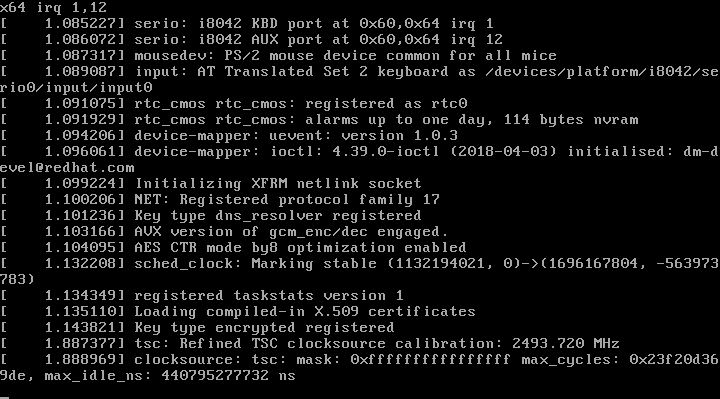

1969: Ken Thompson, Dennis Ritchie and a group of other developers at Bell Labs produce UNIX, an operating system that made "large-scale networking of diverse computing systems, and the internet, practical," according to Bell Labs. The team behind UNIX continues to develop the operating system using the C programming language, which they also optimize.

1970: The newly formed Intel unveils the Intel 1103, the first Dynamic Random-Access Memory (DRAM) chip.

1971: A team of IBM engineers led by Alan Shugart invents the "floppy disk," enabling data to be shared among different computers.

1972: Ralph Baer, a German-American engineer, releases Magnavox Odyssey, the world's first home game console, in September 1972, according to the Computer Museum of America. Months later, entrepreneur Nolan Bushnell and engineer Al Alcorn with Atari release Pong, the world's first commercially successful video game.

1973: Robert Metcalfe, a member of the research staff for Xerox, develops Ethernet for connecting multiple computers and other hardware.

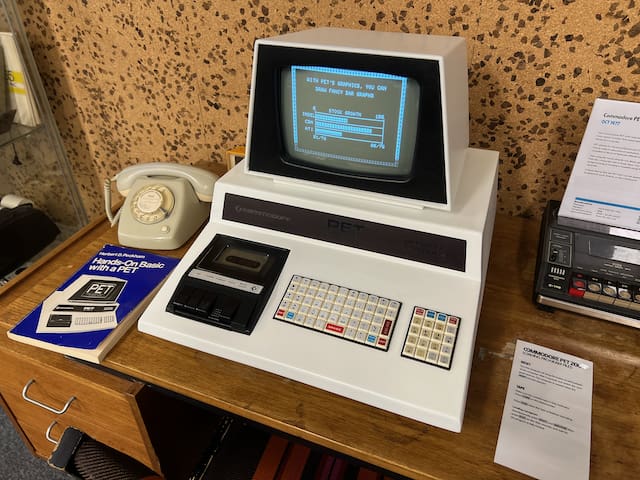

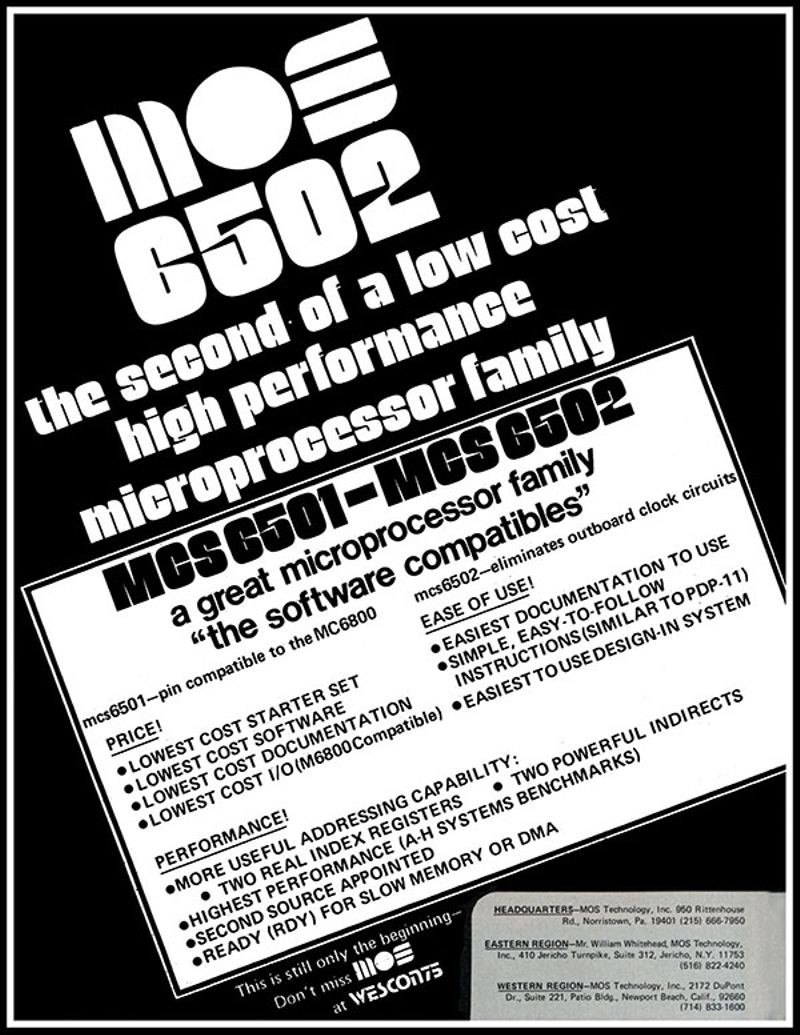

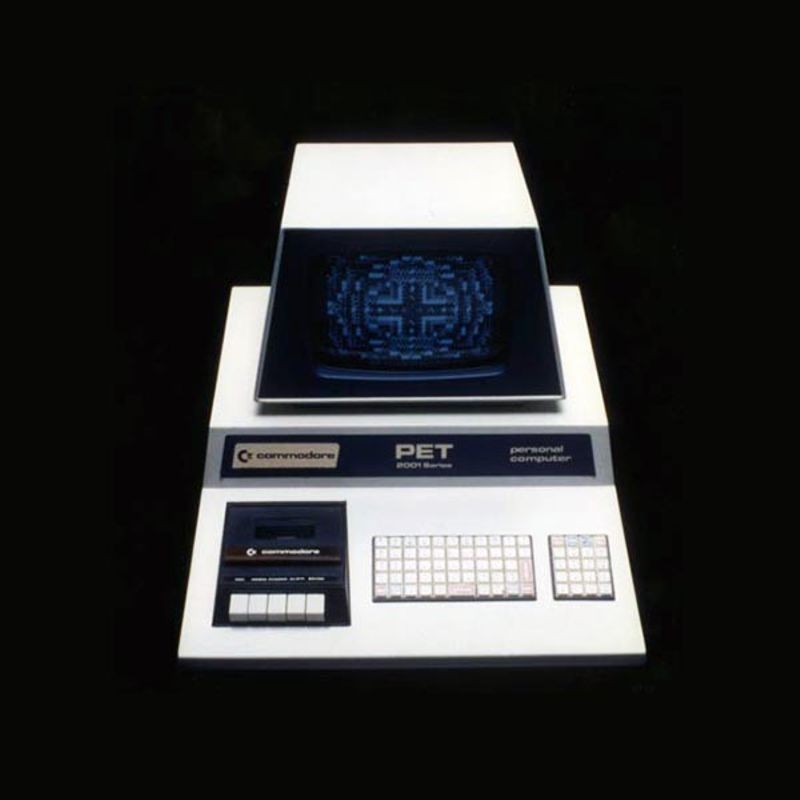

1977: The Commodore Personal Electronic Transactor (PET) is released onto the home computer market, featuring an MOS Technology 8-bit 6502 microprocessor, which controls the screen, keyboard and cassette player. The PET is especially successful in the education market, according to O'Regan.

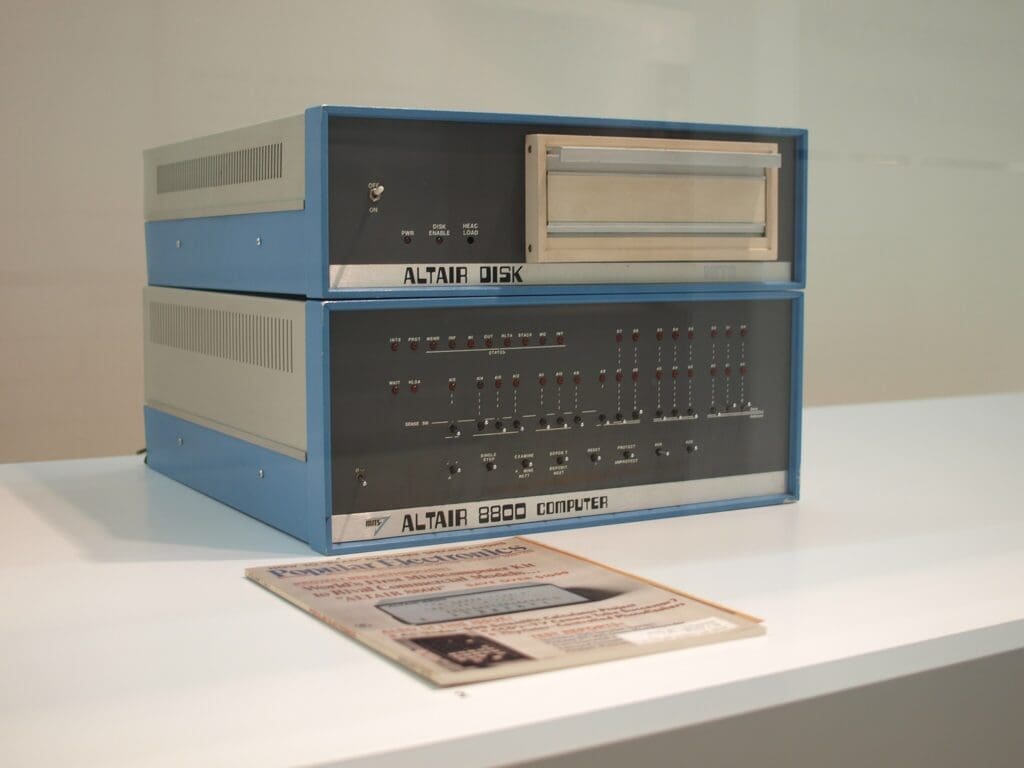

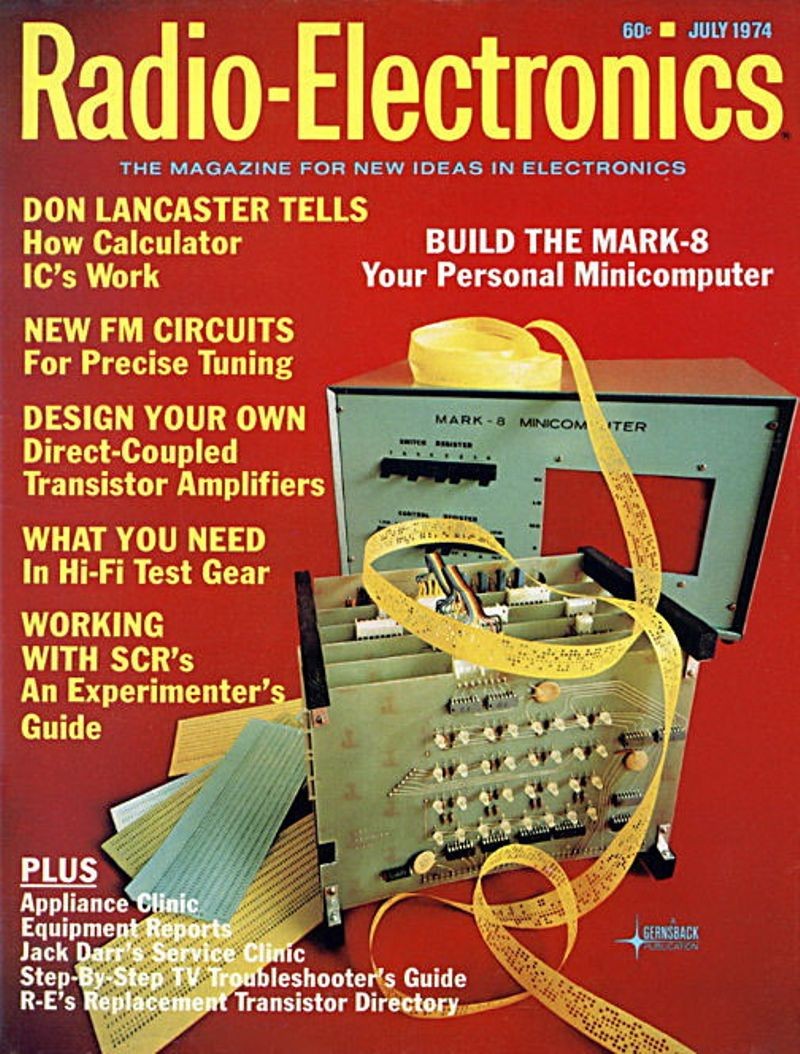

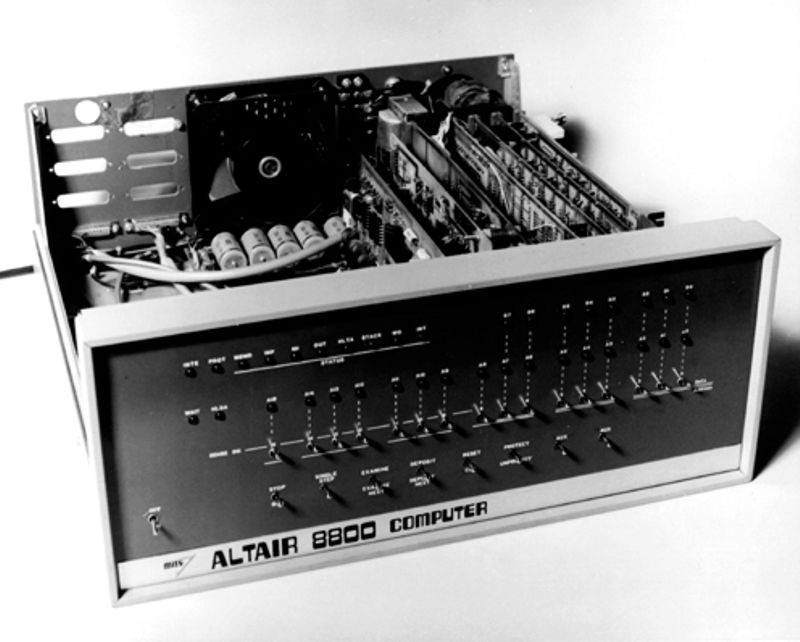

1975: The cover of the January issue of "Popular Electronics" highlights the Altair 8800 as the "world's first minicomputer kit to rival commercial models." After seeing the magazine issue, two "computer geeks," Paul Allen and Bill Gates, offer to write software for the Altair, using the new BASIC language. On April 4, after the success of this first endeavor, the two childhood friends form their own software company, Microsoft.

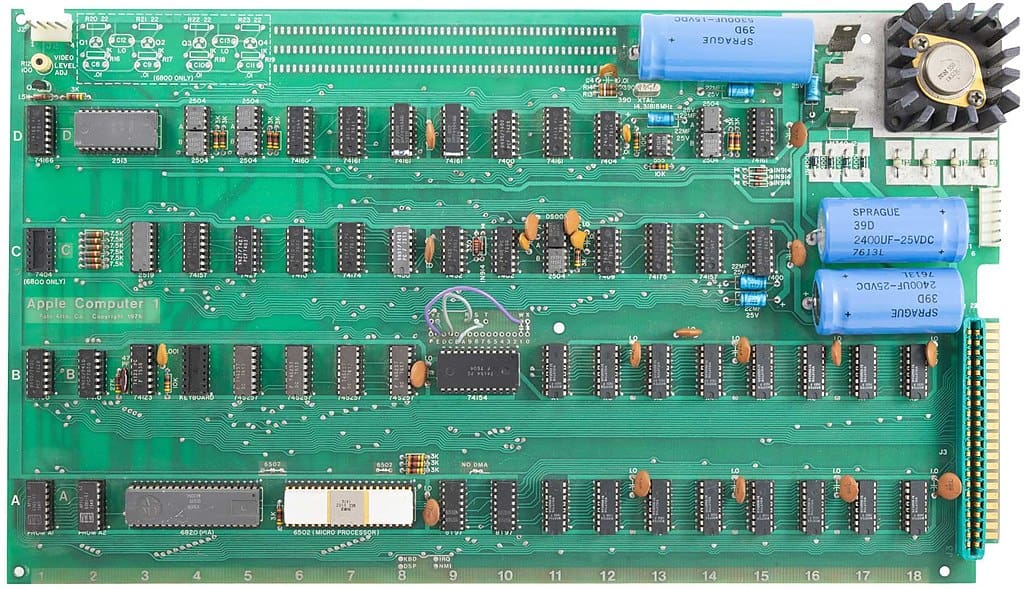

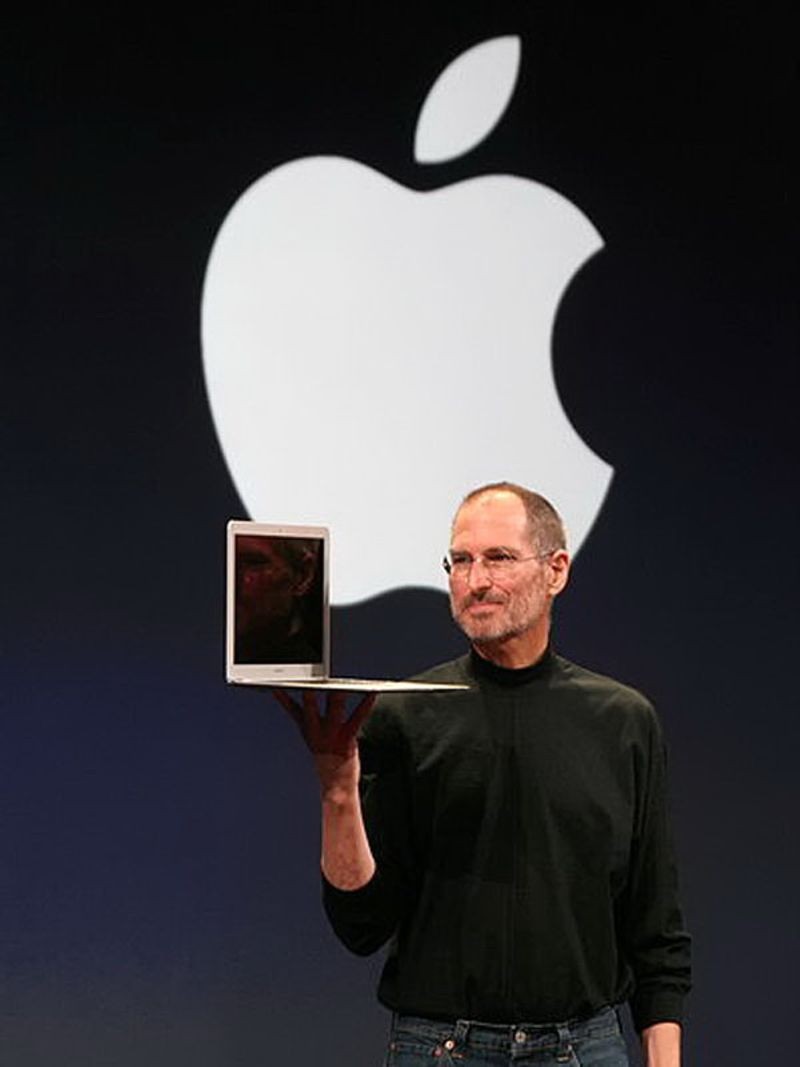

1976: Steve Jobs and Steve Wozniak co-found Apple Computer on April Fool's Day. They unveil Apple I, the first computer with a single circuit board and ROM (Read Only Memory), according to MIT.

1977: Radio Shack begins its initial production run of 3,000 TRS-80 Model 1 computers (disparagingly known as the "Trash 80"), priced at $599, according to the National Museum of American History. Within a year, the company takes 250,000 orders for the computer, according to the book "How TRS-80 Enthusiasts Helped Spark the PC Revolution" (The Seeker Books, 2007).

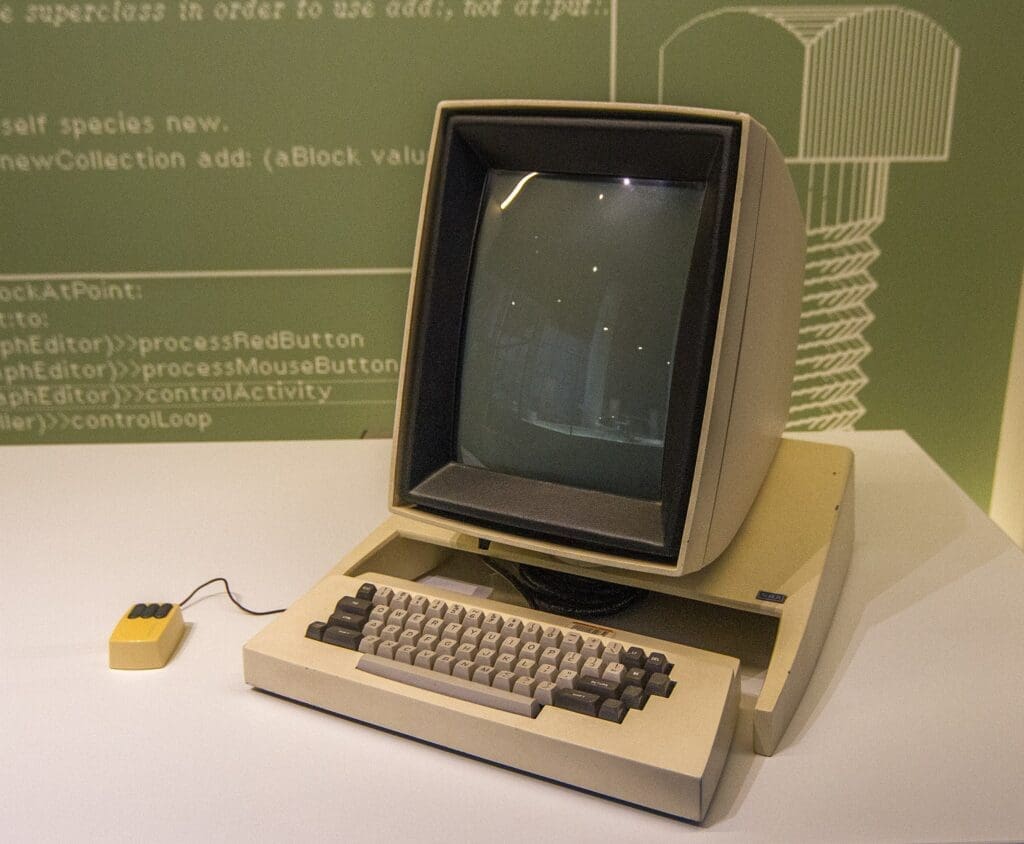

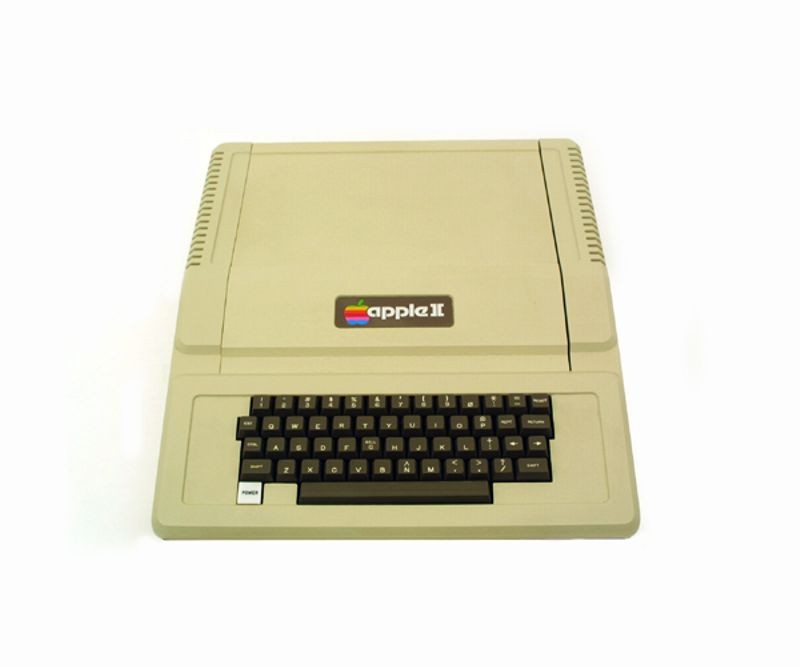

1977: The first West Coast Computer Faire is held in San Francisco. Jobs and Wozniak present the Apple II computer at the Faire, which includes color graphics and features an audio cassette drive for storage.

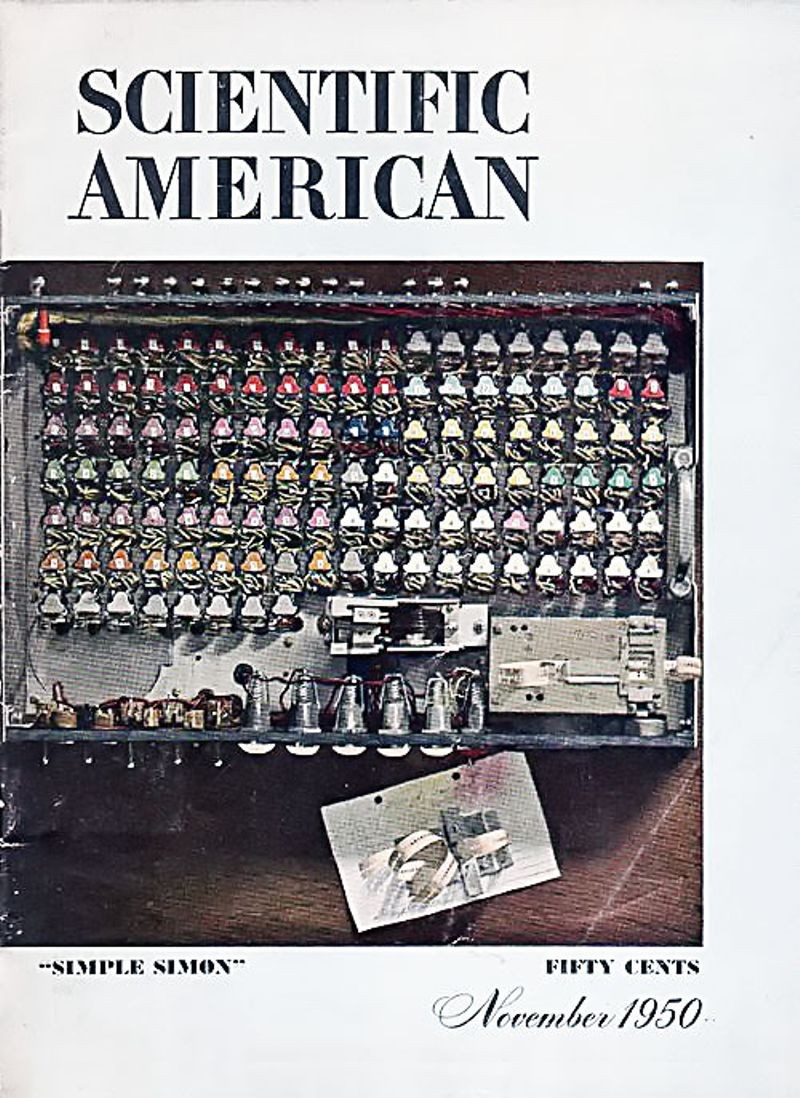

1978: VisiCalc, the first computerized spreadsheet program, is introduced.

1979: MicroPro International, founded by software engineer Seymour Rubenstein, releases WordStar, the world's first commercially successful word processor. WordStar is programmed by Rob Barnaby, and includes 137,000 lines of code, according to Matthew G. Kirschenbaum's book "Track Changes: A Literary History of Word Processing" (Harvard University Press, 2016).

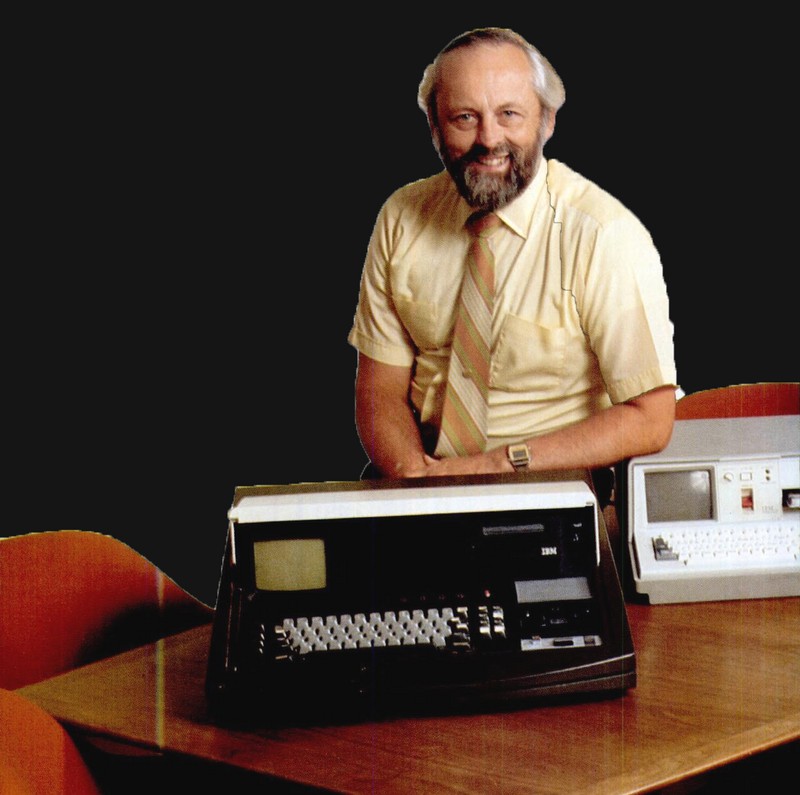

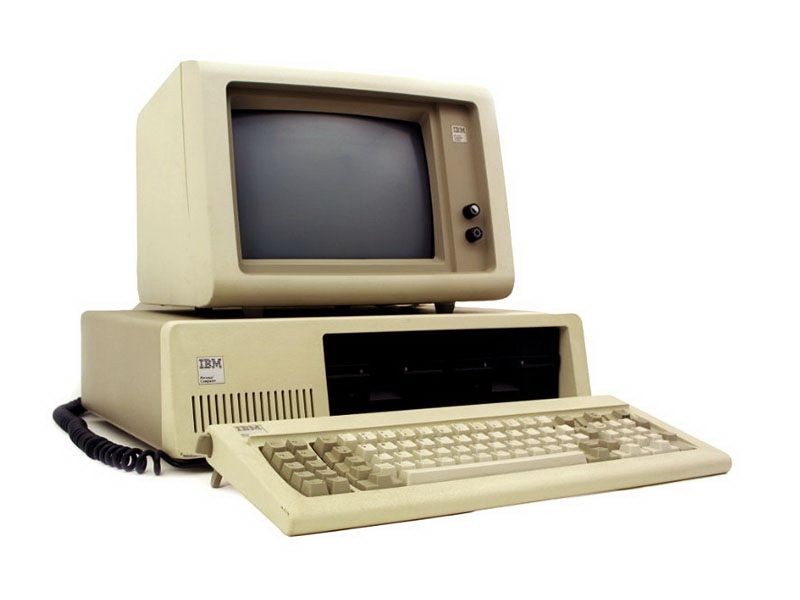

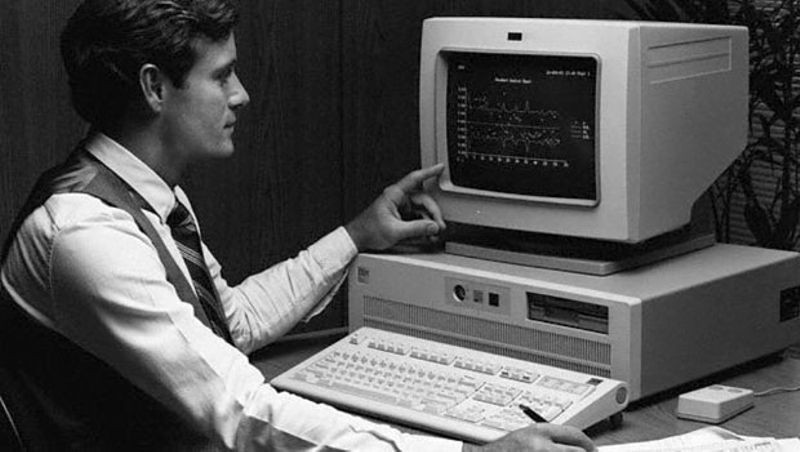

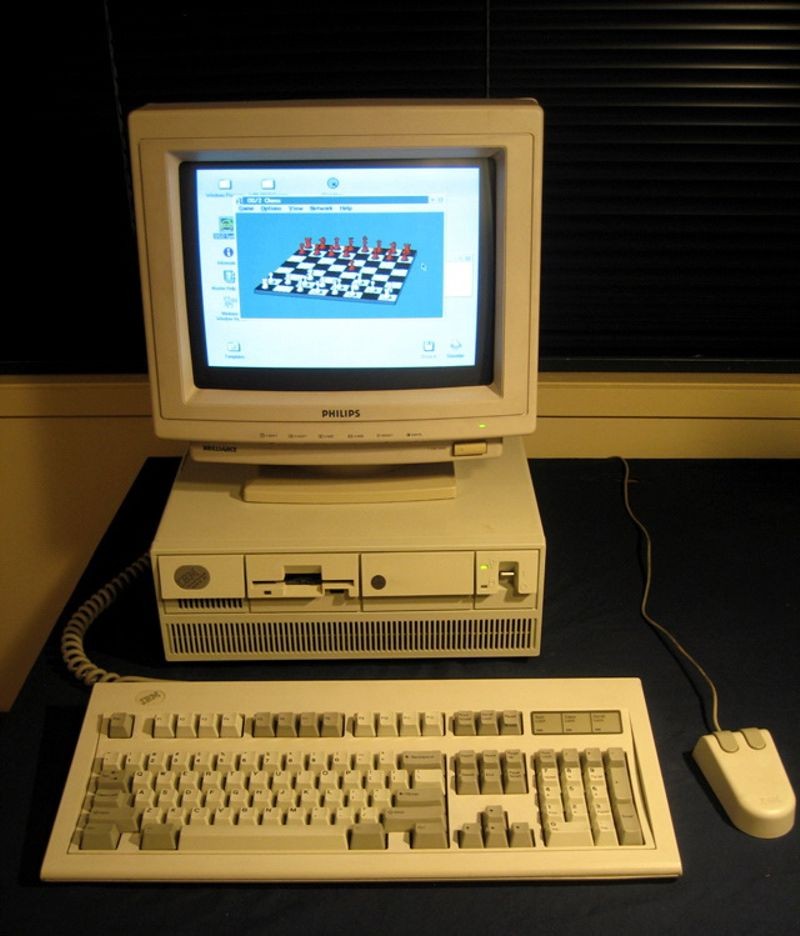

1981: "Acorn," IBM's first personal computer, is released onto the market at a price point of $1,565, according to IBM. Acorn uses the MS-DOS operating system from Microsoft. Optional features include a display, printer, two diskette drives, extra memory, a game adapter and more.

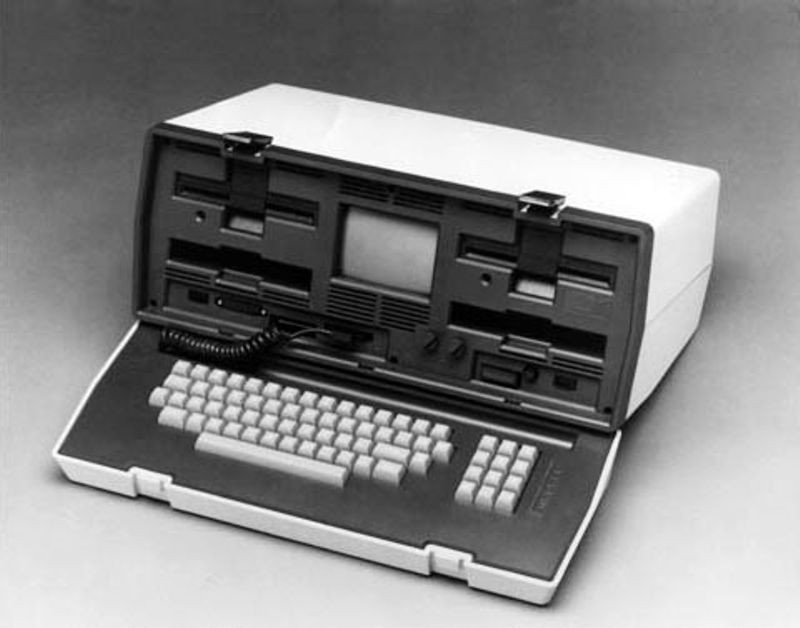

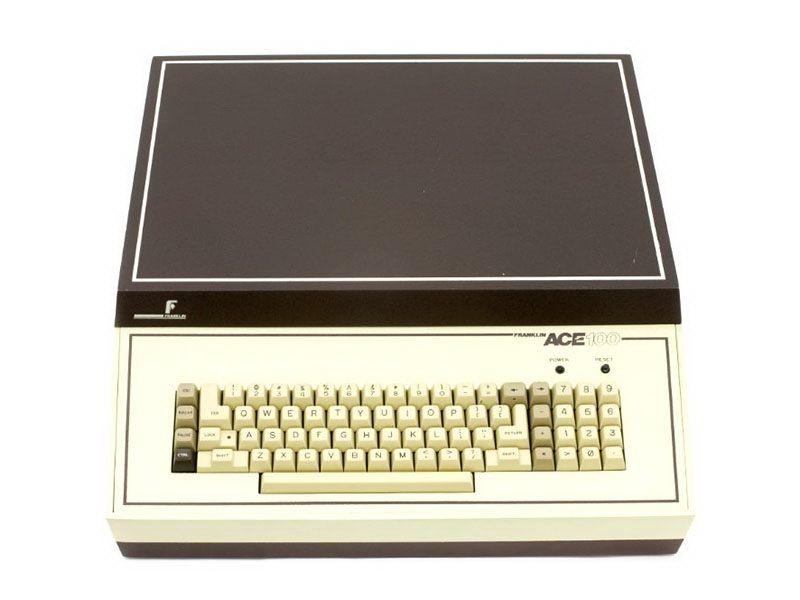

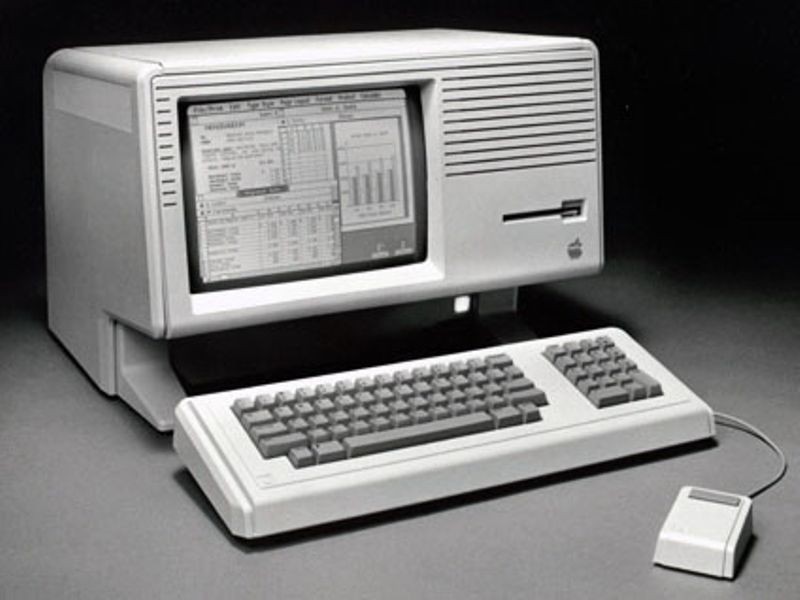

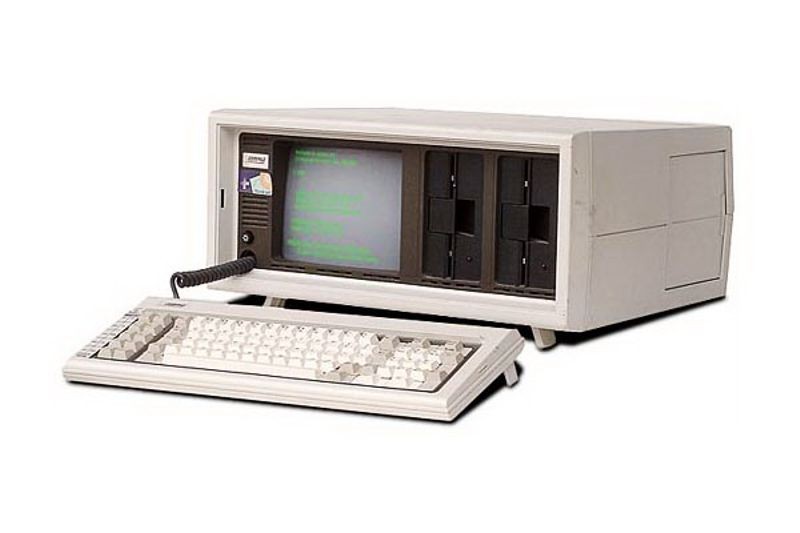

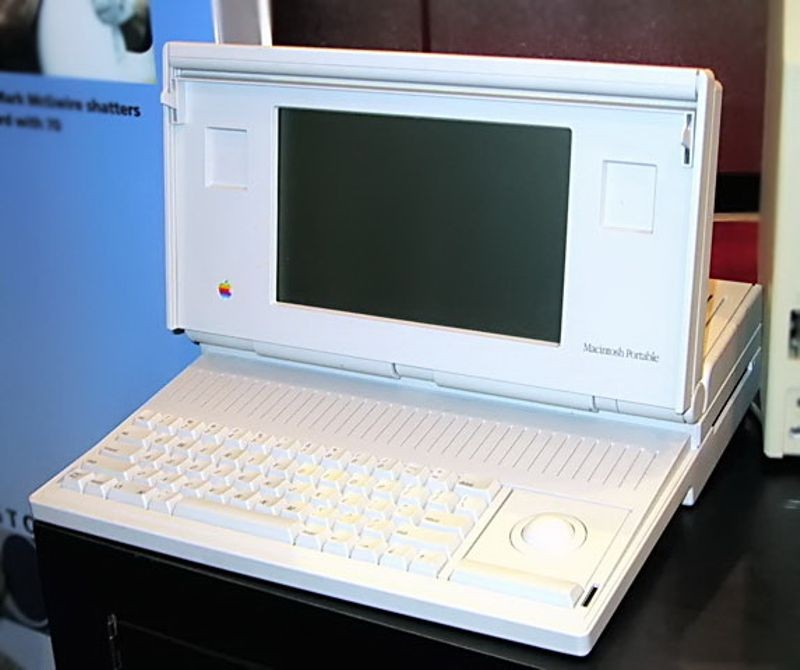

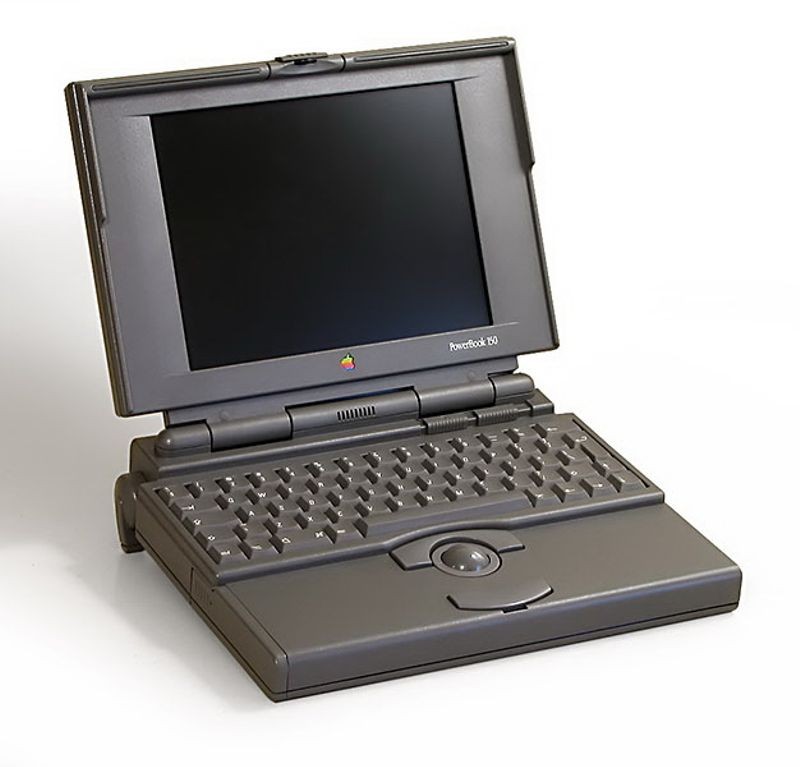

1983: The Apple Lisa, standing for "Local Integrated Software Architecture" but also the name of Steve Jobs' daughter, according to the National Museum of American History (NMAH), is the first personal computer to feature a GUI. The machine also includes a drop-down menu and icons. Also this year, the Gavilan SC is released and is the first portable computer with a flip-form design and the very first to be sold as a "laptop."

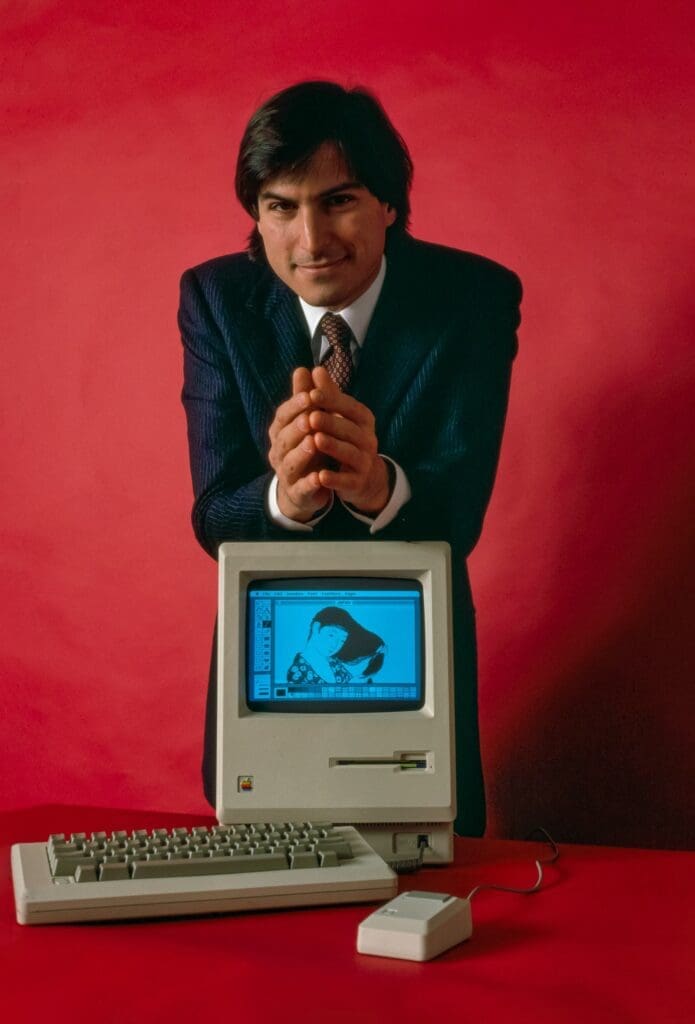

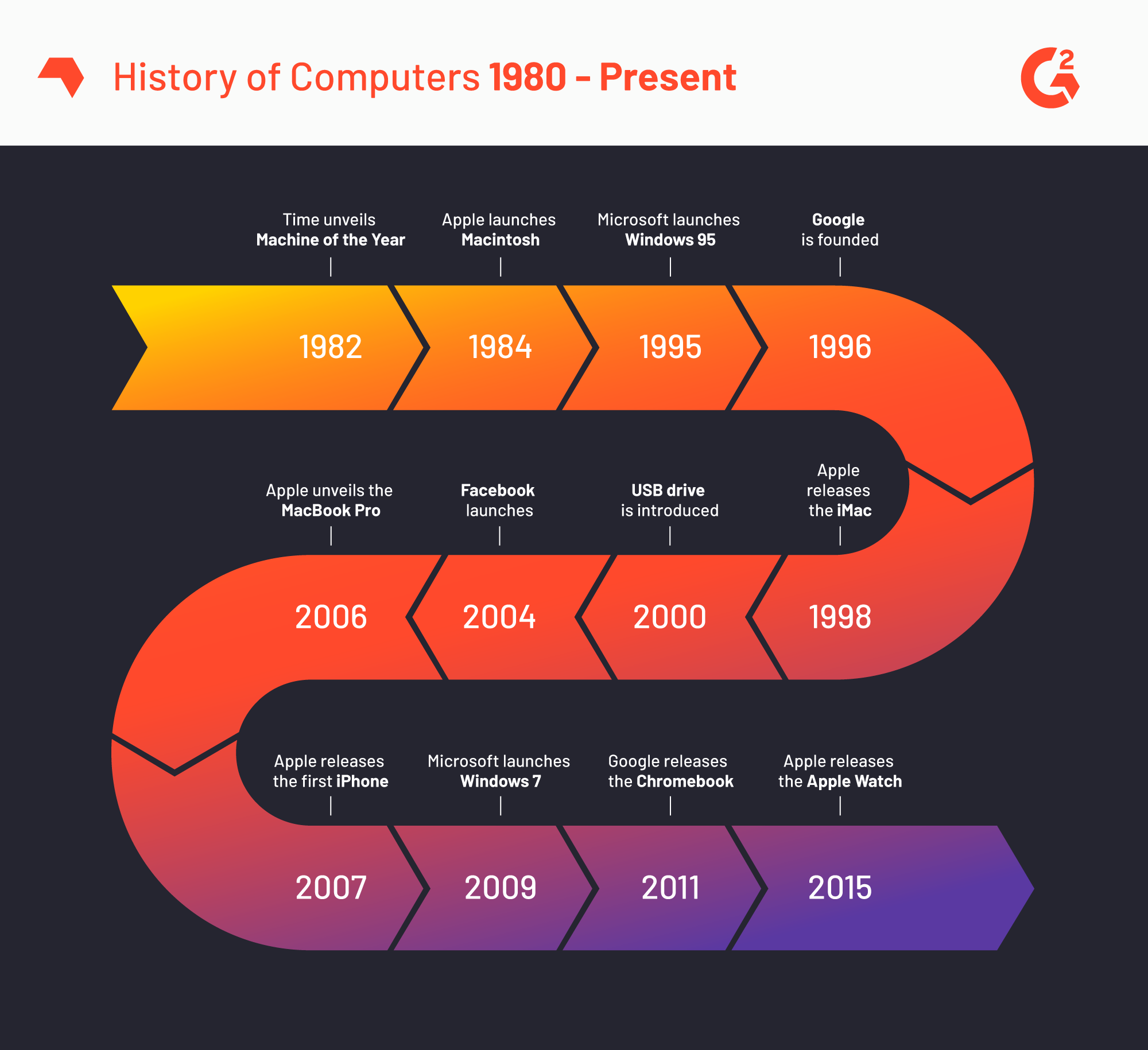

1984: The Apple Macintosh is announced to the world during a Super Bowl advertisement. The Macintosh is launched with a retail price of $2,500, according to the NMAH.

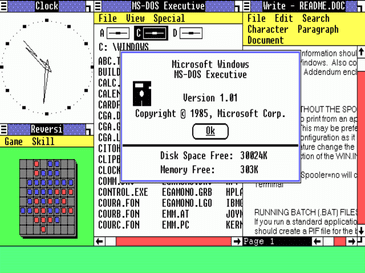

1985: As a response to the Apple Lisa's GUI, Microsoft releases Windows in November 1985, the Guardian reported. Meanwhile, Commodore announces the Amiga 1000.

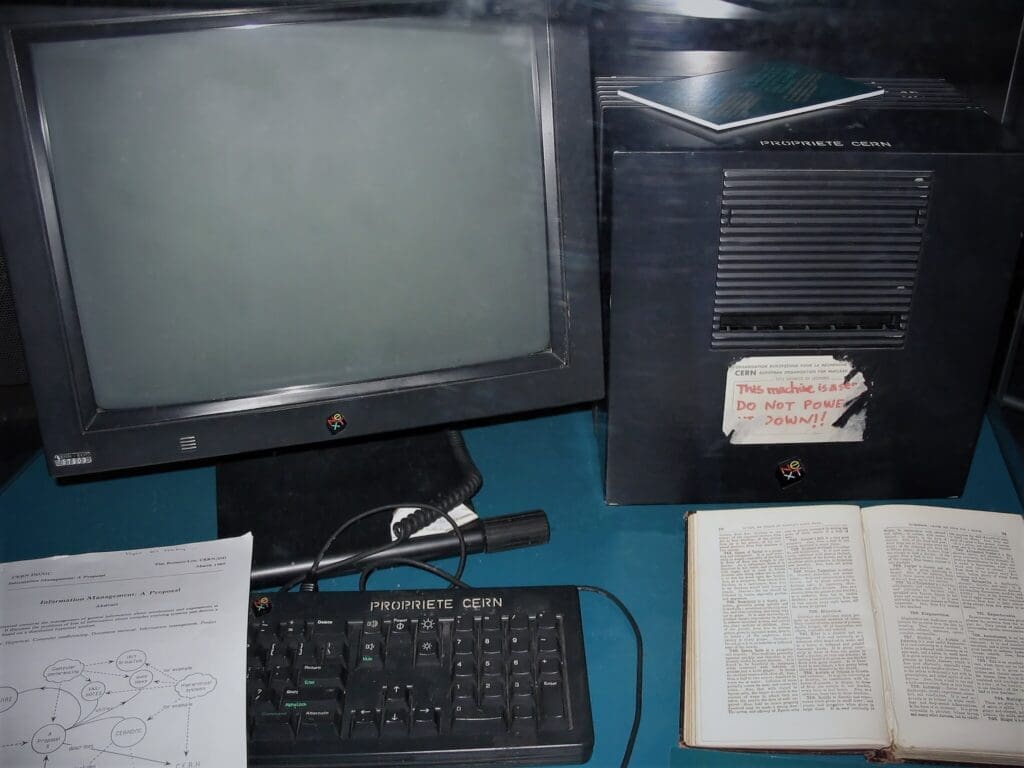

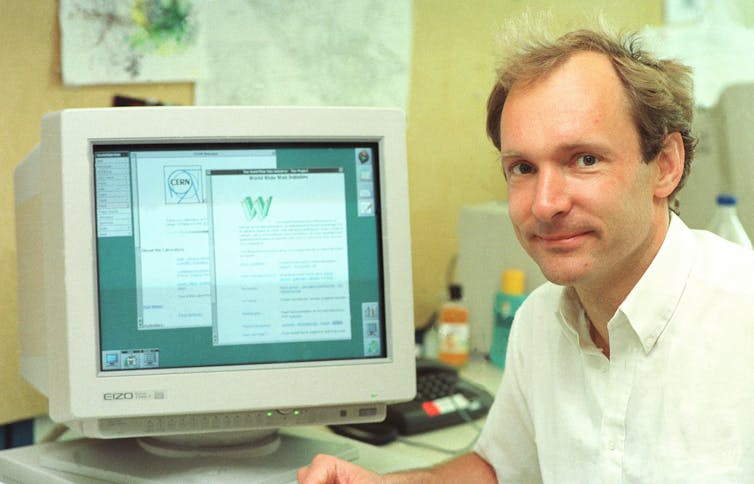

1989: Tim Berners-Lee, a British researcher at the European Organization for Nuclear Research (CERN), submits his proposal for what would become the World Wide Web. His paper details his ideas for HyperText Markup Language (HTML), the building blocks of the Web.

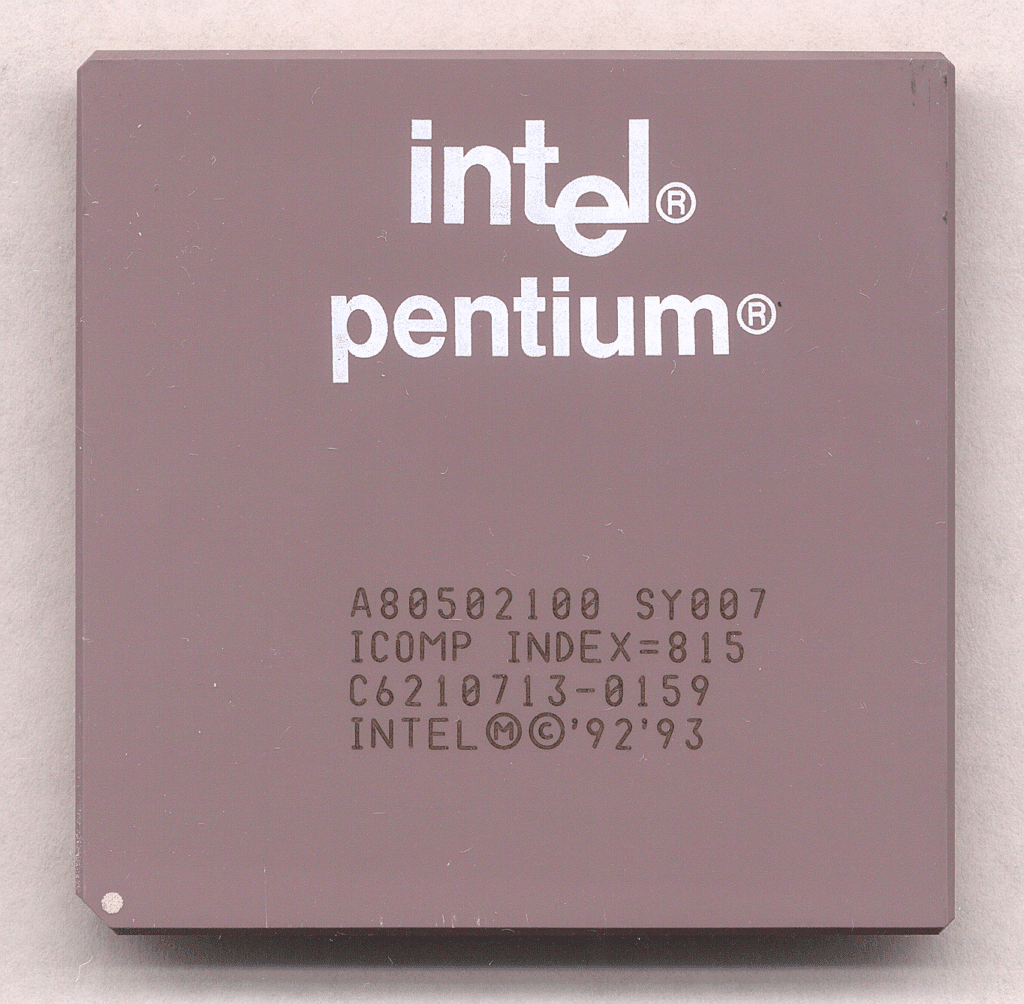

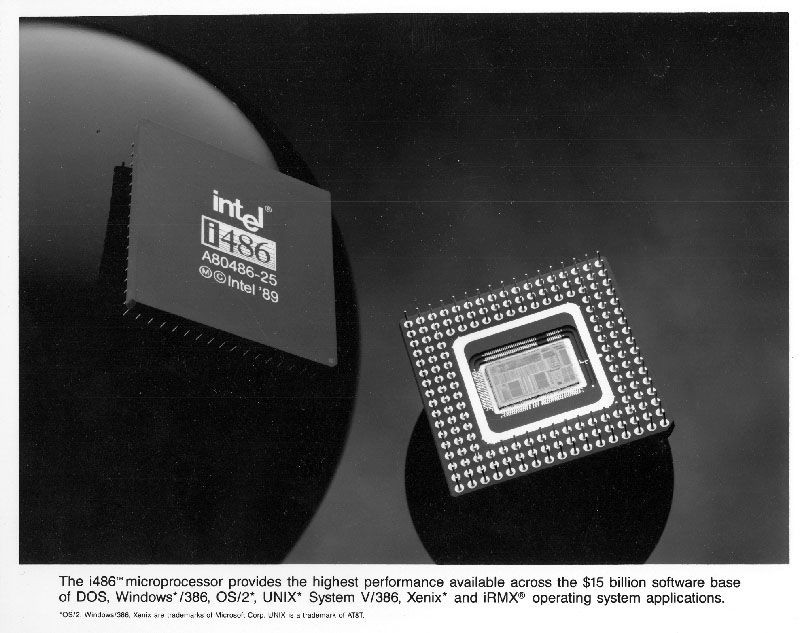

1993: The Pentium microprocessor advances the use of graphics and music on PCs.

1996: Sergey Brin and Larry Page develop the Google search engine at Stanford University.

1997: Microsoft invests $150 million in Apple, which at the time is struggling financially. This investment ends an ongoing court case in which Apple accused Microsoft of copying its operating system.

1999: Wi-Fi, the abbreviated term for "wireless fidelity," is developed, initially covering a distance of up to 300 feet (91 meters), Wired reported.

21st century

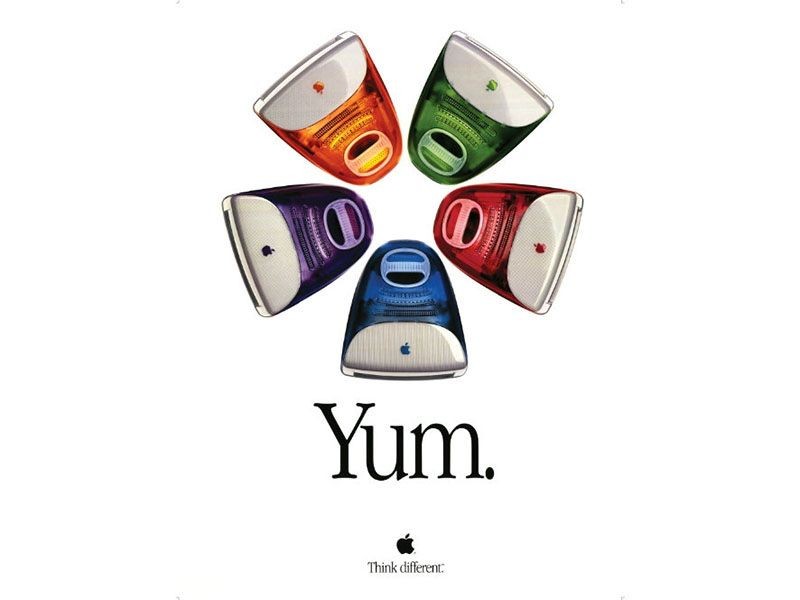

2001: Mac OS X, later renamed OS X then simply macOS, is released by Apple as the successor to its standard Mac Operating System. OS X goes through 16 different versions, each with "10" as its title, and the first nine iterations are nicknamed after big cats, with the first being codenamed "Cheetah," TechRadar reported.

2003: AMD's Athlon 64, the first 64-bit processor for personal computers, is released to customers.

2004: The Mozilla Corporation launches Mozilla Firefox 1.0. The Web browser is one of the first major challenges to Internet Explorer, owned by Microsoft. During its first five years, Firefox exceeds a billion downloads by users, according to the Web Design Museum.

2005: Google buys Android, a Linux-based mobile phone operating system.

2006: The MacBook Pro from Apple hits the shelves. The Pro is the company's first Intel-based, dual-core mobile computer.

2009: Microsoft releases Windows 7 to manufacturing on July 22, ahead of its general retail launch in October. The new operating system features the ability to pin applications to the taskbar, scatter windows away by shaking another window, easy-to-access jumplists, easier previews of tiles and more, TechRadar reported.

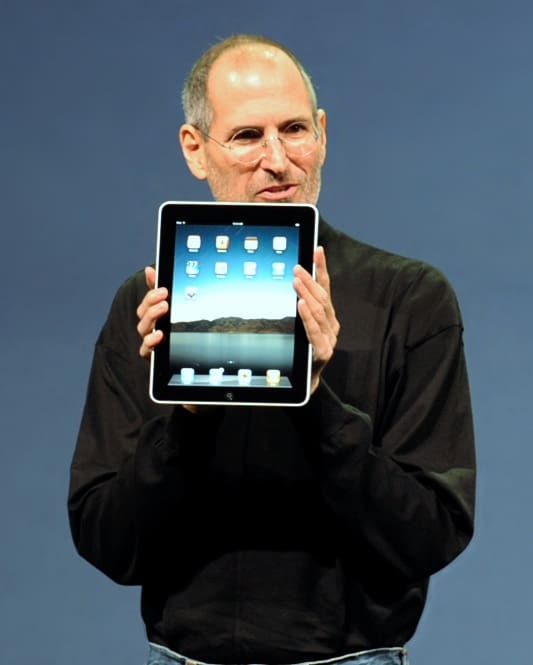

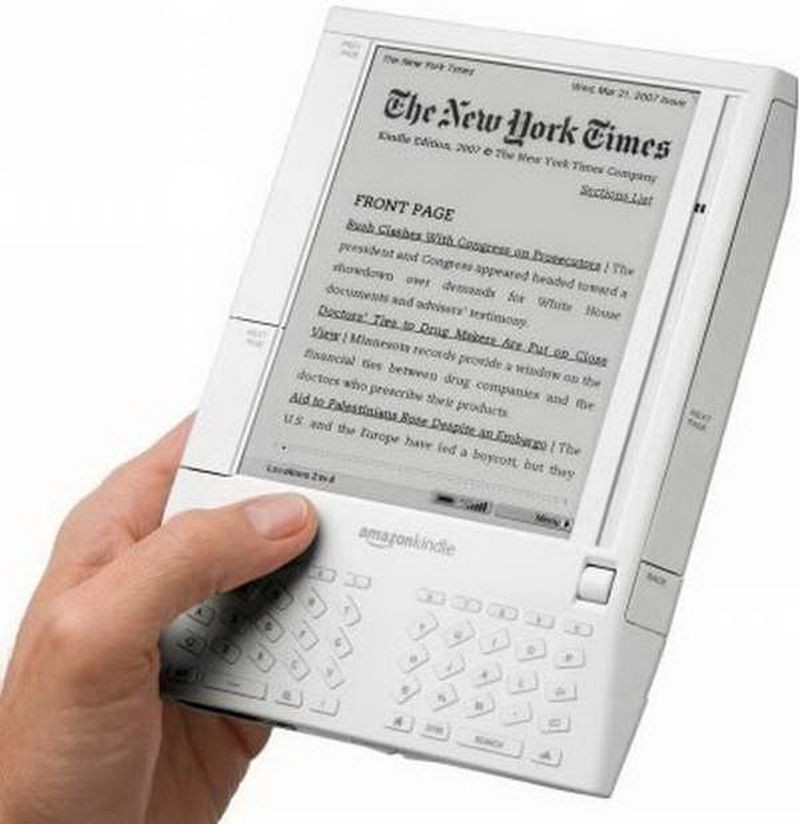

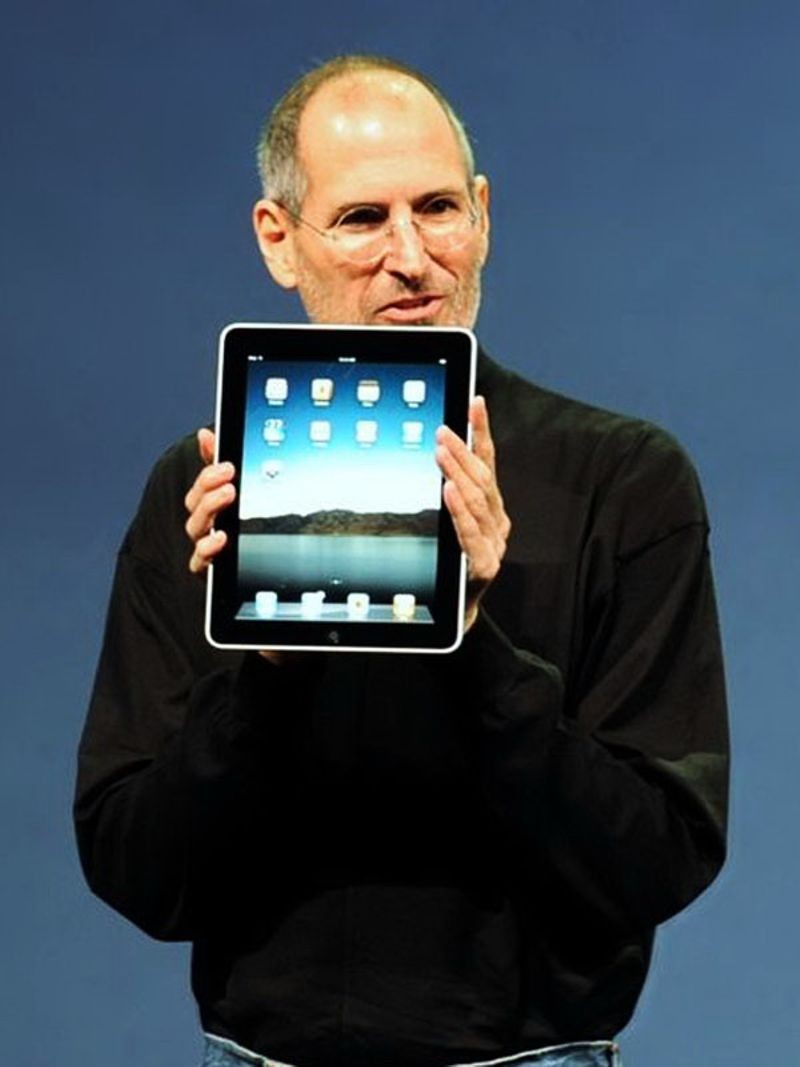

2010: The iPad, Apple's flagship handheld tablet, is unveiled.

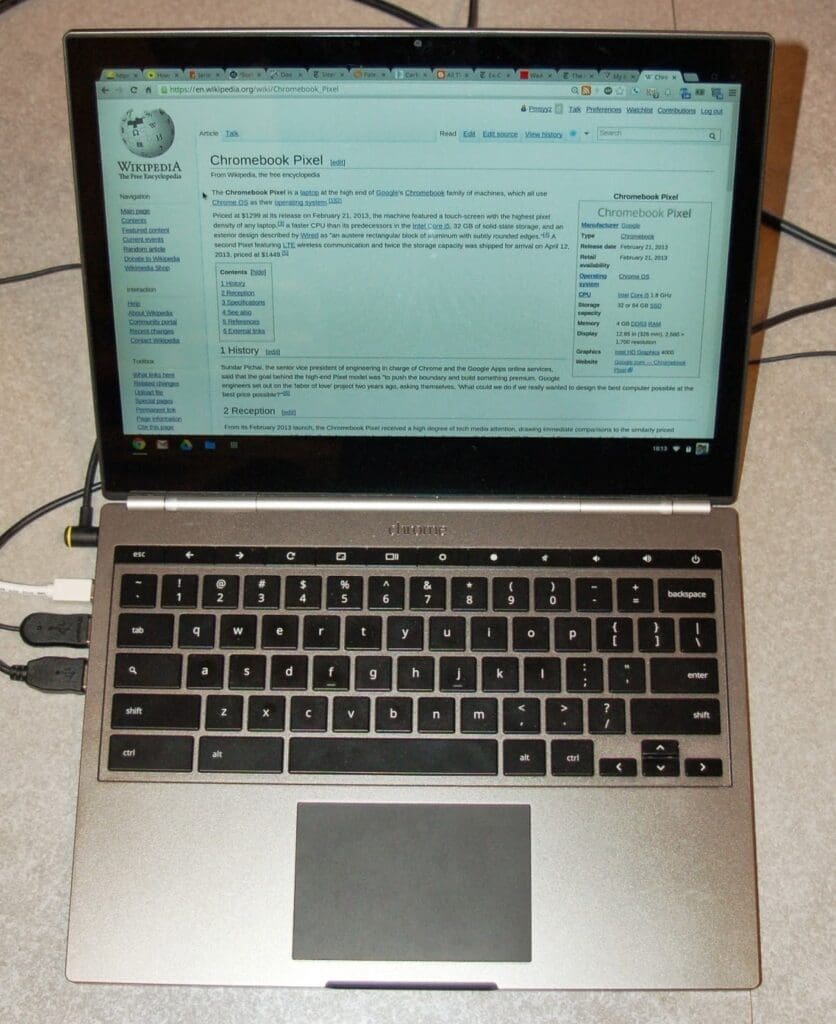

2011: Google releases the Chromebook, which runs on Google Chrome OS.

2015: Apple releases the Apple Watch. Microsoft releases Windows 10.

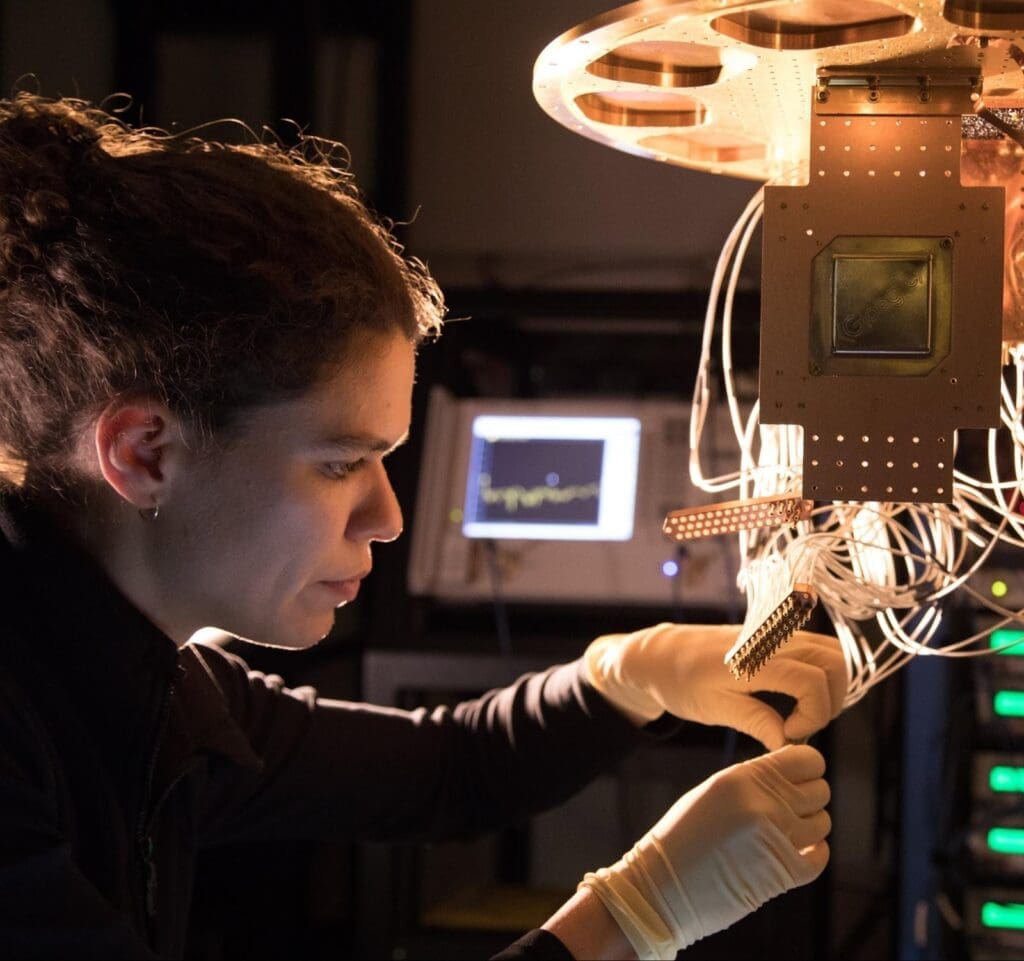

2016: The first reprogrammable quantum computer was created. "Until now, there hasn't been any quantum-computing platform that had the capability to program new algorithms into their system. They're usually each tailored to attack a particular algorithm," said study lead author Shantanu Debnath, a quantum physicist and optical engineer at the University of Maryland, College Park.

2017: The Defense Advanced Research Projects Agency (DARPA) is developing a new "Molecular Informatics" program that uses molecules as computers. "Chemistry offers a rich set of properties that we may be able to harness for rapid, scalable information storage and processing," Anne Fischer, program manager in DARPA's Defense Sciences Office, said in a statement. "Millions of molecules exist, and each molecule has a unique three-dimensional atomic structure as well as variables such as shape, size, or even color. This richness provides a vast design space for exploring novel and multi-value ways to encode and process data beyond the 0s and 1s of current logic-based, digital architectures."

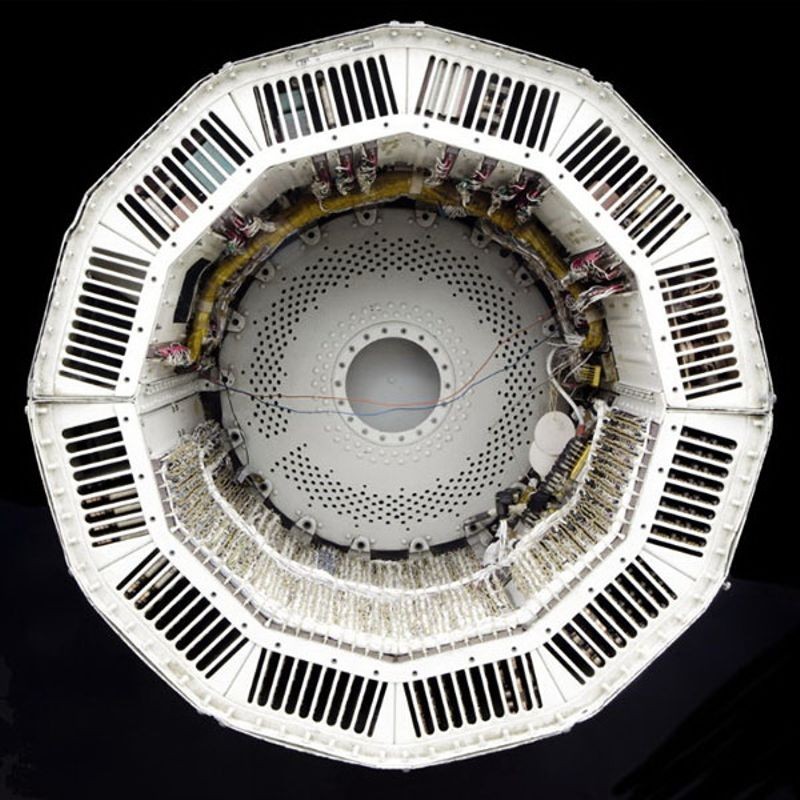

2019: A team at Google became the first to demonstrate quantum supremacy, creating a quantum computer that could feasibly outperform the most powerful classical computer, albeit for a very specific problem with no practical real-world application. They described the computer, dubbed "Sycamore," in a paper that same year in the journal Nature. Achieving quantum advantage, in which a quantum computer solves a problem with real-world applications faster than the most powerful classical computer, is still a ways off.

2022: The first exascale supercomputer, and the world's fastest, Frontier, went online at the Oak Ridge Leadership Computing Facility (OLCF) in Tennessee. Built by Hewlett Packard Enterprise (HPE) at a cost of $600 million, Frontier uses nearly 10,000 AMD EPYC 7453 64-core CPUs alongside nearly 40,000 AMD Radeon Instinct MI250X GPUs. This machine ushered in the era of exascale computing, which refers to systems that can reach more than one exaFLOPS, a measure of performance equal to a quintillion floating-point operations per second. Only one machine, Frontier, is currently capable of reaching such levels of performance. It is currently being used as a tool to aid scientific discovery.

What is the first computer in history?

Charles Babbage's Difference Engine, designed in the 1820s, is considered the first "mechanical" computer in history, according to the Science Museum in the U.K. Powered by steam with a hand crank, the machine calculated a series of values and printed the results in a table.

What are the five generations of computing?

The "five generations of computing" is a framework for assessing the entire history of computing and the key technological advancements throughout it.

The first generation, spanning the 1940s to the 1950s, covered vacuum tube-based machines. The second then progressed to incorporate transistor-based computing between the 50s and the 60s. In the 60s and 70s, the third generation gave rise to integrated circuit-based computing. We are now in between the fourth and fifth generations of computing, which are microprocessor-based and AI-based computing.

What is the most powerful computer in the world?

As of November 2023, the most powerful computer in the world is the Frontier supercomputer. The machine, which can reach a performance level of up to 1.102 exaFLOPS, ushered in the age of exascale computing in 2022 when it went online at Tennessee's Oak Ridge Leadership Computing Facility (OLCF).

There is, however, a potentially more powerful supercomputer waiting in the wings in the form of the Aurora supercomputer, which is housed at the Argonne National Laboratory (ANL) outside of Chicago. Aurora went online in November 2023. Right now, it lags far behind Frontier, with performance levels of just 585.34 petaFLOPS (roughly half the performance of Frontier), although it's still not finished. When work is completed, the supercomputer is expected to reach performance levels higher than 2 exaFLOPS.
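
The "roughly half" comparison can be checked with a little unit arithmetic. The sketch below uses only the two figures quoted above; the constant and variable names are illustrative, and it assumes the standard SI prefixes (peta = 10^15, exa = 10^18).

```python
PETA = 10**15  # SI prefix: one petaFLOPS is 10^15 operations per second
EXA = 10**18   # SI prefix: one exaFLOPS is 10^18 operations per second

frontier_flops = 1.102 * EXA    # Frontier figure quoted in the text
aurora_flops = 585.34 * PETA    # Aurora figure quoted in the text

# Aurora's performance as a fraction of Frontier's
ratio = aurora_flops / frontier_flops

if __name__ == "__main__":
    print(f"Aurora in exaFLOPS: {aurora_flops / EXA:.3f}")
    print(f"Aurora / Frontier:  {ratio:.3f}")
```

On these figures Aurora sits at a little over half of Frontier's performance, which matches the description above.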

What was the first killer app?

Killer apps are widely understood to be those so essential that they are core to the technology they run on. There have been many through the years, from Word for Windows in 1989 to iTunes in 2001 to social media apps like WhatsApp in more recent years.

Several pieces of software may stake a claim to be the first killer app, but there is a broad consensus that VisiCalc, a spreadsheet program created by VisiCorp and originally released for the Apple II in 1979, holds that title. Steve Jobs even credits this app for propelling the Apple II to become the success it was, according to co-creator Dan Bricklin.

Additional resources

- Fortune: A Look Back At 40 Years of Apple

- The New Yorker: The First Windows

- "A Brief History of Computing" by Gerard O'Regan (Springer, 2021)


Timothy is Editor in Chief of print and digital magazines All About History and History of War. He has previously worked on sister magazine All About Space, as well as photography and creative brands including Digital Photographer and 3D Artist. He has also written for How It Works magazine, several history bookazines and has a degree in English Literature from Bath Spa University.


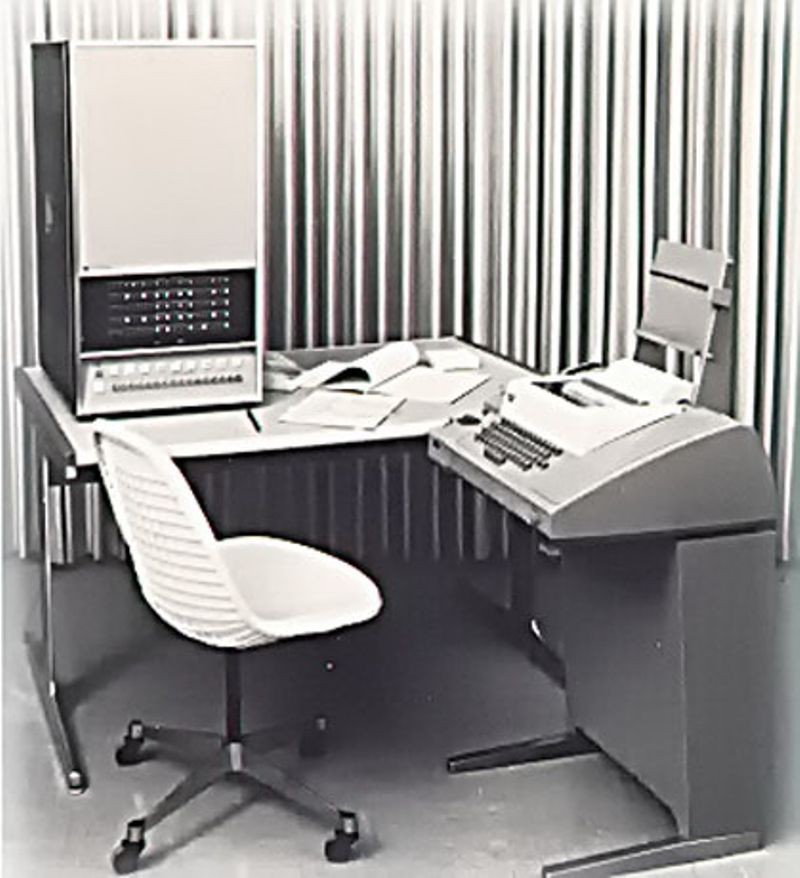


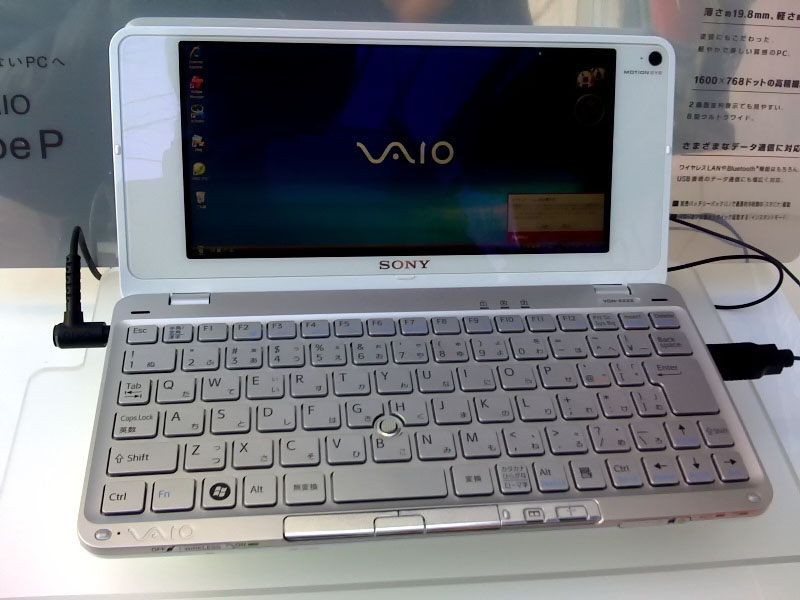


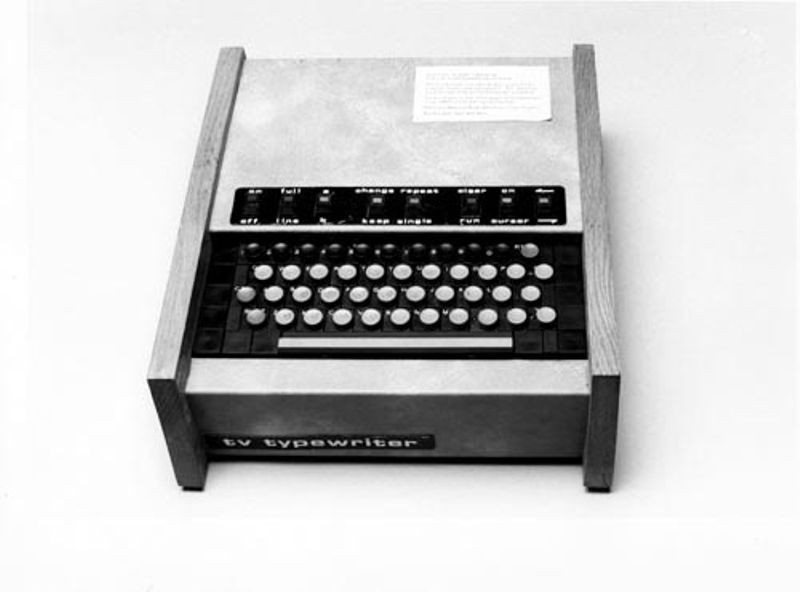


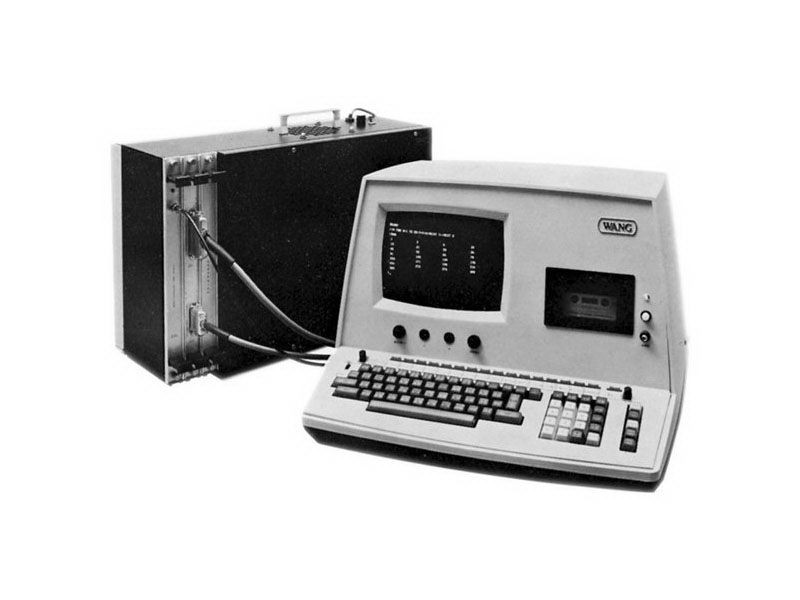


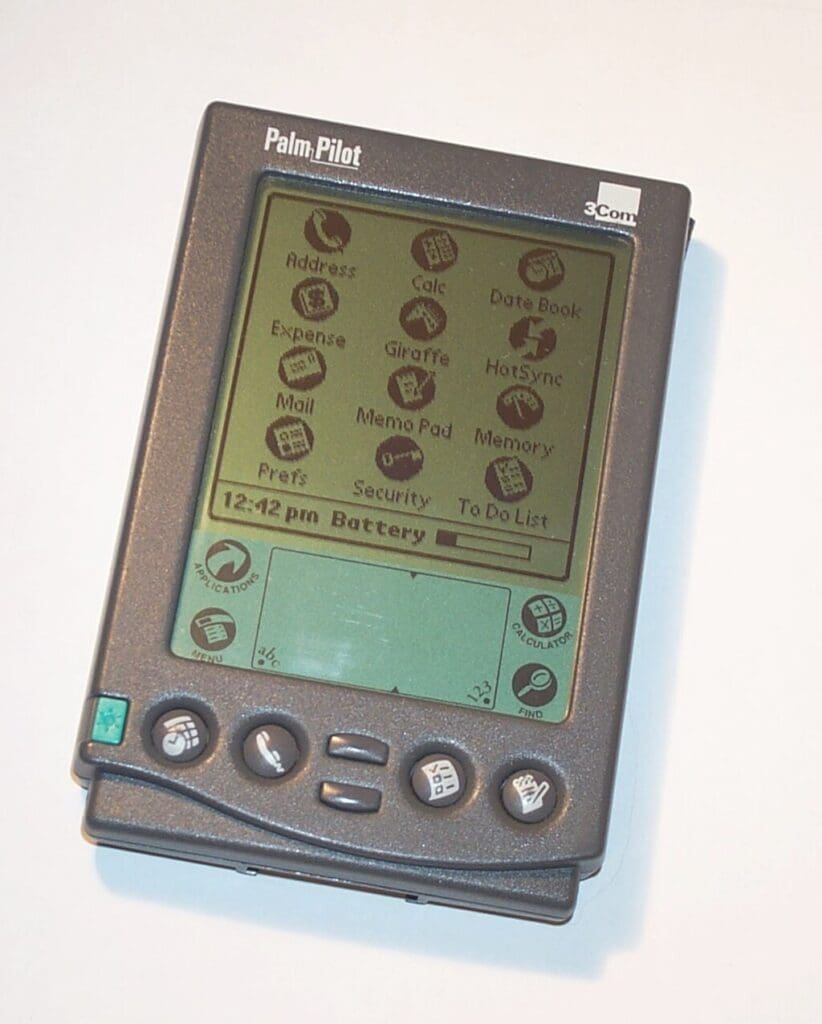

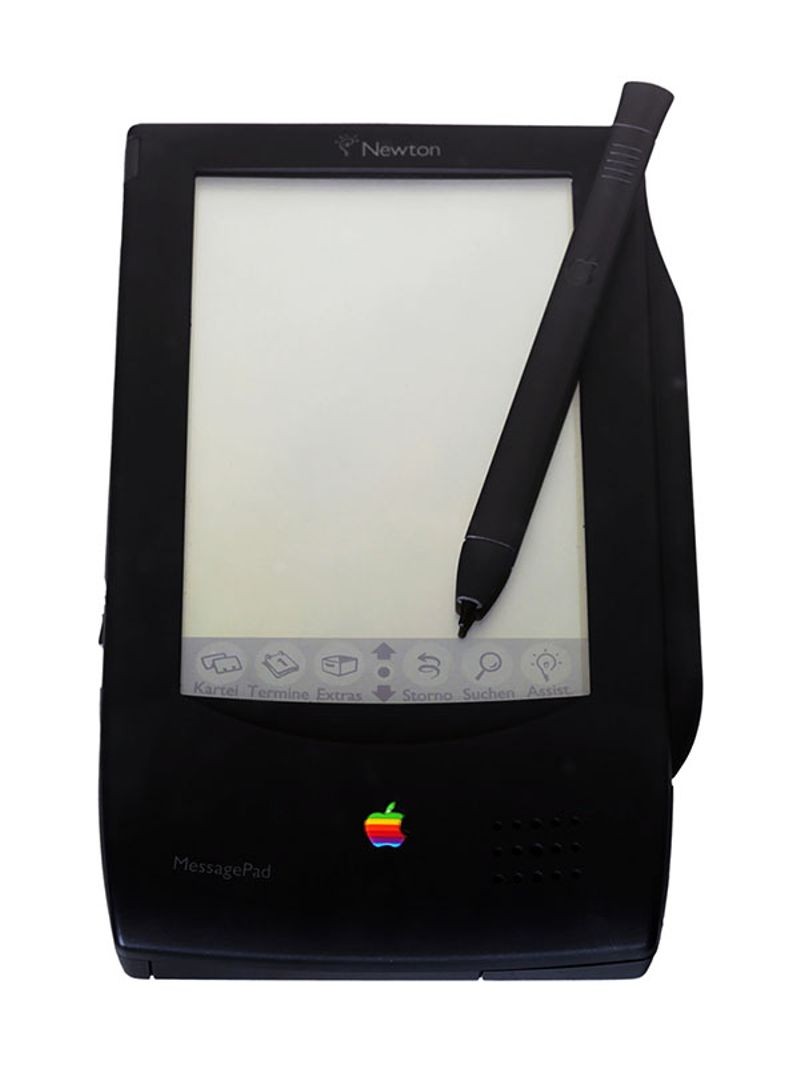


What is a computer?


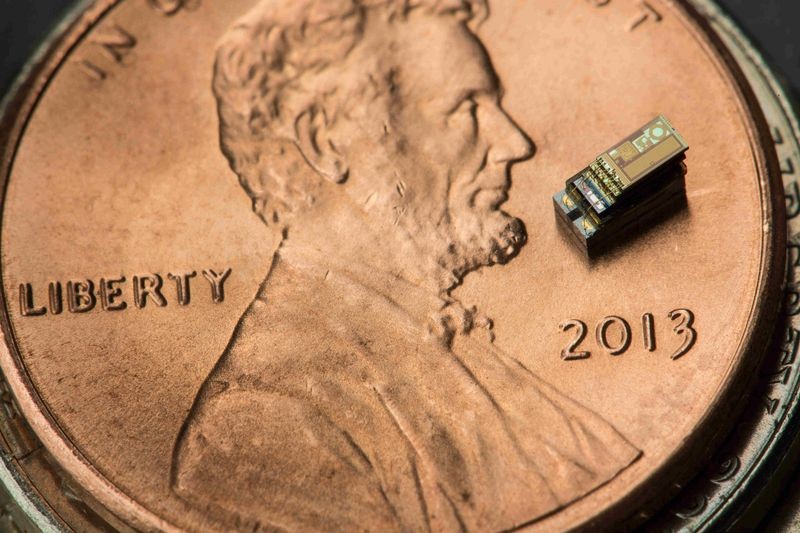

A computer is a machine that can store and process information. Most computers rely on a binary system, which uses two digits, 0 and 1, to complete tasks such as storing data, running algorithms, and displaying information. Computers come in many different shapes and sizes, from handheld smartphones to supercomputers weighing more than 300 tons.

Who invented the computer?

Many people throughout history are credited with developing early prototypes that led to the modern computer. During World War II, physicist John Mauchly, engineer J. Presper Eckert, Jr., and their colleagues at the University of Pennsylvania designed the first programmable general-purpose electronic digital computer, the Electronic Numerical Integrator and Computer (ENIAC).

What is the most powerful computer in the world?

As of November 2021, the most powerful computer in the world is the Japanese supercomputer Fugaku, developed by RIKEN and Fujitsu. It has been used to run COVID-19 simulations.

How do programming languages work?

Popular modern programming languages, such as JavaScript and Python, support multiple programming paradigms. Functional programming, which uses mathematical functions to give outputs based on data input, is one of the more common ways code is used to provide instructions for a computer.
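
As a small illustration of the functional style described above, the following Python sketch builds a result by passing data through pure functions rather than by updating variables step by step. The helper names (`square`, `is_even`, `sum_of_even_squares`) are made up for this example.

```python
from functools import reduce


def square(x):
    """Pure function: the output depends only on the input."""
    return x * x


def is_even(x):
    return x % 2 == 0


def sum_of_even_squares(numbers):
    """Compose filter, map and reduce instead of mutating a running total."""
    evens = filter(is_even, numbers)
    squares = map(square, evens)
    return reduce(lambda a, b: a + b, squares, 0)


if __name__ == "__main__":
    print(sum_of_even_squares([1, 2, 3, 4, 5, 6]))
```

The same calculation could be written with a loop and a counter; the functional version simply makes each transformation a separate, reusable function.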

What can computers do?

The most powerful computers can perform extremely complex tasks, such as simulating nuclear weapon experiments and predicting the development of climate change. The development of quantum computers, machines that can handle a large number of calculations through quantum parallelism (derived from superposition), would make it possible to do even more complex tasks.

Are computers conscious?

A computer’s ability to gain consciousness is a widely debated topic. Some argue that consciousness depends on self-awareness and the ability to think, which means that computers are conscious because they recognize their environment and can process data. Others believe that human consciousness can never be replicated by physical processes.

What is the impact of computer artificial intelligence (AI) on society?

Computer artificial intelligence's impact on society is widely debated. Many argue that AI improves the quality of everyday life by doing routine and even complicated tasks better than humans can, making life simpler, safer, and more efficient. Others argue AI poses dangerous privacy risks, exacerbates racism by standardizing people, and costs workers their jobs, leading to greater unemployment. For more on the debate over artificial intelligence, visit ProCon.org.

computer, device for processing, storing, and displaying information.

Computer once meant a person who did computations, but now the term almost universally refers to automated electronic machinery. The first section of this article focuses on modern digital electronic computers and their design, constituent parts, and applications. The second section covers the history of computing. For details on computer architecture, software, and theory, see computer science.

Computing basics

The first computers were used primarily for numerical calculations. However, as any information can be numerically encoded, people soon realized that computers are capable of general-purpose information processing. Their capacity to handle large amounts of data has extended the range and accuracy of weather forecasting. Their speed has allowed them to make decisions about routing telephone connections through a network and to control mechanical systems such as automobiles, nuclear reactors, and robotic surgical tools. They are also cheap enough to be embedded in everyday appliances and to make clothes dryers and rice cookers “smart.” Computers have allowed us to pose and answer questions that were difficult to pursue in the past. These questions might be about DNA sequences in genes, patterns of activity in a consumer market, or all the uses of a word in texts that have been stored in a database. Increasingly, computers can also learn and adapt as they operate by using processes such as machine learning.
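
The claim that any information can be numerically encoded is easy to see with text. The short Python sketch below turns a string into a list of numbers and back again; it assumes the UTF-8 encoding, which is one common choice among several, and the function names are illustrative.

```python
def text_to_numbers(text):
    """Encode text as a list of byte values (0-255), assuming UTF-8."""
    return list(text.encode("utf-8"))


def numbers_to_text(numbers):
    """Decode a list of UTF-8 byte values back into text."""
    return bytes(numbers).decode("utf-8")


if __name__ == "__main__":
    codes = text_to_numbers("Hi")
    print(codes)
    print(numbers_to_text(codes))
```

Once text is a sequence of numbers, the same arithmetic and storage machinery built for calculation can search, sort, and transmit it.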

Computers also have limitations, some of which are theoretical. For example, there are undecidable propositions whose truth cannot be determined within a given set of rules, such as the logical structure of a computer. Because no universal algorithmic method can exist to identify such propositions, a computer asked to obtain the truth of such a proposition will (unless forcibly interrupted) continue indefinitely, a condition known as the “halting problem.” (See Turing machine.) Other limitations reflect current technology. For example, although computers have progressed greatly in terms of processing data and using artificial intelligence algorithms, they are limited by their incapacity to think in a more holistic fashion. Computers may imitate humans, quite effectively even, but imitation may not replace the human element in social interaction. Ethical concerns also limit computers, because computers rely on data, rather than a moral compass or human conscience, to make decisions.
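
A concrete way to get a feel for this limitation is a loop whose termination nobody has proved in general. The sketch below uses the Collatz iteration (an unproven conjecture says it always reaches 1 for positive integers) purely as an illustration; it is not a proof of undecidability, and the function name and step budget are assumptions of the example. All a program can do is run the loop with a budget and report either "it halted" or "unknown so far."

```python
def collatz_halts_within(n, max_steps):
    """Return the number of steps for n (a positive integer) to reach 1,
    or None if it has not reached 1 within max_steps."""
    steps = 0
    while n != 1:
        if steps >= max_steps:
            return None  # budget exhausted: we cannot tell whether it would halt
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


if __name__ == "__main__":
    print(collatz_halts_within(6, 100))
    print(collatz_halts_within(27, 50))
```

A `None` result says nothing about whether the loop would eventually stop; raising the budget may or may not settle the question, which is the practical face of the theoretical limit described above.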

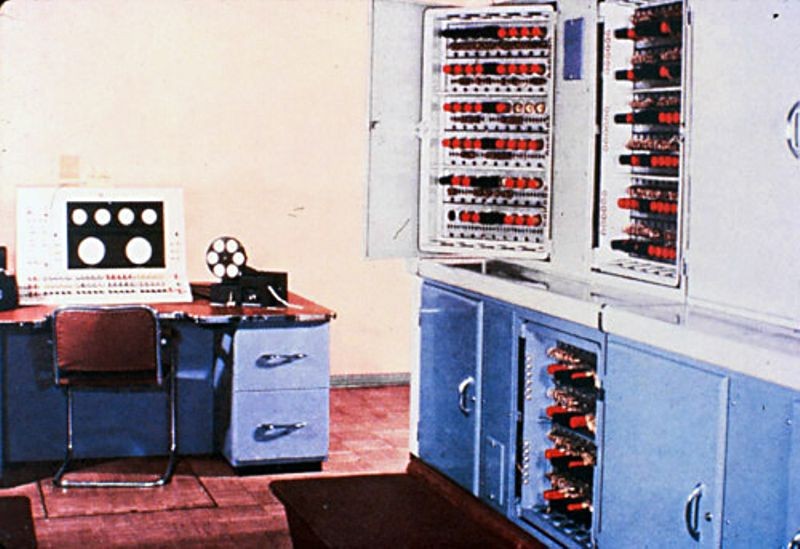

Analog computers

Analog computers use continuous physical magnitudes to represent quantitative information. At first they represented quantities with mechanical components (see differential analyzer and integrator), but after World War II voltages were used; by the 1960s digital computers had largely replaced them. Nonetheless, analog computers, and some hybrid digital-analog systems, continued in use through the 1960s in tasks such as aircraft and spaceflight simulation.

One advantage of analog computation is that it may be relatively simple to design and build an analog computer to solve a single problem. Another advantage is that analog computers can frequently represent and solve a problem in “real time”; that is, the computation proceeds at the same rate as the system being modeled by it. Their main disadvantages are that analog representations are limited in precision (typically a few decimal places but fewer in complex mechanisms) and general-purpose devices are expensive and not easily programmed.

Digital computers

In contrast to analog computers, digital computers represent information in discrete form, generally as sequences of 0s and 1s (binary digits, or bits). The modern era of digital computers began in the late 1930s and early 1940s in the United States, Britain, and Germany. The first devices used switches operated by electromagnets (relays). Their programs were stored on punched paper tape or cards, and they had limited internal data storage. For historical developments, see the section Invention of the modern computer.
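
To show what "sequences of 0s and 1s" means in practice, here is a minimal Python sketch that converts a non-negative integer to a fixed-width list of bits and back. The function names and the default width of 8 bits are choices made for the example, not anything prescribed by the text.

```python
def to_bits(value, width=8):
    """Return value as a list of bits, most significant bit first."""
    if not 0 <= value < 2**width:
        raise ValueError(f"{value} does not fit in {width} bits")
    return [(value >> i) & 1 for i in reversed(range(width))]


def from_bits(bits):
    """Rebuild the integer from a list of bits, most significant bit first."""
    result = 0
    for bit in bits:
        result = result * 2 + bit
    return result


if __name__ == "__main__":
    bits = to_bits(200)
    print(bits)
    print(from_bits(bits))
```

Unlike an analog quantity, each position here can only be 0 or 1, so the representation is exact and can be copied without loss.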

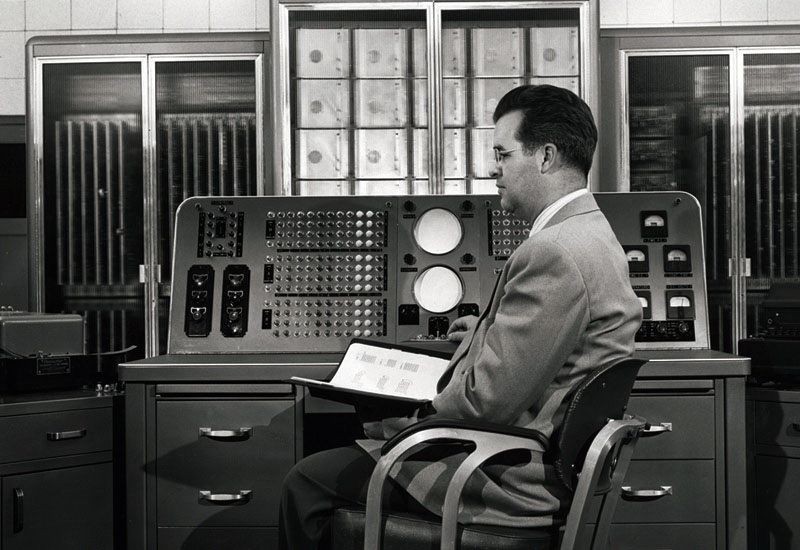

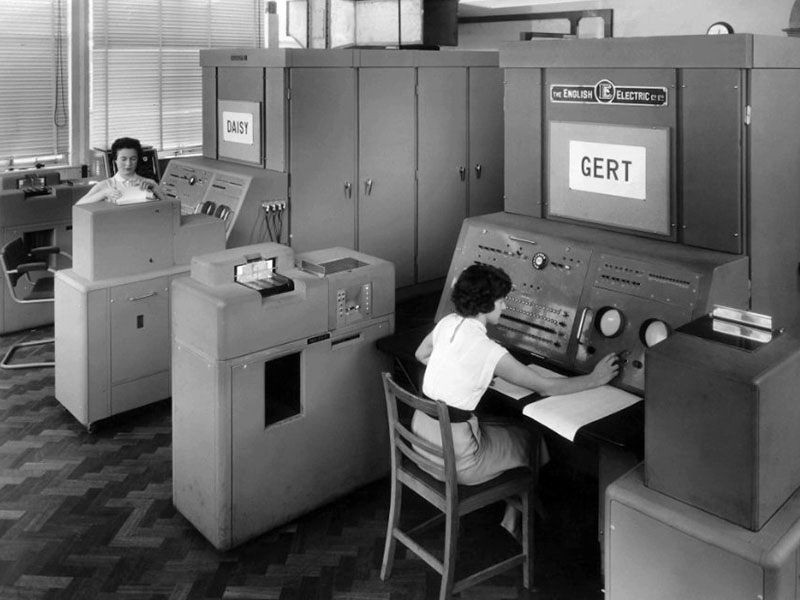

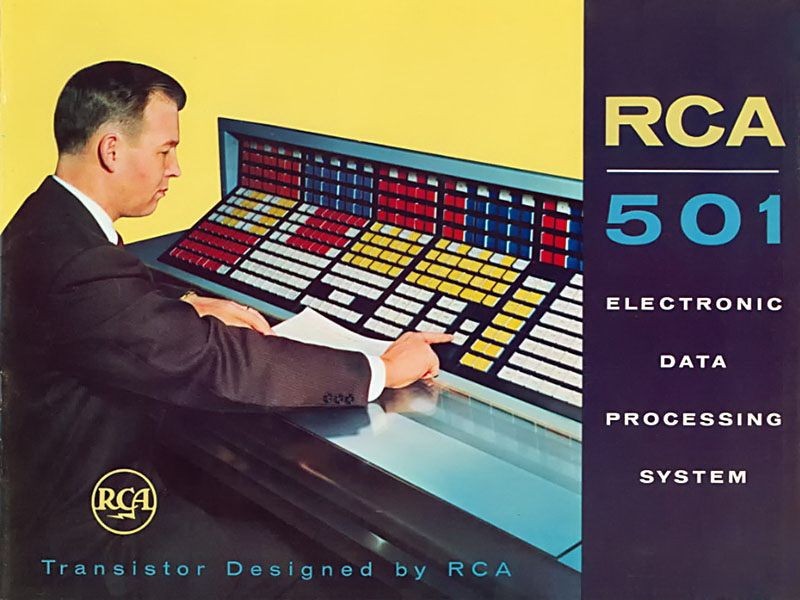

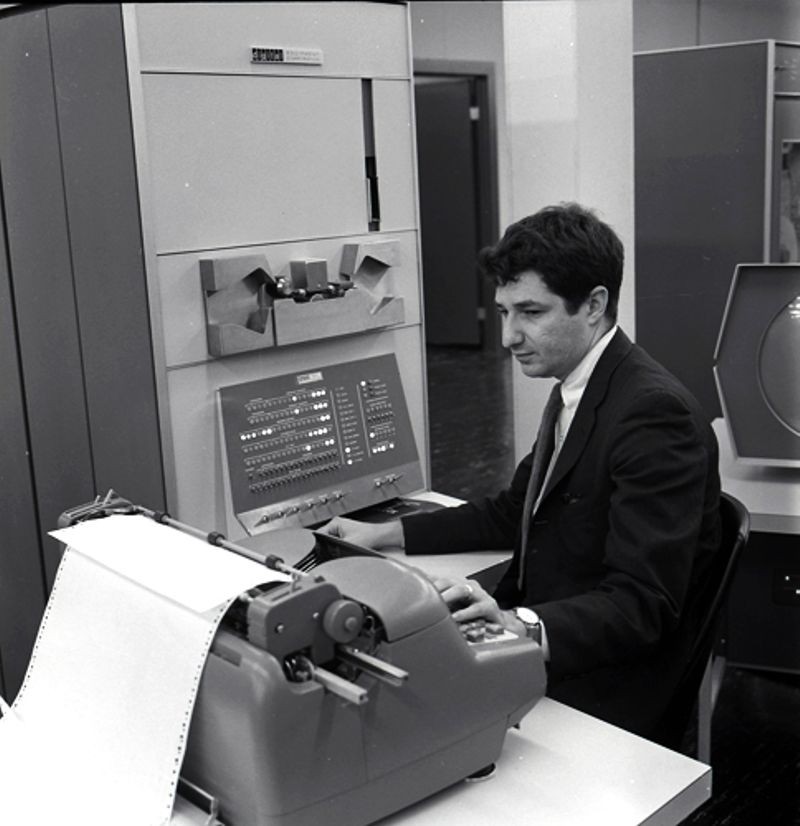

During the 1950s and ’60s, Unisys (maker of the UNIVAC computer), International Business Machines Corporation (IBM), and other companies made large, expensive computers of increasing power. They were used by major corporations and government research laboratories, typically as the sole computer in the organization. In 1959 the IBM 1401 computer rented for $8,000 per month (early IBM machines were almost always leased rather than sold), and in 1964 the largest IBM S/360 computer cost several million dollars.

These computers came to be called mainframes, though the term did not become common until smaller computers were built. Mainframe computers were characterized by having (for their time) large storage capabilities, fast components, and powerful computational abilities. They were highly reliable, and, because they frequently served vital needs in an organization, they were sometimes designed with redundant components that let them survive partial failures. Because they were complex systems, they were operated by a staff of systems programmers, who alone had access to the computer. Other users submitted “batch jobs” to be run one at a time on the mainframe.

Such systems remain important today, though they are no longer the sole, or even primary, central computing resource of an organization, which will typically have hundreds or thousands of personal computers (PCs). Mainframes now provide high-capacity data storage for Internet servers, or, through time-sharing techniques, they allow hundreds or thousands of users to run programs simultaneously. Because of their current roles, these computers are now called servers rather than mainframes.


A brief history of computers

by Chris Woodford . Last updated: January 19, 2023.

Computers truly came into their own as great inventions in the last two decades of the 20th century. But their history stretches back more than 2500 years to the abacus: a simple calculator made from beads and wires, which is still used in some parts of the world today. The difference between an ancient abacus and a modern computer seems vast, but the principle—making repeated calculations more quickly than the human brain—is exactly the same.

Read on to learn more about the history of computers—or take a look at our article on how computers work.


Photo: A model of one of the world's first computers (the Difference Engine invented by Charles Babbage) at the Computer History Museum in Mountain View, California, USA. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Cogs and Calculators

It is a measure of the brilliance of the abacus, invented in the Middle East circa 500 BC, that it remained the fastest form of calculator until the middle of the 17th century. Then, in 1642, aged only 18, French scientist and philosopher Blaise Pascal (1623–1662) invented the first practical mechanical calculator, the Pascaline, to help his tax-collector father do his sums. The machine had a series of interlocking cogs (gear wheels with teeth around their outer edges) that could add and subtract decimal numbers. Several decades later, in 1671, German mathematician and philosopher Gottfried Wilhelm Leibniz (1646–1716) came up with a similar but more advanced machine. Instead of using cogs, it had a "stepped drum" (a cylinder with teeth of increasing length around its edge), an innovation that survived in mechanical calculators for 300 years. The Leibniz machine could do much more than Pascal's: as well as adding and subtracting, it could multiply, divide, and work out square roots. Another pioneering feature was the first memory store or "register."

Apart from developing one of the world's earliest mechanical calculators, Leibniz is remembered for another important contribution to computing: he was the man who invented binary code, a way of representing any decimal number using only the two digits zero and one. Although Leibniz made no use of binary in his own calculator, it set others thinking. In 1854, a little over a century after Leibniz had died, Englishman George Boole (1815–1864) used the idea to invent a new branch of mathematics called Boolean algebra. [1] In modern computers, binary code and Boolean algebra allow computers to make simple decisions by comparing long strings of zeros and ones. But, in the 19th century, these ideas were still far ahead of their time. It would take another 50–100 years for mathematicians and computer scientists to figure out how to use them (find out more in our articles about calculators and logic gates).
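To make the idea of binary code and Boolean comparison a little more concrete, here is a minimal Python sketch. It is only an illustration: the function names `to_binary` and `bits_equal` are invented for this example and do not come from any historical machine or library.

```python
def to_binary(n: int) -> str:
    """Write a non-negative decimal integer using only the digits 0 and 1."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next digit, right to left
        n //= 2                    # move on to the next power of two
    return "".join(reversed(digits))


def bits_equal(a: str, b: str) -> bool:
    """Decide whether two equal-length strings of 0s and 1s match."""
    if len(a) != len(b):
        raise ValueError("bit strings must have the same length")
    # XOR of two bits is true only when they differ,
    # so the strings match when no position produces a true XOR.
    return not any((x == "1") != (y == "1") for x, y in zip(a, b))


print(to_binary(19))               # 10011
print(bits_equal("1010", "1010"))  # True
print(bits_equal("1010", "1000"))  # False
```

The comparison uses nothing more than a bit-by-bit logical test, which is the kind of "simple decision" that Boolean algebra makes possible.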

Artwork: Pascaline: Two details of Blaise Pascal's 17th-century calculator. Left: The "user interface": the part where you dial in numbers you want to calculate. Right: The internal gear mechanism. Picture courtesy of US Library of Congress.

Engines of Calculation

Neither the abacus, nor the mechanical calculators constructed by Pascal and Leibniz really qualified as computers. A calculator is a device that makes it quicker and easier for people to do sums—but it needs a human operator. A computer, on the other hand, is a machine that can operate automatically, without any human help, by following a series of stored instructions called a program (a kind of mathematical recipe). Calculators evolved into computers when people devised ways of making entirely automatic, programmable calculators.

Photo: Punched cards: Herman Hollerith perfected the way of using punched cards and paper tape to store information and feed it into a machine. Here's a drawing from his 1889 patent Art of Compiling Statistics (US Patent#395,782), showing how a strip of paper (yellow) is punched with different patterns of holes (orange) that correspond to statistics gathered about people in the US census. Picture courtesy of US Patent and Trademark Office.

The first person to attempt this was a rather obsessive, notoriously grumpy English mathematician named Charles Babbage (1791–1871). Many regard Babbage as the "father of the computer" because his machines had an input (a way of feeding in numbers), a memory (something to store these numbers while complex calculations were taking place), a processor (the number-cruncher that carried out the calculations), and an output (a printing mechanism)—the same basic components shared by all modern computers. During his lifetime, Babbage never completed a single one of the hugely ambitious machines that he tried to build. That was no surprise. Each of his programmable "engines" was designed to use tens of thousands of precision-made gears. It was like a pocket watch scaled up to the size of a steam engine , a Pascal or Leibniz machine magnified a thousand-fold in dimensions, ambition, and complexity. For a time, the British government financed Babbage—to the tune of £17,000, then an enormous sum. But when Babbage pressed the government for more money to build an even more advanced machine, they lost patience and pulled out. Babbage was more fortunate in receiving help from Augusta Ada Byron (1815–1852), Countess of Lovelace, daughter of the poet Lord Byron. An enthusiastic mathematician, she helped to refine Babbage's ideas for making his machine programmable—and this is why she is still, sometimes, referred to as the world's first computer programmer. [2] Little of Babbage's work survived after his death. But when, by chance, his notebooks were rediscovered in the 1930s, computer scientists finally appreciated the brilliance of his ideas. Unfortunately, by then, most of these ideas had already been reinvented by others.

Artwork: Charles Babbage (1791–1871). Picture from The Illustrated London News, 1871, courtesy of US Library of Congress.

Babbage had intended that his machine would take the drudgery out of repetitive calculations. Originally, he imagined it would be used by the army to compile the tables that helped their gunners to fire cannons more accurately. Toward the end of the 19th century, other inventors were more successful in their effort to construct "engines" of calculation. American statistician Herman Hollerith (1860–1929) built one of the world's first practical calculating machines, which he called a tabulator, to help compile census data. Then, as now, a census was taken each decade but, by the 1880s, the population of the United States had grown so much through immigration that a full-scale analysis of the data by hand was taking seven and a half years. The statisticians soon figured out that, if trends continued, they would run out of time to compile one census before the next one fell due. Fortunately, Hollerith's tabulator was an amazing success: it tallied the entire census in only six weeks and completed the full analysis in just two and a half years. Soon afterward, Hollerith realized his machine had other applications, so he set up the Tabulating Machine Company in 1896 to manufacture it commercially. A few years later, it changed its name to the Computing-Tabulating-Recording (C-T-R) company and then, in 1924, acquired its present name: International Business Machines (IBM).

Photo: Keeping count: Herman Hollerith's late-19th-century census machine (blue, left) could process 12 separate bits of statistical data each minute. Its compact 1940 replacement (red, right), invented by Eugene M. La Boiteaux of the Census Bureau, could work almost five times faster. Photo by Harris & Ewing courtesy of US Library of Congress.

Bush and the bomb

Photo: Dr Vannevar Bush (1890–1974). Picture by Harris & Ewing, courtesy of US Library of Congress.

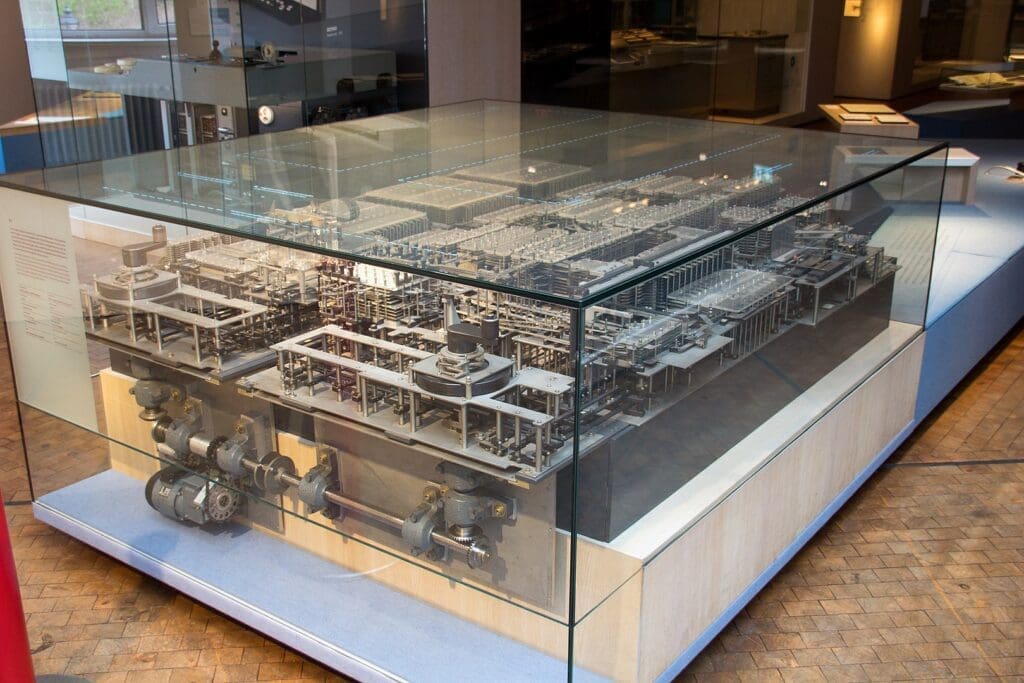

The history of computing remembers colorful characters like Babbage, but others who played important—if supporting—roles are less well known. At the time when C-T-R was becoming IBM, the world's most powerful calculators were being developed by US government scientist Vannevar Bush (1890–1974). In 1925, Bush made the first of a series of unwieldy contraptions with equally cumbersome names: the New Recording Product Integraph Multiplier. Later, he built a machine called the Differential Analyzer, which used gears, belts, levers, and shafts to represent numbers and carry out calculations in a very physical way, like a gigantic mechanical slide rule. Bush's ultimate calculator was an improved machine named the Rockefeller Differential Analyzer, assembled in 1935 from 320 km (200 miles) of wire and 150 electric motors. Machines like these were known as analog calculators—analog because they stored numbers in a physical form (as so many turns on a wheel or twists of a belt) rather than as digits. Although they could carry out incredibly complex calculations, it took several days of wheel cranking and belt turning before the results finally emerged.

Impressive machines like the Differential Analyzer were only one of several outstanding contributions Bush made to 20th-century technology. Another came as the teacher of Claude Shannon (1916–2001), a brilliant mathematician who figured out how electrical circuits could be linked together to process binary code with Boolean algebra (a way of comparing binary numbers using logic) and thus make simple decisions. During World War II, President Franklin D. Roosevelt appointed Bush chairman first of the US National Defense Research Committee and then director of the Office of Scientific Research and Development (OSRD). In this capacity, he was in charge of the Manhattan Project, the secret $2-billion initiative that led to the creation of the atomic bomb. One of Bush's final wartime contributions was to sketch out, in 1945, an idea for a memory-storing and sharing device called Memex that would later inspire Tim Berners-Lee to invent the World Wide Web. [3] Few outside the world of computing remember Vannevar Bush today—but what a legacy! As a father of the digital computer, an overseer of the atom bomb, and an inspiration for the Web, Bush played a pivotal role in three of the 20th century's most far-reaching technologies.

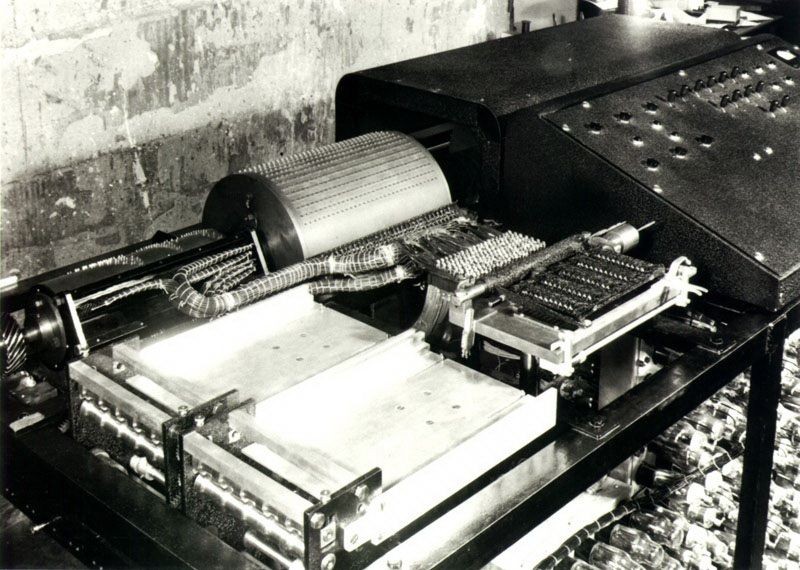

Photo: "A gigantic mechanical slide rule": A differential analyzer pictured in 1938. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

Turing—tested

The first modern computers.

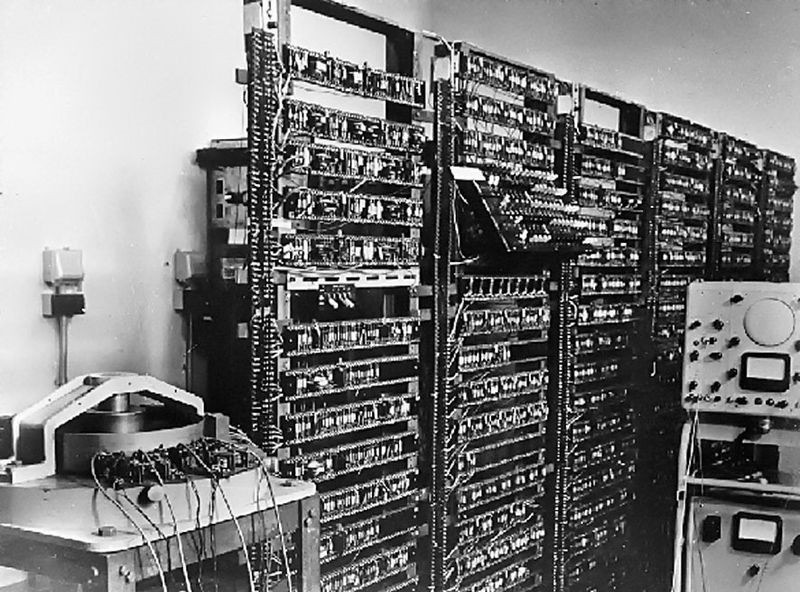

The World War II years were a crucial period in the history of computing, when powerful gargantuan computers began to appear. Just before the outbreak of the war, in 1938, German engineer Konrad Zuse (1910–1995) constructed his Z1, the world's first programmable binary computer, in his parents' living room. [4] The following year, American physicist John Atanasoff (1903–1995) and his assistant, electrical engineer Clifford Berry (1918–1963), built a more elaborate binary machine that they named the Atanasoff Berry Computer (ABC). It was a great advance—1000 times more accurate than Bush's Differential Analyzer. These were the first machines that used electrical switches to store numbers: when a switch was "off", it stored the number zero; flipped over to its other, "on", position, it stored the number one. Hundreds or thousands of switches could thus store a great many binary digits (although binary is much less efficient in this respect than decimal, since it takes up to ten binary digits to store a three-digit decimal number). These machines were digital computers: unlike analog machines, which stored numbers using the positions of wheels and rods, they stored numbers as digits.
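The switch-based storage described above can be sketched in a few lines of Python. This is an illustrative model only, with invented function names (`bits_needed`, `to_switches`, `from_switches`); it treats each switch as a Boolean that is either on (one) or off (zero) and counts how many switches a given number needs.

```python
def bits_needed(n: int) -> int:
    """Number of on/off switches required to hold the non-negative integer n."""
    return max(1, n.bit_length())


def to_switches(n: int, width: int) -> list[bool]:
    """Set a row of `width` switches (most significant first) to represent n."""
    if n < 0 or n >= 2 ** width:
        raise ValueError("n does not fit in the given number of switches")
    return [bool((n >> i) & 1) for i in reversed(range(width))]


def from_switches(switches: list[bool]) -> int:
    """Read a row of switches back as a decimal number."""
    value = 0
    for on in switches:
        value = value * 2 + (1 if on else 0)  # shift left, then add this bit
    return value


print(bits_needed(255))   # 8
print(bits_needed(999))   # 10
print(to_switches(5, 4))  # [False, True, False, True]
print(from_switches(to_switches(999, 10)))  # 999
```

Eight switches can hold values only up to 255, so the largest three-digit decimal number, 999, needs ten switches where decimal notation needs just three digits.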

The first large-scale digital computer of this kind appeared in 1944 at Harvard University, built by mathematician Howard Aiken (1900–1973). Sponsored by IBM, it was variously known as the Harvard Mark I or the IBM Automatic Sequence Controlled Calculator (ASCC). A giant of a machine, stretching 15m (50ft) in length, it was like a huge mechanical calculator built into a wall. It must have sounded impressive, because it stored and processed numbers using "clickety-clack" electromagnetic relays (electrically operated magnets that automatically switched lines in telephone exchanges)—no fewer than 3304 of them. Impressive they may have been, but relays suffered from several problems: they were large (that's why the Harvard Mark I had to be so big); they needed quite hefty pulses of power to make them switch; and they were slow (it took time for a relay to flip from "off" to "on" or from 0 to 1).

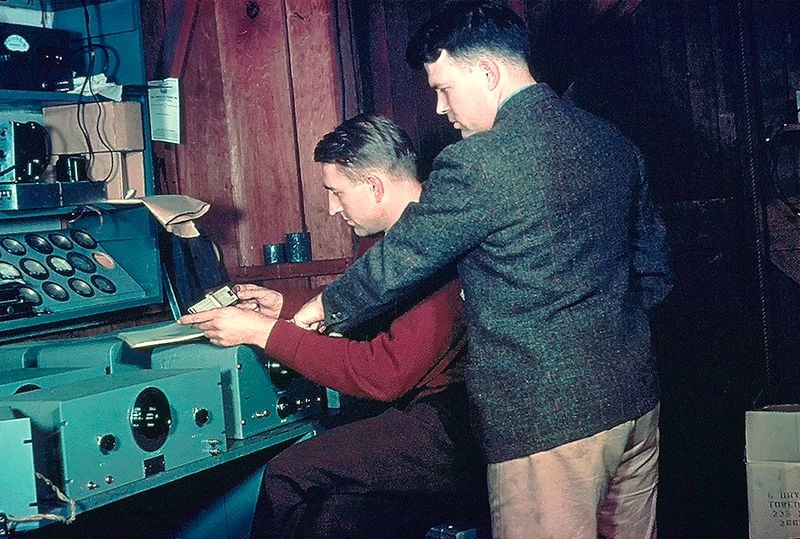

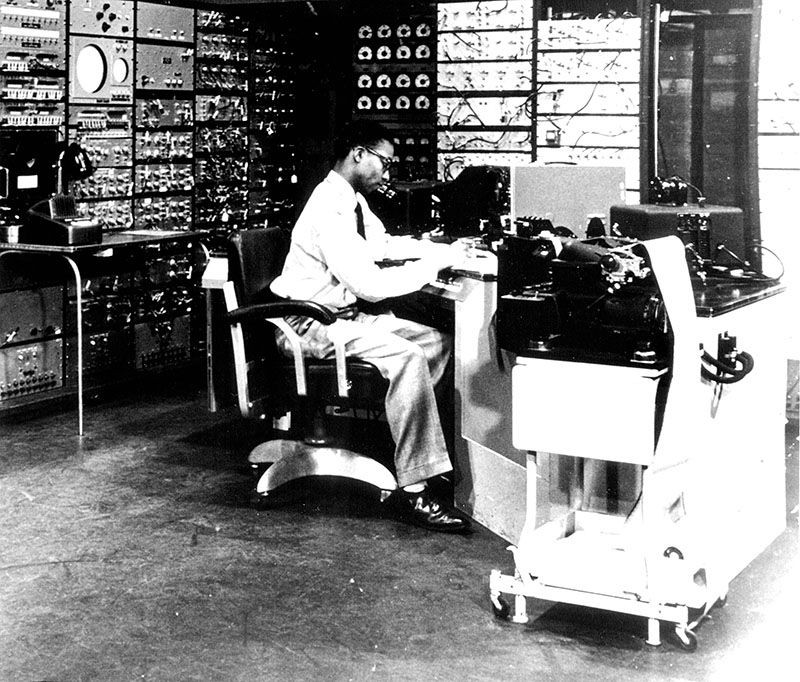

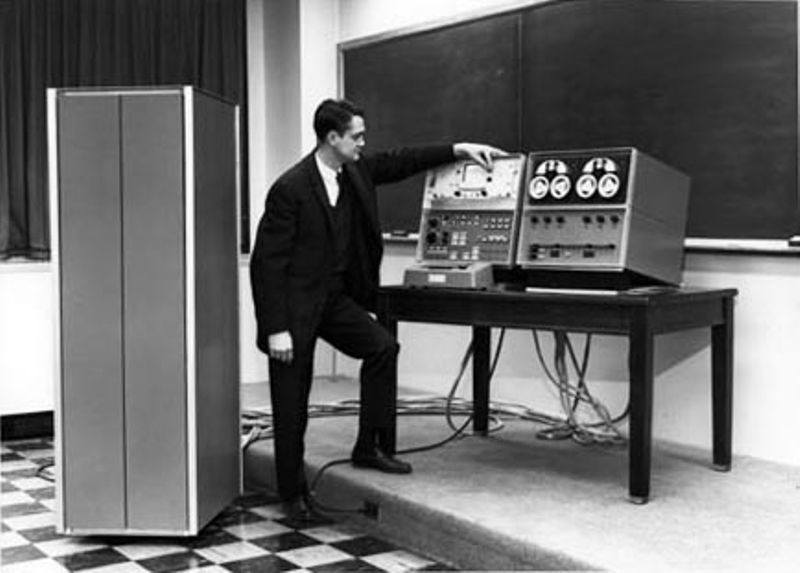

Photo: An analog computer being used in military research in 1949. Picture courtesy of NASA on the Commons (where you can download a larger version).

Most of the machines developed around this time were intended for military purposes. Like Babbage's never-built mechanical engines, they were designed to calculate artillery firing tables and chew through the other complex chores that were then the lot of military mathematicians. During World War II, the military co-opted thousands of the best scientific minds: recognizing that science would win the war, Vannevar Bush's Office of Scientific Research and Development employed 10,000 scientists from the United States alone. Things were very different in Germany. When Konrad Zuse offered to build his Z2 computer to help the army, they couldn't see the need—and turned him down.

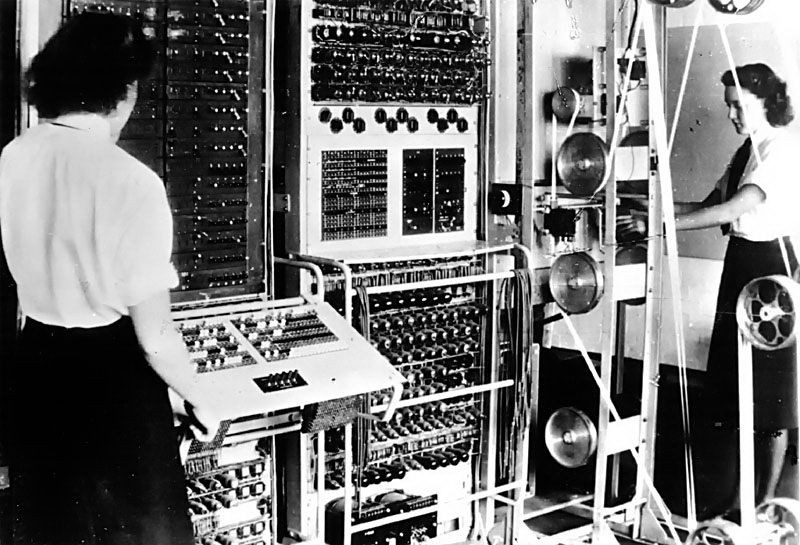

On the Allied side, great minds began to make great breakthroughs. In 1943, a team of mathematicians based at Bletchley Park near London, England (including Alan Turing) built a computer called Colossus to help them crack secret German codes. Colossus was the first fully electronic computer. Instead of relays, it used a better form of switch known as a vacuum tube (also known, especially in Britain, as a valve). The vacuum tube, each one about as big as a person's thumb (earlier ones were very much bigger) and glowing red hot like a tiny electric light bulb, had been invented in 1906 by Lee de Forest (1873–1961), who named it the Audion. This breakthrough earned de Forest his nickname as "the father of radio" because the first major use of vacuum tubes was in radio receivers, where they amplified weak incoming signals so people could hear them more clearly. [5] In computers such as the ABC and Colossus, vacuum tubes found an alternative use as faster and more compact switches.

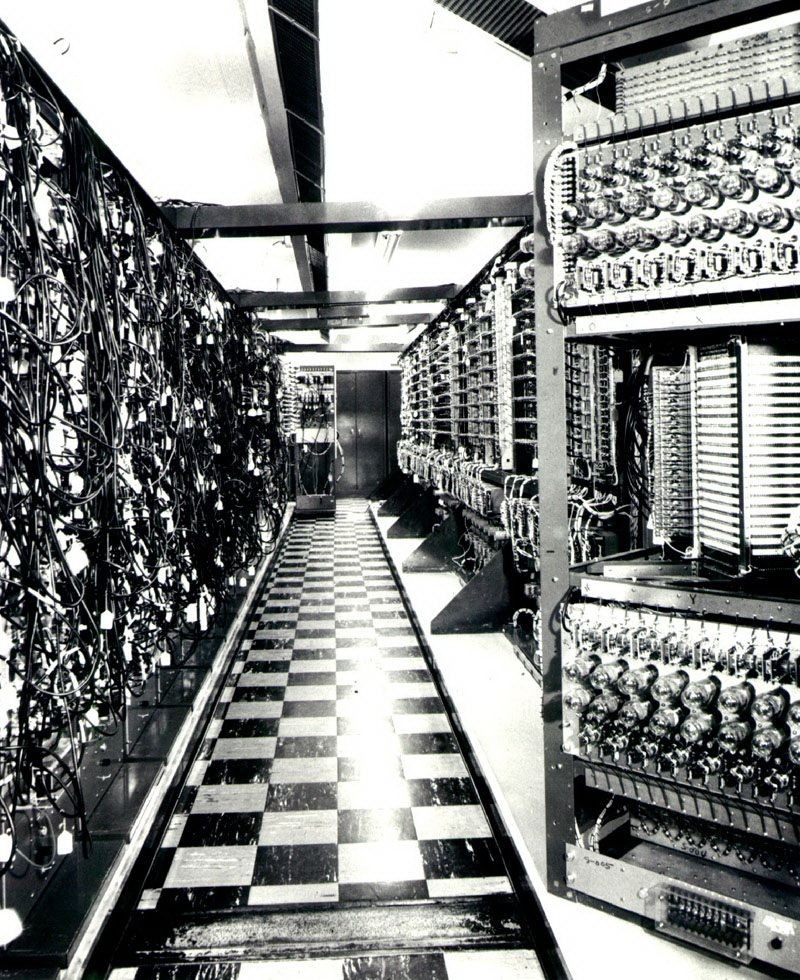

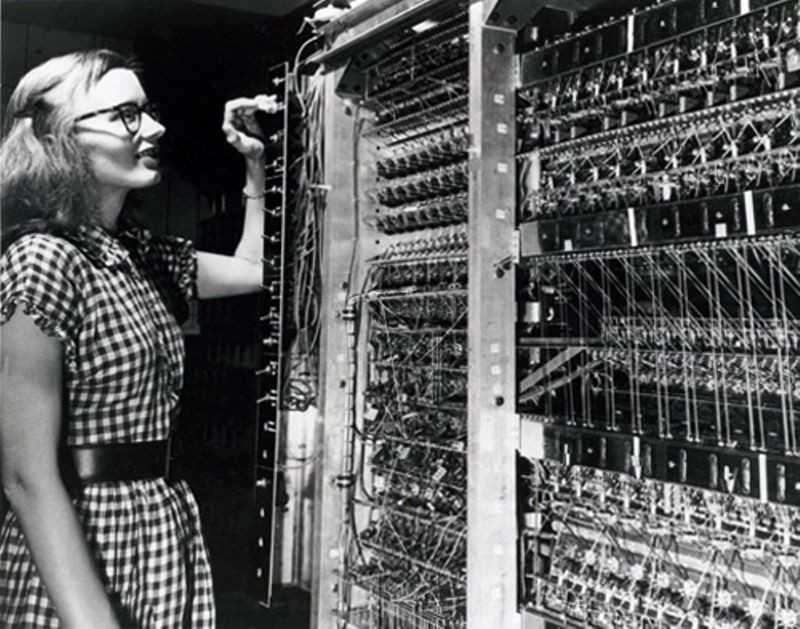

Just like the codes it was trying to crack, Colossus was top-secret and its existence wasn't confirmed until after the war ended. As far as most people were concerned, vacuum tubes were pioneered by a more visible computer that appeared in 1946: the Electronic Numerical Integrator And Computer (ENIAC). The ENIAC's inventors, two scientists from the University of Pennsylvania, John Mauchly (1907–1980) and J. Presper Eckert (1919–1995), were originally inspired by Bush's Differential Analyzer; years later Eckert recalled that ENIAC was the "descendant of Dr Bush's machine." But the machine they constructed was far more ambitious. It contained nearly 18,000 vacuum tubes (nine times more than Colossus), was around 24 m (80 ft) long, and weighed almost 30 tons. ENIAC is generally recognized as the world's first fully electronic, general-purpose, digital computer. Colossus might have qualified for this title too, but it was designed purely for one job (code-breaking); since it couldn't store a program, it couldn't easily be reprogrammed to do other things.

Photo: Sir Maurice Wilkes (left), his collaborator William Renwick, and the early EDSAC-1 electronic computer they built in Cambridge, pictured around 1947/8. Picture courtesy of and © University of Cambridge Computer Laboratory, published with permission via Wikimedia Commons under a Creative Commons (CC BY 2.0) licence.

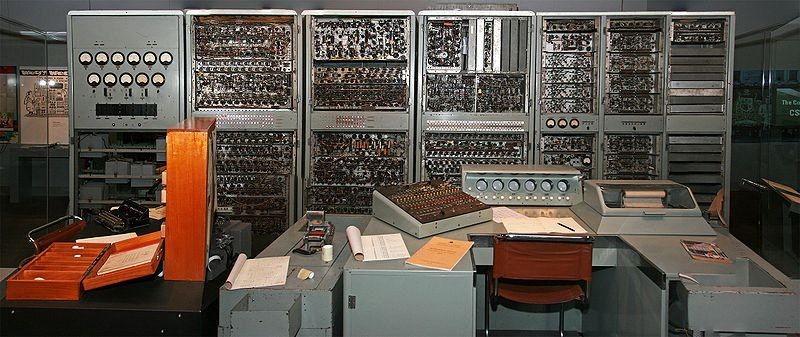

ENIAC was just the beginning. Its two inventors formed the Eckert Mauchly Computer Corporation in the late 1940s. Working with a brilliant Hungarian mathematician, John von Neumann (1903–1957), who was based at Princeton University, they then designed a better machine called EDVAC (Electronic Discrete Variable Automatic Computer). In a key piece of work, von Neumann helped to define how the machine stored and processed its programs, laying the foundations for how all modern computers operate. [6] After EDVAC, Eckert and Mauchly developed UNIVAC 1 (UNIVersal Automatic Computer) in 1951. They were helped in this task by a young, largely unknown American mathematician and Naval reservist named Grace Murray Hopper (1906–1992), who had originally been employed by Howard Aiken on the Harvard Mark I. Like Herman Hollerith's tabulator over 50 years before, UNIVAC 1 was used for processing data from the US census. It was then manufactured for other users—and became the world's first large-scale commercial computer.

Machines like Colossus, the ENIAC, and the Harvard Mark I compete for significance and recognition in the minds of computer historians. Which one was truly the first great modern computer? All of them and none: these—and several other important machines—evolved our idea of the modern electronic computer during the key period between the late 1930s and the early 1950s. Among those other machines were pioneering computers put together by English academics, notably the Manchester/Ferranti Mark I, built at Manchester University by Frederic Williams (1911–1977) and Thomas Kilburn (1921–2001), and the EDSAC (Electronic Delay Storage Automatic Calculator), built by Maurice Wilkes (1913–2010) at Cambridge University. [7]

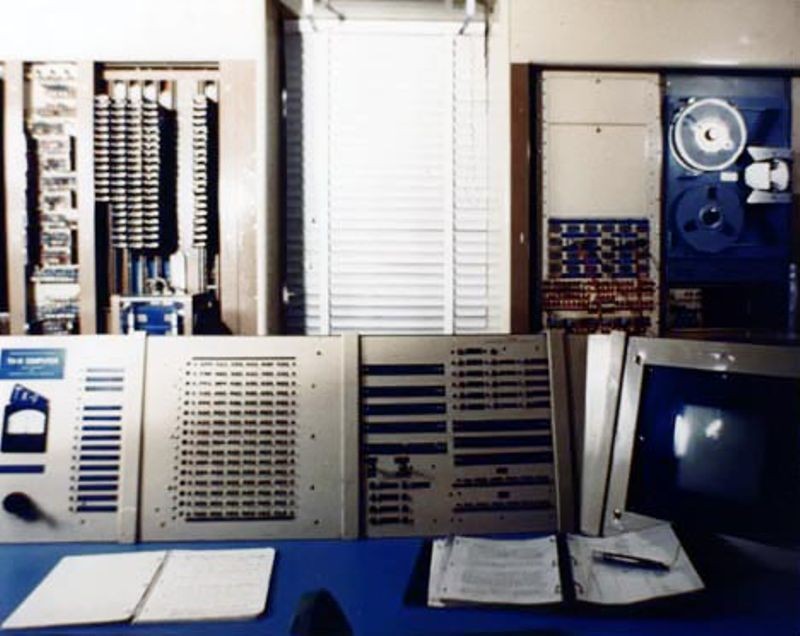

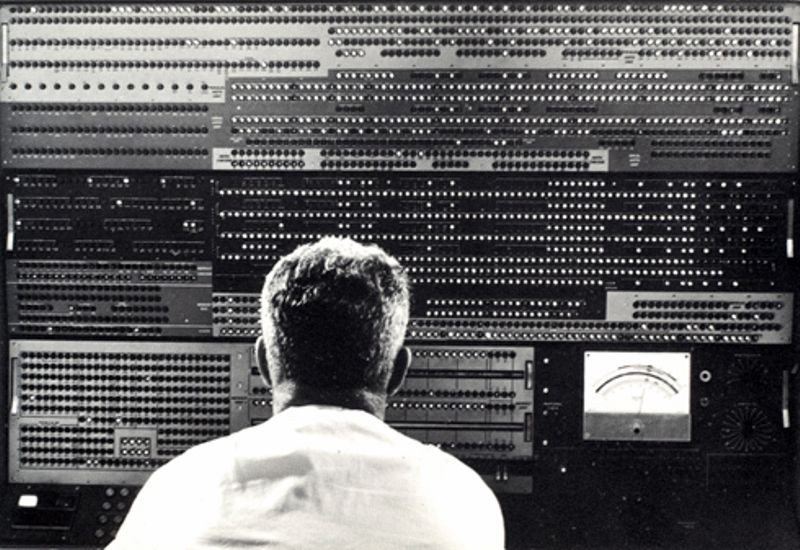

Photo: Control panel of the UNIVAC 1, the world's first large-scale commercial computer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

The microelectronic revolution

Vacuum tubes were a considerable advance on relay switches, but machines like the ENIAC were notoriously unreliable. The modern term for a problem that holds up a computer program is a "bug." Popular legend has it that this word entered the vocabulary of computer programmers sometime in the 1950s when moths, attracted by the glowing lights of vacuum tubes, flew inside machines like the ENIAC, caused a short circuit, and brought work to a juddering halt. But there were other problems with vacuum tubes too. They consumed enormous amounts of power: the ENIAC used about 2000 times as much electricity as a modern laptop. And they took up huge amounts of space. Military needs were driving the development of machines like the ENIAC, but the sheer size of vacuum tubes had now become a real problem. ABC had used 300 vacuum tubes, Colossus had 2000, and the ENIAC had 18,000. The ENIAC's designers had boasted that its calculating speed was "at least 500 times as great as that of any other existing computing machine." But developing computers that were an order of magnitude more powerful still would have needed hundreds of thousands or even millions of vacuum tubes—which would have been far too costly, unwieldy, and unreliable. So a new technology was urgently required.

The solution appeared in 1947 thanks to three physicists working at Bell Telephone Laboratories (Bell Labs). John Bardeen (1908–1991), Walter Brattain (1902–1987), and William Shockley (1910–1989) were then helping Bell to develop new technology for the American public telephone system, so the electrical signals that carried phone calls could be amplified more easily and carried further. Shockley, who was leading the team, believed he could use semiconductors (materials such as germanium and silicon that allow electricity to flow through them only when they've been treated in special ways) to make a better form of amplifier than the vacuum tube. When his early experiments failed, he set Bardeen and Brattain to work on the task for him. Eventually, in December 1947, they created a new form of amplifier that became known as the point-contact transistor. Bell Labs credited Bardeen and Brattain with the transistor and awarded them a patent. This enraged Shockley and prompted him to invent an even better design, the junction transistor, which has formed the basis of most transistors ever since.

Like vacuum tubes, transistors could be used as amplifiers or as switches. But they had several major advantages. They were a fraction the size of vacuum tubes (typically about as big as a pea), used no power at all unless they were in operation, and were virtually 100 percent reliable. The transistor was one of the most important breakthroughs in the history of computing and it earned its inventors the world's greatest science prize, the 1956 Nobel Prize in Physics. By that time, however, the three men had already gone their separate ways. John Bardeen had begun pioneering research into superconductivity, which would earn him a second Nobel Prize in 1972. Walter Brattain moved to another part of Bell Labs.

William Shockley decided to stick with the transistor, eventually forming his own corporation to develop it further. His decision would have extraordinary consequences for the computer industry. With a small amount of capital, Shockley set about hiring the best brains he could find in American universities, including young electrical engineer Robert Noyce (1927–1990) and research chemist Gordon Moore (1929–). It wasn't long before Shockley's idiosyncratic and bullying management style upset his workers. In 1957, eight of them—including Noyce and Moore—left Shockley Transistor to found a company of their own, Fairchild Semiconductor, just down the road. Thus began the growth of "Silicon Valley," the part of California centered on Palo Alto, where many of the world's leading computer and electronics companies have been based ever since. [8]

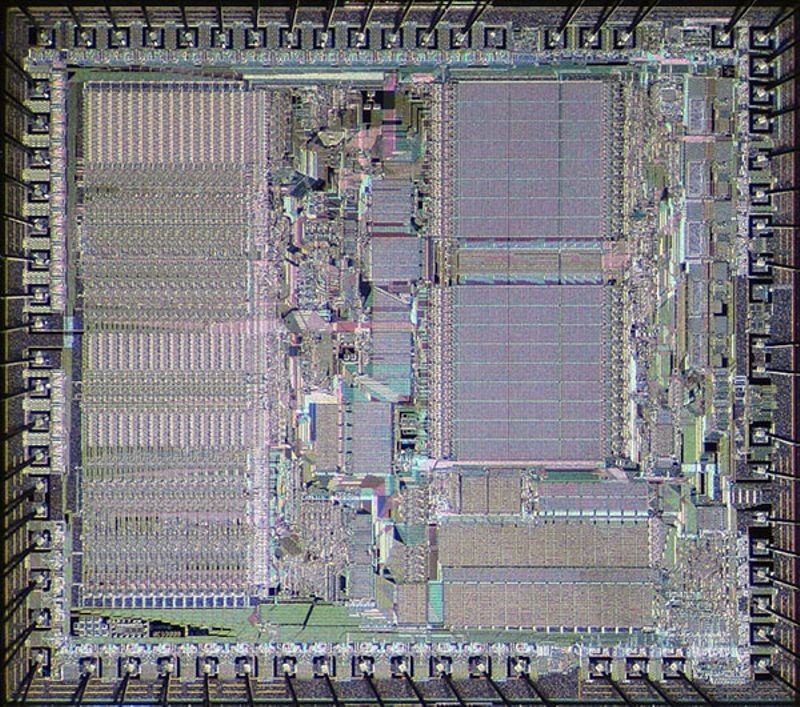

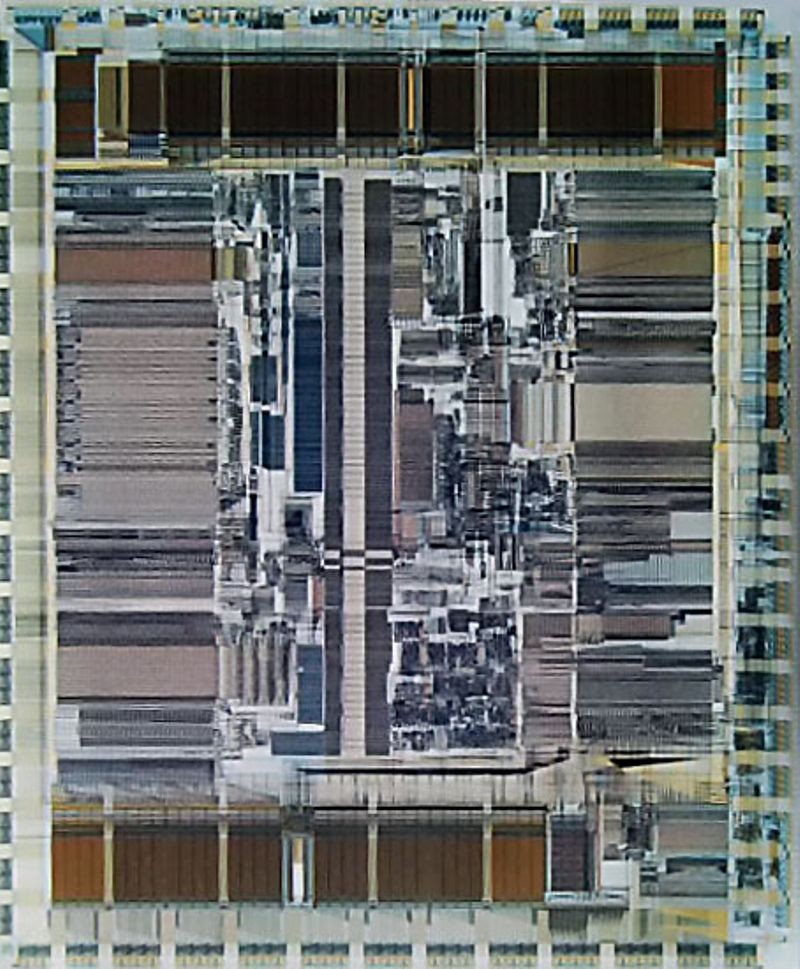

It was in Fairchild's California building that the next breakthrough occurred—although, somewhat curiously, it also happened at exactly the same time in the Dallas laboratories of Texas Instruments. In Dallas, a young engineer from Kansas named Jack Kilby (1923–2005) was considering how to improve the transistor. Although transistors were a great advance on vacuum tubes, one key problem remained. Machines that used thousands of transistors still had to be hand wired to connect all these components together. That process was laborious, costly, and error prone. Wouldn't it be better, Kilby reflected, if many transistors could be made in a single package? This prompted him to invent the "monolithic" integrated circuit (IC), a collection of transistors and other components that could be manufactured all at once, in a block, on the surface of a semiconductor. Kilby's invention was another step forward, but it also had a drawback: the components in his integrated circuit still had to be connected by hand. While Kilby was making his breakthrough in Dallas, unknown to him, Robert Noyce was perfecting almost exactly the same idea at Fairchild in California. Noyce went one better, however: he found a way to include the connections between components in an integrated circuit, thus automating the entire process.

Photo: An integrated circuit from the 1980s. This is an EPROM chip (effectively a forerunner of flash memory, which you could only erase with a blast of ultraviolet light).

Mainframes, minis, and micros

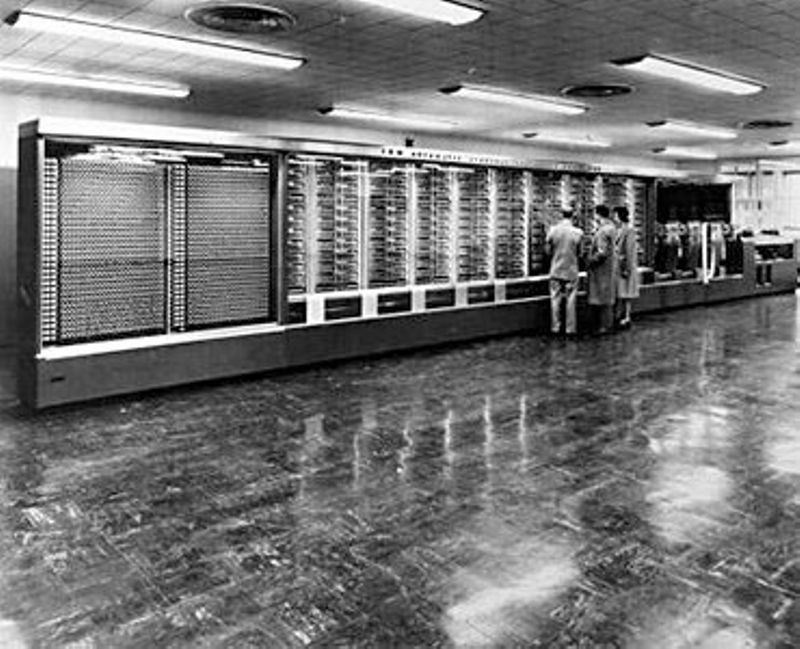

Photo: An IBM 704 mainframe pictured at NASA in 1958. Designed by Gene Amdahl, this scientific number cruncher was the successor to the 701 and helped pave the way to arguably the most important IBM computer of all time, the System/360, which Amdahl also designed. Photo courtesy of NASA.

Photo: The control panel of DEC's classic 1965 PDP-8 minicomputer. Photo by Cory Doctorow published on Flickr in 2020 under a Creative Commons (CC BY-SA 2.0) licence.

Integrated circuits, as much as transistors, helped to shrink computers during the 1960s. In 1943, IBM boss Thomas Watson had reputedly quipped: "I think there is a world market for about five computers." Just two decades later, the company and its competitors had installed around 25,000 large computer systems across the United States. As the 1960s wore on, integrated circuits became increasingly sophisticated and compact. Soon, engineers were speaking of large-scale integration (LSI), in which hundreds of components could be crammed onto a single chip, and then very-large-scale integration (VLSI), when the same chip could contain thousands of components.

The logical conclusion of all this miniaturization was that, someday, someone would be able to squeeze an entire computer onto a chip. In 1968, Robert Noyce and Gordon Moore had left Fairchild to establish a new company of their own. With integration very much in their minds, they called it Integrated Electronics or Intel for short. Originally they had planned to make memory chips, but when the company landed an order to make chips for a range of pocket calculators, history headed in a different direction. A couple of their engineers, Federico Faggin (1941–) and Marcian Edward (Ted) Hoff (1937–), realized that instead of making a range of specialist chips for a range of calculators, they could make a universal chip that could be programmed to work in them all. Thus was born the general-purpose, single chip computer or microprocessor—and that brought about the next phase of the computer revolution.

Personal computers

By 1974, Intel had launched a popular microprocessor known as the 8080 and computer hobbyists were soon building home computers around it. The first was the MITS Altair 8800, built by Ed Roberts. With its front panel covered in red LED lights and toggle switches, it was a far cry from modern PCs and laptops. Even so, it sold by the thousand and earned Roberts a fortune. The Altair inspired a Californian electronics wizard named Steve Wozniak (1950–) to develop a computer of his own. "Woz" is often described as the hacker's "hacker"—a technically brilliant and highly creative engineer who pushed the boundaries of computing largely for his own amusement. In the mid-1970s, he was working at the Hewlett-Packard computer company in California, and spending his free time tinkering away as a member of the Homebrew Computer Club in the Bay Area.

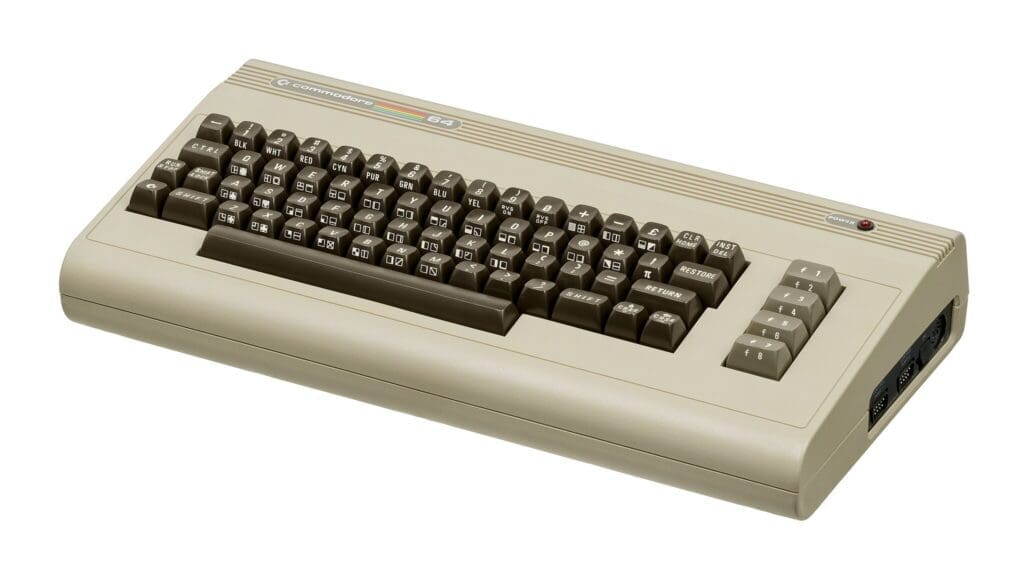

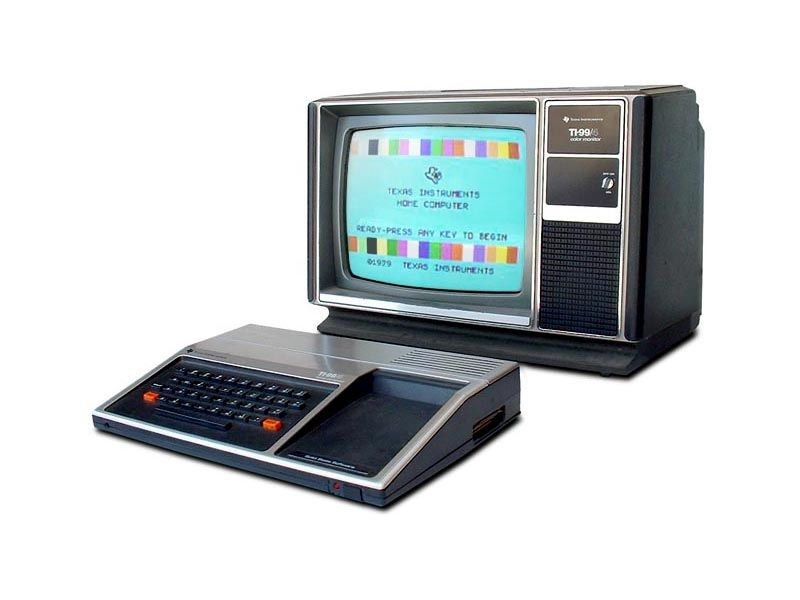

After seeing the Altair, Woz used a 6502 microprocessor (made by an Intel rival, MOS Technology) to build a better home computer of his own: the Apple I. When he showed off his machine to his colleagues at the club, they all wanted one too. One of his friends, Steve Jobs (1955–2011), persuaded Woz that they should go into business making the machine. Woz agreed so, famously, they set up Apple Computer Corporation in a garage belonging to Jobs' parents. After selling 175 of the Apple I for the devilish price of $666.66, Woz built a much better machine called the Apple ][ (pronounced "Apple Two"). While the Altair 8800 looked like something out of a science lab, and the Apple I was little more than a bare circuit board, the Apple ][ took its inspiration from such things as Sony televisions and stereos: it had a neat and friendly looking cream plastic case. Launched in April 1977, it was the world's first easy-to-use home "microcomputer." Soon home users, schools, and small businesses were buying the machine in their tens of thousands—at $1298 a time. Two things turned the Apple ][ into a really credible machine for small firms: a disk drive unit, launched in 1978, which made it easy to store data; and a spreadsheet program called VisiCalc, which gave Apple users the ability to analyze that data. In just two and a half years, Apple sold around 50,000 of the machine, quickly accelerating out of Jobs' garage to become one of the world's biggest companies. Dozens of other microcomputers were launched around this time, including the TRS-80 from Radio Shack (Tandy in the UK) and the Commodore PET. [9]

Apple's success selling to businesses came as a great shock to IBM and the other big companies that dominated the computer industry. It didn't take a VisiCalc spreadsheet to figure out that, if the trend continued, upstarts like Apple would undermine IBM's immensely lucrative business market selling "Big Blue" computers. In 1980, IBM finally realized it had to do something and launched a highly streamlined project to save its business. One year later, it released the IBM Personal Computer (PC), based on an Intel 8088 microprocessor, which rapidly reversed the company's fortunes and stole the market back from Apple.

The PC was successful essentially for one reason. All the dozens of microcomputers that had been launched in the 1970s—including the Apple ][—were incompatible. All used different hardware and worked in different ways. Most were programmed using a simple, English-like language called BASIC, but each one used its own flavor of BASIC, which was tied closely to the machine's hardware design. As a result, programs written for one machine would generally not run on another one without a great deal of conversion. Companies who wrote software professionally typically wrote it just for one machine and, consequently, there was no software industry to speak of.

In 1976, Gary Kildall (1942–1994), a teacher and computer scientist, and one of the founders of the Homebrew Computer Club, had figured out a solution to this problem. Kildall wrote an operating system (a computer's fundamental control software) called CP/M that acted as an intermediary between the user's programs and the machine's hardware. With a stroke of genius, Kildall realized that all he had to do was rewrite CP/M so it worked on each different machine. Then all those machines could run identical user programs—without any modification at all—inside CP/M. That would make all the different microcomputers compatible at a stroke. By the early 1980s, Kildall had become a multimillionaire through the success of his invention: the first personal computer operating system. Naturally, when IBM was developing its personal computer, it approached him hoping to put CP/M on its own machine. Legend has it that Kildall was out flying his personal plane when IBM called, so missed out on one of the world's greatest deals. But the truth seems to have been that IBM wanted to buy CP/M outright for just $200,000, while Kildall recognized his product was worth millions more and refused to sell. Instead, IBM turned to a young programmer named Bill Gates (1955–). His then tiny company, Microsoft, rapidly put together an operating system called DOS, based on a product called QDOS (Quick and Dirty Operating System), which they acquired from Seattle Computer Products. Some believe Microsoft and IBM cheated Kildall out of his place in computer history; Kildall himself accused them of copying his ideas. Others think Gates was simply the shrewder businessman. Either way, the IBM PC, powered by Microsoft's operating system, was a runaway success.
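The intermediary idea can be sketched loosely in Python. Everything here is hypothetical and invented for illustration (the class names, the `print_line` call, and the two imaginary machines); it is not CP/M's actual interface. The point is only that one user program runs unmodified on two different machines because each machine has its own port of the same operating-system layer.

```python
class MachineAOS:
    """Port of the (hypothetical) OS for machine A, which has a text screen."""

    def __init__(self) -> None:
        self.screen: list[str] = []

    def print_line(self, text: str) -> None:
        self.screen.append(text)  # machine A stores whole lines on its screen


class MachineBOS:
    """Port of the same OS for machine B, which sends character codes to a port."""

    def __init__(self) -> None:
        self.port: list[int] = []

    def print_line(self, text: str) -> None:
        # machine B wants one character code at a time, ending with a carriage return
        self.port.extend(ord(ch) for ch in text + "\r")


def user_program(os_layer) -> None:
    """A user program that knows only the OS call, not the hardware beneath it."""
    os_layer.print_line("2 + 2 = 4")


machine_a = MachineAOS()
machine_b = MachineBOS()
user_program(machine_a)  # identical program, no modification
user_program(machine_b)
print(machine_a.screen)                 # ['2 + 2 = 4']
print(bytes(machine_b.port).decode())   # same text, followed by a carriage return
```

Only the two small OS classes differ between machines; `user_program` is written once, which is the kind of compatibility the paragraph above describes.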

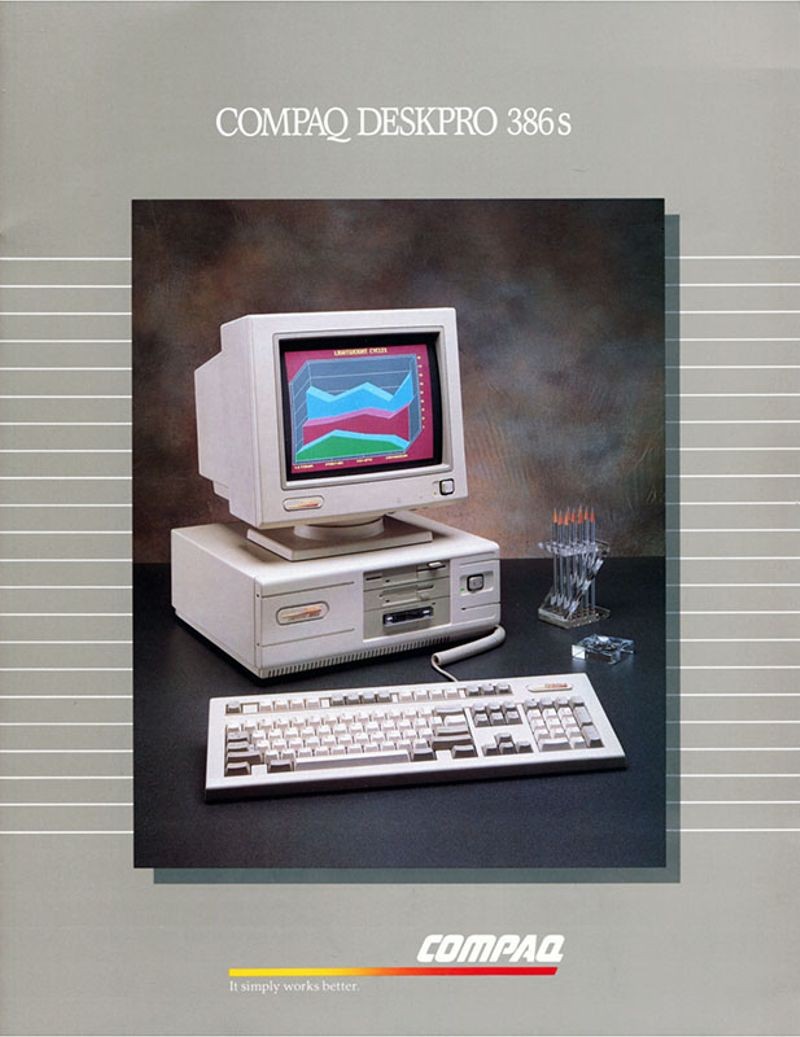

Yet IBM's victory was short-lived. Cannily, Bill Gates had sold IBM the rights to one flavor of DOS (PC-DOS) and retained the rights to a very similar version (MS-DOS) for his own use. When other computer manufacturers, notably Compaq and Dell, started making IBM-compatible (or "cloned") hardware, they too came to Gates for the software. IBM charged a premium for machines that carried its badge, but consumers soon realized that PCs were commodities: they contained almost identical components—an Intel microprocessor, for example—no matter whose name they had on the case. As IBM lost market share, the ultimate victors were Microsoft and Intel, who were soon supplying the software and hardware for almost every PC on the planet. Apple, IBM, and Kildall made a great deal of money—but all failed to capitalize decisively on their early success. [10]

Photo: Personal computers threatened companies making large "mainframes" like this one. Picture courtesy of NASA on the Commons (where you can download a larger version).

The user revolution

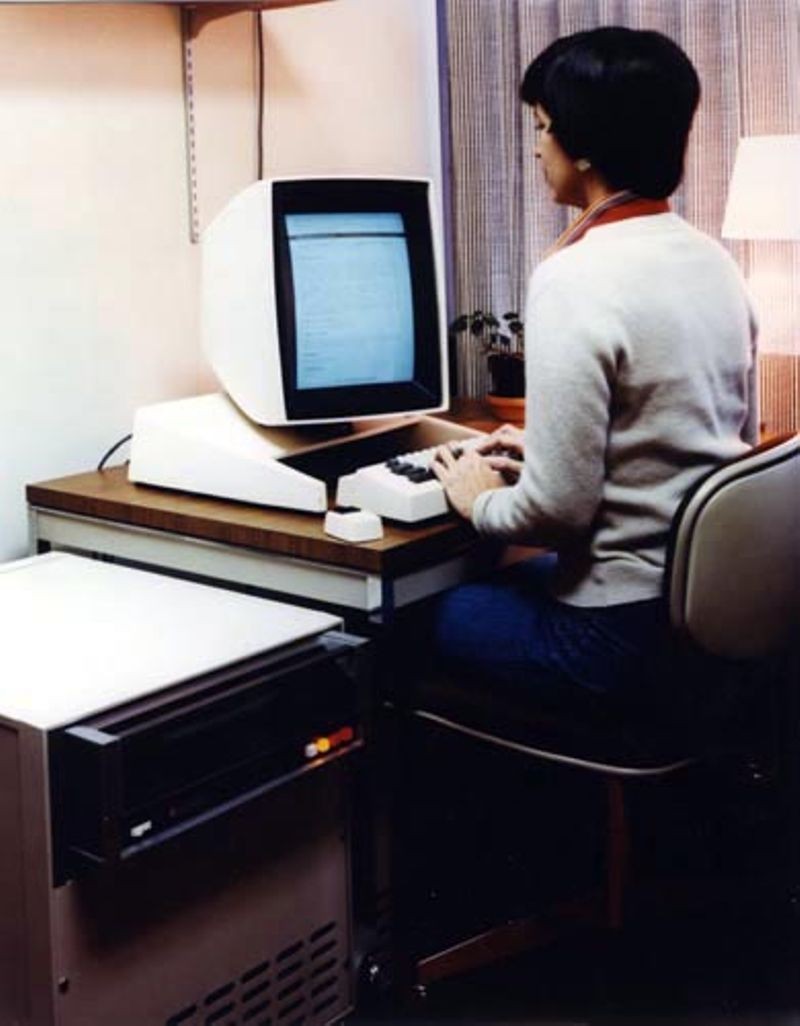

Fortunately for Apple, it had another great idea. One of the Apple II's strongest suits was its sheer "user-friendliness." For Steve Jobs, developing truly easy-to-use computers became a personal mission in the early 1980s. What truly inspired him was a visit to PARC (Palo Alto Research Center), a cutting-edge computer laboratory then run as a division of the Xerox Corporation. Xerox had started developing computers in the early 1970s, believing they would make paper (and the highly lucrative photocopiers Xerox made) obsolete. One of PARC's research projects was an advanced $40,000 computer called the Xerox Alto. Unlike most microcomputers launched in the 1970s, which were programmed by typing in text commands, the Alto had a desktop-like screen with little picture icons that could be moved around with a mouse: it was the very first graphical user interface (GUI, pronounced "gooey")—an idea conceived by Alan Kay (1940–) and now used in virtually every modern computer. The Alto borrowed some of its ideas, including the mouse, from 1960s computer pioneer Douglas Engelbart (1925–2013).

Photo: During the 1980s, computers started to converge on the same basic "look and feel," largely inspired by the work of pioneers like Alan Kay and Douglas Engelbart. Photographs in the Carol M. Highsmith Archive, courtesy of US Library of Congress, Prints and Photographs Division.

Back at Apple, Jobs launched his own version of the Alto project to develop an easy-to-use computer called PITS (Person In The Street). This machine became the Apple Lisa, launched in January 1983—the first widely available computer with a GUI desktop. With a retail price of $10,000, over three times the cost of an IBM PC, the Lisa was a commercial flop. But it paved the way for a better, cheaper machine called the Macintosh that Jobs unveiled a year later, in January 1984. With its memorable launch ad for the Macintosh inspired by George Orwell's novel 1984, and directed by Ridley Scott (director of the dystopic movie Blade Runner), Apple took a swipe at IBM's monopoly, criticizing what it portrayed as the firm's domineering—even totalitarian—approach: Big Blue was really Big Brother. Apple's ad promised a very different vision: "On January 24, Apple Computer will introduce Macintosh. And you'll see why 1984 won't be like '1984'." The Macintosh was a critical success and helped to invent the new field of desktop publishing in the mid-1980s, yet it never came close to challenging IBM's position.

Ironically, Jobs' easy-to-use machine also helped Microsoft to dislodge IBM as the world's leading force in computing. When Bill Gates saw how the Macintosh worked, with its easy-to-use picture-icon desktop, he launched Windows, an upgraded version of his MS-DOS software. Apple saw this as blatant plagiarism and filed a $5.5 billion copyright lawsuit in 1988. Four years later, the case collapsed with Microsoft effectively securing the right to use the Macintosh "look and feel" in all present and future versions of Windows. Microsoft's Windows 95 system, launched three years later, had an easy-to-use, Macintosh-like desktop and MS-DOS running behind the scenes.

Photo: The IBM Blue Gene/P supercomputer at Argonne National Laboratory: one of the world's most powerful computers. Picture courtesy of Argonne National Laboratory published on Wikimedia Commons in 2009 under a Creative Commons Licence.

From nets to the Internet

Standardized PCs running standardized software brought a big benefit for businesses: computers could be linked together into networks to share information. At Xerox PARC in 1973, electrical engineer Bob Metcalfe (1946–) developed a new way of linking computers "through the ether" (empty space) that he called Ethernet. A few years later, Metcalfe left Xerox to form his own company, 3Com, to help companies realize "Metcalfe's Law": computers become more useful the more closely connected they are to other people's computers. As more and more companies explored the power of local area networks (LANs) during the 1980s, it became clear that there were great benefits to be gained by connecting computers over even greater distances—into so-called wide area networks (WANs).
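Metcalfe's Law is often stated more formally than it is above: the value of a network grows roughly with the square of the number of machines connected to it. That formulation is an assumption brought in here, not something this article spells out. One common way to motivate it is to count the possible pairwise links between n machines, which is n(n − 1)/2. The helper name `pairwise_links` below is hypothetical.

```python
def pairwise_links(n: int) -> int:
    """Number of distinct pairs that can be formed among n machines."""
    return n * (n - 1) // 2


# Possible links grow much faster than the number of machines.
for n in (2, 10, 100):
    print(n, pairwise_links(n))
```

Going from 10 to 100 machines multiplies the machine count by 10 but the number of possible links by 110 (from 45 to 4,950), which is the intuition behind connecting LANs into ever larger networks.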

Photo: Computers aren't what they used to be: they're much less noticeable because they're much more seamlessly integrated into everyday life. Some are "embedded" into household gadgets like coffee makers or televisions. Others travel round in our pockets in our smartphones—essentially pocket computers that we can program simply by downloading "apps" (applications).

Today, the best-known WAN is the Internet—a global network of individual computers and LANs that links up hundreds of millions of people. The history of the Internet is another story, but it began in the 1960s when four American universities launched a project to connect their computer systems together to make the first WAN. Later, with funding from the Department of Defense, that network became a bigger project called ARPANET (Advanced Research Projects Agency Network). In the mid-1980s, the US National Science Foundation (NSF) launched its own WAN called NSFNET. The convergence of all these networks produced what we now call the Internet later in the 1980s. Shortly afterward, the power of networking gave British computer programmer Tim Berners-Lee (1955–) his big idea: to combine the power of computer networks with the information-sharing idea Vannevar Bush had proposed in 1945. Thus was born the World Wide Web—an easy way of sharing information over a computer network, which made possible the modern age of cloud computing (where anyone can access vast computing power over the Internet without having to worry about where or how their data is processed). It's Tim Berners-Lee's invention that brings you this potted history of computing today!

And now where?

- Supercomputers: How do the world's most powerful computers work?

Other websites

There are lots of websites covering computer history. Here are just a few favorites worth exploring!

- The Computer History Museum: The website of the world's biggest computer museum in California.

- The Computing Age: A BBC special report into computing past, present, and future.

- Charles Babbage at the London Science Museum: Lots of information about Babbage and his extraordinary engines. [Archived via the Wayback Machine]

- IBM History: Many fascinating online exhibits, as well as inside information about the part IBM inventors have played in wider computer history.

- Wikipedia History of Computing Hardware: Covers similar ground to this page.

- Computer history images: A small but interesting selection of photos.

- Transistorized!: The history of the invention of the transistor from PBS.

- Intel Museum: The story of Intel's contributions to computing from the 1970s onward.

There are some superb computer history videos on YouTube and elsewhere; here are three good ones to start you off:

- The Difference Engine: A great introduction to Babbage's Difference Engine from Doron Swade, one of the world's leading Babbage experts.

- The ENIAC: A short Movietone news clip about the completion of the world's first programmable electronic computer.

- A tour of the Computer History Museum: Dag Spicer gives us a tour of the world's most famous computer museum, in California.

Text copyright © Chris Woodford 2006, 2023. All rights reserved. Full copyright notice and terms of use.

History of Computers: A Brief Timeline

Discover the history of computers with our timeline, featuring key hardware breakthroughs from the earliest developments to recent innovations. Explore milestones such as Charles Babbage’s Analytical Engine, ENIAC, the transistor’s invention, the IBM PC’s introduction, and the revolutionary impact of artificial intelligence. This timeline highlights significant advancements that have shaped the evolution of computer technology and provides insights into how these innovations continue to influence our world today.

Conceived in the early 1830s, the Analytical Engine represented a monumental leap in computational design, laying the groundwork for modern computing. An accomplished mathematician and mechanical engineer, Charles Babbage envisaged a machine capable of performing any arithmetic operation through programmable instructions. This concept was revolutionary for its time. Babbage’s initial…

Konrad Zuse’s Z1 is a monumental achievement in the history of computing, marking the advent of programmable, mechanical computing devices. Constructed between 1936 and 1938 in Zuse’s parents’ living room, the Z1 was the first in a series of computers that would ultimately revolutionize the field of computer science. The…

In 1941, a significant milestone in the history of computing was achieved with the creation of the Atanasoff-Berry Computer (ABC), recognized as one of the first electronic digital computers. Developed by physicist and mathematician John Atanasoff and his graduate student, Clifford Berry, at Iowa State College (now Iowa State University),…

The period from 1943 to 1944 marked a significant milestone in the history of computing with the development of Colossus, one of the first programmable digital computers. British codebreakers primarily used this groundbreaking machine at Bletchley Park, the United Kingdom’s central site for cryptographic efforts during World War II. Colossus…

In 1946, the world witnessed a monumental leap in computational technology with the unveiling of ENIAC (Electronic Numerical Integrator and Computer), heralded as the first general-purpose electronic digital computer. Developed by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania, ENIAC addressed the complex calculations required for…

The year 1947 marked a pivotal moment in the history of technology with the invention of the transistor by John Bardeen, Walter Brattain, and William Shockley at Bell Laboratories. This groundbreaking development effectively replaced vacuum tubes, which had been the cornerstone of electronic circuits up until that time. Vacuum tubes,…

The year 1951 marked a significant milestone in the history of computing with the introduction of the UNIVAC I (Universal Automatic Computer I), the first commercially produced computer. Developed by J. Presper Eckert and John Mauchly, the UNIVAC I revolutionized data processing and set the stage for the modern computing…

In the annals of computing history, 1956 stands out as a landmark year with the introduction of the IBM 305 RAMAC, the first computer to feature a hard disk drive. This pivotal innovation revolutionized data storage and retrieval, laying the foundation for modern data management systems. The IBM 305 RAMAC,…

In 1958, the groundbreaking invention of the integrated circuit by Jack Kilby and Robert Noyce marked a pivotal moment in the history of technology, revolutionizing the field of electronics and paving the way for the development of more compact and powerful computers. Before this innovation, electronic circuits were constructed using…

In 1960, the Digital Equipment Corporation (DEC) introduced the PDP-1 (Programmed Data Processor-1), an early minicomputer that would become a milestone in the history of computing. The PDP-1 was revolutionary for its time, representing a significant departure from the monolithic, room-filling mainframes that dominated the computing landscape of the era.…

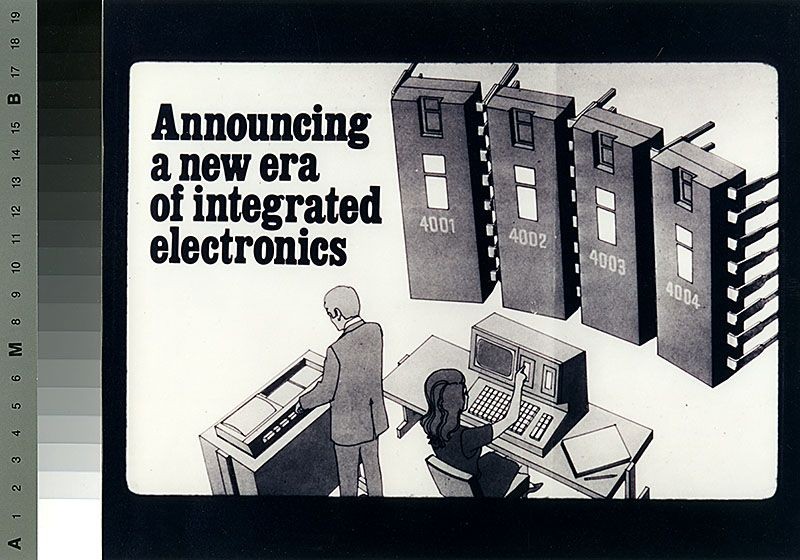

In 1964, IBM introduced the System/360, a groundbreaking family of computers that revolutionized the landscape of mainframe computing and established a new standard in the industry. Before the System/360 launch, the computer market was fragmented, with each system often requiring unique software and peripherals. This created significant inefficiencies and increased…

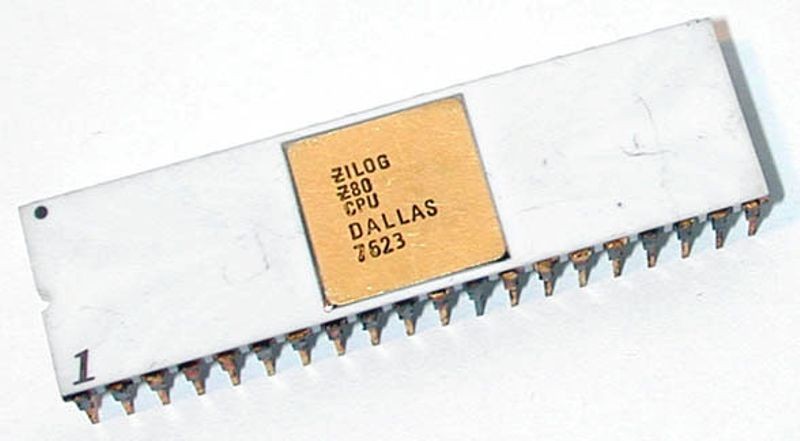

In 1971, the introduction of the Intel 4004 microprocessor marked a pivotal moment in the annals of technological innovation, setting the stage for the microcomputer revolution that would transform industries and societies worldwide. As the first commercially available microprocessor, the Intel 4004 integrated the core functions of a central processing unit (CPU)…

The year 1973 marked a significant milestone in the history of computing with the advent of the Xerox Alto, recognized as the first computer designed with a graphical user interface (GUI). Developed at Xerox’s Palo Alto Research Center (PARC), the Alto revolutionized how humans interacted with computers. Before its inception,…

In 1975, the Altair 8800 emerged as the first commercially successful personal computer, marking a significant milestone in the evolution of computing technology. Developed by Micro Instrumentation and Telemetry Systems (MITS) and designed by Ed Roberts, the Altair 8800 was a groundbreaking product that democratized computing by making it accessible…

In 1976, a momentous chapter began in the annals of technology with the introduction of the Apple I, the inaugural product from Apple, Inc. This product not only marked the genesis of a company that would become a global powerhouse in personal computing but also signaled a paradigm shift in…

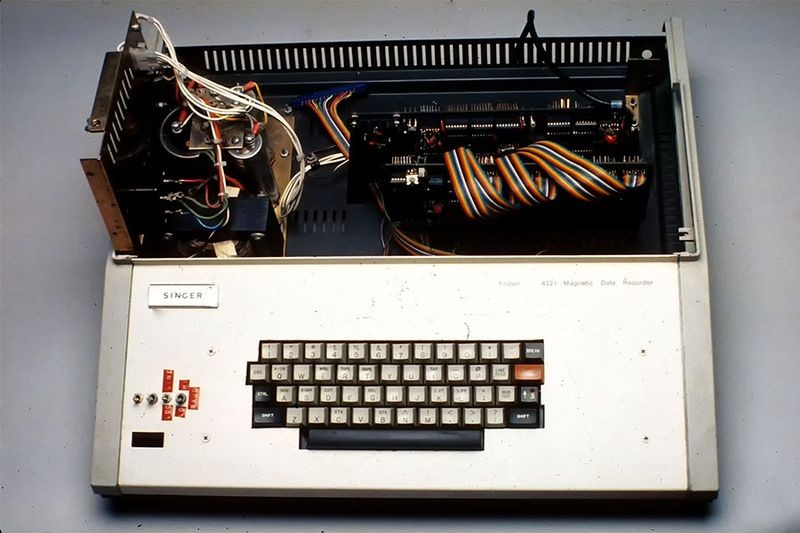

The year 1977 marked a significant milestone in the history of personal computing with the introduction of the Commodore PET, one of the first all-in-one home computers. Short for Personal Electronic Transactor, the Commodore PET revolutionized the market by offering an integrated design that combined the keyboard, monitor, and data…

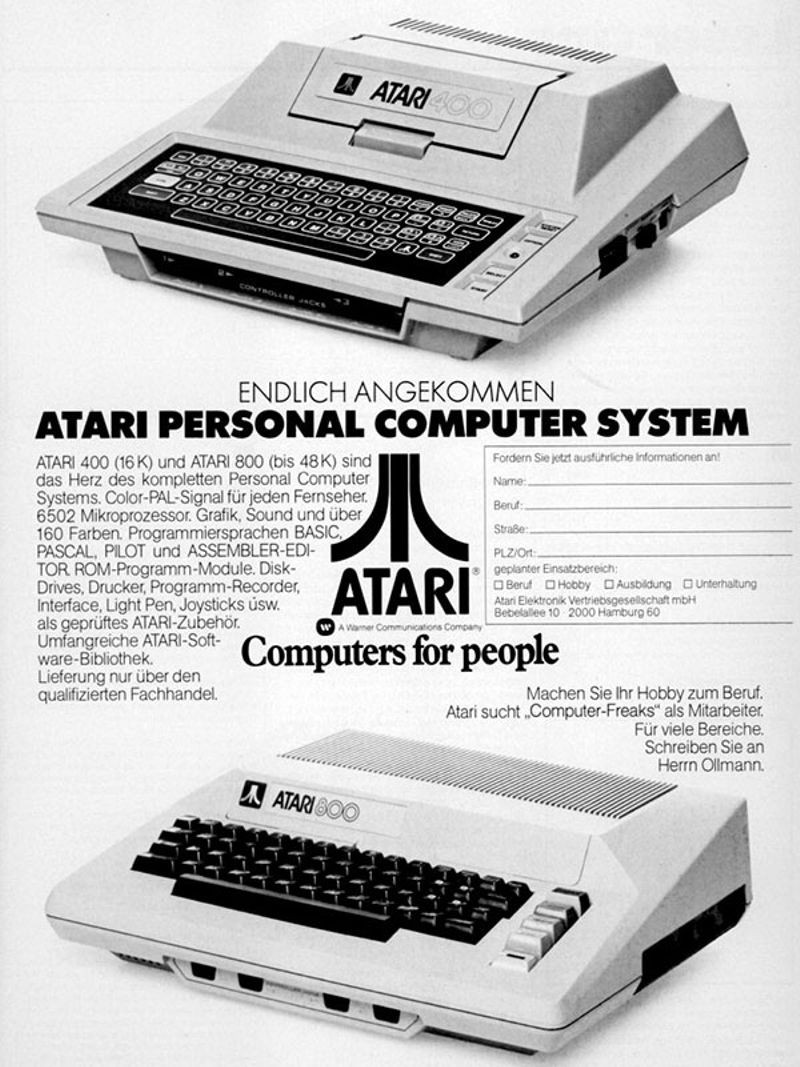

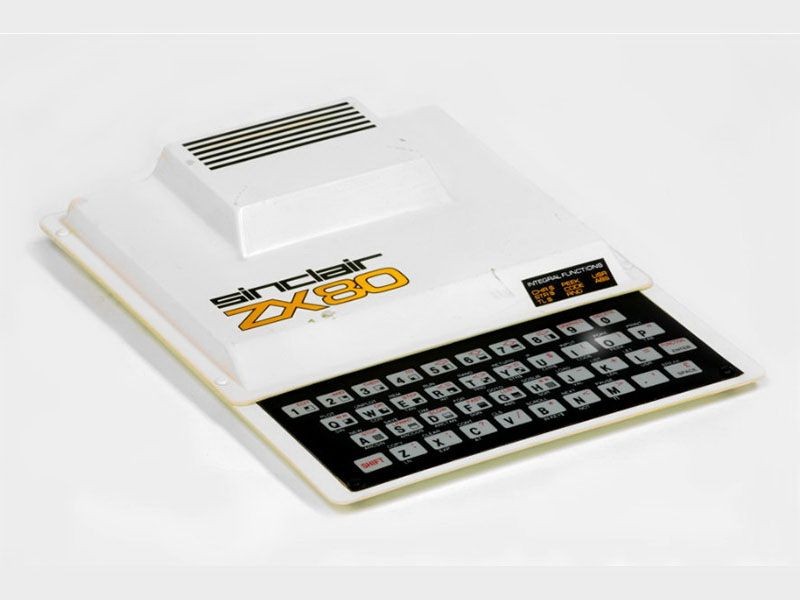

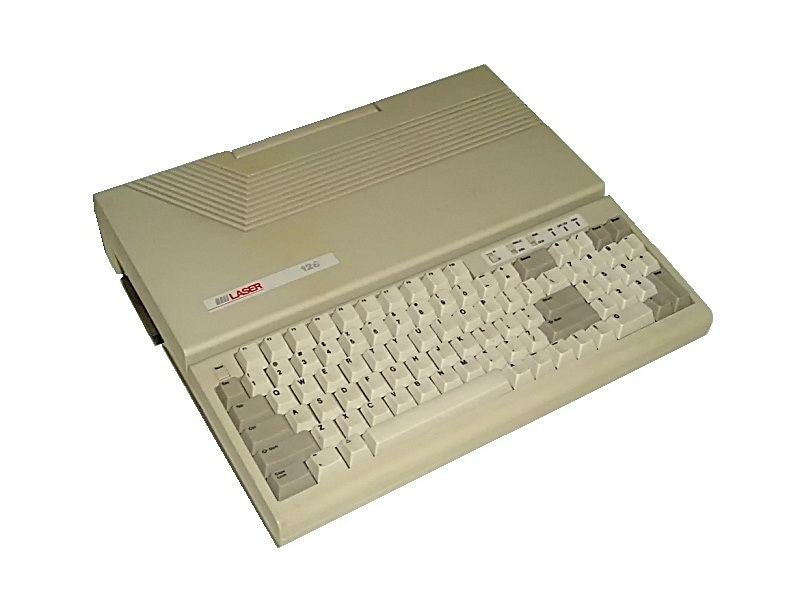

In 1980, the introduction of the Sinclair ZX80 marked a pivotal moment in the history of personal computing, particularly in the United Kingdom. Developed by Sinclair Research Ltd., this compact and affordable home computer was among the first to make computing accessible to the general public. Before the ZX80, computers…