10 Unique Data Science Capstone Project Ideas

A capstone project is a culminating assignment that allows students to demonstrate the skills and knowledge they’ve acquired throughout their degree program. For data science students, it’s a chance to tackle a substantial real-world data problem.

If you’re short on time, here’s a quick answer to your question: Some great data science capstone ideas include analyzing health trends, building a predictive movie recommendation system, optimizing traffic patterns, forecasting cryptocurrency prices, and more.

In this comprehensive guide, we will explore 10 unique capstone project ideas for data science students. We’ll overview potential data sources, analysis methods, and practical applications for each idea.

Whether you want to work with social media datasets, geospatial data, or anything in between, you’re sure to find an interesting capstone topic.

Project Idea #1: Analyzing Health Trends

When it comes to data science capstone projects, analyzing health trends is an intriguing idea that can have a significant impact on public health. By leveraging data from various sources, data scientists can uncover valuable insights that can help improve healthcare outcomes and inform policy decisions.

Data Sources

There are several data sources that can be used to analyze health trends. One of the most common sources is electronic health records (EHRs), which contain a wealth of information about patient demographics, medical history, and treatment outcomes.

Other sources include health surveys, wearable devices, social media, and even environmental data.

Analysis Approaches

When analyzing health trends, data scientists can employ a variety of analysis approaches. Descriptive analysis can provide a snapshot of current health trends, such as the prevalence of certain diseases or the distribution of risk factors.

Predictive analysis can be used to forecast future health outcomes, such as predicting disease outbreaks or identifying individuals at high risk for certain conditions. Machine learning algorithms can be trained to identify patterns and make accurate predictions based on large datasets.

Applications

The applications of analyzing health trends are vast and far-reaching. By understanding patterns and trends in health data, policymakers can make informed decisions about resource allocation and public health initiatives.

Healthcare providers can use these insights to develop personalized treatment plans and interventions. Researchers can uncover new insights into disease progression and identify potential targets for intervention.

Ultimately, analyzing health trends has the potential to improve overall population health and reduce healthcare costs.

Project Idea #2: Movie Recommendation System

When developing a movie recommendation system, there are several data sources that can be used to gather information about movies and user preferences. One popular data source is the MovieLens dataset, which contains a large collection of movie ratings provided by users.

Another source is IMDb, a trusted website that provides comprehensive information about movies, including user ratings and reviews. Additionally, streaming platforms like Netflix and Amazon Prime also provide access to user ratings and viewing history, which can be valuable for building an accurate recommendation system.

There are several analysis approaches that can be employed to build a movie recommendation system. One common approach is collaborative filtering, which uses user ratings and preferences to identify patterns and make recommendations based on similar users’ preferences.

Another approach is content-based filtering, which analyzes the characteristics of movies (such as genre, director, and actors) to recommend similar movies to users. Hybrid approaches that combine both collaborative and content-based filtering techniques are also popular, as they can provide more accurate and diverse recommendations.
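The collaborative filtering idea described above can be sketched in a few lines. This is a minimal illustration, not a production recommender: the `ratings` dictionary is invented toy data (not taken from MovieLens), and the function names `cosine_similarity` and `recommend` are hypothetical. It scores movies a user has not seen by the similarity-weighted average of other users' ratings.

```python
from math import sqrt

# Hypothetical toy ratings: user -> {movie: rating on a 1-5 scale}
ratings = {
    "ann": {"Alien": 5, "Heat": 4, "Up": 1},
    "bob": {"Alien": 4, "Heat": 5, "Up": 2, "Jaws": 5, "Coco": 2},
    "cat": {"Alien": 1, "Heat": 2, "Up": 5, "Jaws": 2, "Coco": 5},
}


def cosine_similarity(a, b):
    """Cosine similarity computed over the movies both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[m] * b[m] for m in shared)
    norm_a = sqrt(sum(a[m] ** 2 for m in shared))
    norm_b = sqrt(sum(b[m] ** 2 for m in shared))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, top_n=1):
    """Rank movies the user has not rated by similarity-weighted average rating."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        for movie, rating in other_ratings.items():
            if movie not in ratings[user]:
                scores[movie] = scores.get(movie, 0.0) + sim * rating
                weights[movie] = weights.get(movie, 0.0) + sim
    ranked = sorted(
        ((scores[m] / weights[m], m) for m in scores if weights[m] > 0),
        reverse=True,
    )
    return [movie for _, movie in ranked[:top_n]]


print(recommend("ann", ratings))  # ['Jaws']
```

Ann's ratings resemble Bob's more than Cat's, so Bob's favorite unseen movie ranks first. Cosine similarity on raw ratings is a simplification; mean-centering each user's ratings first is a common refinement.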

A movie recommendation system has numerous applications in the entertainment industry. One application is to enhance the user experience on streaming platforms by providing personalized movie recommendations based on individual preferences.

This can help users discover new movies they might enjoy and improve overall satisfaction with the platform. Additionally, movie recommendation systems can be used by movie production companies to analyze user preferences and trends, aiding in the decision-making process for creating new movies.

Finally, movie recommendation systems can also be utilized by movie critics and reviewers to identify movies that are likely to be well-received by audiences.

For more information on movie recommendation systems, you can visit https://www.kaggle.com/rounakbanik/movie-recommender-systems or https://www.researchgate.net/publication/221364567_A_new_movie_recommendation_system_for_large-scale_data.

Project Idea #3: Optimizing Traffic Patterns

When it comes to optimizing traffic patterns, there are several data sources that can be utilized. One of the most prominent sources is real-time traffic data collected from various sources such as GPS devices, traffic cameras, and mobile applications.

This data provides valuable insights into the current traffic conditions, including congestion, accidents, and road closures. Additionally, historical traffic data can also be used to identify recurring patterns and trends in traffic flow.

Other data sources that can be used include weather data, which can help in understanding how weather conditions impact traffic patterns, and social media data, which can provide information about events or incidents that may affect traffic.

Optimizing traffic patterns requires the use of advanced data analysis techniques. One approach is to use machine learning algorithms to predict traffic patterns based on historical and real-time data.

These algorithms can analyze various factors such as time of day, day of the week, weather conditions, and events to predict traffic congestion and suggest alternative routes.

Another approach is to use network analysis to identify bottlenecks and areas of congestion in the road network. By analyzing the flow of traffic and identifying areas where traffic slows down or comes to a halt, transportation authorities can make informed decisions on how to optimize traffic flow.
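One simple way to illustrate the network-analysis idea is to count how often each intersection lies on a shortest route between two other intersections. The sketch below assumes a tiny invented road graph (`roads`) and treats every road as equal length; the count is a crude stand-in for betweenness centrality, not a full traffic model.

```python
from collections import deque
from itertools import combinations

# Hypothetical road network: intersection -> directly connected intersections
roads = {
    "A": ["B", "C"],
    "B": ["A", "C"],
    "C": ["A", "B", "D"],
    "D": ["C", "E", "F"],
    "E": ["D", "F"],
    "F": ["D", "E"],
}


def shortest_path(graph, start, goal):
    """Breadth-first search; returns one shortest path as a list of nodes."""
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            break
        for neighbor in graph[node]:
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    if goal not in previous:
        return []
    path = []
    node = goal
    while node is not None:
        path.append(node)
        node = previous[node]
    return path[::-1]


def through_traffic(graph):
    """Count how many shortest routes pass through each node (endpoints excluded)."""
    counts = {node: 0 for node in graph}
    for start, goal in combinations(sorted(graph), 2):
        for node in shortest_path(graph, start, goal)[1:-1]:
            counts[node] += 1
    return counts


counts = through_traffic(roads)
print(counts)  # C and D carry all cross-town routes
```

In this toy graph the two intersections joining the clusters (C and D) collect every cross-town route, which is the kind of bottleneck signal a real analysis would look for using measured travel times instead of hop counts.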

The optimization of traffic patterns has numerous applications and benefits. One of the main benefits is the reduction of traffic congestion, which can lead to significant time and fuel savings for commuters.

By optimizing traffic patterns, transportation authorities can also improve road safety by reducing the likelihood of accidents caused by congestion.

Additionally, optimizing traffic patterns can have positive environmental impacts by reducing greenhouse gas emissions. By minimizing the time spent idling in traffic, vehicles can operate more efficiently and emit fewer pollutants.

Furthermore, optimizing traffic patterns can have economic benefits by improving the flow of goods and services. Efficient traffic patterns can reduce delivery times and increase productivity for businesses.

Project Idea #4: Forecasting Cryptocurrency Prices

With the growing popularity of cryptocurrencies like Bitcoin and Ethereum, forecasting their prices has become an exciting and challenging task for data scientists. This project idea involves using historical data to predict future price movements and trends in the cryptocurrency market.

When working on this project, data scientists can gather cryptocurrency price data from various sources such as cryptocurrency exchanges, financial websites, or APIs. Websites like CoinMarketCap (https://coinmarketcap.com/) provide comprehensive data on various cryptocurrencies, including historical price data.

Additionally, platforms like CryptoCompare (https://www.cryptocompare.com/) offer real-time and historical data for different cryptocurrencies.

To forecast cryptocurrency prices, data scientists can employ various analysis approaches. Some common techniques include:

- Time Series Analysis: This approach involves analyzing historical price data to identify patterns, trends, and seasonality in cryptocurrency prices. Techniques like moving averages, autoregressive integrated moving average (ARIMA), or exponential smoothing can be used to make predictions.

- Machine Learning: Machine learning algorithms, such as random forests, support vector machines, or neural networks, can be trained on historical cryptocurrency data to predict future price movements. These algorithms can consider multiple variables, such as trading volume, market sentiment, or external factors, to make accurate predictions.

- Sentiment Analysis: This approach involves analyzing social media sentiment and news articles related to cryptocurrencies to gauge market sentiment. By considering the collective sentiment, data scientists can predict how positive or negative sentiment can impact cryptocurrency prices.
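Two of the time series techniques named in the list above, moving averages and exponential smoothing, are small enough to sketch directly. The price series here is invented for illustration, and the `window` and `alpha` values are arbitrary assumptions that would normally be tuned on held-out data.

```python
def moving_average_forecast(prices, window=3):
    """Forecast the next value as the mean of the last `window` prices."""
    if len(prices) < window:
        raise ValueError("need at least `window` observations")
    recent = prices[-window:]
    return sum(recent) / window


def exponential_smoothing_forecast(prices, alpha=0.5):
    """Simple exponential smoothing; returns the one-step-ahead forecast."""
    level = prices[0]
    for price in prices[1:]:
        # Blend the newest observation with the running level.
        level = alpha * price + (1 - alpha) * level
    return level


# Hypothetical daily closing prices
prices = [100.0, 102.0, 101.0, 105.0, 107.0]

print(moving_average_forecast(prices))         # mean of the last three prices
print(exponential_smoothing_forecast(prices))  # 105.0
```

Both methods only smooth past values, so they lag behind sharp moves; they are best treated as baselines against which ARIMA or machine learning models are compared.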

Forecasting cryptocurrency prices can have several practical applications:

- Investment Decision Making: Accurate price forecasts can help investors make informed decisions when buying or selling cryptocurrencies. By considering the predicted price movements, investors can optimize their investment strategies and potentially maximize their returns.

- Trading Strategies: Traders can use price forecasts to develop trading strategies, such as trend following or mean reversion. Predicted price movements can inform these strategies, although forecasts in such a volatile market are uncertain and do not guarantee profitable trades.

- Risk Management: Cryptocurrency price forecasts can help individuals and organizations manage their risk exposure. By understanding potential price fluctuations, risk management strategies can be implemented to mitigate losses.

Project Idea #5: Predicting Flight Delays

One interesting and practical data science capstone project idea is to create a model that can predict flight delays. Flight delays can cause a lot of inconvenience for passengers and can have a significant impact on travel plans.

By developing a predictive model, airlines and travelers can be better prepared for potential delays and take appropriate actions.

To create a flight delay prediction model, you would need to gather relevant data from various sources. Some potential data sources include:

- Flight data from airlines or aviation organizations

- Weather data from meteorological agencies

- Historical flight delay data from airports

By combining these different data sources, you can build a comprehensive dataset that captures the factors contributing to flight delays.

Once you have collected the necessary data, you can employ different analysis approaches to predict flight delays. Some common approaches include:

- Machine learning algorithms such as decision trees, random forests, or neural networks

- Time series analysis to identify patterns and trends in flight delay data

- Feature engineering to extract relevant features from the dataset

By applying these analysis techniques, you can develop a model that can accurately predict flight delays based on the available data.

The applications of a flight delay prediction model are numerous. Airlines can use the model to optimize their operations, improve scheduling, and minimize disruptions caused by delays. Travelers can benefit from the model by being alerted in advance about potential delays and making necessary adjustments to their travel plans.

Additionally, airports can use the model to improve resource allocation and manage passenger flow during periods of high delay probability. Overall, a flight delay prediction model can improve both operational efficiency and customer satisfaction in the aviation industry.

Project Idea #6: Fighting Fake News

With the rise of social media and the easy access to information, the spread of fake news has become a significant concern. Data science can play a crucial role in combating this issue by developing innovative solutions.

Here are some aspects to consider when working on a project that aims to fight fake news.

When it comes to fighting fake news, having reliable data sources is essential. There are several trustworthy platforms that provide access to credible news articles and fact-checking databases. Websites like Snopes and FactCheck.org are good starting points for obtaining accurate information.

Additionally, social media platforms such as Twitter and Facebook can be valuable sources for analyzing the spread of misinformation.

One approach to analyzing fake news is by utilizing natural language processing (NLP) techniques. NLP can help identify patterns and linguistic cues that indicate the presence of misleading information.

Sentiment analysis can also be employed to determine the emotional tone of news articles or social media posts, which can be an indicator of potential bias or misinformation.

Another approach is network analysis, which focuses on understanding how information spreads through social networks. By analyzing the connections between users and the content they share, it becomes possible to identify patterns of misinformation dissemination.

Network analysis can also help in identifying influential sources and detecting coordinated efforts to spread fake news.

The applications of a project aiming to fight fake news are numerous. One possible application is the development of a browser extension or a mobile application that provides users with real-time fact-checking information.

This tool could flag potentially misleading articles or social media posts and provide users with accurate information to help them make informed decisions.

Another application could be the creation of an algorithm that automatically identifies fake news articles and separates them from reliable sources. This algorithm could be integrated into news aggregation platforms to help users distinguish between credible and non-credible information.

Project Idea #7: Analyzing Social Media Sentiment

Social media platforms have become a treasure trove of valuable data for businesses and researchers alike. When analyzing social media sentiment, there are several data sources that can be tapped into. The most popular ones include:

- Twitter: With its vast user base and real-time nature, Twitter is often the go-to platform for sentiment analysis. Researchers can gather tweets containing specific keywords or hashtags to analyze the sentiment of a particular topic.

- Facebook: Facebook offers rich data for sentiment analysis, including posts, comments, and reactions. Analyzing the sentiment of Facebook posts can provide valuable insights into user opinions and preferences.

- Instagram: Instagram’s visual nature makes it an interesting platform for sentiment analysis. By analyzing the comments and captions on Instagram posts, researchers can gain insights into the sentiment associated with different images or topics.

- Reddit: Reddit is a popular platform for discussions on various topics. By analyzing the sentiment of comments and posts on specific subreddits, researchers can gain insights into the sentiment of different communities.

These are just a few examples of the data sources that can be used for analyzing social media sentiment. Depending on the research goals, other platforms such as LinkedIn, YouTube, and TikTok can also be explored.

When it comes to analyzing social media sentiment, there are various approaches that can be employed. Some commonly used analysis techniques include:

- Lexicon-based analysis: This approach involves using predefined sentiment lexicons to assign sentiment scores to words or phrases in social media posts. By aggregating these scores, researchers can determine the overall sentiment of a post or a collection of posts.

- Machine learning: Machine learning algorithms can be trained to classify social media posts into positive, negative, or neutral sentiment categories. These algorithms learn from labeled data and can make predictions on new, unlabeled data.

- Deep learning: Deep learning techniques, such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), can be used to capture the complex patterns and dependencies in social media data. These models can learn to extract sentiment information from textual or visual content.

It is important to note that the choice of analysis approach depends on the specific research objectives, available resources, and the nature of the social media data being analyzed.
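As an illustration of the lexicon-based approach listed above, the sketch below sums word scores from a tiny hand-made lexicon. The `LEXICON` contents and the function names are hypothetical; a real project would use an established sentiment lexicon instead.

```python
import re

# Hypothetical mini-lexicon: word -> sentiment score
LEXICON = {
    "love": 2, "great": 1, "good": 1,
    "bad": -1, "terrible": -2, "hate": -2,
}


def sentiment_score(text):
    """Sum the lexicon scores of every word in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return sum(LEXICON.get(token, 0) for token in tokens)


def sentiment_label(text):
    """Map the aggregate score to positive, negative, or neutral."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment_label("I love this phone, great camera!"))  # positive
print(sentiment_label("Terrible battery, I hate it"))       # negative
print(sentiment_label("It arrived on Tuesday"))             # neutral
```

This simple scoring ignores negation and sarcasm ("not good" still scores as positive), which is one reason machine learning and deep learning approaches are often preferred for noisy social media text.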

Analyzing social media sentiment has a wide range of applications across different industries. Here are a few examples:

- Brand reputation management: By analyzing social media sentiment, businesses can monitor and manage their brand reputation. They can identify potential issues, respond to customer feedback, and take proactive measures to maintain a positive image.

- Market research: Social media sentiment analysis can provide valuable insights into consumer opinions and preferences. Businesses can use this information to understand market trends, identify customer needs, and develop targeted marketing strategies.

- Customer feedback analysis: Social media sentiment analysis can help businesses understand customer satisfaction levels and identify areas for improvement. By analyzing sentiment in customer feedback, companies can make data-driven decisions to enhance their products or services.

- Public opinion analysis: Researchers can analyze social media sentiment to study public opinion on various topics, such as political events, social issues, or product launches. This information can be used to understand public sentiment, predict trends, and inform decision-making.

These are just a few examples of how analyzing social media sentiment can be applied in real-world scenarios. The insights gained from sentiment analysis can help businesses and researchers make informed decisions, improve customer experience, and drive innovation.

Project Idea #8: Improving Online Ad Targeting

Improving online ad targeting involves analyzing various data sources to gain insights into users’ preferences and behaviors. These data sources may include:

- Website analytics: Gathering data from websites to understand user engagement, page views, and click-through rates.

- Demographic data: Utilizing information such as age, gender, location, and income to create targeted ad campaigns.

- Social media data: Extracting data from platforms like Facebook, Twitter, and Instagram to understand users’ interests and online behavior.

- Search engine data: Analyzing search queries and user behavior on search engines to identify intent and preferences.

By combining and analyzing these diverse data sources, data scientists can gain a comprehensive understanding of users and their ad preferences.

To improve online ad targeting, data scientists can employ various analysis approaches:

- Segmentation analysis: Dividing users into distinct groups based on shared characteristics and preferences.

- Collaborative filtering: Recommending ads based on users with similar preferences and behaviors.

- Predictive modeling: Developing algorithms to predict users’ likelihood of engaging with specific ads.

- Machine learning: Utilizing algorithms that can continuously learn from user interactions to optimize ad targeting.

These analysis approaches help data scientists uncover patterns and insights that can enhance the effectiveness of online ad campaigns.

Improved online ad targeting has numerous applications:

- Increased ad revenue: By delivering more relevant ads to users, advertisers can expect higher click-through rates and conversions.

- Better user experience: Users are more likely to engage with ads that align with their interests, leading to a more positive browsing experience.

- Reduced ad fatigue: By targeting ads more effectively, users are less likely to feel overwhelmed by irrelevant or repetitive advertisements.

- Maximized ad budget: Advertisers can optimize their budget by focusing on the most promising target audiences.

Project Idea #9: Enhancing Customer Segmentation

Enhancing customer segmentation involves gathering relevant data from various sources to gain insights into customer behavior, preferences, and demographics. Some common data sources include:

- Customer transaction data

- Customer surveys and feedback

- Social media data

- Website analytics

- Customer support interactions

By combining data from these sources, businesses can create a comprehensive profile of their customers and identify patterns and trends that will help in improving their segmentation strategies.

There are several analysis approaches that can be used to enhance customer segmentation:

- Clustering: Using clustering algorithms to group customers based on similar characteristics or behaviors.

- Classification: Building predictive models to assign customers to different segments based on their attributes.

- Association Rule Mining: Identifying relationships and patterns in customer data to uncover hidden insights.

- Sentiment Analysis: Analyzing customer feedback and social media data to understand customer sentiment and preferences.

These analysis approaches can be used individually or in combination to enhance customer segmentation and create more targeted marketing strategies.

Enhancing customer segmentation can have numerous applications across industries:

- Personalized marketing campaigns: By understanding customer preferences and behaviors, businesses can tailor their marketing messages to individual customers, increasing the likelihood of engagement and conversion.

- Product recommendations: By segmenting customers based on their purchase history and preferences, businesses can provide personalized product recommendations, leading to higher customer satisfaction and sales.

- Customer retention: By identifying at-risk customers and understanding their needs, businesses can implement targeted retention strategies to reduce churn and improve customer loyalty.

- Market segmentation: By identifying distinct customer segments, businesses can develop tailored product offerings and marketing strategies for each segment, maximizing the effectiveness of their marketing efforts.

Project Idea #10: Building a Chatbot

A chatbot is a computer program that uses artificial intelligence to simulate human conversation. It can interact with users in natural language through text or voice. Building a chatbot can be an exciting and challenging data science capstone project.

It requires a combination of natural language processing, machine learning, and programming skills.

When building a chatbot, data sources play a crucial role in training and improving its performance. There are various data sources that can be used:

- Chat logs: Analyzing existing chat logs can help in understanding common user queries, responses, and patterns. This data can be used to train the chatbot on how to respond to different types of questions and scenarios.

- Knowledge bases: Integrating a knowledge base can provide the chatbot with a wide range of information and facts. This can be useful in answering specific questions or providing detailed explanations on certain topics.

- APIs: Utilizing APIs from different platforms can enhance the chatbot’s capabilities. For example, integrating a weather API can allow the chatbot to provide real-time weather information based on user queries.

There are several analysis approaches that can be used to build an efficient and effective chatbot:

- Natural Language Processing (NLP): NLP techniques enable the chatbot to understand and interpret user queries. This involves tasks such as tokenization, part-of-speech tagging, named entity recognition, and sentiment analysis.

- Intent recognition: Identifying the intent behind user queries is crucial for providing accurate responses. Machine learning algorithms can be trained to classify user intents based on the input text.

- Contextual understanding: Chatbots need to understand the context of the conversation to provide relevant and meaningful responses. Techniques such as sequence-to-sequence models or attention mechanisms can be used to capture contextual information.

Chatbots have a wide range of applications in various industries:

- Customer support: Chatbots can be used to handle customer queries and provide instant support. They can assist with common troubleshooting issues, answer frequently asked questions, and escalate complex queries to human agents when necessary.

- E-commerce: Chatbots can enhance the shopping experience by assisting users in finding products, providing recommendations, and answering product-related queries.

- Healthcare: Chatbots can be deployed in healthcare settings to provide preliminary medical advice, answer general health-related questions, and assist with appointment scheduling.

Building a chatbot as a data science capstone project not only showcases your technical skills but also allows you to explore the exciting field of artificial intelligence and natural language processing.

It can be a great opportunity to create a practical and useful tool that can benefit users in various domains.

Completing an in-depth capstone project is the perfect way for data science students to demonstrate their technical skills and business acumen. This guide outlined 10 unique project ideas spanning industries like healthcare, transportation, finance, and more.

By identifying the ideal data sources, analysis techniques, and practical applications for their chosen project, students can produce an impressive capstone that solves real-world problems and showcases their abilities.


21 Interesting Data Science Capstone Project Ideas [2024]

Data science, encompassing the analysis and interpretation of data, stands as a cornerstone of modern innovation.

Capstone projects in data science education play a pivotal role, offering students hands-on experience to apply theoretical concepts in practical settings.

These projects serve as a culmination of their learning journey, providing invaluable opportunities for skill development and problem-solving.

Our blog is dedicated to guiding prospective students through the selection process of data science capstone project ideas. It offers curated ideas and insights to help them embark on a fulfilling educational experience.

Join us as we navigate the dynamic world of data science, empowering students to thrive in this exciting field.

Data Science Capstone Project: A Comprehensive Overview


Data science capstone projects are an essential component of data science education, providing students with the opportunity to apply their knowledge and skills to real-world problems.

Capstone projects challenge students to acquire and analyze data to solve real-world problems. These projects are designed to test students’ skills in data visualization, probability, inference and modeling, data wrangling, data organization, regression, and machine learning.

In addition, capstone projects are conducted with industry, government, and academic partners, and most projects are sponsored by an organization.

The projects are drawn from real-world problems, and students work in teams consisting of two to four students and a faculty advisor.

Ultimately, the goal of the capstone project is to create a usable, public data product that students can show to potential employers as evidence of their skills.

Best Data Science Capstone Project Ideas – According to Skill Level

Data science capstone projects are a great way to showcase your skills and apply what you’ve learned in a real-world context. Here are some project ideas categorized by skill level:

Beginner-Level Data Science Capstone Project Ideas

1. Exploratory Data Analysis (EDA) on a Dataset

Start by analyzing a dataset of your choice and exploring its characteristics, trends, and relationships. Practice using basic statistical techniques and visualization tools to gain insights and present your findings clearly.

2. Predictive Modeling with Linear Regression

Build a simple linear regression model to predict a target variable based on one or more input features. Learn about model evaluation techniques such as mean squared error and R-squared, and interpret the results to make meaningful predictions.
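To make the evaluation metrics concrete, here is a minimal sketch that fits a one-feature least-squares line and reports mean squared error and R-squared. The data points are invented, and the helper names `fit_line` and `evaluate` are hypothetical; in practice a library such as scikit-learn would handle this.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def evaluate(xs, ys, slope, intercept):
    """Return (mean squared error, R-squared) of the fitted line on the data."""
    predictions = [slope * x + intercept for x in xs]
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, predictions))
    mean_y = sum(ys) / len(ys)
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return ss_res / len(ys), 1 - ss_res / ss_tot


# Hypothetical data: roughly y = 2x
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_line(xs, ys)
mse, r_squared = evaluate(xs, ys, slope, intercept)
print(round(slope, 2), round(intercept, 2))  # 1.99 0.05
print(round(mse, 4), round(r_squared, 4))
```

Note that these metrics are computed on the training data for brevity; a capstone project should report them on a held-out test set.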

3. Classification with Decision Trees

Use decision tree algorithms to classify data into distinct categories. Learn how to preprocess data, train a decision tree model, and evaluate its performance using metrics like accuracy, precision, and recall. Apply your model to practical scenarios like predicting customer churn or classifying spam emails.
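The three metrics mentioned above follow directly from counts of true positives, false positives, and false negatives. The sketch below assumes binary labels where `1` marks the positive class (for example, "churned"); the label lists are invented for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical ground truth and model predictions
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]

accuracy, precision, recall = classification_metrics(y_true, y_pred)
print(accuracy)   # 0.625
print(recall)     # 0.5
print(precision)  # 2 of the 3 predicted positives are correct
```

Here the model looks acceptable on accuracy but misses half of the actual positives, which is why precision and recall are reported alongside accuracy, especially on imbalanced data such as churn or spam.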

4. Clustering with K-Means

Explore unsupervised learning by applying the K-Means algorithm to group similar data points together. Practice feature scaling and model evaluation to identify meaningful clusters within your dataset. Apply your clustering model to segment customers or analyze patterns in market data.
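The core loop of K-Means (assign each point to its nearest centroid, then move each centroid to the mean of its points) can be sketched without any libraries. This version makes simplifying assumptions: it initializes centroids from the first `k` points instead of a randomized scheme, uses invented 2-D points, and skips feature scaling because both dimensions already share a scale.

```python
def nearest(point, centroids):
    """Index of the centroid closest to `point` (squared Euclidean distance)."""
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))


def kmeans(points, k, max_iterations=100):
    """Plain K-Means; returns (centroids, cluster label for each point)."""
    centroids = list(points[:k])  # naive initialization: first k points
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for point in points:
            clusters[nearest(point, centroids)].append(point)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        updated = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    labels = [nearest(point, centroids) for point in points]
    return centroids, labels


# Hypothetical customers: (visits per month, average basket size)
points = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 9.5), (1.0, 0.5), (8.5, 9.0)]

centroids, labels = kmeans(points, k=2)
print(labels)  # [0, 0, 1, 1, 0, 1]
```

Even with a poor starting guess (both initial centroids sit in the same group), the loop recovers the two visible groups here. On real data, results depend on initialization and on scaling features to comparable ranges first.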

5. Sentiment Analysis on Text Data

Dive into natural language processing (NLP) by analyzing text data to determine sentiment polarity (positive, negative, or neutral).

Learn about tokenization, text preprocessing, and sentiment analysis techniques using libraries like NLTK or spaCy. Apply your skills to analyze product reviews or social media comments.

6. Time Series Forecasting

Predict future trends or values based on historical time series data. Learn about time series decomposition, trend analysis, and seasonal patterns using methods like ARIMA or exponential smoothing. Apply your forecasting skills to predict stock prices, weather patterns, or sales trends.

7. Image Classification with Convolutional Neural Networks (CNNs)

Explore deep learning concepts by building a basic CNN model to classify images into different categories.

Learn about convolutional layers, pooling, and fully connected layers, and experiment with different architectures to improve model performance. Apply your CNN model to tasks like recognizing handwritten digits or classifying images of animals.

Intermediate-Level Data Science Capstone Project Ideas

8. Customer Segmentation and Market Basket Analysis

Utilize advanced clustering techniques to segment customers based on their purchasing behavior. Conduct market basket analysis to identify frequent item associations and recommend personalized product suggestions.

Implement techniques like the Apriori algorithm or association rules mining to uncover valuable insights for targeted marketing strategies.
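The core Apriori idea is that an itemset can only be frequent if all of its subsets are frequent. The plain-Python sketch below applies that pruning for item pairs only and computes rule confidence; the baskets and function names are hypothetical, and a full implementation would continue to larger itemsets.

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(transactions, min_support):
    """Find item pairs whose support (share of baskets) meets min_support."""
    n = len(transactions)
    item_counts = Counter(item for basket in transactions for item in set(basket))
    # Apriori pruning: only items that are frequent on their own can form frequent pairs.
    frequent_items = {item for item, count in item_counts.items() if count / n >= min_support}
    pair_counts = Counter()
    for basket in transactions:
        kept = sorted(set(basket) & frequent_items)
        pair_counts.update(combinations(kept, 2))
    return {pair: count / n for pair, count in pair_counts.items() if count / n >= min_support}

def rule_confidence(antecedent, consequent, transactions):
    """Confidence of the rule antecedent -> consequent."""
    with_antecedent = [basket for basket in transactions if antecedent in basket]
    if not with_antecedent:
        return 0.0
    return sum(consequent in basket for basket in with_antecedent) / len(with_antecedent)

# Hypothetical shopping baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer"},
    {"milk", "diapers", "beer"},
    {"bread", "milk", "diapers"},
    {"bread", "milk", "beer"},
]
print(frequent_pairs(baskets, min_support=0.5))
print(rule_confidence("bread", "milk", baskets))
```

Support tells you how common a combination is overall, while confidence tells you how often the consequent appears given the antecedent; both are needed to judge whether a rule is worth acting on.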

9. Time Series Anomaly Detection

Apply anomaly detection algorithms to identify unusual patterns or outliers in time series data. Utilize techniques such as moving average, Z-score, or autoencoders to detect anomalies in various domains, including finance, IoT sensors, or network traffic.

Develop robust anomaly detection models to enhance data security and predictive maintenance.
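A rolling Z-score is one of the simplest of these techniques to try first. The sketch below, in plain Python, flags a reading when it sits more than a chosen number of standard deviations from the mean of the preceding window; the sensor readings, window size, and threshold are illustrative assumptions.

```python
from statistics import mean, stdev

def rolling_zscore_anomalies(series, window, threshold=3.0):
    """Flag points that deviate strongly from the preceding `window` values.

    Returns a list of (index, value) pairs.
    """
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # a flat window gives no scale to compare against
        z = (series[i] - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((i, series[i]))
    return anomalies

# Hypothetical temperature sensor readings with one spike.
readings = [20.1, 19.8, 20.3, 20.0, 19.9, 20.2, 35.0, 20.1, 19.7, 20.0]
print(rolling_zscore_anomalies(readings, window=5))
```

One known weakness: once a spike enters the window it inflates the standard deviation, so nearby anomalies can be masked. Robust statistics such as the median and median absolute deviation are a common remedy.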

10. Recommendation System Development

Build a recommendation engine to suggest personalized items or content to users based on their preferences and behavior. Implement collaborative filtering, content-based filtering, or hybrid recommendation approaches to improve user engagement and satisfaction.

Evaluate the performance of your recommendation system using metrics like precision, recall, and mean average precision.
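These ranking metrics are short enough to write by hand. The plain-Python sketch below computes precision at k, recall at k, and average precision for one user; the item IDs are hypothetical, and note that conventions for normalizing average precision vary (here it is divided by the number of relevant items). Mean average precision is then the mean of this value over all users.

```python
def precision_at_k(recommended, relevant, k):
    """Share of the top-k recommendations that are relevant."""
    return sum(item in relevant for item in recommended[:k]) / k

def recall_at_k(recommended, relevant, k):
    """Share of all relevant items that appear in the top k."""
    return sum(item in relevant for item in recommended[:k]) / len(relevant)

def average_precision(recommended, relevant):
    """Average of precision values at each rank where a relevant item appears.

    Assumption: normalized by the total number of relevant items.
    """
    if not relevant:
        return 0.0
    hits = 0
    total = 0.0
    for rank, item in enumerate(recommended, start=1):
        if item in relevant:
            hits += 1
            total += hits / rank
    return total / len(relevant)

# Hypothetical ranked recommendations and the items the user actually liked.
recommended = ["A", "B", "C", "D", "E"]
relevant = {"A", "C", "F"}
print(precision_at_k(recommended, relevant, k=3))
print(recall_at_k(recommended, relevant, k=3))
print(average_precision(recommended, relevant))
```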

11. Natural Language Processing for Topic Modeling

Dive deeper into NLP by exploring topic modeling techniques to extract meaningful topics from text data.

Implement algorithms like Latent Dirichlet Allocation (LDA) or Non-Negative Matrix Factorization (NMF) to identify hidden themes or subjects within large text corpora. Apply topic modeling to analyze customer feedback, news articles, or academic papers.

12. Fraud Detection in Financial Transactions

Develop a fraud detection system using machine learning algorithms to identify suspicious activities in financial transactions. Utilize supervised learning techniques such as logistic regression, random forests, or gradient boosting to classify transactions as fraudulent or legitimate.

Employ feature engineering and model evaluation to improve fraud detection accuracy and minimize false positives.

13. Predictive Maintenance for Industrial Equipment

Implement predictive maintenance techniques to anticipate equipment failures and prevent costly downtime.

Analyze sensor data from machinery using machine learning algorithms like support vector machines or recurrent neural networks to predict when maintenance is required. Optimize maintenance schedules to minimize downtime and maximize operational efficiency.

14. Healthcare Data Analysis and Disease Prediction

Utilize healthcare datasets to analyze patient demographics, medical history, and diagnostic tests to predict the likelihood of disease occurrence or progression.

Apply machine learning algorithms such as logistic regression, decision trees, or support vector machines to develop predictive models for diseases like diabetes, cancer, or heart disease. Evaluate model performance using metrics like sensitivity, specificity, and area under the ROC curve.
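The evaluation metrics named above can be computed directly from predicted risk scores. The sketch below does so in plain Python, using the pairwise-ranking definition of the area under the ROC curve; the patient labels and scores are invented, and the 0.5 decision threshold is an arbitrary assumption.

```python
def sensitivity_specificity(y_true, scores, threshold=0.5):
    """Sensitivity (true positive rate) and specificity (true negative rate)."""
    tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
    fn = sum(1 for t, s in zip(y_true, scores) if t == 1 and s < threshold)
    tn = sum(1 for t, s in zip(y_true, scores) if t == 0 and s < threshold)
    fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)

def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. This O(n^2) version is fine for small samples.
    """
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))

# Hypothetical patients: 1 = has the disease, with model-predicted risk scores.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2]
print(sensitivity_specificity(y_true, scores))
print(roc_auc(y_true, scores))
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes ranking quality across all thresholds, which is why clinical modeling reports usually include both.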

Advanced-Level Data Science Capstone Project Ideas

15. Deep Learning for Image Generation

Explore generative adversarial networks (GANs) or variational autoencoders (VAEs) to generate realistic images from scratch. Experiment with architectures like DCGAN or StyleGAN to create high-resolution images of faces, landscapes, or artwork.

Evaluate image quality and diversity using perceptual metrics and human judgment.

16. Reinforcement Learning for Game Playing

Implement reinforcement learning algorithms like deep Q-learning or policy gradients to train agents to play complex games like Atari or board games.

Experiment with exploration-exploitation strategies and reward-shaping techniques to improve agent performance and achieve superhuman levels of gameplay.
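Deep Q-learning replaces a lookup table with a neural network, but the update rule and the exploration strategy are easiest to see in the tabular case. The plain-Python sketch below shows epsilon-greedy action selection and a single Q-learning update; it is a simplified tabular stand-in rather than a deep RL implementation, and the states, rewards, and hyperparameters are made up.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best-known action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)

def q_learning_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Move Q(state, action) toward reward + gamma * max over a' of Q(next_state, a')."""
    td_target = reward + gamma * max(q_table[next_state])
    q_table[state][action] += alpha * (td_target - q_table[state][action])
    return q_table[state][action]

# Hypothetical two-state, two-action problem; q_table[state][action].
q_table = [[0.0, 0.0], [1.0, 2.0]]
rng = random.Random(0)

action = epsilon_greedy(q_table[0], epsilon=0.1, rng=rng)
new_value = q_learning_update(q_table, state=0, action=1, reward=1.0,
                              next_state=1, alpha=0.5, gamma=0.9)
print(action, new_value)
```

A common exploration schedule starts with a high epsilon and decays it over training, so the agent explores widely at first and exploits what it has learned later.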

17. Anomaly Detection in Streaming Data

Develop real-time anomaly detection systems to identify abnormal behavior in data streams such as network traffic, sensor readings, or financial transactions.

Utilize algorithms suited to streaming data, such as streaming k-means or an Isolation Forest retrained over sliding windows, to detect anomalies and trigger timely alerts for intervention.

18. Multi-Modal Sentiment Analysis

Extend sentiment analysis to incorporate multiple modalities such as text, images, and audio to capture rich emotional expressions.

Utilize deep learning architectures like multimodal transformers or fusion models to analyze sentiment across different modalities and improve understanding of complex human emotions.

19. Graph Neural Networks for Social Network Analysis

Apply graph neural networks (GNNs) to model and analyze complex relational data in social networks. Use techniques like graph convolutional networks (GCNs) or graph attention networks (GATs) to learn node embeddings and tackle tasks such as detecting communities or identifying influential users.

20. Time Series Forecasting with Deep Learning

Explore advanced deep learning architectures like long short-term memory (LSTM) networks or transformer-based models for time series forecasting.

Utilize attention mechanisms and multi-horizon forecasting to capture long-term dependencies and improve prediction accuracy in dynamic and volatile environments.

21. Adversarial Robustness in Machine Learning

Investigate techniques to improve the robustness of machine learning models against adversarial attacks.

Explore methods like adversarial training, defensive distillation, or certified robustness to mitigate vulnerabilities and keep models reliable under adversarial perturbations, particularly in critical applications like autonomous vehicles or healthcare.
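To see what an adversarial perturbation looks like, the sketch below applies the fast gradient sign method (FGSM) to a tiny logistic regression model in plain Python. FGSM is an attack rather than one of the defenses listed above; it is shown here because adversarial training works by generating examples like these and adding them to the training data. The weights, input, and epsilon are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm_perturb(weights, bias, x, y, epsilon):
    """Fast gradient sign method for logistic regression.

    For cross-entropy loss, the gradient with respect to the input is (p - y) * w.
    Each feature moves by epsilon in the direction that increases the loss.
    """
    p = predict_proba(weights, bias, x)
    gradient = [(p - y) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in gradient]
    return [xi + epsilon * s for xi, s in zip(x, signs)]

# Hypothetical model and a correctly classified positive example.
weights, bias = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.5)
print("original confidence:", predict_proba(weights, bias, x))
print("adversarial input:", x_adv)
print("adversarial confidence:", predict_proba(weights, bias, x_adv))
```

A bounded change to each feature is enough to pull the model's confidence down to the decision boundary, which is the kind of fragility robustness research tries to measure and reduce.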

These project ideas cater to various skill levels in data science, ranging from beginners to experts. Choose a project that aligns with your interests and skill level, and don’t hesitate to experiment and learn along the way!

Factors to Consider When Choosing a Data Science Capstone Project

Choosing the right data science capstone project is crucial for your learning experience and effectively showcasing your skills. Here are some factors to consider when selecting a data science capstone project:

Personal Interest

Select a project that aligns with your passions and career goals to stay motivated and engaged throughout the process.

Data Availability

Ensure access to relevant and sufficient data to complete the project and draw meaningful insights effectively.

Complexity Level

Consider your current skill level and choose a project that challenges you without overwhelming you, allowing for growth and learning.

Real-World Impact

Aim for projects with practical applications or societal relevance to showcase your ability to solve tangible problems.

Resource Requirements

Evaluate the availability of resources such as time, computing power, and software tools needed to execute the project successfully.

Mentorship and Support

Seek projects with opportunities for guidance and feedback from mentors or peers to enhance your learning experience.

Novelty and Innovation

Explore projects that push boundaries and explore new techniques or approaches to demonstrate creativity and originality in your work.

Tips for Successfully Completing a Data Science Capstone Project

Successfully completing a data science capstone project requires careful planning, effective execution, and strong communication skills. Here are some tips to help you navigate through the process:

- Plan and Prioritize: Break down the project into manageable tasks and create a timeline to stay organized and focused.

- Understand the Problem: Clearly define the project objectives, requirements, and expected outcomes before you begin the analysis.

- Explore and Experiment: Experiment with different methodologies, algorithms, and techniques to find the most suitable approach.

- Document and Iterate: Document your process, results, and insights thoroughly, and iterate on your analyses based on feedback and new findings.

- Collaborate and Seek Feedback: Collaborate with peers, mentors, and stakeholders, actively seeking feedback to improve your work and decision-making.

- Practice Communication: Communicate your findings effectively through clear visualizations, reports, and presentations tailored to your audience’s understanding.

- Reflect and Learn: Reflect on your challenges, successes, and lessons learned throughout the project to inform your future endeavors and continuous improvement.

By following these tips, you can successfully navigate the data science capstone project and demonstrate your skills and expertise in the field.

Wrapping Up

Data science capstone projects are invaluable in bridging the gap between theory and practice, offering students a chance to apply their knowledge in real-world scenarios.

They are a cornerstone of data science education, fostering critical thinking, problem-solving, and practical skills development.

As you embark on your journey, don’t hesitate to explore diverse and challenging project ideas. Embrace the opportunity to push boundaries, innovate, and make meaningful contributions to the field.

Share your insights, challenges, and successes with others, and invite fellow enthusiasts to exchange ideas and experiences.

1. What is the purpose of a data science capstone project?

A data science capstone project serves as a culmination of a student’s learning experience, allowing them to apply their knowledge and skills to solve real-world problems in the field of data science. It provides hands-on experience and showcases their ability to analyze data, derive insights, and communicate findings effectively.

2. What are some examples of data science capstone projects?

Data science capstone projects can cover a wide range of topics and domains, including predictive modeling, natural language processing, image classification, recommendation systems, and more. Examples may include analyzing customer behavior, predicting stock prices, sentiment analysis on social media data, or detecting anomalies in financial transactions.

3. How long does it typically take to complete a data science capstone project?

The duration of a data science capstone project can vary depending on factors such as project complexity, available resources, and individual pace. Generally, it may take several weeks to several months to complete a project, including tasks such as data collection, preprocessing, analysis, modeling, and presentation of findings.


Capstone Projects

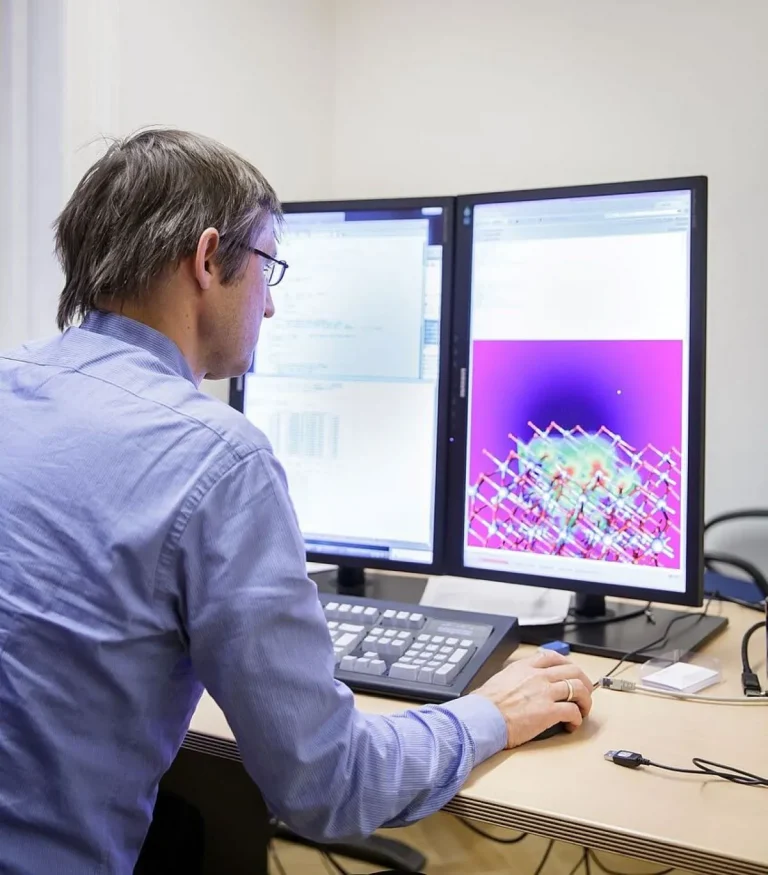

Education is one of the pillars of the Data Science Institute.

Through educational activities, we strive to create a community in Data Science at Columbia. The capstone project is one of the most lauded elements of our MS in Data Science program. As a final step during their study at Columbia, our MS students work on a project sponsored by a DSI industry affiliate or a faculty member over the course of a semester.

Faculty-Sponsored Capstone Projects

A DSI faculty member proposes a research project and advises a team of students working on this project. This is a great way to run a research project with enthusiastic students, eager to try out their newly acquired data science skills in a research setting. This is especially a good opportunity for developing and accelerating interdisciplinary collaboration.

2024-2025 Academic Year: July 15, 2024 via this form

Project Archive

- Spring 2022

- Spring 2020

- Spring 2019

- Spring 2018

- Spring 2016

Data Science: Capstone

To become an expert you need practice and experience.

Show what you’ve learned from the Professional Certificate Program in Data Science.

What You'll Learn

To become an expert data scientist you need practice and experience. By completing this capstone project you will get an opportunity to apply the knowledge and skills in R data analysis that you have gained throughout the series. This final project will test your skills in data visualization, probability, inference and modeling, data wrangling, data organization, regression, and machine learning.

Unlike the rest of our Professional Certificate Program in Data Science, in this course, you will receive much less guidance from the instructors. When you complete the project you will have a data product to show off to potential employers or educational programs, a strong indicator of your expertise in the field of data science.

The course will be delivered via edX and connect learners around the world. By the end of the course, participants will understand the following concepts:

- How to apply the knowledge base and skills learned throughout the series to a real-world problem

- How to independently work on a data analysis project

Your Instructors

Rafael Irizarry

Professor of Biostatistics at Harvard University

Ways to take this course

When you enroll in this course, you will have the option of pursuing a Verified Certificate or Auditing the Course.

A Verified Certificate costs $149 and provides unlimited access to full course materials, activities, tests, and forums. At the end of the course, learners who earn a passing grade can receive a certificate.

Alternatively, learners can Audit the course for free and have access to select course material, activities, tests, and forums. Please note that this track does not offer a certificate for learners who earn a passing grade.


Associated Schools

Harvard T.H. Chan School of Public Health


Capstone Projects

The culminating experience in the Master’s in Applied Data Science program is a Capstone Project where you’ll put your knowledge and skills into practice. You will immerse yourself in a real business problem and will gain valuable, data-driven insights using authentic data. Together with project sponsors, you will develop a data science solution to address organization problems, enhance analytics capabilities, and expand talent pools and employment opportunities. Leveraging the university’s rich research portfolio, you also have the option to join a research-focused team.

Selected Capstone Projects

- COPD Readmission and Cost Reduction Assessment

- An NFL Ticket Pricing Study: Optimizing Revenue Using Variable and Dynamic Pricing Methods

- Using Image Recognition to Identify Yoga Poses

- Using Image Recognition to Measure the Speed of a Pitch

- Real-Time Credit Card Fraud Detection

Interested in Becoming a Capstone Sponsor?

The Master’s in Applied Data Science program accepts projects year-round for placement at the beginning of every quarter, with the Spring quarter being the largest cohort. All projects must be submitted no later than one month prior to the beginning of the preferred starting quarter based on the UChicago academic calendar.

Capstone Sponsor Incentives

Sponsors derive measurable benefits from this unique opportunity to support higher education. Partner organizations propose real-world problems, untested ideas or research queries. Students review them from the perspective of data scientists trained to generate actionable insights that provide long-term value. Through the project, Capstone partners gain access to a symbiotic pool of world-class students, highly accomplished instructors, and cited researchers, resulting in optimized utilization of modern data science-based methods, using your data. Further, for many sponsors, the project becomes a meaningful source of recruitment through the excellent pool of students who work on your project.

Capstone Sponsor Obligations

While there is no monetary cost or contract necessary to sponsor a project, we do consider this a partnership. Teams of four students, guided by an instructor and a subject matter expert, are provided with expectations from the capstone sponsor and with learning objectives, assignments, and evaluation requirements from instructors. In turn, Capstone partners should be prepared to provide the following:

- A detailed problem statement with a description of the data and expected results

- Two or more points of contact

- Access to data relevant to the project by the first week of the applicable quarter

- Engagement through regular meetings (typically bi-weekly) while classes are in session

- If requested, a non-disclosure agreement that may be completed by the student team

Interested in Becoming a Capstone or Industry Research Partner?

Get in touch with us to submit your idea for a collaboration or ask us questions about how the partnership process works.

Wednesdays @ 12:45pm - 3:00pm SEC LL2.223 (Allston Campus)

Capstone Research Project Course, AC297r, Fall 2022

Weiwei Pan

Founded by the Institute for Applied Computational Science (IACS)'s scientific program director, Pavlos Protopapas, the capstone research course is a group-based research experience where students work directly with a partner from industry, government, academia, or an NGO to solve a real-world data science/computation problem. Students will create a solution in the form of a software package, which will require varying levels of research. Upon completion of this challenging project, students will be better equipped to conduct research and enter the professional world. Every class session includes a guest lecture concerning various essential skills for one's career, from public speaking, reading and writing research papers, and working remotely on a team to everything about start-ups, and more.

Snake Classification using Neural-Backed Decision Trees

- Group members: Rui Zheng, Weihua Zhao, Nikolas Racelis-Russell

Abstract: Many advanced algorithms, specifically deep learning models, are considered “black boxes” to human understanding. The lack of transparency needed to interpret such models has become a key obstacle that prevents these algorithms from being put into practical use. Although algorithms such as Grad-CAM have been invented to provide visual explanations from deep networks via gradient-based localization, they do not show step by step how the models reached their final decision. The goal of this project is to make Convolutional Neural Network (CNN) models more interpretable by combining Grad-CAM with Neural-Backed Decision Trees (NBDTs), and to provide visual explanations along with the detailed decision-making process of CNN models. This project demonstrates the potential and limitations of jointly applying Grad-CAM and NBDTs on snake classification.

Autonomous Vehicles

Autoware and LGSVL

- Group members: Andres Bernal, Amir Uqdah, Jie Wang

Abstract: We were able to replicate the ThunderHill race track using the Unity 3D game engine and integrated Unity with the track and robot into the LGSVL simulator. Once the integration was complete we were able to see our robot with the Thunderhill Track as our map in the simulator. We were then able to virtualize the functions of the IMU, odometry and lidar sensors and RGB-D cameras to better visualize what our robot perceives in the simulation. Finally we were able to fully visualize what our robot sees with the virtual sensors using Autoware Rviz which displays the location and point cloud map of the vehicle and its surroundings.

Computer Vision and Lane Segmentation in Autonomous Vehicles

- Group members: Evan Kim, Joseph Fallon, Ka Chan

Abstract: Perception is absolutely vital to navigation. Without perception, any corporeal entity cannot localize itself in its environment and move around obstacles. To create an autonomous vehicle (AV) capable of racing on the Thunderhill Raceway track in Berkeley, California, the team must supplement a stereo camera capable of supporting image perception and computer vision. The team was given two cameras, the Intel RealSense D455 and the ZED camera. In this analysis, the team will compare the capabilities of the two cameras and responsibly select a camera capable of supporting the object detection, and will develop a lane segmentation algorithm that will help extract lanes from the camera feed.

Autonomous Mapping, Localization and Navigation using Computer Vision as well as Tuning of Camera

- Group members: Youngseo Do, Jay Chong, Siddharth Saha

Abstract: One of the main tools used in autonomous mapping and navigation is a 3D Lidar. A 3D Lidar provides various advantages. It is not sensitive to light conditions, it can detect color through reflective channels, it has a complete 360 degree view of the environment, and it does not require any “learning” to detect obstacles. One can use the reflective channel to detect the color of lanes as well as avoid obstacles. The pointcloud information from the Lidar can also easily enable mapping and localization, as the vehicle will know where it is at all points. It is easy to see why so many large scale autonomous vehicle units invest in expensive and bulky Lidars. However, this is not accessible to all due to its price. A camera (even a depth camera) is much more affordable. However, it comes with its own slew of disadvantages. It can see color, but programming for the color is hard due to varying light conditions. Unless you use multiple cameras, you often can’t see all around you. These factors together are a hindrance to autonomous navigation. We thus aim to demonstrate 3 goals: 1) Mapping and Localization with a single camera and other sensory information using the RTABMAP SLAM algorithm, 2) Obstacle avoidance and lane following with a single camera using Facebook AI Detectron2 Deep Learning and ROS, and 3) Tuning of the camera to be less sensitive to varying light conditions using ROS rqt_reconfigure.

Data Visualizations and Interface For Autonomous Robots

- Group members: Jia Shi, Seokmin Hong, Yuxi Luo

Abstract: Autonomous navigation requires a wide range of engineering expertise and a well-developed technological architecture in order to operate. The focus of this project and report is to illustrate the significance of data visualizations and an interactive interface with regard to autonomous navigation in a racing environment. In order to yield the best results in an autonomous navigation race, the users must be able to understand the behavior of the vehicle when training navigation models and during the live race. In order to address these concerns, teams working on autonomous navigation must be able to visualize and interact with the robot. In this project, different algorithms such as A* search and RRT* (Rapidly-exploring random tree) are implemented to create path planning and obstacle avoidance. Visualizations of these respective algorithms and a user interface to send/receive commands will help to enhance model testing, debug unexpected behavior, and improve upon existing autonomous navigation models. Simulations with the best-performing navigation algorithm will also be run to demonstrate the functionality of the interactive interface. Results, implications of the interface, and further improvements will also be discussed.
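For readers unfamiliar with A* search, the following is a minimal plain-Python sketch on a small occupancy grid. It is a generic textbook version and not the project team's implementation; the grid layout, 4-connected movement, unit step cost, and Manhattan-distance heuristic are all assumptions made for illustration.

```python
import heapq

def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (obstacle) cells.

    Returns the path as a list of (row, col) cells, or None if the goal is unreachable.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates the cost with unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    cost_so_far = {start: 0}

    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > cost_so_far[current]:
            continue  # stale heap entry; a cheaper route was already found
        row, col = current
        for nr, nc in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None

# Hypothetical track map: 1 marks an obstacle.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
print(a_star(grid, start=(0, 0), goal=(3, 3)))
```

RRT* takes a different approach, sampling random points in continuous space instead of expanding grid cells, which tends to suit high-dimensional or non-grid environments better.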

GPS Based Autonomous Navigation on the 1/5th Scale

- Group members: Shiyin Liang, Garrett Gibo, Neghena Faizyar

Abstract: Self-driving vehicles are revolutionizing the automotive industry with companies like Tesla, Toyota, Audi and many more pouring a substantial amount of money into research and development. While many of these self-driving systems use a combination of cameras, lidars, and radars for local perception and navigation, the fundamental global localization system that they use relies upon a GPS. The challenge in building a navigation system around a GPS derives from the inherent issues of the sensor itself. In general, GPS units tend to suffer from signal interference that leads to infrequent positional updates and lower precision. On the 1/5th car scale, positional inaccuracies are magnified, so it is crucial that we know the location of our vehicle with speed and precision. In this project, we compare the performance of different GPS units in order to determine what level of performance is best suited to the 1/5th scale. Using the best-suited GPS, we design a navigation system that can mitigate the shortcomings of the GPS and provide a reliable autonomous vehicle.

Autonomous: Odometry and IMU

- Group members: Pranav Deshmane, Sally Poon

Abstract: For a vehicle to successfully navigate itself and even race autonomously, it is essential for the vehicle to be able to localize itself within its environment. This is where Odometry and IMU data can greatly support the robot’s navigational ability. Wheel Odometry provides useful measurements to estimate the position of the car through the use of the wheel’s circumference and rotations per second. The IMU, which stands for Inertial Measurement Unit, is a 9-axis sensor that can sense linear acceleration, angular velocity, and magnetic fields. Together, these data sources can provide us crucial information in deriving a Position Estimate (how far our robot has traveled) and a Compass Heading (orientation of the robot/where it’s headed). While most navigation stacks rely on GPS or Computer Vision to achieve successful navigation, this leaves the robot vulnerable to unfavorable scenarios. For example, GPS is prone to lag and may be infeasible in unfamiliar terrain. Computer Vision approaches often depend heavily on training data and cannot always provide continuous and accurate orientation. Odometry and IMU readings are thus invaluable sources of sensing information that can easily complement and enhance navigational stacks in place to build more robust and accurate autonomous navigation models.
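The position estimate described here can be illustrated with basic dead reckoning: distance comes from wheel circumference times rotations, and direction comes from the heading. The plain-Python sketch below is a simplified illustration and not the team's code; the wheel size, the step format, and the heading convention (degrees counterclockwise from the x-axis) are assumptions, and it ignores wheel slip and sensor drift.

```python
import math

def wheel_distance(wheel_diameter_m, rotations):
    """Distance traveled: wheel circumference times number of rotations."""
    return math.pi * wheel_diameter_m * rotations

def dead_reckon(start_xy, steps, wheel_diameter_m):
    """Integrate (rotations, heading) steps into an (x, y) position estimate.

    Assumption: heading is in degrees, measured counterclockwise from the x-axis,
    and is constant within each step.
    """
    x, y = start_xy
    for rotations, heading_deg in steps:
        distance = wheel_distance(wheel_diameter_m, rotations)
        x += distance * math.cos(math.radians(heading_deg))
        y += distance * math.sin(math.radians(heading_deg))
    return x, y

# Hypothetical run: a wheel with a 1 m circumference, driving east and then north.
diameter = 1.0 / math.pi
steps = [(2, 0.0), (3, 90.0)]  # (wheel rotations, heading in degrees)
print(dead_reckon((0.0, 0.0), steps, diameter))
```

Because each step adds a small error, dead-reckoning estimates drift over time, which is why such readings are usually fused with GPS or vision rather than used alone.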

Malware and Graph Learning

Malware Detection

- Group members: Yu-Chieh Chen, Ruoyu Liu, Yikai Hao

Abstract: As technology has grown rapidly in recent years, more and more people cannot live without cell phones. It is important for cell phone companies and operating system providers to protect users’ data. Detecting malware based on its code can therefore prevent malware from being published and stop it at the source. This report aims to find a model that can detect malware accurately and at a small computational cost. It uses different matrices and graphs to capture the relationships between applications and detects malware based on similarity. The best model achieves a test accuracy of around 99%.

Potential Improvement of MAMADROID System

- Group members: Zihan Qin, Jian Jiao

Abstract: Nowadays, the smartphone is an indispensable part of people's daily lives, and Android is the most popular operating system running on smartphones. Due to this popularity, malware detection on Android has become one of the most significant tasks for the research community. In this project, we focus on one detection system called MAMADROID. Unlike previous work, which relied heavily on the permissions requested by apps, MAMADROID relies on the sequences of abstracted API calls performed by apps. We are interested in finding ways to improve this model. To achieve this, we have been trying to find new features to fit into the model. We built three basic models, took the one with the highest accuracy, and built two more advanced models on top of it.

Exploring the Language of Malware

- Group members: Neel Shah, Mandy Ma

Abstract: The Android app store and its open-source features make it extremely vulnerable to malicious software, known as malware. The current state of the art encompasses the use of advanced code analysis and corresponding machine learning models. However, in our initial research we found that the applications in the Android app store, along with their corresponding API calls, behave a lot like a language: they have their own comparable syntax, structure, and grammar. This inspired us to use techniques from Natural Language Processing (NLP) together with the idea of creating graphical relationships between applications and APIs. We also show that these graphical embeddings preserve classification performance well enough to correctly identify and differentiate malware and benign applications.

CoCoDroid: Detecting Malware By Building Common Graph Using Control Flow Graph

- Group members: Edwin Huang, Sabrina Ho

Abstract: In today's world, malware has grown enormously. In 2020, there were more than 129 million Android users around the world. With Android applications dominating these devices, we hope to produce a detection tool that is accessible to the general public. We present a structure that analyzes apps in the form of control flow graphs. With that, we build a common graph to capture how close the apps are to each other and classify whether they are malicious or not. We compare our work with other methods and show that the control flow graph is a good choice of representation for Android applications (APKs) and can outperform other models. We built features using Metapath2Vec and Doc2Vec, and trained Random Forest, 1-Nearest Neighbors, and 3-Nearest Neighbors models.

Attacking the HinDroid Malware Detector

- Group members: Ruben Gonzalez, Amar Joea

Abstract: Over the past decade, malware has established itself as a constant issue for the Android operating system. In 2018, Symantec reported that they blocked more than 10 thousand malicious Android apps per day, while nearly three quarters of Android devices remained on older versions of Android. With billions of active Android devices, millions of users are only a swipe away from becoming victims. Naturally, automated machine learning-based detection systems have become commonplace solutions, as they can drastically speed up the labeling process. However, it has been shown that many of these models are vulnerable to adversarial attacks, notably attacks that add redundant code to malware to confuse detectors. First, we introduce a new model that extends the HinDroid detection system by employing node embeddings using metapath2vec. We believe that the introduction of node embeddings will improve the performance of the model beyond the capabilities of HinDroid. Second, we intend to break these two models using a method similar to that proposed in the Android HIV paper. That is, we train an adversarial model that perturbs malware such that a detector mislabels it as a benign app. We then measure the performance of each model after recursively feeding adversarial examples back into them. We believe that by doing so, our model will be able to outperform the HinDroid implementation in its ability to label malware even after adversarial examples have been added.

Text Mining and NLP

Autophrase application web.

- Group members: Tiange Wan, Yicen Ma, Anant Gandhi

Abstract: We propose the creation of a full-stack website as an extension of the AutoPhrase algorithm and text analysis to help non-technical users understand their text efficiently. We also provide users with a notebook that applies text analysis to one specific dataset.

Analyzing Movies Using Phrase Mining

- Group members: Daniel Lee, Yuxuan Fan, Huilai Miao

Abstract: Movies are a rich source of human culture from which we can derive insight. Previous work addresses either a textual analysis of movie plots or the use of phrase mining for natural language processing, but not both. Here, we propose a novel analysis of movies by extracting key phrases from movie plot summaries using AutoPhrase, a phrase mining framework. Using these phrases, we analyze movies through 1) an exploratory data analysis that examines the progression of human culture over time, 2) the development and interpretation of a classification model that predicts movie genre, and 3) the development and interpretation of a clustering model that clusters movies. We see that this application of phrase mining to movie plots provides a unique and valuable insight into human culture while remaining accessible to a general audience, e.g., history and anthropology non-experts.

AutoPhrase for Financial Documents Interpretation

- Group members: Joey Hou, Shaoqing Yi, Zachary Ling

Abstract: The stock market is one of the most popular markets for investors to put their money in. Millions of investors participate in the stock market directly or indirectly, for example through mutual funds or defined-benefit plans. Stock price performance is closely related to the latest news, such as 8-K reports and annual or quarterly reports. These reports reflect the operating performance of companies, which is an important fundamental for the stock price. However, a large volume of news reaches the market each day, so we want to build a model that extracts features from the news and uses them to predict the price trend. In this project, we apply the AutoPhrase model from Professor Jingbo Shang to extract high-quality phrases from news documents and to predict stock price trends. We aim to explore whether certain words or phrases correlate with higher or lower stock prices after the release of an 8-K report.

Text Classification with Named-Entity Recognition and AutoPhrase

- Group members: Siyu Deng, Rachel Ung, Yang Li

Abstract: Text Classification (TC) and Named-Entity Recognition (NER) are two fundamental tasks for many Natural Language Processing (NLP) applications, which involve understanding, extracting information, and categorizing the text. In order to achieve these goals, we utilized AutoPhrase and a pre-trained NER language model to extract quality phrases. Using these as part of our features, we are able to achieve very high performance on a five-class and a twenty-class text classification dataset. Our project follows a similar setting to previous work, with train, validation, and test datasets, and compares the results across different methods.

AutoLibrary - A Personal Digital Library to Find Related Works via Text Analyzer

- Group members: Bingqi Zhou, Jiayi Fan, Yichun Ren

Abstract: When encountering scientific papers, it is challenging for readers themselves to find other related works. First of all, it is hard to identify keywords that summarize the papers to search for similar papers. This dilemma is most common if readers are not familiar with the domains of papers that they are reading. Meanwhile, traditional recommendation models based on user profile and collection data are not applicable for recommending similar works. Some existing digital libraries’ recommender systems utilize phrase mining methods such as taxonomy construction and topic modeling, but such methods also fail to catch the specific topics of the paper. AutoLibrary is designed to address these difficulties, where users can input a scientific paper and get the most related papers. AutoLibrary solves the dilemma via a text analyzer method called AutoPhrase. AutoPhrase is a domain-independent phrase mining method developed by Jingbo Shang et al. (2018) that can automatically extract quality phrases from the input paper. After users upload the paper and select the fields of study of the paper, AutoLibrary utilizes AutoPhrase and our pre-trained domain datasets to return high-quality domain-specific keywords that could represent the paper. While AutoLibrary uses the top three keywords to search on Semantic Scholar for similar works at first, users could also customize the selection of the high-quality phrases or enter their own keywords to explore other related works. Based on the experiments and result analysis, AutoLibrary outperforms other similar text analyzer applications efficiently and effectively across different scientific fields. AutoLibrary is beneficial as it eases the pain point of manually extracting accurate, specific keywords from papers and provides a personalized user experience for finding related papers of various domains and subdomains.

Restaurant Recommender System

- Group members: Shenghan Liu, Catherine Hou, Vincent Le

Abstract: Over time, we rely more and more heavily on online platforms such as Netflix, Amazon, and Spotify, which embed recommendation systems in their applications. They learn users’ preferences by collecting their ratings, recording their clicks, and combing through their reviews, and then recommend more items. In building a recommender system, review texts can be as important as the numerical statistics because they contain key phrases that characterize how users felt about what they reviewed. For this project, we propose to build a recommender system with a primary focus on text review analysis through TF-IDF (term frequency-inverse document frequency) and AutoPhrase, and to add targeted segmented analysis on phrases to attach sentiments to aspects of a restaurant in order to rank the recommendations. The ultimate goal is to design a website that deploys our recommender system and shows its functionality.
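
As a minimal illustration of the TF-IDF weighting named above, the sketch below scores the terms of a few made-up reviews. It uses one common variant (length-normalized term frequency and an unsmoothed logarithmic inverse document frequency), which is an assumption rather than the project's exact formula; the `tf_idf` function and the sample reviews are hypothetical. AutoPhrase is not shown here.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Made-up restaurant reviews for illustration.
reviews = [
    "great tacos great salsa",
    "slow service great pasta",
    "slow service cold tacos",
]
scores = tf_idf(reviews)
top_term_first_review = max(scores[0], key=scores[0].get)
```

In this toy corpus, a word that appears in only one review receives a higher weight than a word shared across reviews, which is why TF-IDF can surface the phrases that distinguish one restaurant's reviews from another's.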

Recommender Systems

Forumrec - a question recommender for the super user community.

- Group members: Yo Jeremijenko-Conley, Jack Lin, Jasraj Johl

Abstract: The Super User forum exists on the internet as a medium for users to exchange information. In particular, the information shared here primarily relates to questions about operating systems. The system we developed, ForumRec, aims to increase usability for the forum’s participants by recommending questions that a particular user may be better suited to answer. The model we built uses a combination of content-based and collaborative filtering techniques from the LightFM package to identify how well a novel question would fit the desired user. Compared with baseline models of how Super User already recommends questions, the model attains better performance on more recent data, scoring 0.0014, 0.0033, and 0.5160 on precision at 100, recall at 100, and AUC, respectively, which is markedly better than the baselines.
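
For readers unfamiliar with the ranking metrics quoted above, here is a minimal Python sketch of precision at k and recall at k for a single user. The function name, question IDs, and answered set are hypothetical, and a full evaluation would typically average these values over many users.

```python
def precision_recall_at_k(recommended, relevant, k):
    """Compute precision@k and recall@k for one user's ranked recommendations."""
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    precision = hits / k
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical question IDs: a ranked list for one user and the questions they answered.
ranked_questions = ["q7", "q2", "q9", "q4", "q1"]
answered = {"q2", "q1", "q8"}

p_at_3, r_at_3 = precision_recall_at_k(ranked_questions, answered, k=3)
```

Note that precision at k is bounded by the number of relevant items divided by k, so when users answer only a handful of questions, precision at 100 will be small in absolute terms even for a good recommender.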

OnSight: Outdoor Rock Climbing Recommendations

- Group members: Brent Min, Brian Cheng, Eric Liu

Abstract: Recommendations for outdoor rock climbing have historically been limited to word of mouth, guide books, and the most popular climbs. We aim to change that with our project OnSight, offering personalized recommendations for outdoor rock climbers.

Bridging the Gap: Solving Music Disputes with Recommendation Systems

- Group members: Nayoung Park, Sarat Sreepathy, Duncan Carlmark

Abstract: Many have probably found themselves in an uncomfortable conversation in which a parent is questioning why the song playing over a bedroom speaker is so loud, repetitive, or profane. If someone has never had such a conversation, at the very least they have probably made a conscious decision to refrain from playing a certain genre or artist when their parents are around. Knowing what music to play in these situations does not have to be an elaborate, stressful process. In fact, finding appropriate songs can be made quite simple with the help of recommendation systems. Our solution to this issue consists of two recommendation systems that function in similar ways. The first takes music that parents enjoy and recommends it to their children. The second takes music that children enjoy and recommends it to their parents. Each of these recommendation systems creates its own Spotify playlist that tries to “bridge the gap” between the music tastes of parents and their children. Through user testing and user interviews, we found that our recommenders had mixed success in creating playlists that could be listened to by children and their parents. The success of our recommendations seemed to be largely correlated with the degree of inherent similarity between the music tastes of children and their parents. So while our solution is not perfect, in situations where overlap between parents and children exists, our recommender can successfully “bridge the gap”.

Asnapp - Workout Video Recommender

- Group members: Peter Peng, Najeem Kanishka, Amanda Shu

Abstract: For those who work out at home, finding a good workout routine is difficult. Many of the workout options you may find online are non-personalized and do not take into account your time and equipment constraints or your workout preferences. Asnapp is a web application that provides personalized recommendations of workout videos by Fitness Blender, a company that provides free online workout videos. Our website displays several lists of recommendations (similar to Netflix’s user interface), such as “top upper body workouts for you”. Users can log in to our website, choose between several models to generate their recommendations, browse through personalized recommendation lists, and choose a workout to do, saving them the time and effort needed to build a good workout routine.

- Group members: Zachary Nguyen, Alex Pham, Anthony Fong

Abstract: Existing options for recipe recommendations are less than satisfactory. We sought to solve this problem by creating our own recommendation system hosted on a website. We used Food.com recipe data to create a classifier that identifies the cuisines of recipes, a popularity-based recommender, and a content-based filtering recommender using cosine similarity. In the future, we would like to improve upon this recommender by exploring alternative ways to model ingredients, tracking implicit and explicit user data, and creating a hybrid recommender using collaborative techniques.
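
The content-based filtering step with cosine similarity can be sketched as follows. This is a minimal illustration under stated assumptions: recipes are encoded as binary ingredient vectors, and the recipe names, ingredients, and the `recommend` helper are made up rather than drawn from the Food.com data or the project's code.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as {feature: value} dicts."""
    dot = sum(value * b.get(feature, 0.0) for feature, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(target, recipes, top_n=2):
    """Rank the other recipes by ingredient similarity to `target`."""
    scores = {
        name: cosine_similarity(recipes[target], vec)
        for name, vec in recipes.items()
        if name != target
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Made-up recipes encoded as binary ingredient vectors.
recipes = {
    "margherita pizza": {"tomato": 1, "basil": 1, "mozzarella": 1, "flour": 1},
    "caprese salad": {"tomato": 1, "basil": 1, "mozzarella": 1},
    "pancakes": {"flour": 1, "egg": 1, "milk": 1},
    "fruit salad": {"apple": 1, "banana": 1},
}
similar = recommend("margherita pizza", recipes)
```

Because cosine similarity depends only on the angle between vectors, a recipe with many ingredients is not favored simply for being long, which makes it a reasonable default for comparing ingredient lists of different sizes.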

Makeup Recommender

- Group members: Justin Lee, Shayal Singh, Alexandria Kim