
Descriptive Research | Definition, Types, Methods & Examples

Published on May 15, 2019 by Shona McCombes. Revised on June 22, 2023.

Descriptive research aims to accurately and systematically describe a population, situation or phenomenon. It can answer what, where, when and how questions, but not why questions.

A descriptive research design can use a wide variety of research methods to investigate one or more variables. Unlike in experimental research, the researcher does not control or manipulate any of the variables, but only observes and measures them.


When to use a descriptive research design

Descriptive research is an appropriate choice when the research aim is to identify characteristics, frequencies, trends, and categories.

It is useful when not much is known yet about the topic or problem. Before you can research why something happens, you need to understand how, when and where it happens.

Descriptive research question examples

- How has the Amsterdam housing market changed over the past 20 years?

- Do customers of company X prefer product X or product Y?

- What are the main genetic, behavioural and morphological differences between European wildcats and domestic cats?

- What are the most popular online news sources among under-18s?

- How prevalent is disease A in population B?

Descriptive research methods

Descriptive research is usually defined as a type of quantitative research, though qualitative research can also be used for descriptive purposes. The research design should be carefully developed to ensure that the results are valid and reliable.

Surveys

Survey research allows you to gather large volumes of data that can be analyzed for frequencies, averages and patterns. Common uses of surveys include:

- Describing the demographics of a country or region

- Gauging public opinion on political and social topics

- Evaluating satisfaction with a company’s products or an organization’s services

Observations

Observations allow you to gather data on behaviours and phenomena without having to rely on the honesty and accuracy of respondents. This method is often used by psychological, social and market researchers to understand how people act in real-life situations.

Observation of physical entities and phenomena is also an important part of research in the natural sciences. Before you can develop testable hypotheses, models or theories, it’s necessary to observe and systematically describe the subject under investigation.

Case studies

A case study can be used to describe the characteristics of a specific subject (such as a person, group, event or organization). Instead of gathering a large volume of data to identify patterns across time or location, case studies gather detailed data to identify the characteristics of a narrowly defined subject.

Rather than aiming to describe generalizable facts, case studies often focus on unusual or interesting cases that challenge assumptions, add complexity, or reveal something new about a research problem.


Cite this Scribbr article


McCombes, S. (2023, June 22). Descriptive Research | Definition, Types, Methods & Examples. Scribbr. Retrieved September 15, 2024, from https://www.scribbr.com/methodology/descriptive-research/


Descriptive statistics in research: a critical component of data analysis.

With any data, the objective is to describe the population at large, but what does that mean, and what processes, methods and measures are used to uncover insights from that data? In this short guide, we explore descriptive statistics and how they are applied in research.

What do we mean by descriptive statistics?

With any kind of data, the main objective is to describe a population at large — and using descriptive statistics, researchers can quantify and describe the basic characteristics of a given data set.

For example, researchers can condense large data sets, which may contain thousands of individual data points or observations, into a series of statistics that provide useful information on the population of interest. We call this process “describing data”.

In the process of producing summaries of the sample, we use measures such as the mean, median, variance, frequencies and percentages, along with graphs, charts, histograms, and box-and-whisker plots. For data sets with just one variable, we use univariate descriptive statistics. For two variables, we use bivariate descriptive statistics such as correlation, and for more than two variables, we use multivariate descriptive statistics.

Want to find out the definitions?

Univariate descriptive statistics: this is when you want to describe data with only one characteristic or attribute

Bivariate correlation: this is when you simultaneously analyze (compare) two variables to see if there is a relationship between them

Multivariate descriptive statistics: this is a subdivision of statistics encompassing the simultaneous observation and analysis of more than one outcome variable

Then, after describing and summarizing the data and carrying out simple graphical analyses, we can start to draw meaningful insights from it to help guide specific strategies. It’s also important to note that descriptive statistics can be applied in both quantitative and qualitative research.

Describing data is an essential first step in research, as it enables the subsequent organization, simplification and summarization of information, and every survey question and population can be summarized with descriptive statistics. Let’s take a look at a few examples.

Examples of descriptive statistics

Consider for a moment a number used to summarize how well a striker is performing in football: their shot conversion rate. This number is simply the number of goals scored divided by the number of shots taken (reported to three decimal places). If a striker’s rate is 0.333, that’s one goal for every three shots. If they’re scoring one in four, that’s 0.250.
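The calculation described above is a simple ratio of goals to shots. A minimal sketch, using a hypothetical `conversion_rate` helper:

```python
def conversion_rate(goals: int, shots: int) -> float:
    """Goals scored divided by shots taken, rounded to three decimal places."""
    if shots <= 0:
        raise ValueError("shots must be a positive number")
    return round(goals / shots, 3)


# Format with :.3f so trailing zeros are shown (0.25 is displayed as 0.250).
print(f"{conversion_rate(1, 3):.3f}")  # one goal in three shots -> 0.333
print(f"{conversion_rate(1, 4):.3f}")  # one goal in four shots -> 0.250
```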

A classic example is a student’s grade point average (GPA). This single number describes the general performance of a student across a range of course experiences and classes. It doesn’t tell us anything about the difficulty of the courses the student is taking, or what those courses are, but it does provide a summary that enables a degree of comparison with people or other units of data.
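A GPA is commonly computed as a weighted mean, with each course’s grade points weighted by its credit hours. The sketch below assumes a 4-point letter scale and credit weighting; both are illustrative assumptions, since grading scales vary by institution, and `gpa` and `GRADE_POINTS` are hypothetical names.

```python
# Hypothetical 4-point scale; real institutions use different scales
# (plus/minus grades, different maxima), so treat this mapping as an assumption.
GRADE_POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}


def gpa(courses: list[tuple[str, int]]) -> float:
    """Credit-weighted mean of grade points, rounded to two decimals."""
    total_credits = sum(credits for _, credits in courses)
    if total_credits == 0:
        raise ValueError("no credits recorded")
    weighted_points = sum(GRADE_POINTS[grade] * credits for grade, credits in courses)
    return round(weighted_points / total_credits, 2)


# Hypothetical transcript: (letter grade, credit hours) per course.
transcript = [("A", 4), ("B", 3), ("A", 3), ("C", 2)]
print(gpa(transcript))  # (16 + 9 + 12 + 4) / 12 credits -> 3.42
```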

Ultimately, descriptive statistics make it much easier for people to understand complex, data-intensive quantitative or qualitative insights across large data sets.


Types of descriptive statistics

To quantitatively summarize the characteristics of raw, ungrouped data, we use the following types of descriptive statistics:

- Measures of Central Tendency

- Measures of Dispersion

- Measures of Frequency Distribution

Following the application of any of these approaches, the raw data then becomes ‘grouped’ data that’s logically organized and easy to understand. To visually represent the data, we then use graphs, charts, tables etc.

Let’s look at the different types of measurement and the statistical methods that belong to each:

Measures of Central Tendency describe data by identifying a single representative, or central, value: for example, the mean, median or mode.

Measures of Dispersion describe how spread out a data distribution is around the central value (the mean, median or mode). They are needed because, while central tendency gives the average or central value, it doesn’t describe how the data are distributed within the set.

Measures of Frequency Distribution are used to describe the occurrence of data within the data set (count).

The methods of each measure are summarized in the table below:

| Measures of Central Tendency | Measures of Dispersion | Measures of Frequency Distribution |
|---|---|---|
| Mean | Range | Count |
| Median | Standard deviation | |
| Mode | Quartile deviation | |
| | Variance | |
| | Absolute deviation | |

Mean: The most popular and well-known measure of central tendency. The mean is equal to the sum of all the values in the data set divided by the number of values in the data set.

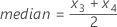

Median: The median is the middle score for a set of data that has been arranged in order of magnitude. If you have an even number of data points, e.g. 10, take the two middle scores and average them.

Mode: The mode is the most frequently occurring observation in the data set.
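The three measures of central tendency can be computed with Python’s standard `statistics` module. This sketch uses a small, made-up data set chosen so the results are easy to check by hand:

```python
import statistics

# Small made-up data set (already sorted, for easy checking).
scores = [2, 3, 3, 5, 7, 10]

mean = statistics.mean(scores)      # sum / count = 30 / 6 = 5
median = statistics.median(scores)  # even count: average of 3 and 5 = 4.0
mode = statistics.mode(scores)      # most frequent value = 3

# The mean matches the textbook formula sum(x) / n.
assert mean == sum(scores) / len(scores)

print(f"mean={mean}, median={median}, mode={mode}")
```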

Range: The difference between the highest and lowest value.

Standard deviation: Standard deviation measures the dispersion of a data set relative to its mean and is calculated as the square root of the variance.

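Quartile deviation: Quartile deviation, also called the semi-interquartile range, is half the difference between the third and first quartiles, (Q3 − Q1) / 2. It measures the spread of the middle 50% of the data.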

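Variance: Variance measures variability around the mean. It is the average of the squared differences between each data point and the mean (for a sample, the sum of squared differences is usually divided by n − 1 instead of n).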

Absolute deviation: The mean absolute deviation of a data set is the average distance between each data point and the mean.
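The dispersion measures above can be sketched on the same kind of small, made-up data set. Two assumptions are worth flagging: the code uses the population variance and standard deviation (`pvariance`, `pstdev`, dividing by n), whereas `statistics.variance` and `statistics.stdev` give the sample versions (dividing by n − 1); and quartiles are computed with the `"inclusive"` method of `statistics.quantiles`, since quartile conventions differ between tools.

```python
import statistics

scores = [2, 3, 3, 5, 7, 10]
mean = statistics.mean(scores)  # 5

data_range = max(scores) - min(scores)  # highest minus lowest: 10 - 2 = 8

# Population variance: mean of squared deviations from the mean (46 / 6).
# Use statistics.variance / statistics.stdev for the sample (n - 1) versions.
variance = statistics.pvariance(scores)
std_dev = statistics.pstdev(scores)  # square root of the variance

# Quartile deviation (semi-interquartile range) = (Q3 - Q1) / 2.
q1, _, q3 = statistics.quantiles(scores, n=4, method="inclusive")
quartile_deviation = (q3 - q1) / 2

# Mean absolute deviation: average distance of each point from the mean.
mean_abs_dev = sum(abs(x - mean) for x in scores) / len(scores)

print(f"range={data_range}, variance={variance:.3f}, sd={std_dev:.3f}")
print(f"quartile deviation={quartile_deviation}, mean abs. deviation={mean_abs_dev:.3f}")
```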

Count: How often each value occurs.
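Counts are straightforward to tabulate with `collections.Counter`. A minimal sketch on hypothetical yes/no/unsure responses, also showing each count as a share of all responses:

```python
from collections import Counter

# Hypothetical answers to a single survey question.
responses = ["yes", "no", "yes", "unsure", "yes", "no"]

counts = Counter(responses)  # value -> number of occurrences

# most_common() lists values from most to least frequent.
for value, count in counts.most_common():
    share = count / len(responses)
    print(f"{value}: {count} ({share:.0%})")
```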

Scope of descriptive statistics in research

Descriptive statistics (or descriptive analysis) is broad in scope: compared with many other quantitative and qualitative methods, it can provide a wide-ranging picture of an event, phenomenon or population.

But that’s not all: it can use any number of variables, and because it describes data as they are, without manipulating any variables, it reflects the world as it exists.

Descriptive analyses also lay the foundation for further methods of study. By summarizing and condensing the data into easily understandable segments, researchers can analyze the data further to uncover new variables or hypotheses.

Much of the value of this practice lies in making data easy to visualize. With data presented in a meaningful way, researchers have a simplified interpretation of the data set in question. That said, while descriptive statistics help to summarize information, they only provide a general view of the variables in question.

It is, therefore, up to the researchers to probe further and use other methods of analysis to discover deeper insights.

Things you can do with descriptive statistics

Define subject characteristics

If a marketing team wanted to build out accurate buyer personas for specific products and industry verticals, they could use descriptive analyses on customer datasets (procured via a survey) to identify consistent traits and behaviors.

They could then ‘describe’ the data to build a clear picture and understanding of who their buyers are, including things like preferences, business challenges, income and so on.

Measure data trends

Let’s say you wanted to assess propensity to buy over several months or years for a specific target market and product. With descriptive statistics, you could quickly summarize the data and extract the precise data points you need to understand the trends in product purchase behavior.

Compare events, populations or phenomena

How do different demographics respond to certain variables? For example, you might want to run a customer study to see how buyers in different job functions respond to new product features or price changes. Are all groups as enthusiastic about the new features and likely to buy? Or do they have reservations? This kind of data will help inform your overall product strategy and potentially how you tier solutions.
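A comparison like this often starts with a simple per-group summary. The sketch below groups hypothetical responses (job function, likelihood to buy on a 1–5 scale) and reports the size and mean of each group; the groups, scale and values are all invented for illustration. Whether differences between group means are meaningful is a question for inferential tests such as a t-test or ANOVA.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (job function, likelihood to buy on a 1-5 scale).
responses = [
    ("Finance", 4), ("Finance", 5),
    ("IT", 2), ("IT", 3), ("IT", 2),
    ("Marketing", 5), ("Marketing", 4),
]

# Group the scores by job function.
by_group: dict[str, list[int]] = defaultdict(list)
for group, score in responses:
    by_group[group].append(score)

# Describe each group by its size and mean score.
summary = {group: (len(scores), mean(scores)) for group, scores in by_group.items()}
for group, (n, avg) in sorted(summary.items()):
    print(f"{group}: n={n}, mean={avg:.2f}")
```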

Validate existing conditions

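When you have a belief or hypothesis, you can use descriptive techniques to examine the underlying patterns and check whether the data are consistent with it. Descriptive techniques alone cannot prove a hypothesis, but they show whether it is worth testing further.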

Form new hypotheses

With the data presented and summarized in a way that everyone can understand (and infer connections from), you can delve deeper into specific data points to uncover more meaningful insights, or run more comprehensive research.

Guiding your survey design to improve the data collected

To use your surveys as an effective tool for customer engagement and understanding, every survey goal and item should answer one simple, yet highly important question:

What am I really asking?

It might seem trivial, but by having this question frame survey research, it becomes significantly easier for researchers to develop the right questions that uncover useful, meaningful and actionable insights.

Planning becomes easier, questions become clearer, and your perspective becomes both wider and more nuanced.

Hypothesize – what’s the problem that you’re trying to solve? Far too often, organizations collect data without understanding what they’re asking, and why they’re asking it.

Finally, focus on the end result. What kind of data do you need to answer your question? Also, are you asking a quantitative or qualitative question? Here are a few things to consider:

- Clear questions are clear for everyone. It takes time to make a concept clear.

- Ask about measurable, evident and noticeable activities or behaviors.

- Make rating scales easy. Avoid long lists, confusing scales or “don’t know” or “not applicable” options.

- Ensure your survey makes sense and flows well. Reduce the cognitive load on respondents by making it easy for them to complete the survey.

- Read your questions aloud to see how they sound.

- Pretest by asking a few uninvolved individuals to answer.

Furthermore…

As well as understanding what you’re really asking, there are several other considerations for your data:

Keep it random

How you select your sample is what makes your research replicable and meaningful. Having a truly random sample helps prevent bias, increasing the quality of the evidence you find.
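Drawing a simple random sample is easy to sketch with Python’s `random` module, assuming you have a sampling frame listing every member of the population (the ID range here is hypothetical). Fixing the seed makes the draw reproducible, which supports the replicability described above.

```python
import random

# Hypothetical sampling frame: an ID for every member of the population.
population_ids = list(range(1, 10_001))

# A seeded generator makes the draw reproducible by other researchers.
rng = random.Random(42)

# random.sample draws without replacement, so every ID has the same
# chance of selection and no one is picked twice.
sample = rng.sample(population_ids, k=500)

print(len(sample), sample[:5])
```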

Plan for and minimize sampling error

Before starting your research project, have a clear plan for minimizing sampling error. Use larger sample sizes to reduce sampling error, and apply random sampling to minimize the potential for bias.

Don’t oversample

Remember, a simple random sample of 500 respondents from a large population will estimate a population proportion to within about ±4.4 percentage points, 95% of the time. Beyond that point, larger samples bring diminishing gains in precision.
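The figure of 500 respondents can be checked with the standard margin-of-error formula for a proportion, z·√(p(1 − p)/n). This sketch assumes simple random sampling from a large population, the conservative choice p = 0.5, and z = 1.96 for 95% confidence; it ignores the finite population correction and non-response, and `margin_of_error` is a hypothetical helper name.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion from a simple random sample.

    p = 0.5 gives the widest (most conservative) margin; z = 1.96 corresponds
    to 95% confidence under the normal approximation.
    """
    return z * math.sqrt(p * (1 - p) / n)


for n in (100, 500, 1000, 2000):
    print(f"n={n}: ±{margin_of_error(n):.1%}")
```

Under these assumptions, n = 500 gives roughly ±4.4 percentage points, while quadrupling the sample to 2,000 only narrows that to about ±2.2 points, because the margin shrinks with the square root of n.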

Think about the mode

Match your survey methods to the sample you select. For example, how do your current customers prefer communicating? Do they have any shared characteristics or preferences? A mixed-method approach is critical if you want to drive action across different customer segments.

Use a survey tool that supports you with the whole process

Survey research software can support researchers throughout this process.

These considerations have been included in Qualtrics’ survey software, which summarizes and creates visualizations of data, making it easy to access insights, measure trends, and examine results without complexity or jumping between systems.

Uncover your next breakthrough idea with Stats iQ™

What makes Qualtrics so different from other survey providers is that it is built in consultation with trained research professionals and includes high-tech statistical software like Qualtrics Stats iQ.

With just a click, the software can run specific analyses or automate statistical testing and data visualization. Testing parameters are automatically chosen based on how your data is structured (e.g. categorical data will run a statistical test like Chi-squared), and the results are translated into plain language that anyone can understand and put into action.

Get more meaningful insights from your data

Stats iQ includes a variety of statistical analyses, including: describe, relate, regression, cluster, factor, TURF, and pivot tables — all in one place!

Confidently analyze complex data

Built-in artificial intelligence and advanced algorithms automatically choose and apply the right statistical analyses and return the insights in plain English so everyone can take action.

Integrate existing statistical workflows

For more experienced stats users, built-in R code templates allow you to run even more sophisticated analyses by adding R code snippets directly in your survey analysis.

Advanced statistical analysis methods available in Stats iQ

Regression analysis – Measures the degree of influence of independent variables on a dependent variable (the relationship between two or multiple variables).

Analysis of Variance (ANOVA) test – Commonly used alongside regression to find out what effect independent variables have on the dependent variable. It compares the means of multiple groups simultaneously to test whether they differ.
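The core one-way ANOVA calculation compares variation between group means with variation within groups. A minimal sketch of the F statistic on hypothetical data (`one_way_anova_f` is a hypothetical name); turning F into a p-value needs the F distribution, which libraries such as SciPy provide through `scipy.stats.f_oneway`.

```python
from statistics import mean


def one_way_anova_f(*groups: list[float]) -> float:
    """One-way ANOVA F statistic: between-group mean square / within-group mean square."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual values around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within


# Hypothetical satisfaction scores for three customer segments.
segment_a = [4, 5, 6]
segment_b = [6, 7, 8]
segment_c = [8, 9, 10]
print(one_way_anova_f(segment_a, segment_b, segment_c))
```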

Conjoint analysis – Asks people to make trade-offs when making decisions, then analyses the results to give the most popular outcome. Helps you understand why people make the complex choices they do.

T-Test – Helps you compare whether two data groups have different mean values and allows the user to interpret whether differences are meaningful or merely coincidental.
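A two-sample t statistic can be sketched as below. This uses Welch’s version, which does not assume equal variances in the two groups; the text does not say which variant Stats iQ runs, so treat this as an illustrative choice. The data and `welch_t` are hypothetical; a p-value would come from the t distribution, for example via `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and its approximate degrees of freedom."""
    # Squared standard error of each group's mean (sample variance / n).
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


# Hypothetical ratings from two independent groups.
group_1 = [5, 6, 7, 8, 9]
group_2 = [3, 4, 5, 6, 7]
t, df = welch_t(group_1, group_2)
print(f"t = {t:.2f}, df = {df:.1f}")
```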

Crosstab analysis – Used in quantitative market research to analyze categorical data (that is, variables whose values fall into distinct, mutually exclusive categories), it allows you to compare the relationship between two variables in contingency tables.
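A crosstab counts the observations for each combination of two categorical variables, and Pearson’s chi-square statistic measures how far those counts are from what independence would predict. A minimal sketch on hypothetical responses; with expected counts this small the chi-square approximation is unreliable, so the numbers are purely illustrative.

```python
from collections import Counter

# Hypothetical responses: (region, preferred product).
responses = [
    ("North", "X"), ("North", "X"), ("North", "Y"), ("North", "X"),
    ("South", "Y"), ("South", "Y"), ("South", "X"), ("South", "Y"),
]

# Contingency table: (row category, column category) -> observed count.
table = Counter(responses)
rows = sorted({r for r, _ in responses})
cols = sorted({c for _, c in responses})
n = len(responses)

row_totals = {r: sum(table[(r, c)] for c in cols) for r in rows}
col_totals = {c: sum(table[(r, c)] for r in rows) for c in cols}

print("      " + "  ".join(cols))
for r in rows:
    print(f"{r:<6}" + "  ".join(str(table[(r, c)]) for c in cols))

# Pearson's chi-square: sum over cells of (observed - expected)^2 / expected,
# where expected = row total * column total / grand total.
chi_square = 0.0
for r in rows:
    for c in cols:
        expected = row_totals[r] * col_totals[c] / n
        chi_square += (table[(r, c)] - expected) ** 2 / expected

dof = (len(rows) - 1) * (len(cols) - 1)
print(f"chi-square = {chi_square:.2f} with {dof} degree(s) of freedom")
```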

Go from insights to action

Now that you have a better understanding of descriptive statistics in research and how to apply statistical analysis methods correctly, it’s time to use a tool that can take your research and subsequent analysis to the next level.

Try out a Qualtrics survey software demo so you can see how it can take you through descriptive research and further research projects from start to finish.


Statistics - explanations and formulas

Descriptive statistics.


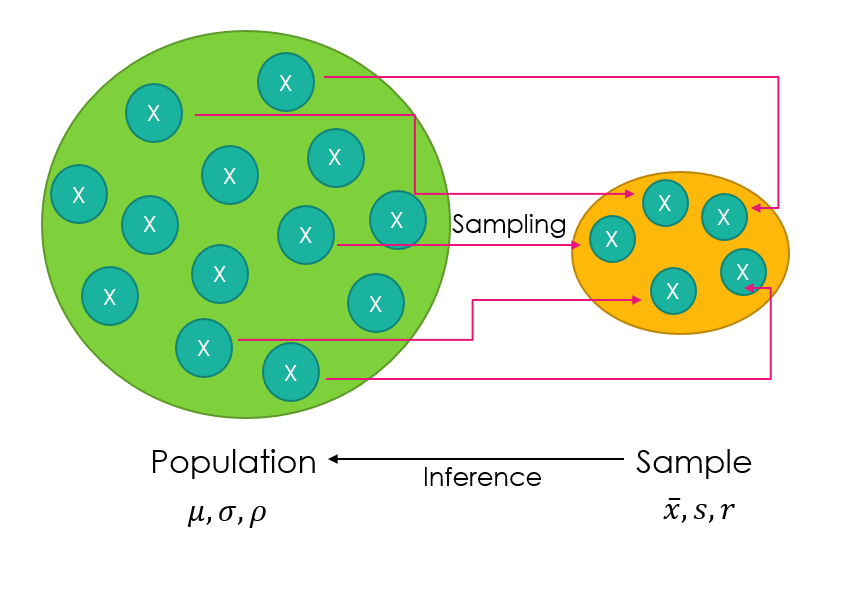

Descriptive statistics are techniques used for describing, graphing, organizing and summarizing quantitative data . They describe something, either visually or statistically, about individual variables or the association among two or more variables. For instance, a social researcher may want to know how many people in his/her study are male or female, what the average age of the respondents is, or what the median income is. Researchers often need to know how closely their data represent the population from which it is drawn so that they can assess the data’s representativeness.

Descriptive statistics include the mean, standard deviation, mode, and median.

Descriptive information gives researchers a general picture of their data, as opposed to an explanation for why certain variables may be associated with each other. Descriptive statistics are often contrasted with inferential statistics, which are used to make inferences, or to explain factors, about the population. Data can be summarized at the univariate level with visual pictures, such as graphs, histograms, and pie charts. Statistical techniques used to describe individual variables include frequencies, the mean, median, mode, cumulative percent, percentile, standard deviation, variance, and interquartile range. Data can also be summarized at the bivariate level. Measures of association between two variables include calculations of eta, gamma, lambda, Pearson’s r, Kendall’s tau, Spearman’s rho, and chi-square (χ²), among others. Bivariate relationships can also be illustrated in visual graphs that describe the association between two variables.

(from Oxford Reference Online )
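Several of the univariate techniques listed above (frequencies, cumulative percent, and the interquartile range) can be sketched with Python’s standard library. The responses are hypothetical Likert-scale answers, and the quartiles use the `"inclusive"` method of `statistics.quantiles`, one of several conventions in use.

```python
import statistics
from collections import Counter

# Hypothetical Likert responses (1 = strongly disagree ... 5 = strongly agree).
responses = [1, 2, 2, 3, 3, 3, 4, 4, 5, 5]

# Frequencies and cumulative percent, in ascending order of value.
counts = Counter(responses)
cumulative = 0
cumulative_percent = {}
for value in sorted(counts):
    cumulative += counts[value]
    cumulative_percent[value] = 100 * cumulative / len(responses)
    print(f"{value}: n={counts[value]}, cumulative {cumulative_percent[value]:.0f}%")

# Interquartile range: Q3 - Q1.
q1, _, q3 = statistics.quantiles(responses, n=4, method="inclusive")
iqr = q3 - q1
print(f"Q1={q1}, Q3={q3}, IQR={iqr}")
```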


- Last Updated: Jul 3, 2024 12:02 PM

- URL: https://libguides.und.edu/statistics


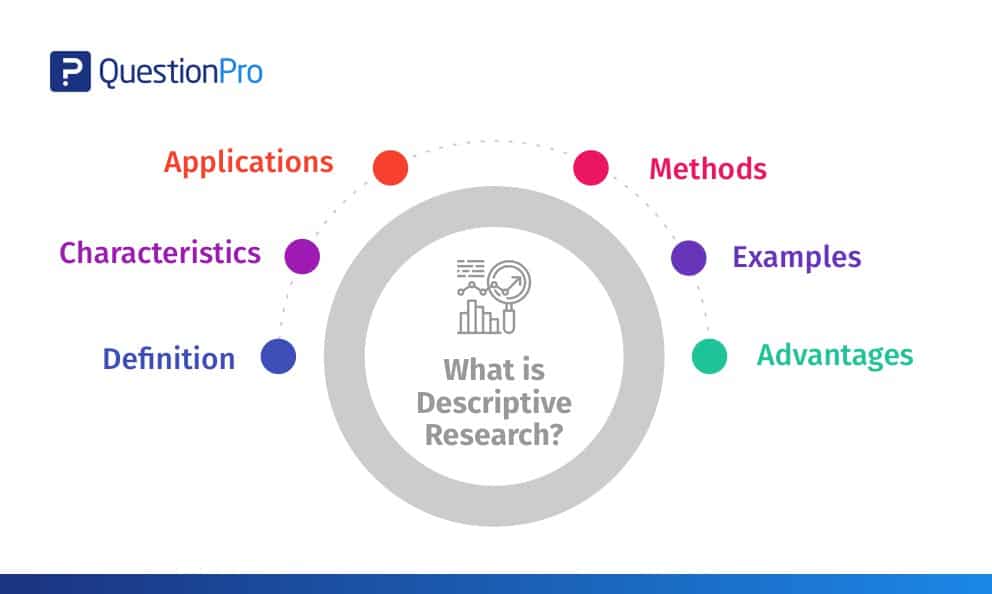

What is Descriptive Research? Definition, Methods, Types and Examples

Descriptive research is a methodological approach that seeks to depict the characteristics of a phenomenon or subject under investigation. In scientific inquiry, it serves as a foundational tool for researchers aiming to observe, record, and analyze the intricate details of a particular topic. This method provides a rich and detailed account that aids in understanding, categorizing, and interpreting the subject matter.

Descriptive research design is widely employed across diverse fields, and its primary objective is to systematically observe and document all variables and conditions influencing the phenomenon.

To illustrate this definition, consider a researcher working on climate change adaptation who wants to understand water management trends in an arid village in a specific study area. She must conduct a demographic survey of the region, gather population data, and then conduct descriptive research on this demographic segment. The study will then uncover details on “what are the water management practices and trends in village X.” Note, however, that it will not investigate “why” the patterns exist.


What is descriptive research?

If you’ve been wondering “What is descriptive research,” we’ve got you covered in this post! In a nutshell, descriptive research is a non-experimental research method that helps a researcher describe a population, circumstance, or phenomenon. It can help answer what, where, when and how questions, but not why questions. In other words, it does not involve changing the study variables and does not seek to establish cause-and-effect relationships.

Importance of descriptive research

Now, let’s delve into the importance of descriptive research. This research method acts as the cornerstone for various academic and applied disciplines. Its primary significance lies in its ability to provide a comprehensive overview of a phenomenon, enabling researchers to gain a nuanced understanding of the variables at play. This method aids in forming hypotheses, generating insights, and laying the groundwork for further in-depth investigations. The following points further illustrate its importance:

Provides insights into a population or phenomenon: Descriptive research furnishes a comprehensive overview of the characteristics and behaviors of a specific population or phenomenon, thereby guiding and shaping the research project.

Offers baseline data: The data acquired through this type of research acts as a reference for subsequent investigations, laying the groundwork for further studies.

Allows validation of sampling methods: Descriptive research can help researchers evaluate sampling methods and select the most effective approach for the study.

Helps reduce time and costs: It is cost-effective and time-efficient, making this an economical means of gathering information about a specific population or phenomenon.

Ensures replicability: Descriptive research is easily replicable, ensuring a reliable way to collect and compare information from various sources.

When to use descriptive research design?

Determining when to use descriptive research depends on the nature of the research question. Before diving into the reasons behind an occurrence, understanding the how, when, and where aspects is essential. Descriptive research design is a suitable option when the research objective is to discern characteristics, frequencies, trends, and categories without manipulating variables. It is therefore often employed in the initial stages of a study before progressing to more complex research designs. To put it in another way, descriptive research precedes the hypotheses of explanatory research. It is particularly valuable when there is limited existing knowledge about the subject.

Some examples are as follows, highlighting that these questions would arise before a clear outline of the research plan is established:

- In the last two decades, what changes have occurred in patterns of urban gardening in Mumbai?

- What are the differences in climate change perceptions of farmers in coastal versus inland villages in the Philippines?

Characteristics of descriptive research

Descriptive research is characterized by its focus on observing and documenting the features of a subject. Its specific characteristics are as follows.

- Quantitative nature: Some types of descriptive research use quantitative methods to gather quantifiable information for statistical analysis of the population sample.

- Qualitative nature: Some descriptive studies use qualitative methods to describe or explain the research problem.

- Observational nature: This approach is non-invasive and observational because the study variables remain untouched. Researchers merely observe and report, without introducing interventions that could impact the subject(s).

- Cross-sectional nature: Descriptive studies often examine different sections of the same group at a single point in time, providing a “snapshot” of sorts.

- Springboard for further research: The data collected are further studied and analyzed using different research techniques. This approach helps guide the suitable research methods to be employed.

Types of descriptive research

There are various descriptive research types, each suited to different research objectives. Take a look at the different types below.

- Surveys: This involves collecting data through questionnaires or interviews to gather qualitative and quantitative data.

- Observational studies: This involves observing and collecting data on a particular population or phenomenon without influencing the study variables or manipulating the conditions. These may be further divided into cohort studies, case studies, and cross-sectional studies:

  - Cohort studies: Also known as longitudinal studies, these studies involve the collection of data over an extended period, allowing researchers to track changes and trends.

  - Case studies: These deal with a single individual, group, or event, which might be rare or unusual.

  - Cross-sectional studies: A researcher collects data at a single point in time, in order to obtain a snapshot of a specific moment.

- Focus groups: In this approach, a small group of people are brought together to discuss a topic. The researcher moderates and records the group discussion. This can also be considered a “participatory” observational method.

- Descriptive classification: Relevant to the biological sciences, this type of approach may be used to classify living organisms.

Descriptive research methods

Several descriptive research methods can be employed, and these are more or less similar to the types of approaches mentioned above.

- Surveys: This method involves the collection of data through questionnaires or interviews. Surveys may be done online or offline, and the target subjects might be hyper-local, regional, or global.

- Observational studies: These entail the direct observation of subjects in their natural environment. These include case studies, dealing with a single case or individual, as well as cross-sectional and longitudinal studies, for a glimpse into a population or changes in trends over time, respectively. Participatory observational studies such as focus group discussions may also fall under this method.

Researchers should weigh these methods and types against their research question and context to get the most out of descriptive research.

Examples of descriptive research

Now, let’s consider some descriptive research examples.

- In social sciences, an example could be a study analyzing the demographics of a specific community to understand its socio-economic characteristics.

- In business, a market research survey aiming to describe consumer preferences would be a descriptive study.

- In ecology, a researcher might undertake a survey of all the types of monocots naturally occurring in a region and classify them up to species level.

These examples showcase the versatility of descriptive research across diverse fields.

Advantages of descriptive research

This approach has several advantages that researchers should be aware of:

- Owing to the numerous descriptive research methods and types, primary data can be obtained in diverse ways and used to develop a research hypothesis.

- It is a versatile research method and allows flexibility.

- Detailed and comprehensive information can be obtained because the data collected can be qualitative or quantitative.

- It is carried out in the natural environment, which greatly minimizes certain types of bias and ethical concerns.

- It is an inexpensive and efficient approach, even with large sample sizes.

Disadvantages of descriptive research

On the other hand, this design has some drawbacks as well:

- It is limited in its scope as it does not determine cause-and-effect relationships.

- The approach describes existing conditions rather than explaining them, so it generates little new explanatory insight.

- Study variables are not manipulated or controlled, and this limits the conclusions to be drawn.

- Descriptive research findings may not be generalizable to other populations.

- Finally, it offers a preliminary understanding rather than an in-depth understanding.

To reiterate, the advantages of descriptive research lie in its ability to provide a comprehensive overview, aid hypothesis generation, and serve as a preliminary step in the research process. However, its limitations include a potential lack of depth, inability to establish cause-and-effect relationships, and susceptibility to bias.

Frequently asked questions

When should researchers conduct descriptive research?

Descriptive research is most appropriate when researchers aim to portray and understand the characteristics of a phenomenon without manipulating variables. It is particularly valuable in the early stages of a study.

What is the difference between descriptive and exploratory research?

Descriptive research focuses on providing a detailed depiction of a phenomenon, while exploratory research aims to explore and generate insights into an issue where little is known.

What is the difference between descriptive and experimental research?

Descriptive research observes and documents without manipulating variables, whereas experimental research involves intentional interventions to establish cause-and-effect relationships.

Is descriptive research only for social sciences?

No, various descriptive research types may be applicable to all fields of study, including social science, humanities, physical science, and biological science.

How important is descriptive research?

The importance of descriptive research lies in its ability to provide a glimpse of the current state of a phenomenon, offering valuable insights and establishing a basic understanding. Further, the advantages of descriptive research include its capacity to offer a straightforward depiction of a situation or phenomenon, facilitate the identification of patterns or trends, and serve as a useful starting point for more in-depth investigations. Additionally, descriptive research can contribute to the development of hypotheses and guide the formulation of research questions for subsequent studies.


A Guide on Data Analysis

3 Descriptive Statistics

When you have an area of interest that you want to research, a problem that you want to solve, a relationship that you want to investigate, theoretical and empirical processes will help you.

Estimand is defined as “a quantity of scientific interest that can be calculated in the population and does not change its value depending on the data collection design used to measure it (i.e., it does not vary with sample size and survey design, or the number of non-respondents, or follow-up efforts).” ( Rubin 1996 )

Estimands include:

- Population means

- Population variances

- Correlations

- Factor loadings

- Regression coefficients

3.1 Numerical Measures

There are differences between a population and a sample:

| Measures of | Category | Population | Sample |
|---|---|---|---|
| - | What is it? | Reality | A small fraction of reality (inference) |
| - | Characteristics described by | Parameters | Statistics |
| Central Tendency | Mean | \(\mu = E(Y)\) | \(\hat{\mu} = \overline{y}\) |
| Central Tendency | Median | 50th percentile | \(y_{((n+1)/2)}\) (for odd \(n\)) |
| Dispersion | Variance | \(\sigma^2 = var(Y) = E[(Y-\mu)^2]\) | \(s^2=\frac{1}{n-1} \sum_{i = 1}^{n} (y_i-\overline{y})^2\) |
| Dispersion | Coefficient of Variation | \(\frac{\sigma}{\mu}\) | \(\frac{s}{\overline{y}}\) |
| Dispersion | Interquartile Range | Difference between the 25th and 75th percentiles; robust to outliers | |
| Shape | Skewness (standardized 3rd central moment, unitless) | \(g_1=\frac{\mu_3}{\mu_2^{3/2}}\) | \(\hat{g_1}=\frac{m_3}{m_2\sqrt{m_2}}\) |
| Shape | Central moments | \(\mu=E(Y)\), \(\mu_2 = \sigma^2=E(Y-\mu)^2\), \(\mu_3 = E(Y-\mu)^3\), \(\mu_4 = E(Y-\mu)^4\) | \(m_2=\sum_{i=1}^{n}(y_i-\overline{y})^2/n\), \(m_3=\sum_{i=1}^{n}(y_i-\overline{y})^3/n\), \(m_4=\sum_{i=1}^{n}(y_i-\overline{y})^4/n\) |
| Shape | Kurtosis (peakedness and tail thickness; standardized 4th central moment) | \(g_2^*=\frac{E(Y-\mu)^4}{\sigma^4}\) | \(\hat{g_2}=\frac{m_4}{m_2^2}-3\) (estimates \(g_2 = g_2^*-3\)) |

Order statistics: \(y_{(1)},y_{(2)},...,y_{(n)}\) where \(y_{(1)}\le y_{(2)}\le...\le y_{(n)}\)

Coefficient of variation: the standard deviation divided by the mean. Because it is dimensionless, it can be used to compare variability across variables measured on different scales.

Symmetric: mean = median, skewness = 0

Skewed right: mean > median, skewness > 0

Skewed left: mean < median, skewness < 0

Central moments: \(\mu=E(Y)\) , \(\mu_2 = \sigma^2=E(Y-\mu)^2\) , \(\mu_3 = E(Y-\mu)^3\) , \(\mu_4 = E(Y-\mu)^4\)

For normal distributions, \(\mu_3=0\) , so \(g_1=0\)

\(\hat{g_1}\) is approximately distributed as \(N(0,6/n)\) if the sample is from a normal population (valid when \(n > 150\)).

- For large samples, inference on skewness can be based on normal tables with 95% confidence interval for \(g_1\) as \(\hat{g_1}\pm1.96\sqrt{6/n}\)

- For small samples, use special tables (Snedecor and Cochran 1989, Table A 19(i)) or a Monte Carlo test

| Kurtosis | Tails | Reference |
|---|---|---|
| Kurtosis > 0 (leptokurtic) | Heavier tails | compared to a normal distribution with the same \(\sigma\) (e.g., t-distribution) |
| Kurtosis < 0 (platykurtic) | Lighter tails | compared to a normal distribution with the same \(\sigma\) |

For a normal distribution, \(g_2^*=3\) . Kurtosis is often redefined as: \(g_2=\frac{E(Y-\mu)^4}{\sigma^4}-3\) where the 4th central moment is estimated by \(m_4=\sum_{i=1}^{n}(y_i-\overline{y})^4/n\)

- The asymptotic sampling distribution of \(\hat{g_2}\) is approximately \(N(0,24/n)\) (valid when \(n > 1000\))

- For large samples, inference on kurtosis uses standard normal tables

- For small samples, use the tables by Snedecor and Cochran (1989, Table A 19(ii)) or Geary (1936)
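The sample formulas above translate directly into code. This is a minimal Python sketch, assuming plain lists of numbers; the function names are illustrative, and the example data set is hypothetical and far too small for the \(n > 150\) condition, so the interval only demonstrates the arithmetic.

```python
import math

def central_moment(ys, k):
    """k-th sample central moment: m_k = sum((y_i - ybar)^k) / n."""
    n = len(ys)
    ybar = sum(ys) / n
    return sum((y - ybar) ** k for y in ys) / n

def sample_skewness(ys):
    """g1_hat = m3 / (m2 * sqrt(m2)); close to 0 for symmetric data."""
    m2 = central_moment(ys, 2)
    return central_moment(ys, 3) / (m2 * math.sqrt(m2))

def sample_excess_kurtosis(ys):
    """g2_hat = m4 / m2^2 - 3; close to 0 for data from a normal population."""
    m2 = central_moment(ys, 2)
    return central_moment(ys, 4) / m2 ** 2 - 3

def skewness_ci95(ys):
    """Large-sample 95% interval for g1: g1_hat +/- 1.96 * sqrt(6 / n)."""
    g1 = sample_skewness(ys)
    half_width = 1.96 * math.sqrt(6 / len(ys))
    return g1 - half_width, g1 + half_width

data = [1, 1, 1, 10]  # hypothetical, right-skewed
print(sample_skewness(data), sample_excess_kurtosis(data), skewness_ci95(data))
```

For the symmetric list `[1, 2, 3, 4, 5]` the skewness is 0 and the excess kurtosis is -1.3 (lighter tails than a normal distribution).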

3.2 Graphical Measures

3.2.1 Shape

It’s a good habit to label your graph, so others can easily follow.

Other, more advanced plots

3.2.2 Scatterplot

3.3 Normality Assessment

Since the normal (Gaussian) distribution has many applications and many methods assume it, we typically want our data or variables to be normally distributed. Hence, we assess normality using not only numerical measures but also graphical measures.

3.3.1 Graphical Assessment

In a normal probability (Q-Q) plot, the straight line represents the theoretical line for normally distributed data, and the dots represent the empirical data we are checking. If the dots fall close to the straight line, the data are consistent with a normal distribution. If the data wiggle and deviate systematically from the line, we should be concerned about the normality assumption.

3.3.2 Summary Statistics

Sometimes it’s hard to tell whether your data follow the normal distribution just by looking at the graph. Hence, we often conduct a statistical test to aid our decision. Common tests are:

Methods based on normal probability plot

- Correlation Coefficient with Normal Probability Plots

- Shapiro-Wilk Test

Methods based on empirical cumulative distribution function

- Anderson-Darling Test

- Kolmogorov-Smirnov Test

- Cramer-von Mises Test

- Jarque–Bera Test

3.3.2.1 Methods based on normal probability plot

3.3.2.1.1 Correlation Coefficient with Normal Probability Plots

(Looney and Gulledge Jr 1985) (Samuel S. Shapiro and Francia 1972) The correlation coefficient between the ordered observations \(y_{(i)}\) and the normal scores \(m_i^*\) (the expected values of the standard normal order statistics), as plotted on the normal probability plot:

\[W^*=\frac{\sum_{i=1}^{n}(y_{(i)}-\bar{y})(m_i^*-0)}{\left(\sum_{i=1}^{n}(y_{(i)}-\bar{y})^2\sum_{i=1}^{n}(m_i^*-0)^2\right)^{1/2}}\]

where \(\bar{m^*}=0\)

Pearson product-moment formula for correlation:

\[\hat{\rho}=\frac{\sum_{i=1}^{n}(y_i-\bar{y})(x_i-\bar{x})}{\left(\sum_{i=1}^{n}(y_{i}-\bar{y})^2\sum_{i=1}^{n}(x_i-\bar{x})^2\right)^{1/2}}\]

- When the correlation is 1, the plot is exactly linear, which is consistent with normality.

- The further the correlation falls below 1, the stronger the evidence against normality.

- Inference on \(W^*\) needs to be based on special tables (Looney and Gulledge Jr 1985).
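A minimal sketch of \(W^*\) in Python. Exact values of \(m_i^*\) require tables or numerical integration, so this version assumes Blom's approximation \(m_i^* \approx \Phi^{-1}((i - 3/8)/(n + 1/4))\) for the normal scores; the function names and the example data are hypothetical. The result still has to be judged against the special tables cited above.

```python
import math
from statistics import NormalDist

def normal_scores(n):
    """Approximate m_i*, the expected standard normal order statistics (Blom's formula)."""
    z = NormalDist()
    return [z.inv_cdf((i - 0.375) / (n + 0.25)) for i in range(1, n + 1)]

def probability_plot_correlation(ys):
    """W*: correlation between the ordered data y_(i) and the normal scores m_i*."""
    y_sorted = sorted(ys)
    m = normal_scores(len(y_sorted))
    ybar = sum(y_sorted) / len(y_sorted)
    # The normal scores are symmetric about 0, so their mean is taken as 0 (as in W*).
    num = sum((y - ybar) * mi for y, mi in zip(y_sorted, m))
    den = math.sqrt(sum((y - ybar) ** 2 for y in y_sorted) * sum(mi ** 2 for mi in m))
    return num / den

print(probability_plot_correlation([1, 1, 1, 1, 2, 2, 3, 5, 8, 20]))  # hypothetical skewed data
```

Data that lie exactly on the normal scores give \(W^* = 1\); the skewed example gives a noticeably smaller value.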

3.3.2.1.2 Shapiro-Wilk Test

( Samuel Sanford Shapiro and Wilk 1965 )

\[W=\frac{\left(\sum_{i=1}^{n}a_i y_{(i)}\right)^2}{\sum_{i=1}^{n}(y_{i}-\bar{y})^2}\]

where \(a_1,\dots,a_n\) are weights computed from the expected values and the covariance matrix of the standard normal order statistics.

- Researchers typically use this test to assess normality (for \(n < 2000\)). Under normality, \(W\) is close to 1, just like \(W^*\). The main difference between \(W\) and \(W^*\) is the weights: \(W^*\) uses the normal scores \(m_i^*\) directly, whereas the weights in \(W\) also account for the covariance among the order statistics.

3.3.2.2 Methods based on empirical cumulative distribution function

The formula for the empirical cumulative distribution function (CDF) is:

\(F_n(t)\) = estimated probability that an observation is \(\le t\) = (number of observations \(\le t\))/n

This method requires large sample sizes. However, it can apply to distributions other than the normal (Gaussian) one.

3.3.2.2.1 Anderson-Darling Test

The Anderson-Darling statistic ( T. W. Anderson and Darling 1952 ) :

\[A^2=n\int_{-\infty}^{\infty}(F_n(t)-F(t))^2\frac{dF(t)}{F(t)(1-F(t))}\]

- a weighted average of squared deviations (it gives more weight to small and large values of t, i.e., the tails)

For the normal distribution,

\(A^2 = -\frac{1}{n}\sum_{i=1}^{n}(2i-1)\left[\ln(p_i) + \ln(1-p_{n+1-i})\right] - n\)

where \(p_i=\Phi(\frac{y_{(i)}-\bar{y}}{s})\), the probability that a standard normal variable is less than \(\frac{y_{(i)}-\bar{y}}{s}\)

Reject the normality assumption when \(A^2\) is too large.

Evaluate the null hypothesis that the observations are randomly selected from a normal population based on the critical value provided by ( Marsaglia and Marsaglia 2004 ) and ( Stephens 1974 )
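The computing formula for \(A^2\) can be sketched in Python as follows, assuming the mean and standard deviation are estimated from the sample (with the \(n-1\) divisor for \(s\)). The function name and data are hypothetical; the sketch applies no small-sample adjustment, and it fails with a math domain error if an observation is so extreme that \(p_i\) rounds to exactly 0 or 1. Critical values still come from the references above.

```python
import math
from statistics import NormalDist, mean, stdev

def anderson_darling_normal(ys):
    """A^2 against a normal distribution whose mean and SD are estimated from the data."""
    y = sorted(ys)
    n = len(y)
    ybar, s = mean(y), stdev(y)  # stdev uses the n - 1 divisor
    p = [NormalDist().cdf((yi - ybar) / s) for yi in y]  # p_i = Phi((y_(i) - ybar) / s)
    total = sum(
        # p[i - 1] is p_i and p[n - i] is p_{n+1-i} (lists are 0-based)
        (2 * i - 1) * (math.log(p[i - 1]) + math.log(1 - p[n - i]))
        for i in range(1, n + 1)
    )
    return -total / n - n

print(anderson_darling_normal([1, 1, 1, 1, 2, 2, 3, 5, 8, 20]))  # hypothetical skewed data
```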

This test can be applied to other distributions:

- Exponential

- Extreme-value

- Weibull: log(Weibull) = Gumbel

- Log-normal (two-parameter)

Consult (Stephens 1974) for details on the transformations and critical values.

3.3.2.2.2 Kolmogorov-Smirnov Test

- Based on the largest absolute difference between empirical and expected cumulative distribution

- A related variant of the K-S test is Kuiper’s test
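The ECDF and the K-S distance can be sketched as follows (hypothetical function names). Because the ECDF is a step function, the largest gap against a continuous CDF occurs just before or at one of its jumps, so checking both sides of each jump at \(y_{(i)}\) is enough. The sketch assumes the reference CDF is fully specified; if its parameters are estimated from the same data, the standard K-S critical values no longer apply directly.

```python
from statistics import NormalDist

def ecdf(ys):
    """Return F_n, where F_n(t) = (number of observations <= t) / n."""
    y = sorted(ys)
    n = len(y)

    def F_n(t):
        return sum(1 for yi in y if yi <= t) / n

    return F_n

def ks_statistic(ys, cdf):
    """D = largest absolute gap between the ECDF and a continuous CDF."""
    y = sorted(ys)
    n = len(y)
    d = 0.0
    for i, yi in enumerate(y, start=1):
        f = cdf(yi)
        # The ECDF jumps from (i - 1)/n to i/n at y_(i); check both sides of the jump.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d

print(ks_statistic([-1.2, -0.3, 0.1, 0.4, 2.5], NormalDist().cdf))  # hypothetical data
```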

3.3.2.2.3 Cramer-von Mises Test

- Based on the average squared discrepancy between the empirical distribution and a given theoretical distribution. Each discrepancy is weighted equally (unlike the Anderson-Darling test, which weights the tails more heavily).
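For a fully specified continuous CDF \(F\), the Cramer-von Mises statistic is commonly computed as \(W^2 = \frac{1}{12n} + \sum_{i=1}^{n}\left(\frac{2i-1}{2n} - F(y_{(i)})\right)^2\). A minimal sketch of that computing formula, with a hypothetical function name and hypothetical data:

```python
from statistics import NormalDist

def cramer_von_mises(ys, cdf):
    """W^2 = 1/(12n) + sum_i ((2i - 1)/(2n) - F(y_(i)))^2, all deviations weighted equally."""
    y = sorted(ys)
    n = len(y)
    return 1 / (12 * n) + sum(
        ((2 * i - 1) / (2 * n) - cdf(yi)) ** 2 for i, yi in enumerate(y, start=1)
    )

print(cramer_von_mises([-1.2, -0.3, 0.1, 0.4, 2.5], NormalDist().cdf))
```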

3.3.2.2.4 Jarque–Bera Test

( Bera and Jarque 1981 )

Tests normality based on the sample skewness and kurtosis.

\(JB = \frac{n}{6}(S^2+(K-3)^2/4)\) where \(S\) is the sample skewness and \(K\) is the sample kurtosis

\(S=\frac{\hat{\mu_3}}{\hat{\sigma}^3}=\frac{\sum_{i=1}^{n}(x_i-\bar{x})^3/n}{(\sum_{i=1}^{n}(x_i-\bar{x})^2/n)^\frac{3}{2}}\)

\(K=\frac{\hat{\mu_4}}{\hat{\sigma}^4}=\frac{\sum_{i=1}^{n}(x_i-\bar{x})^4/n}{(\sum_{i=1}^{n}(x_i-\bar{x})^2/n)^2}\)

Recall that \(\hat{\sigma}^2\) is the estimate of the second central moment (variance), and \(\hat{\mu_3}\) and \(\hat{\mu_4}\) are the estimates of the third and fourth central moments.

If the data come from a normal distribution, the JB statistic asymptotically follows a chi-squared distribution with two degrees of freedom.

The null hypothesis is a joint hypothesis of the skewness being zero and the excess kurtosis being zero.
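The JB statistic follows directly from the moment estimates above. In this sketch (hypothetical function name and data), the p-value uses the fact that a chi-squared distribution with two degrees of freedom has survival function \(e^{-x/2}\); since the approximation is asymptotic, it is only a rough guide for small samples.

```python
import math

def jarque_bera(xs):
    """Return (JB, asymptotic p-value) using S = mu3/sigma^3 and K = mu4/sigma^4."""
    n = len(xs)
    xbar = sum(xs) / n
    m2 = sum((x - xbar) ** 2 for x in xs) / n
    m3 = sum((x - xbar) ** 3 for x in xs) / n
    m4 = sum((x - xbar) ** 4 for x in xs) / n
    S = m3 / m2 ** 1.5  # sample skewness
    K = m4 / m2 ** 2    # sample kurtosis (equals 3 for a normal population)
    jb = n / 6 * (S ** 2 + (K - 3) ** 2 / 4)
    p_value = math.exp(-jb / 2)  # P(chi-squared with 2 df > jb)
    return jb, p_value

print(jarque_bera([2.1, 2.4, 1.9, 3.8, 2.2, 2.0, 5.6, 2.3]))  # hypothetical data
```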

3.4 Bivariate Statistics

Correlation between

- Two Continuous variables

- Two Discrete variables

- Categorical and Continuous

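| | Categorical | Continuous |
|---|---|---|
| Categorical | Chi-squared test, Phi coefficient, Cramer’s V, Tschuprow’s T, ordinal association (rank correlation) measures | Point-biserial correlation, logistic regression |
| Continuous | Point-biserial correlation, logistic regression | Pearson correlation, Spearman correlation |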

Questions to keep in mind:

- Is the relationship linear or non-linear?

- If the variable is continuous, is it normal and homoskedastic?

- How big is your dataset?

3.4.1 Two Continuous

3.4.1.1 Pearson Correlation

- Good for linear relationships

3.4.1.2 Spearman Correlation
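To see the difference between the two coefficients, the sketch below computes Pearson's \(r\) directly and Spearman's \(\rho\) as Pearson's \(r\) applied to ranks (tied values receive the average of their positions). The helper names and the data are hypothetical. For a monotonic but non-linear relationship such as \(y = x^3\), Spearman's coefficient is exactly 1 while Pearson's falls below 1.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

x = [1, 2, 3, 4, 5, 6]
y = [v ** 3 for v in x]
print(pearson(x, y), spearman(x, y))
```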

3.4.2 Categorical and Continuous

3.4.2.1 Point-Biserial Correlation

Similar to the Pearson correlation coefficient, the point-biserial correlation coefficient ranges from -1 to 1, where:

- -1 means a perfectly negative correlation between two variables

- 0 means no correlation between two variables

- 1 means a perfectly positive correlation between two variables
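The point-biserial coefficient is Pearson's correlation between a 0/1 variable and a continuous one, and it is often written as \(r_{pb} = \frac{M_1 - M_0}{s_n}\sqrt{pq}\), where \(M_1\) and \(M_0\) are the group means, \(s_n\) is the standard deviation of all values computed with divisor \(n\), and \(p\) and \(q\) are the group proportions. A minimal sketch, assuming a hypothetical function name and 0/1 coding:

```python
import math

def point_biserial(binary, values):
    """r_pb = (M1 - M0) / s_n * sqrt(p * q); `binary` must contain only 0s and 1s."""
    n = len(values)
    g1 = [v for b, v in zip(binary, values) if b == 1]
    g0 = [v for b, v in zip(binary, values) if b == 0]
    m1, m0 = sum(g1) / len(g1), sum(g0) / len(g0)
    overall_mean = sum(values) / n
    s_n = math.sqrt(sum((v - overall_mean) ** 2 for v in values) / n)  # divisor n
    p, q = len(g1) / n, len(g0) / n
    return (m1 - m0) / s_n * math.sqrt(p * q)

print(point_biserial([0, 0, 1, 1], [1, 2, 3, 4]))  # same as Pearson's r on the 0/1 coding
```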

Alternatively

3.4.2.2 Logistic Regression

See 3.4.2.2

3.4.3 Two Discrete

3.4.3.1 Distance Metrics

Some do not consider distance a correlation metric because it is not unit-independent (i.e., if you rescale the variables, the distance changes), but it is still a useful proxy. Distance metrics are more commonly used as similarity measures.

- Euclidean Distance

- Manhattan Distance

- Chessboard Distance

- Minkowski Distance

- Canberra Distance

- Hamming Distance

- Cosine Distance

- Sum of Absolute Distance

- Sum of Squared Distance

- Mean-Absolute Error
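Most of the distances listed above take only a few lines each. The definitions below are a sketch assuming equal-length numeric vectors (Hamming also works on categorical codes); the function names are hypothetical, and the "sum of absolute distance" coincides with the Manhattan distance here. Multiplying both vectors by 10, for example, multiplies the Euclidean distance by 10, which illustrates the unit dependence noted above.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):  # also the "sum of absolute distance"
    return sum(abs(x - y) for x, y in zip(a, b))

def chessboard(a, b):  # Chebyshev: largest coordinate difference
    return max(abs(x - y) for x, y in zip(a, b))

def minkowski(a, b, p):  # p = 1 gives Manhattan, p = 2 gives Euclidean
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1 / p)

def canberra(a, b):  # terms where both coordinates are 0 are skipped (0/0)
    return sum(abs(x - y) / (abs(x) + abs(y)) for x, y in zip(a, b) if abs(x) + abs(y) > 0)

def hamming(a, b):  # number of positions that differ
    return sum(x != y for x, y in zip(a, b))

def cosine_distance(a, b):  # 1 - cosine similarity
    dot = sum(x * y for x, y in zip(a, b))
    return 1 - dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def sum_squared(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_absolute_error(a, b):
    return manhattan(a, b) / len(a)

print(euclidean([0, 0], [3, 4]), euclidean([0, 0], [30, 40]))
```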

3.4.3.2 Statistical Metrics

3.4.3.2.1 Chi-squared Test

3.4.3.2.1.1 Phi Coefficient

3.4.3.2.1.2 Cramer’s V

- between nominal categorical variables (no natural order)

\[ \text{Cramer's V} = \sqrt{\frac{\chi^2/n}{\min(c-1,r-1)}} \]

where:

- \(\chi^2\) = chi-square statistic

- \(n\) = sample size

- \(r\) = number of rows

- \(c\) = number of columns
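Cramer's V only needs the chi-square statistic of the contingency table. This sketch (hypothetical function names, no continuity correction) computes Pearson's chi-square from observed and expected counts and then applies the formula above; for a 2 × 2 table the result equals the absolute value of the phi coefficient.

```python
import math

def chi_square(table):
    """Pearson chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    return stat

def cramers_v(table):
    """Cramer's V = sqrt((chi^2 / n) / min(c - 1, r - 1))."""
    n = sum(sum(row) for row in table)
    r, c = len(table), len(table[0])
    return math.sqrt(chi_square(table) / n / min(c - 1, r - 1))

print(cramers_v([[20, 10, 5], [5, 10, 20]]))  # hypothetical 2 x 3 counts
```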

Alternatively, the following methods can be used:

- ncchisq: noncentral Chi-square

- nchisqadj: adjusted noncentral Chi-square

- fisher: Fisher Z transformation

- fisheradj: bias-corrected Fisher Z transformation

3.4.3.2.1.3 Tschuprow’s T

- 2 nominal variables

3.4.3.3 Ordinal Association (Rank correlation)

- Good with non-linear relationship

3.4.3.3.1 Ordinal and Nominal

3.4.3.3.1.1 Freeman’s Theta

- Ordinal and nominal

3.4.3.3.1.2 Epsilon-squared

3.4.3.3.2 Two Ordinal

3.4.3.3.2.1 Goodman Kruskal’s Gamma

- 2 ordinal variables

3.4.3.3.2.2 Somers’ D

or Somers’ Delta

3.4.3.3.2.3 Kendall’s Tau-b

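3.4.3.3.2.4 Yule’s Q and Y

A special \((2 \times 2)\) case of Goodman and Kruskal’s gamma coefficient, computed from the cell counts of a 2 × 2 table:

| | Variable 1: level 1 | Variable 1: level 2 |
|---|---|---|
| Variable 2: level 1 | a | b |
| Variable 2: level 2 | c | d |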

\[ \text{Yule's Q} = \frac{ad - bc}{ad + bc} \]

In practice, Yule’s \(Q\) is used more often; Yule’s \(Y\) is related to \(Q\) as follows.

\[ \text{Yule's Y} = \frac{\sqrt{ad} - \sqrt{bc}}{\sqrt{ad} + \sqrt{bc}} \]

\[ Q = \frac{2Y}{1 + Y^2} \]

\[ Y = \frac{1 - \sqrt{1-Q^2}}{Q} \]
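Both coefficients come straight from the four cell counts. A minimal sketch with hypothetical function names and counts, which also lets you check the conversion formulas numerically (it assumes \(ad + bc > 0\)):

```python
import math

def yules_q(a, b, c, d):
    """Yule's Q = (ad - bc) / (ad + bc)."""
    return (a * d - b * c) / (a * d + b * c)

def yules_y(a, b, c, d):
    """Yule's Y = (sqrt(ad) - sqrt(bc)) / (sqrt(ad) + sqrt(bc))."""
    return (math.sqrt(a * d) - math.sqrt(b * c)) / (math.sqrt(a * d) + math.sqrt(b * c))

q, y = yules_q(10, 5, 3, 12), yules_y(10, 5, 3, 12)
print(q, y, 2 * y / (1 + y ** 2))  # the last value reproduces Q
```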

3.4.3.3.2.5 Tetrachoric Correlation

- is a special case of Polychoric Correlation when both variables are binary

3.4.3.3.2.6 Polychoric Correlation

- between ordinal categorical variables (natural order).

- Assumption: The ordinal variable is a discrete representation of a latent, normally distributed continuous variable (e.g., income recorded as low, normal, high).

3.5 Summary

Get the correlation table for continuous variables only

Alternatively, you can also have the

| | cyl | vs | carb |
|---|---|---|---|
| cyl | 1 | . | . |
| vs | −.81 | 1 | . |
| carb | .53 | −.57 | 1 |

Comparing correlation measures computed for different types of variable pairs (e.g., continuous vs. categorical) can be problematic. Detecting non-linear as well as linear relationships is another challenge. One solution is to use mutual information from information theory (i.e., how much knowing one variable reduces uncertainty about the other).

To implement mutual information, we use the following approximations:

\[ \downarrow \text{prediction error} \approx \downarrow \text{uncertainty} \approx \downarrow \text{association strength} \]

More specifically, following the X2Y metric, we take the following steps:

1. Predict \(y\) without \(x\) (i.e., a baseline model):

   - the average of \(y\) when \(y\) is continuous

   - the most frequent value of \(y\) when \(y\) is categorical

2. Predict \(y\) with \(x\) (e.g., a linear model, random forest, etc.)

3. Calculate the difference in prediction error between steps 1 and 2

To build a comprehensive table that can handle

- continuous vs. continuous

- categorical vs. continuous

- continuous vs. categorical

- categorical vs. categorical

the suggested model is Classification and Regression Trees (CART), although other models can certainly be used as well.
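The X2Y steps can be sketched without a tree library when \(x\) is categorical: predicting the group mean (or group mode) of \(y\) within each level of \(x\) is roughly what a tree that splits on every level of \(x\) would predict. That substitution for CART, the function name, the choice of MAE and misclassification rate as error metrics, and the data are all assumptions of this sketch. The score is the percentage reduction in prediction error relative to the baseline.

```python
from collections import Counter, defaultdict

def x2y(x, y, y_is_categorical):
    """Percent reduction in prediction error for y when a categorical x is used."""
    n = len(y)
    groups = defaultdict(list)
    for xi, yi in zip(x, y):
        groups[xi].append(yi)
    if y_is_categorical:
        # Step 1: baseline predicts the most frequent value; error = misclassification rate.
        baseline = Counter(y).most_common(1)[0][0]
        baseline_err = sum(yi != baseline for yi in y) / n
        # Step 2: predict the most frequent y within each level of x.
        pred = {k: Counter(v).most_common(1)[0][0] for k, v in groups.items()}
        model_err = sum(yi != pred[xi] for xi, yi in zip(x, y)) / n
    else:
        # Step 1: baseline predicts the mean of y; error = mean absolute error (MAE).
        baseline = sum(y) / n
        baseline_err = sum(abs(yi - baseline) for yi in y) / n
        # Step 2: predict the mean of y within each level of x.
        pred = {k: sum(v) / len(v) for k, v in groups.items()}
        model_err = sum(abs(yi - pred[xi]) for xi, yi in zip(x, y)) / n
    if baseline_err == 0:
        return 0.0  # nothing left to explain
    # Step 3: relative difference in prediction error.
    return 100 * (baseline_err - model_err) / baseline_err

print(x2y(["a", "a", "b", "b"], [1, 1, 5, 5], y_is_categorical=False))
```

Swapping the roles of \(x\) and \(y\) generally changes the score, which is the asymmetry drawback listed next.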

The drawbacks of this method are:

- Asymmetry: the score for \((x,y)\) generally differs from the score for \((y,x)\)

- Comparability: different pairs of variables might use different error metrics (e.g., misclassification error vs. MAE)

3.5.1 Visualization

More general form,

Both heat map and correlation at the same time

More elaboration with ggplot2


Research Methods Knowledge Base


Descriptive Statistics

Descriptive statistics are used to describe the basic features of the data in a study. They provide simple summaries about the sample and the measures. Together with simple graphics analysis, they form the basis of virtually every quantitative analysis of data.

Descriptive statistics are typically distinguished from inferential statistics . With descriptive statistics you are simply describing what is or what the data shows. With inferential statistics, you are trying to reach conclusions that extend beyond the immediate data alone. For instance, we use inferential statistics to try to infer from the sample data what the population might think. Or, we use inferential statistics to make judgments of the probability that an observed difference between groups is a dependable one or one that might have happened by chance in this study. Thus, we use inferential statistics to make inferences from our data to more general conditions; we use descriptive statistics simply to describe what’s going on in our data.

Descriptive Statistics are used to present quantitative descriptions in a manageable form. In a research study we may have lots of measures. Or we may measure a large number of people on any measure. Descriptive statistics help us to simplify large amounts of data in a sensible way. Each descriptive statistic reduces lots of data into a simpler summary. For instance, consider a simple number used to summarize how well a batter is performing in baseball, the batting average. This single number is simply the number of hits divided by the number of times at bat (reported to three significant digits). A batter who is hitting .333 is getting a hit one time in every three at bats. One batting .250 is hitting one time in four. The single number describes a large number of discrete events. Or, consider the scourge of many students, the Grade Point Average (GPA). This single number describes the general performance of a student across a potentially wide range of course experiences.

Every time you try to describe a large set of observations with a single indicator you run the risk of distorting the original data or losing important detail. The batting average doesn’t tell you whether the batter is hitting home runs or singles. It doesn’t tell whether she’s been in a slump or on a streak. The GPA doesn’t tell you whether the student was in difficult courses or easy ones, or whether they were courses in their major field or in other disciplines. Even given these limitations, descriptive statistics provide a powerful summary that may enable comparisons across people or other units.

Univariate Analysis

Univariate analysis involves the examination across cases of one variable at a time. There are three major characteristics of a single variable that we tend to look at:

- the distribution

- the central tendency

- the dispersion

In most situations, we would describe all three of these characteristics for each of the variables in our study.

The Distribution

The distribution is a summary of the frequency of individual values or ranges of values for a variable. The simplest distribution would list every value of a variable and the number of persons who had each value. For instance, a typical way to describe the distribution of college students is by year in college, listing the number or percent of students at each of the four years. Or, we describe gender by listing the number or percent of males and females. In these cases, the variable has few enough values that we can list each one and summarize how many sample cases had the value. But what do we do for a variable like income or GPA? With these variables there can be a large number of possible values, with relatively few people having each one. In this case, we group the raw scores into categories according to ranges of values. For instance, we might look at GPA according to the letter grade ranges. Or, we might group income into four or five ranges of income values.

| Category | Percent |
|---|---|
| Under 35 years old | 9% |
| 36–45 | 21% |
| 46–55 | 45% |
| 56–65 | 19% |
| 66+ | 6% |

One of the most common ways to describe a single variable is with a frequency distribution. Depending on the particular variable, all of the data values may be represented, or you may group the values into categories first. For example, with age, price, or temperature variables, it would usually not be sensible to determine the frequency of each individual value; instead, the values are grouped into ranges and the frequencies determined for each range. Frequency distributions can be depicted in two ways: as a table or as a graph. The table above shows an age frequency distribution with five age ranges. The same frequency distribution can be depicted in a graph as shown in Figure 1. This type of graph is often referred to as a histogram or bar chart.
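Grouping values into ranges before counting can be sketched in Python. The bin edges and ages below are hypothetical (they are not the data behind the table above); each value is placed in the first inclusive range that contains it, and percentages are taken over all values.

```python
from collections import Counter

def frequency_distribution(values, bins):
    """Percent of values in each labelled range.

    bins: list of (label, low, high) with inclusive bounds; high=None means open-ended.
    Values that fall in no range are not counted in any bin.
    """
    counts = Counter()
    for v in values:
        for label, low, high in bins:
            if v >= low and (high is None or v <= high):
                counts[label] += 1
                break
    n = len(values)
    return {label: 100 * counts[label] / n for label, _, _ in bins}

# Hypothetical bins and ages, for illustration only.
bins = [("<=35", 0, 35), ("36-45", 36, 45), ("46-55", 46, 55), ("56-65", 56, 65), ("66+", 66, None)]
ages = [29, 38, 41, 47, 50, 52, 53, 58, 61, 70]
result = frequency_distribution(ages, bins)
print(result)
```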

Distributions may also be displayed using percentages. For example, you could use percentages to describe the:

- percentage of people in different income levels

- percentage of people in different age ranges

- percentage of people in different ranges of standardized test scores

Central Tendency

The central tendency of a distribution is an estimate of the “center” of a distribution of values. There are three major types of estimates of central tendency: the Mean, the Median, and the Mode.

The Mean, or average, is probably the most commonly used method of describing central tendency. To compute the mean, add up all the values and divide by the number of values. For example, the mean quiz score is determined by summing all the scores and dividing by the number of students taking the exam. Consider the test score values:

15, 20, 21, 20, 36, 15, 25, 15

The sum of these 8 values is 167, so the mean is 167/8 = 20.875.

The Median is the score found at the exact middle of the set of values. One way to compute the median is to list all scores in numerical order and then locate the score in the center of the sample. For example, if there are 501 scores in the list, score #251 would be the median; with an even number of scores, the median lies halfway between the two middle scores. If we order the 8 scores shown above, we get:

15, 15, 15, 20, 20, 21, 25, 36

There are 8 scores, so scores #4 and #5 represent the halfway point. Since both of these scores are 20, the median is 20. If the two middle scores had different values, you would have to interpolate (take their average) to determine the median.

The Mode is the most frequently occurring value in the set of scores. To determine the mode, you might again order the scores as shown above, and then count each one. The most frequently occurring value is the mode. In our example, the value 15 occurs three times and is the mode. In some distributions there is more than one modal value. For instance, in a bimodal distribution there are two values that occur most frequently.

Notice that for the same set of 8 scores we got three different values ( 20.875 , 20 , and 15 ) for the mean, median and mode respectively. If the distribution is truly normal (i.e. bell-shaped), the mean, median and mode are all equal to each other.

Dispersion refers to the spread of the values around the central tendency. There are two common measures of dispersion, the range and the standard deviation. The range is simply the highest value minus the lowest value. In our example distribution, the high value is 36 and the low is 15 , so the range is 36 - 15 = 21 .

The Standard Deviation is a more accurate and detailed estimate of dispersion because an outlier can greatly exaggerate the range (as was true in this example, where the single outlier value of 36 stands apart from the rest of the values). The Standard Deviation shows the relation that set of scores has to the mean of the sample. Again, let’s take the set of scores:

15, 20, 21, 20, 36, 15, 25, 15

To compute the standard deviation, we first find the distance between each value and the mean. We know from above that the mean is 20.875. So, the differences from the mean are:

-5.875, -0.875, 0.125, -0.875, 15.125, -5.875, 4.125, -5.875

Notice that values that are below the mean have negative discrepancies and values above it have positive ones. Next, we square each discrepancy:

34.515625, 0.765625, 0.015625, 0.765625, 228.765625, 34.515625, 17.015625, 34.515625

Now, we take these “squares” and sum them to get the Sum of Squares (SS) value. Here, the sum is 350.875 . Next, we divide this sum by the number of scores minus 1 . Here, the result is 350.875 / 7 = 50.125 . This value is known as the variance . To get the standard deviation, we take the square root of the variance (remember that we squared the deviations earlier). This would be SQRT(50.125) = 7.079901129253 .

Although this computation may seem convoluted, it’s actually quite simple. To see this, consider the formula for the standard deviation:

\[ s = \sqrt{\frac{\sum (X - \bar{X})^2}{n - 1}} \]

where:

- X is each score,

- X̄ is the mean (or average),

- n is the number of values,

- Σ means we sum across the values.

In the top part of the ratio, the numerator, we see that each score has the mean subtracted from it, the difference is squared, and the squares are summed. In the bottom part, we take the number of scores minus 1 . The ratio is the variance and the square root is the standard deviation. In English, we can describe the standard deviation as:

the square root of the sum of the squared deviations from the mean divided by the number of scores minus one.

Although we can calculate these univariate statistics by hand, it gets quite tedious when you have more than a few values and variables. Every statistics program is capable of calculating them easily for you. For instance, I put the eight scores into SPSS and got the following table as a result:

| Metric | Value |
|---|---|
| N | 8 |
| Mean | 20.8750 |
| Median | 20.0000 |
| Mode | 15.00 |
| Standard Deviation | 7.0799 |
| Variance | 50.1250 |
| Range | 21.00 |

which confirms the calculations I did by hand above.
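Any statistics package reproduces these figures. As a sketch, Python's standard `statistics` module gives the same values for the eight example scores, taken here as 15, 20, 21, 20, 36, 15, 25, 15 (a set consistent with the sum of 167, median of 20, mode of 15 and range of 21 reported above). `stdev` and `variance` use the \(n-1\) divisor, matching the hand calculation.

```python
import statistics

scores = [15, 20, 21, 20, 36, 15, 25, 15]

summary = {
    "N": len(scores),
    "Mean": statistics.mean(scores),
    "Median": statistics.median(scores),
    "Mode": statistics.mode(scores),
    "Standard Deviation": statistics.stdev(scores),  # sqrt(SS / (n - 1))
    "Variance": statistics.variance(scores),         # SS / (n - 1) = 350.875 / 7
    "Range": max(scores) - min(scores),
}
for metric, value in summary.items():
    print(f"{metric}: {value}")
```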

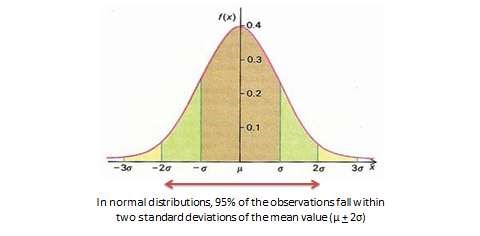

The standard deviation allows us to reach some conclusions about specific scores in our distribution. Assuming that the distribution of scores is normal or bell-shaped (or close to it!), the following conclusions can be reached:

- approximately 68% of the scores in the sample fall within one standard deviation of the mean

- approximately 95% of the scores in the sample fall within two standard deviations of the mean

- approximately 99.7% of the scores in the sample fall within three standard deviations of the mean

For instance, since the mean in our example is 20.875 and the standard deviation is 7.0799, we can estimate from the rule above that approximately 95% of the scores will fall in the range of 20.875-(2*7.0799) to 20.875+(2*7.0799), or between 6.7152 and 35.0348. This kind of information is a critical stepping stone to enabling us to compare the performance of an individual on one variable with their performance on another, even when the variables are measured on entirely different scales.


14 Quantitative analysis: Descriptive statistics

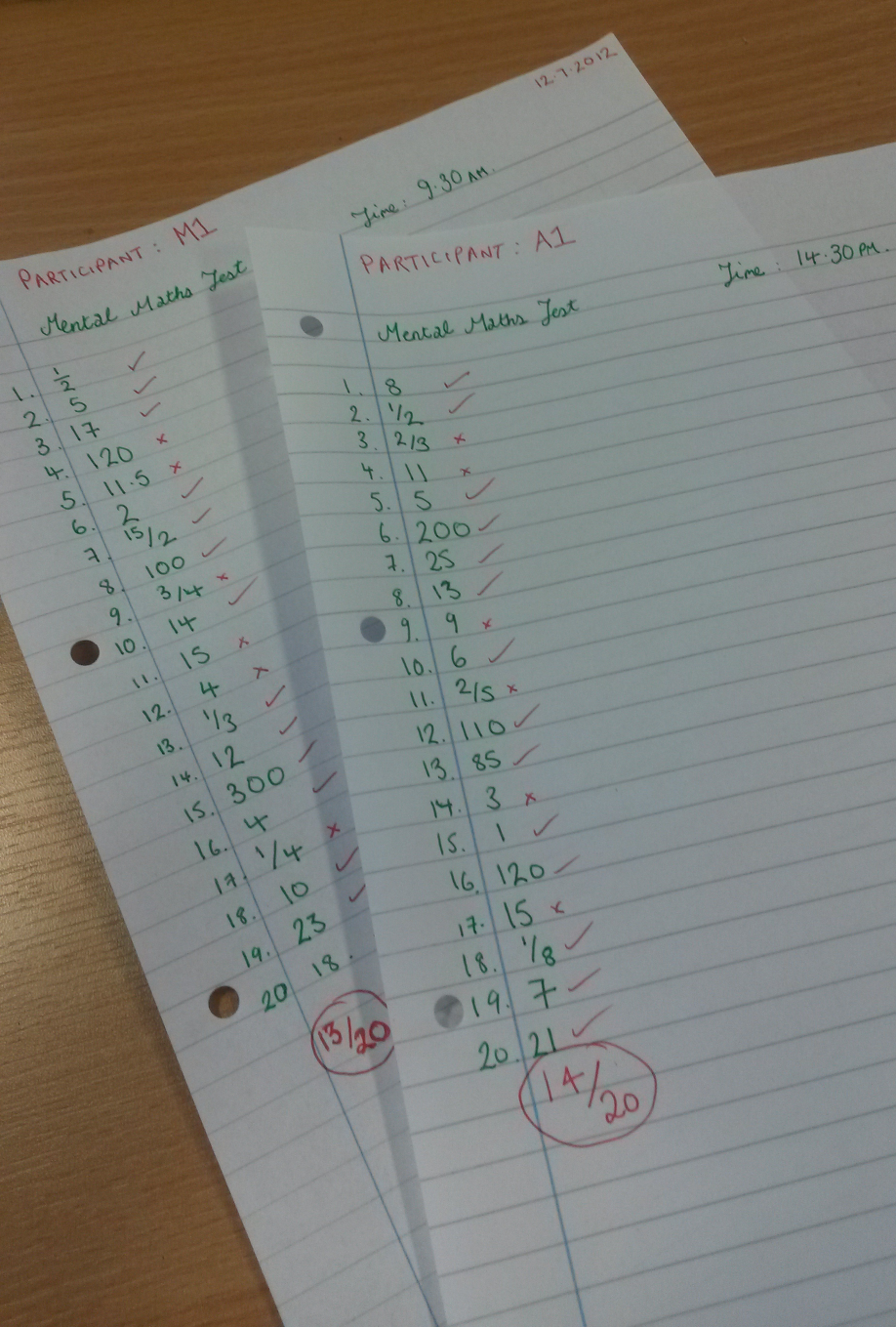

Numeric data collected in a research project can be analysed quantitatively using statistical tools in two different ways. Descriptive analysis refers to statistically describing, aggregating, and presenting the constructs of interest or associations between these constructs. Inferential analysis refers to the statistical testing of hypotheses (theory testing). In this chapter, we will examine statistical techniques used for descriptive analysis, and the next chapter will examine statistical techniques for inferential analysis. Much of today’s quantitative data analysis is conducted using software programs such as SPSS or SAS. Readers are advised to familiarise themselves with one of these programs for understanding the concepts described in this chapter.

Data preparation

In research projects, data may be collected from a variety of sources: postal surveys, interviews, pretest or posttest experimental data, observational data, and so forth. These data must be converted into a machine-readable, numeric format, such as a spreadsheet or a text file, so that they can be analysed by computer programs like SPSS or SAS. Data preparation usually follows these steps:

Data coding. Coding is the process of converting data into numeric format. A codebook should be created to guide the coding process. A codebook is a comprehensive document containing a detailed description of each variable in a research study, the items or measures for that variable, the format of each item (numeric, text, etc.), the response scale for each item (i.e., whether it is measured on a nominal, ordinal, interval, or ratio scale, and whether the scale has five points, seven points, etc.), and how to code each value into a numeric format. For instance, if we have a measurement item on a seven-point Likert scale with anchors ranging from ‘strongly disagree’ to ‘strongly agree’, we may code that item as 1 for strongly disagree, 4 for neutral, and 7 for strongly agree, with the intermediate anchors in between. Nominal data such as industry type can be coded in numeric form using a coding scheme such as: 1 for manufacturing, 2 for retailing, 3 for financial, 4 for healthcare, and so forth (of course, these codes are only labels, so nominal data cannot be analysed with arithmetic operations such as averaging). Ratio scale data such as age, income, or test scores can be coded as entered by the respondent. Sometimes, data may need to be aggregated into a different form than the format used for data collection. For instance, if a survey measuring a construct such as ‘benefits of computers’ provided respondents with a checklist of benefits that they could select from, and respondents were encouraged to choose as many of those benefits as they wanted, then the total number of checked items could be used as an aggregate measure of benefits. Note that many other forms of data, such as interview transcripts, cannot be converted into a numeric format for statistical analysis.
Codebooks are especially important for large, complex studies that involve many variables and measurement items and in which the coding is done by several people: they help the coding team code data consistently, and they help others understand and interpret the coded data.
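A codebook can be represented in code as a simple lookup table. The sketch below assumes the seven-point agreement scale and the industry codes mentioned above; the intermediate anchor labels and the names `LIKERT_CODES`, `INDUSTRY_CODES`, `code_response`, and `checklist_total` are hypothetical. It also shows the checklist aggregation described above, counting how many benefits a respondent ticked.

```python
# Hypothetical codebook entries: response label -> numeric code
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "somewhat disagree": 3,
    "neutral": 4,
    "somewhat agree": 5,
    "agree": 6,
    "strongly agree": 7,
}

# Nominal codes are labels only; their order and spacing carry no meaning
INDUSTRY_CODES = {"manufacturing": 1, "retailing": 2, "financial": 3, "healthcare": 4}


def code_response(label: str, codebook: dict[str, int]) -> int:
    """Convert a raw text response into its numeric code, failing loudly on unknown labels."""
    key = label.strip().lower()
    if key not in codebook:
        raise ValueError(f"response {label!r} is not in the codebook")
    return codebook[key]


def checklist_total(checked: list[bool]) -> int:
    """Aggregate a 'tick all that apply' checklist into the number of items selected."""
    return sum(checked)


print(code_response("Strongly Agree", LIKERT_CODES))  # 7
print(code_response("healthcare", INDUSTRY_CODES))    # 4
print(checklist_total([True, False, True, True]))     # 3
```

Raising an error on unrecognised labels is one design choice; it surfaces coding inconsistencies early rather than silently producing missing values.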

Data entry. Coded data can be entered into a spreadsheet, database, text file, or directly into a statistical program like SPSS. Most statistical programs provide a data editor for entering data. However, these programs store data in their own native format (e.g., SPSS stores data as .sav files), which makes it difficult to share that data with other statistical programs. Hence, it is often better to enter data into a spreadsheet or database, where it can be reorganised as needed, shared across programs, and filtered to extract subsets for analysis. Smaller data sets can be stored in a spreadsheet created using a program such as Microsoft Excel (current versions allow up to 1,048,576 rows and 16,384 columns per worksheet; versions before Excel 2007 were limited to 65,536 rows and 256 columns), while larger datasets with millions of observations will require a database. Each observation can be entered as one row in the spreadsheet, and each measurement item can be represented as one column. Data should be checked for accuracy during and after entry via occasional spot checks on a set of items or observations. Furthermore, while entering data, the coder should watch out for obvious evidence of bad data, such as a respondent selecting the ‘strongly agree’ response to all items irrespective of content, including reverse-coded items. Such data can be entered but should be excluded from subsequent analysis.
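The one-row-per-observation, one-column-per-item layout, and a check for respondents who give the same answer to every item, can be sketched with Python's `csv` module. The data, the column names, and the choice of `q3` as the reverse-coded item are all hypothetical. Identical answers across all items are only one heuristic sign of careless responding, so flagged rows deserve a manual look before they are excluded.

```python
import csv
import io

# Hypothetical survey data: one row per respondent, one column per item.
# Item q3 is assumed to be reverse-coded, so honest answers should differ from q1, q2, q4.
raw = """id,q1,q2,q3,q4
1,6,5,2,6
2,7,7,7,7
3,3,4,5,2
"""

rows = list(csv.DictReader(io.StringIO(raw)))
items = ["q1", "q2", "q3", "q4"]


def is_straight_lined(row: dict[str, str], items: list[str]) -> bool:
    """True if the respondent gave the identical answer to every item."""
    return len({row[item] for item in items}) == 1


flagged = [row["id"] for row in rows if is_straight_lined(row, items)]
print(flagged)  # ['2']
```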

Data transformation. Sometimes, it is necessary to transform data values before they can be meaningfully interpreted. For instance, reverse-coded items, whose wording conveys the opposite meaning of their underlying construct, should be reversed before they can be compared or combined with items that are not reverse-coded (e.g., on a 1-7 interval scale, subtracting the observed value from 8 reverses it). Other kinds of transformations may include creating scale measures by adding individual scale items, creating a weighted index from a set of observed measures, and collapsing multiple values into fewer categories (e.g., collapsing incomes into income ranges).
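The transformations above can be sketched in a few lines. The responses, the choice of `q3` as the reverse-coded item, and the income bands are hypothetical; the reversal uses the (scale maximum + 1) minus value rule, which gives 8 minus the value on a 1-7 scale.

```python
# Hypothetical responses on a 1-7 scale; q3 is assumed to be reverse-coded
responses = {"q1": 6, "q2": 5, "q3": 2, "q4": 6}
REVERSED = {"q3"}
SCALE_MAX = 7


def reverse_code(value: int, scale_max: int = SCALE_MAX) -> int:
    """Reverse an item scored 1..scale_max (on a 1-7 scale: 8 - value)."""
    return (scale_max + 1) - value


# Reverse the reverse-coded items, then sum all items into a scale score
recoded = {k: reverse_code(v) if k in REVERSED else v for k, v in responses.items()}
scale_score = sum(recoded.values())


def income_band(income: float) -> str:
    """Collapse a continuous income into hypothetical ranges."""
    if income < 25_000:
        return "under 25,000"
    if income < 50_000:
        return "25,000-49,999"
    if income < 100_000:
        return "50,000-99,999"
    return "100,000 and over"


print(recoded["q3"], scale_score)  # 6 23
print(income_band(42_000))         # 25,000-49,999
```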

Univariate analysis

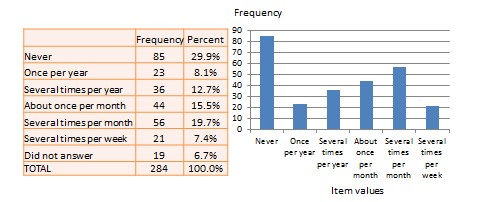

Univariate analysis—or analysis of a single variable—refers to a set of statistical techniques that can describe the general properties of one variable. Univariate statistics include: frequency distribution, central tendency, and dispersion. The frequency distribution of a variable is a summary of the frequency—or percentages—of individual values or ranges of values for that variable. For instance, we can measure how many times a sample of respondents attend religious services—as a gauge of their ‘religiosity’—using a categorical scale: never, once per year, several times per year, about once a month, several times per month, several times per week, and an optional category for ‘did not answer’. If we count the number or percentage of observations within each category—except ‘did not answer’ which is really a missing value rather than a category—and display it in the form of a table, as shown in Figure 14.1, what we have is a frequency distribution. This distribution can also be depicted in the form of a bar chart, as shown on the right panel of Figure 14.1, with the horizontal axis representing each category of that variable and the vertical axis representing the frequency or percentage of observations within each category.
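A frequency distribution like the one described above can be tabulated with `collections.Counter`. The responses are hypothetical; as in the text, ‘did not answer’ is treated as a missing value and excluded before percentages are computed, and categories are printed in the scale's natural order rather than by frequency.

```python
from collections import Counter

CATEGORIES = [
    "never", "once per year", "several times per year", "about once a month",
    "several times per month", "several times per week",
]
MISSING = "did not answer"

# Hypothetical responses from a small sample
responses = [
    "never", "once per year", "never", "about once a month", "did not answer",
    "several times per week", "never", "once per year",
]

valid = [r for r in responses if r != MISSING]  # drop missing values first
counts = Counter(valid)

print(f"{'Category':<26}{'n':>3}{'%':>8}")
for category in CATEGORIES:                     # keep the scale's natural order
    n = counts[category]                        # Counter returns 0 for absent categories
    pct = 100 * n / len(valid)
    print(f"{category:<26}{n:>3}{pct:>7.1f}%")
```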

With very large samples of independent, random observations, the frequency distribution of many variables follows a plot that looks like a bell-shaped curve (a smoothed bar chart of the frequency distribution), similar to that shown in Figure 14.2. Here most observations cluster toward the centre of the range of values, with fewer and fewer observations toward the extreme ends of the range. Such a curve is called a normal distribution.

Lastly, the mode is the most frequently occurring value in a distribution of values. In the previous example, the most frequently occurring value is 15, which is the mode of the above set of test scores. Note that any value estimated from a sample, such as the mean, median, mode, or any of the later estimates, is called a statistic.
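Python's `statistics` module computes these sample statistics directly. The scores below are hypothetical (the previous example's data are not reproduced in this section), chosen so that 15 is again the mode; `multimode` handles distributions with more than one most-frequent value.

```python
import statistics

# Hypothetical test scores, used only for illustration
scores = [12, 15, 18, 15, 21, 15, 24, 16]

print(statistics.mean(scores))    # 17
print(statistics.median(scores))  # 15.5 (average of the two middle values, 15 and 16)
print(statistics.mode(scores))    # 15 (occurs three times)

# A distribution can have more than one mode; multimode returns all of them
print(statistics.multimode([3, 3, 5, 5, 7]))  # [3, 5]
```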

Bivariate analysis

Bivariate analysis examines how two variables are related to one another. The most common bivariate statistic is the bivariate correlation (often simply called ‘correlation’), which is a number between -1 and +1 denoting the strength and direction of the relationship between two variables. Say that we wish to study how age is related to self-esteem in a sample of 20 respondents, i.e., as age increases, does self-esteem increase, decrease, or remain unchanged? If self-esteem increases, then we have a positive correlation between the two variables; if self-esteem decreases, we have a negative correlation; and if it remains the same, we have a zero correlation. To calculate the value of this correlation, consider the hypothetical dataset shown in Table 14.1.
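The Pearson correlation can be computed from its definition: the sum of the products of each pair's deviations from the means, divided by the square root of the product of the two sums of squared deviations. The ages and self-esteem scores below are hypothetical and are not the data in Table 14.1. The function divides by zero if either variable has no variation, since correlation is undefined in that case.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation: co-variation of x and y scaled by their spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be the same length, with at least two pairs")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [xi - mean_x for xi in x]  # deviations from the mean of x
    dy = [yi - mean_y for yi in y]  # deviations from the mean of y
    numerator = sum(a * b for a, b in zip(dx, dy))
    denominator = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return numerator / denominator


# Hypothetical ages and self-esteem scores (not the data in Table 14.1)
age = [21, 25, 30, 35, 40, 45, 50, 55]
self_esteem = [3.1, 3.4, 3.3, 3.8, 4.0, 4.1, 4.3, 4.6]
print(round(pearson_r(age, self_esteem), 3))
```

On Python 3.10 and later, `statistics.correlation` returns the same coefficient and can serve as a cross-check.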

After computing a bivariate correlation, researchers are often interested in knowing whether the correlation is statistically significant (i.e., a real one) or caused by mere chance. Answering such a question would require testing the following hypothesis:

H0: r = 0
H1: r ≠ 0
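One common way to test whether a correlation differs from zero is to convert r into a t statistic, t = r * sqrt((n - 2) / (1 - r^2)), with n - 2 degrees of freedom, and compare it with a critical value. The sketch assumes a hypothetical r of 0.5 from a sample of 20 and a two-tailed test at the 0.05 level; the critical value of about 2.101 for 18 degrees of freedom is taken from standard t tables, and a statistics library would be needed for an exact p-value.

```python
import math


def correlation_t(r: float, n: int) -> float:
    """t statistic for the null hypothesis of zero correlation (df = n - 2)."""
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    if n < 3:
        raise ValueError("need at least three observations")
    return r * math.sqrt((n - 2) / (1 - r * r))


r, n = 0.5, 20            # hypothetical correlation and sample size
T_CRITICAL_DF18 = 2.101   # two-tailed, alpha = 0.05, df = 18 (from t tables)

t = correlation_t(r, n)
print(f"t = {t:.3f}, significant at 0.05: {abs(t) > T_CRITICAL_DF18}")
```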

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Descriptive Analytics – Methods, Tools and Examples


Descriptive Analytics

Definition:

Descriptive analytics focuses on describing or summarizing raw data and making it interpretable. It provides insight into what has happened in the past by analyzing historical data to identify patterns and trends. Descriptive analytics often uses visualization tools to represent the data in a way that is easy to interpret.

Descriptive Analytics in Research

Descriptive analytics plays a crucial role in research, helping investigators understand and describe the data collected in their studies. Here’s how descriptive analytics is typically used in a research setting:

- Descriptive Statistics: In research, descriptive analytics often takes the form of descriptive statistics. This includes calculating measures of central tendency (like mean, median, and mode), measures of dispersion (like range, variance, and standard deviation), and measures of frequency (like counts, percentages, and frequency distributions). These calculations help researchers summarize and understand their data.

- Visualizing Data: Descriptive analytics also involves creating visual representations of data to better understand and communicate research findings. This might involve creating bar graphs, line graphs, pie charts, scatter plots, box plots, and other visualizations.

- Exploratory Data Analysis: Before conducting any formal statistical tests, researchers often conduct an exploratory data analysis, which is a form of descriptive analytics. This might involve looking at distributions of variables, checking for outliers, and exploring relationships between variables.

- Initial Findings: Descriptive statistics are often reported in the results section of a research study to provide readers with an overview of the data. For example, a researcher might report average scores, demographic breakdowns, or the percentage of participants who endorsed each response on a survey.

- Establishing Patterns and Relationships: Descriptive analytics helps in identifying patterns, trends, or relationships in the data, which can guide subsequent analysis or future research. For instance, researchers might look at the correlation between variables as a part of descriptive analytics.
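As one example of the outlier checks mentioned under exploratory data analysis, the sketch below applies Tukey's fences: values more than 1.5 interquartile ranges below the first quartile or above the third are flagged. The reaction times are hypothetical. Quartile conventions differ between software packages (this uses the default "exclusive" method of Python's `statistics.quantiles`), so the fences may differ slightly from those reported by other tools.

```python
import statistics


def iqr_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Flag values more than k * IQR outside the first and third quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical reaction times in seconds; 95 looks like a data-entry error
times = [10, 12, 11, 13, 12, 11, 95]
print(iqr_outliers(times))  # [95]
```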

Descriptive Analytics Techniques

Descriptive analytics involves a variety of techniques to summarize, interpret, and visualize historical data. Some commonly used techniques include:

Statistical Analysis

This includes basic statistical methods like mean, median, mode (central tendency), standard deviation, variance (dispersion), correlation, and regression (relationships between variables).

Data Aggregation

Data aggregation is the process of compiling and summarizing data to obtain a general perspective. It typically applies functions such as sum, count, average, minimum, and maximum to groups of data.
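A group-wise aggregation can be sketched with the standard library alone: records are grouped by a key, then count, sum, average, minimum, and maximum are computed for each group. The sales records and region names are hypothetical.

```python
from collections import defaultdict

# Hypothetical sales records: (region, amount)
sales = [("north", 120.0), ("south", 80.0), ("north", 200.0), ("east", 50.0), ("south", 40.0)]

# Group the amounts by region
groups: dict[str, list[float]] = defaultdict(list)
for region, amount in sales:
    groups[region].append(amount)

# Apply the aggregate functions to each group
summary = {
    region: {
        "count": len(amounts),
        "sum": sum(amounts),
        "average": sum(amounts) / len(amounts),
        "min": min(amounts),
        "max": max(amounts),
    }
    for region, amounts in groups.items()
}

for region in sorted(summary):
    print(region, summary[region])
```

A spreadsheet pivot table or a SQL `GROUP BY` query performs the same grouping-then-summarizing step.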

Data Mining

This involves analyzing large volumes of data to discover patterns, trends, and insights. Techniques used in data mining can include clustering (grouping similar data), classification (assigning data into categories), association rules (finding relationships between variables), and anomaly detection (identifying outliers).
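Of the data mining techniques listed, association rules are the easiest to illustrate briefly. A rule such as "bread implies milk" is usually summarized by its support (the share of transactions containing both items) and its confidence (the share of transactions containing bread that also contain milk). The baskets below are hypothetical, and this shows only the two measures, not a full rule-mining algorithm such as Apriori.

```python
# Hypothetical shopping baskets
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]


def count(itemset: set[str]) -> int:
    """Number of transactions that contain every item in the itemset."""
    return sum(itemset <= t for t in transactions)


def support(itemset: set[str]) -> float:
    """Share of all transactions that contain the itemset."""
    return count(itemset) / len(transactions)


def confidence(antecedent: set[str], consequent: set[str]) -> float:
    """Share of transactions with the antecedent that also contain the consequent."""
    return count(antecedent | consequent) / count(antecedent)


print(support({"bread", "milk"}))       # 0.6
print(confidence({"bread"}, {"milk"}))  # 0.75
```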

Data Visualization

This involves presenting data in a graphical or pictorial format so that patterns, trends, and insights in the data are clear and easy to understand. Common data visualization methods include bar charts, line graphs, pie charts, scatter plots, histograms, and more complex forms like heat maps and interactive dashboards.

Reporting

This involves organizing data into informational summaries to monitor how different areas of a business are performing. Reports can be generated manually or automatically and can be presented in tables, graphs, or dashboards.

Cross-tabulation (or Pivot Tables)

Cross-tabulation displays the relationship between two or more variables in tabular form. It can provide a deeper understanding of the data by allowing comparisons and revealing patterns and correlations that may not be readily apparent in raw data.
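A two-way cross-tabulation amounts to counting each combination of row and column values. The sketch below uses `collections.Counter` on hypothetical (age group, preferred product) records and prints the counts with row totals.

```python
from collections import Counter

# Hypothetical respondents: (age group, preferred product)
records = [
    ("under 30", "X"), ("30 and over", "Y"), ("under 30", "X"), ("30 and over", "X"),
    ("under 30", "Y"), ("30 and over", "Y"), ("under 30", "X"),
]

cells = Counter(records)  # count for each (row, column) combination
rows = sorted({r for r, _ in records})
cols = sorted({c for _, c in records})

# Header row, then one line per row category with its total
print("".ljust(13) + "".join(c.rjust(6) for c in cols) + "Total".rjust(7))
for r in rows:
    counts = [cells[(r, c)] for c in cols]
    print(r.ljust(13) + "".join(str(n).rjust(6) for n in counts) + str(sum(counts)).rjust(7))
```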

Descriptive Modeling

Some techniques use complex algorithms to interpret data. Examples include decision tree analysis, which provides a graphical representation of decision-making situations, and neural networks, which are used to identify correlations and patterns in large data sets.

Descriptive Analytics Tools

Some common Descriptive Analytics Tools are as follows:

Excel: Microsoft Excel is widely used for simple descriptive analytics. It has powerful statistical and data visualization capabilities, and pivot tables are a particularly useful feature for summarizing and analyzing large data sets.

Tableau: Tableau is a data visualization tool that is used to represent data in a graphical or pictorial format. It can handle large data sets and allows for real-time data analysis.