Andrew W. Appel, Princeton University

David Bindel, Cornell University

Jean-Baptiste Jeannin, University of Michigan

Karthik Duraisamy, University of Michigan

Ariel Kellison, Cornell University, PhD Student

Josh Cohen, Princeton University, PhD Student

Yichen Tao, Sahil Bola, University of Michigan, PhD Students

Shengyi Wang, Princeton University, Research Scientist

Mohit Tekriwal, Lawrence Livermore Lab, Postdoc, External Collaborator

Philip Johnson-Freyd, Samuel Pollard, Heidi Thornquist, Sandia National Labs, External Collaborators

In this collection of research projects, we take a layered approach to foundational verification of the correctness and accuracy of numerical software: formal, machine-checked proofs about programs (not just algorithms), with no assumptions except the specifications of instruction-set architectures. We build, improve, and use appropriate tools at each layer: proving properties of discrete algorithms over the real numbers; proving how floating-point algorithms approximate real-number algorithms; reasoning about C implementations of floating-point algorithms; and connecting all proofs end-to-end in Coq.
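To make the middle layer concrete, here is a minimal Python sketch (not taken from the VeriNum code base) of what "how a floating-point algorithm approximates a real-number algorithm" means for a dot product. The function names are hypothetical, the error bound is the standard textbook one, and underflow and overflow are assumed not to occur. A formal proof establishes such a bound for all inputs; this sketch only checks it on one.

```python
from fractions import Fraction

def dot_float(xs, ys):
    """Dot product accumulated left to right in binary64 floating point."""
    total = 0.0
    for x, y in zip(xs, ys):
        total += x * y
    return total

def dot_exact(xs, ys):
    """The same dot product over the rationals; Fraction(float) is exact."""
    return sum((Fraction(x) * Fraction(y) for x, y in zip(xs, ys)), Fraction(0))

def error_bound(xs, ys, u=Fraction(1, 2**53)):
    """Textbook bound ((1 + u)^n - 1) * sum |x_i * y_i|, with u the unit
    roundoff of binary64. Assumes no underflow or overflow occurs."""
    n = len(xs)
    abs_sum = sum((abs(Fraction(x) * Fraction(y)) for x, y in zip(xs, ys)),
                  Fraction(0))
    return ((1 + u) ** n - 1) * abs_sum

xs = [0.1, 0.2, 0.3]
ys = [0.4, 0.5, 0.6]
rounding_error = abs(Fraction(dot_float(xs, ys)) - dot_exact(xs, ys))
print(rounding_error <= error_bound(xs, ys))
```

The exact rational value plays the role of the real-number algorithm, and the gap between the two results is the quantity an accuracy proof has to bound.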

Our initial research projects (and results) are:

- cbench_vst/sqrt: Square root by Newton’s Method, by Appel and Bertot.

- VerifiedLeapfrog: A verified numerical method for an Ordinary Differential Equation, by Kellison and Appel.

- VCFloat2: Floating-point error analysis in Coq, by Appel and Kellison, improving on an earlier open-source project by Ramananandro et al.

- Parallel Dot Product, demonstrating how to use VST to verify correctness of simple shared-memory task parallelism.

- Stationary Iterative Methods with formally verified error bounds
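For readers unfamiliar with the first project's subject, the following is a minimal Python sketch of a Newton iteration for the square root. It is an illustration only: the verified program in cbench_vst is written in C, and the function name, starting guess, and stopping rule here are assumptions rather than a transcription of that code.

```python
def newton_sqrt(x, tol=1e-15, max_iter=100):
    """Approximate sqrt(x) for x > 0 using Newton's method on f(y) = y*y - x.

    The update y <- (y + x / y) / 2 is the Newton step for f.
    Returns the last iterate if max_iter is reached without convergence.
    """
    if x <= 0:
        raise ValueError("x must be positive")
    # Naive starting guess at or above sqrt(x); iterates then decrease.
    y = x if x >= 1.0 else 1.0
    for _ in range(max_iter):
        y_next = 0.5 * (y + x / y)
        # Stop when successive iterates agree to relative tolerance tol.
        if abs(y_next - y) <= tol * y_next:
            return y_next
        y = y_next
    return y

print(newton_sqrt(2.0))
```

A verification of such a program has to address both questions the project list raises: that the loop terminates, and that the floating-point result is close to the real square root.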

Bibliography

- Verified correctness, accuracy, and convergence of a stationary iterative linear solver: Jacobi method, by Mohit Tekriwal, Andrew W. Appel, Ariel E. Kellison, David Bindel, and Jean-Baptiste Jeannin. 16th Conference on Intelligent Computer Mathematics, September 2023.

- LAProof: a library of formal accuracy and correctness proofs for sparse linear algebra programs, by Ariel E. Kellison, Andrew W. Appel, Mohit Tekriwal, and David Bindel, 30th IEEE International Symposium on Computer Arithmetic, September 2023.

- Towards verified rounding error analysis for stationary iterative methods, by Ariel Kellison, Mohit Tekriwal, Jean-Baptiste Jeannin, and Geoffrey Hulette, in Correctness 2022: Sixth International Workshop on Software Correctness for HPC Applications, November 2022.

- Verified Numerical Methods for Ordinary Differential Equations, by Ariel E. Kellison and Andrew W. Appel, in NSV’22: 15th International Workshop on Numerical Software Verification, August 2022.

- VCFloat2: Floating-point Error Analysis in Coq, by Andrew W. Appel and Ariel E. Kellison, in CPP 2024: Proceedings of the 13th ACM SIGPLAN International Conference on Certified Programs and Proofs, pages 14–29, January 2024.

- C-language floating-point proofs layered with VST and Flocq, by Andrew W. Appel and Yves Bertot, Journal of Formalized Reasoning volume 13, number 1, pages 1–16.

VeriNum’s various projects are supported in part by

- National Science Foundation grant 2219757 “Formally Verified Numerical Methods”, to Princeton University (Appel, Principal Investigator) and grant 2219758 to Cornell University (Bindel)

- National Science Foundation grant 2219997 “Foundational Approaches for End-to-end Formal Verification of Computational Physics” to the University of Michigan (Jeannin and Duraisamy)

- U.S. Department of Energy Computational Science Graduate Fellowship (Ariel Kellison)

- Sandia National Laboratories, funding the collaboration of Sandia participants with these projects

Computational Science & Numerical Analysis

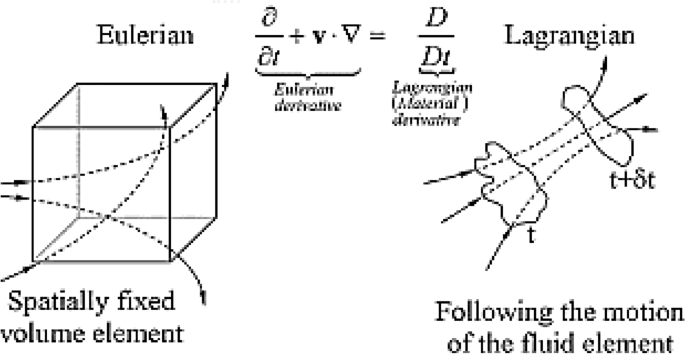

Computational science is a key area related to physical mathematics. The problems of interest in physical mathematics often require computations for their resolution. Conversely, the development of efficient computational algorithms often requires an understanding of the basic properties of the solutions to the equations to be solved numerically. For example, the development of methods for the solution of hyperbolic equations (e.g., shock-capturing methods in gas dynamics) has been characterized by very close interaction among theoretical, computational, and experimental scientists and engineers.

Department Members in This Field

- Laurent Demanet Applied analysis, Scientific Computing

- Alan Edelman Parallel Computing, Numerical Linear Algebra, Random Matrices

- Steven Johnson Waves, PDEs, Scientific Computing

- Pablo Parrilo Optimization, Control Theory, Computational Algebraic Geometry, Applied Mathematics

- Gilbert Strang Numerical Analysis, Partial Differential Equations

- John Urschel Matrix Analysis, Numerical Linear Algebra, Spectral Graph Theory

Instructors & Postdocs

- Pengning Chao Scientific computing, Nanophotonics, Inverse problems, Fundamental limits

- Ziang Chen Applied Analysis, Applied Probability, Statistics, Optimization, Machine Learning

Researchers & Visitors

- Keaton Burns PDEs, Spectral Methods, Fluid Dynamics

- Raphaël Pestourie Surrogate Models, AI, Electromagnetic Design, End-to-end Optimization, Inverse Design

Graduate Students*

- Rodrigo Arrieta Candia Numerical methods for PDEs, Numerical Analysis, Scientific Computing, Computational Electromagnetism

- Mo Chen Optimization, Scientific Computing

- Max Daniels High-dimensional statistics, optimization, sampling algorithms, machine learning

- Sarah Greer Imaging, inverse problems, signal processing

- Songchen Tan Computational Science, Numerical Analysis, Differentiable Programming

*Only a partial list of graduate students


What Is Quantitative Research? | Definition, Uses & Methods

Published on June 12, 2020 by Pritha Bhandari. Revised on June 22, 2023.

Quantitative research is the process of collecting and analyzing numerical data. It can be used to find patterns and averages, make predictions, test causal relationships, and generalize results to wider populations.

Quantitative research is the opposite of qualitative research, which involves collecting and analyzing non-numerical data (e.g., text, video, or audio).

Quantitative research is widely used in the natural and social sciences: biology, chemistry, psychology, economics, sociology, marketing, etc.

- What is the demographic makeup of Singapore in 2020?

- How has the average temperature changed globally over the last century?

- Does environmental pollution affect the prevalence of honey bees?

- Does working from home increase productivity for people with long commutes?


You can use quantitative research methods for descriptive, correlational or experimental research.

- In descriptive research, you simply seek an overall summary of your study variables.

- In correlational research, you investigate relationships between your study variables.

- In experimental research, you systematically examine whether there is a cause-and-effect relationship between variables.

Correlational and experimental research can both be used to formally test hypotheses, or predictions, using statistics. The results may be generalized to broader populations based on the sampling method used.

To collect quantitative data, you will often need to use operational definitions that translate abstract concepts (e.g., mood) into observable and quantifiable measures (e.g., self-ratings of feelings and energy levels).

| Research method | How to use | Example |
|---|---|---|
| Experiment | Control or manipulate an independent variable to measure its effect on a dependent variable. | To test whether an intervention can reduce procrastination in college students, you give equal-sized groups either a procrastination intervention or a comparable task. You compare self-ratings of procrastination behaviors between the groups after the intervention. |
| Survey | Ask questions of a group of people in person, over the phone or online. | You distribute questionnaires with rating scales to first-year international college students to investigate their experiences of culture shock. |
| (Systematic) observation | Identify a behavior or occurrence of interest and monitor it in its natural setting. | To study college classroom participation, you sit in on classes to observe them, counting and recording the prevalence of active and passive behaviors by students from different backgrounds. |
| Secondary research | Collect data that has been gathered for other purposes, e.g., national surveys or historical records. | To assess whether attitudes towards climate change have changed since the 1980s, you collect relevant questionnaire data that is widely available. |

Note that quantitative research is at risk for certain research biases, including information bias, omitted variable bias, sampling bias, or selection bias. Be sure that you’re aware of potential biases as you collect and analyze your data to prevent them from impacting your work too much.


Once data is collected, you may need to process it before it can be analyzed. For example, survey and test data may need to be transformed from words to numbers. Then, you can use statistical analysis to answer your research questions.

Descriptive statistics will give you a summary of your data and include measures of averages and variability. You can also use graphs, scatter plots and frequency tables to visualize your data and check for any trends or outliers.

Using inferential statistics, you can make predictions or generalizations based on your data. You can test your hypothesis or use your sample data to estimate the population parameter.

First, you use descriptive statistics to get a summary of the data. You find the mean (average) and the mode (most frequent rating) of procrastination of the two groups, and plot the data to see if there are any outliers.
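The descriptive step can be sketched in a few lines of Python using only the standard library. The function names are hypothetical and the ratings are invented for illustration; they are not data from any study. The 1.5 × IQR rule is one common convention for flagging outliers, chosen here as an assumption.

```python
import statistics

def describe(ratings):
    """Summarize ratings with the mean, the mode, and the sample standard deviation."""
    return {
        "mean": statistics.mean(ratings),
        "mode": statistics.mode(ratings),
        "stdev": statistics.stdev(ratings),
    }

def iqr_outliers(ratings):
    """Return values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(ratings, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [r for r in ratings if r < low or r > high]

# Invented self-ratings of procrastination on a 1-7 scale.
intervention = [2, 3, 3, 4, 3, 2, 3]
control = [4, 5, 4, 4, 6, 5, 4]

print(describe(intervention))
print(describe(control))
print(iqr_outliers(intervention), iqr_outliers(control))
```

Plotting the two groups, as the text suggests, would complement the numeric outlier check.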

You can also assess the reliability and validity of your data collection methods to indicate how consistently and accurately your methods actually measured what you wanted them to.

Quantitative research is often used to standardize data collection and generalize findings. Strengths of this approach include:

- Replication

Repeating the study is possible because of standardized data collection protocols and tangible definitions of abstract concepts.

- Direct comparisons of results

The study can be reproduced in other cultural settings, times or with different groups of participants. Results can be compared statistically.

- Large samples

Data from large samples can be processed and analyzed using reliable and consistent procedures through quantitative data analysis.

- Hypothesis testing

Using formalized and established hypothesis testing procedures means that you have to carefully consider and report your research variables, predictions, data collection and testing methods before coming to a conclusion.

Despite the benefits of quantitative research, it is sometimes inadequate in explaining complex research topics. Its limitations include:

- Superficiality

Using precise and restrictive operational definitions may inadequately represent complex concepts. For example, the concept of mood may be represented with just a number in quantitative research, but explained with elaboration in qualitative research.

- Narrow focus

Predetermined variables and measurement procedures can mean that you ignore other relevant observations.

- Structural bias

Despite standardized procedures, structural biases can still affect quantitative research. Missing data, imprecise measurements or inappropriate sampling methods are biases that can lead to the wrong conclusions.

- Lack of context

Quantitative research often uses unnatural settings like laboratories or fails to consider historical and cultural contexts that may affect data collection and results.


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

Operationalization means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioral avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalize the variables that you want to measure.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).
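One common way to quantify reliability is a test-retest correlation: the same people are measured twice under the same conditions and the two sets of scores are correlated. The sketch below computes a Pearson correlation by hand; the function name and scores are invented for illustration. A high correlation speaks only to consistency, not to validity.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return sxy / math.sqrt(sxx * syy)

# Invented scores from the same five participants on two occasions.
first_session = [12, 15, 9, 20, 17]
second_session = [13, 14, 10, 19, 18]
print(pearson_r(first_session, second_session))
```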

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.
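The idea of "how likely a pattern could have arisen by chance" can be illustrated with a permutation test, one of several possible procedures and not one the article prescribes. The sketch below shuffles group labels many times and counts how often the shuffled difference in means is at least as large as the observed one. The function name, data, and defaults are assumptions made for illustration.

```python
import math
import random

def permutation_p_value(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the estimated probability of seeing a difference at least as
    large as the observed one if group labels were assigned at random.
    """
    def mean(values):
        # fsum is correctly rounded, so the result does not depend on order.
        return math.fsum(values) / len(values)

    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            hits += 1
    # Add one to numerator and denominator so the estimate is never zero.
    return (hits + 1) / (n_resamples + 1)

# Invented procrastination ratings for two groups.
intervention = [2, 3, 3, 4, 3, 2, 3, 2]
control = [4, 5, 4, 4, 6, 5, 4, 5]
print(permutation_p_value(intervention, control))
```

A small p-value means the observed difference would rarely arise from random labeling alone; it does not by itself establish a causal effect.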


Bhandari, P. (2023, June 22). What Is Quantitative Research? | Definition, Uses & Methods. Scribbr. Retrieved August 21, 2024, from https://www.scribbr.com/methodology/quantitative-research/



Organizing Your Social Sciences Research Paper

- Quantitative Methods


Quantitative methods emphasize objective measurements and the statistical, mathematical, or numerical analysis of data collected through polls, questionnaires, and surveys, or by manipulating pre-existing statistical data using computational techniques. Quantitative research focuses on gathering numerical data and generalizing it across groups of people or to explain a particular phenomenon.

Babbie, Earl R. The Practice of Social Research. 12th ed. Belmont, CA: Wadsworth Cengage, 2010; Muijs, Daniel. Doing Quantitative Research in Education with SPSS. 2nd edition. London: SAGE Publications, 2010.


Characteristics of Quantitative Research

Your goal in conducting a quantitative research study is to determine the relationship between one thing [an independent variable] and another [a dependent or outcome variable] within a population. Quantitative research designs are either descriptive [subjects usually measured once] or experimental [subjects measured before and after a treatment]. A descriptive study establishes only associations between variables; an experimental study establishes causality.

Quantitative research deals in numbers, logic, and an objective stance. Quantitative research focuses on numeric and unchanging data and detailed, convergent reasoning rather than divergent reasoning [i.e., the generation of a variety of ideas about a research problem in a spontaneous, free-flowing manner].

Its main characteristics are:

- The data is usually gathered using structured research instruments.

- The results are based on larger sample sizes that are representative of the population.

- The research study can usually be replicated or repeated, given its high reliability.

- The researcher has a clearly defined research question to which objective answers are sought.

- All aspects of the study are carefully designed before data is collected.

- Data are in the form of numbers and statistics, often arranged in tables, charts, figures, or other non-textual forms.

- The project can be used to generalize concepts more widely, predict future results, or investigate causal relationships.

- The researcher uses tools, such as questionnaires or computer software, to collect numerical data.

The overarching aim of a quantitative research study is to classify features, count them, and construct statistical models in an attempt to explain what is observed.

Things to keep in mind when reporting the results of a study using quantitative methods:

- Explain the data collected and their statistical treatment as well as all relevant results in relation to the research problem you are investigating. Interpretation of results is not appropriate in this section.

- Report unanticipated events that occurred during your data collection. Explain how the actual analysis differs from the planned analysis. Explain your handling of missing data and why any missing data does not undermine the validity of your analysis.

- Explain the techniques you used to "clean" your data set.

- Choose a minimally sufficient statistical procedure; provide a rationale for its use and a reference for it. Specify any computer programs used.

- Describe the assumptions for each procedure and the steps you took to ensure that they were not violated.

- When using inferential statistics, provide the descriptive statistics, confidence intervals, and sample sizes for each variable as well as the value of the test statistic, its direction, the degrees of freedom, and the significance level [report the actual p value].

- Avoid inferring causality, particularly in nonrandomized designs or without further experimentation.

- Use tables to provide exact values; use figures to convey global effects. Keep figures small in size; include graphic representations of confidence intervals whenever possible.

- Always tell the reader what to look for in tables and figures.
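As a small illustration of the reporting items above, the following Python sketch gathers the sample size, mean, standard deviation, and a confidence interval for one variable. The function name and data are invented. The interval uses a normal approximation, which is an assumption made to stay within the standard library; for small samples a t-based interval is wider and usually more appropriate.

```python
import math
import statistics

def summarize(values, confidence=0.95):
    """Return n, mean, sample SD, and a normal-approximation confidence interval."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    # Two-sided critical value from the standard normal distribution.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sd / math.sqrt(n)
    return {"n": n, "mean": mean, "sd": sd,
            "ci": (mean - half_width, mean + half_width)}

# Invented scores for a single variable.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
report = summarize(scores)
print(f"n = {report['n']}, M = {report['mean']:.2f}, SD = {report['sd']:.2f}, "
      f"95% CI [{report['ci'][0]:.2f}, {report['ci'][1]:.2f}]")
```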

NOTE: When using pre-existing statistical data gathered and made available by anyone other than yourself [e.g., government agency], you still must report on the methods that were used to gather the data and describe any missing data that exists and, if there is any, provide a clear explanation why the missing data does not undermine the validity of your final analysis.

Babbie, Earl R. The Practice of Social Research. 12th ed. Belmont, CA: Wadsworth Cengage, 2010; Brians, Craig Leonard et al. Empirical Political Analysis: Quantitative and Qualitative Research Methods. 8th ed. Boston, MA: Longman, 2011; McNabb, David E. Research Methods in Public Administration and Nonprofit Management: Quantitative and Qualitative Approaches. 2nd ed. Armonk, NY: M.E. Sharpe, 2008; Quantitative Research Methods. Writing@CSU. Colorado State University; Singh, Kultar. Quantitative Social Research Methods. Los Angeles, CA: Sage, 2007.

Basic Research Design for Quantitative Studies

Before designing a quantitative research study, you must decide whether it will be descriptive or experimental because this will dictate how you gather, analyze, and interpret the results. A descriptive study is governed by the following rules: subjects are generally measured once; the intention is to only establish associations between variables; and, the study may include a sample population of hundreds or thousands of subjects to ensure that a valid estimate of a generalized relationship between variables has been obtained. An experimental design includes subjects measured before and after a particular treatment, the sample population may be very small and purposefully chosen, and it is intended to establish causality between variables.

Introduction

The introduction to a quantitative study is usually written in the present tense and from the third person point of view. It covers the following information:

- Identifies the research problem -- as with any academic study, you must state clearly and concisely the research problem being investigated.

- Reviews the literature -- review scholarship on the topic, synthesizing key themes and, if necessary, noting studies that have used similar methods of inquiry and analysis. Note where key gaps exist and how your study helps to fill these gaps or clarifies existing knowledge.

- Describes the theoretical framework -- provide an outline of the theory or hypothesis underpinning your study. If necessary, define unfamiliar or complex terms, concepts, or ideas and provide the appropriate background information to place the research problem in proper context [e.g., historical, cultural, economic, etc.].

Methodology

The methods section of a quantitative study should describe how each objective of your study will be achieved. Be sure to provide enough detail to enable the reader to make an informed assessment of the methods being used to obtain results associated with the research problem. The methods section should be presented in the past tense.

- Study population and sampling -- where did the data come from; how robust is it; note where gaps exist or what was excluded. Note the procedures used for their selection.

- Data collection -- describe the tools and methods used to collect information and identify the variables being measured; describe the methods used to obtain the data; and, note if the data was pre-existing [i.e., government data] or you gathered it yourself. If you gathered it yourself, describe what type of instrument you used and why. Note that no data set is perfect; describe any limitations in methods of gathering data.

- Data analysis -- describe the procedures for processing and analyzing the data. If appropriate, describe the specific instruments of analysis used to study each research objective, including mathematical techniques and the type of computer software used to manipulate the data.

Results

The findings of your study should be written objectively and in a succinct and precise format. In quantitative studies, it is common to use graphs, tables, charts, and other non-textual elements to help the reader understand the data. Make sure that non-textual elements do not stand in isolation from the text but are used to supplement the overall description of the results and to help clarify key points being made.

- Statistical analysis -- how did you analyze the data? What were the key findings from the data? The findings should be presented in a logical, sequential order. Describe but do not interpret these trends or negative results; save that for the discussion section. The results should be presented in the past tense.

Discussion

Discussions should be analytic, logical, and comprehensive. The discussion should meld together your findings in relation to those identified in the literature review, and place them within the context of the theoretical framework underpinning the study. The discussion should be presented in the present tense.

- Interpretation of results -- reiterate the research problem being investigated and compare and contrast the findings with the research questions underlying the study. Did they affirm predicted outcomes or did the data refute them?

- Description of trends, comparison of groups, or relationships among variables -- describe any trends that emerged from your analysis and explain all unanticipated and statistically insignificant findings.

- Discussion of implications -- what is the meaning of your results? Highlight key findings based on the overall results and note findings that you believe are important. How have the results helped fill gaps in understanding the research problem?

- Limitations -- describe any limitations or unavoidable bias in your study and, if necessary, note why these limitations did not inhibit effective interpretation of the results.

Conclusion

End your study by summarizing the topic and providing a final comment and assessment of the study.

- Summary of findings -- synthesize the answers to your research questions. Do not report any statistical data here; just provide a narrative summary of the key findings and describe what was learned that you did not know before conducting the study.

- Recommendations -- if appropriate to the aim of the assignment, tie key findings with policy recommendations or actions to be taken in practice.

- Future research -- note the need for future research linked to your study’s limitations or to any remaining gaps in the literature that were not addressed in your study.

Black, Thomas R. Doing Quantitative Research in the Social Sciences: An Integrated Approach to Research Design, Measurement and Statistics. London: Sage, 1999; Gay, L. R. and Peter Airasian. Educational Research: Competencies for Analysis and Applications. 7th edition. Upper Saddle River, NJ: Merrill Prentice Hall, 2003; Hector, Anestine. An Overview of Quantitative Research in Composition and TESOL. Department of English, Indiana University of Pennsylvania; Hopkins, Will G. “Quantitative Research Design.” Sportscience 4, 1 (2000); "A Strategy for Writing Up Research Results. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper." Department of Biology. Bates College; Nenty, H. Johnson. "Writing a Quantitative Research Thesis." International Journal of Educational Science 1 (2009): 19-32; Ouyang, Ronghua (John). Basic Inquiry of Quantitative Research. Kennesaw State University.

Strengths of Using Quantitative Methods

Quantitative researchers try to recognize and isolate specific variables contained within the study framework, seek correlation, relationships and causality, and attempt to control the environment in which the data is collected to avoid the risk of variables, other than the one being studied, accounting for the relationships identified.

Among the specific strengths of using quantitative methods to study social science research problems:

- Allows for a broader study, involving a greater number of subjects, and enhancing the generalization of the results;

- Allows for greater objectivity and accuracy of results. Generally, quantitative methods are designed to provide summaries of data that support generalizations about the phenomenon under study. In order to accomplish this, quantitative research usually involves few variables and many cases, and employs prescribed procedures to ensure validity and reliability;

- Applying well established standards means that the research can be replicated, and then analyzed and compared with similar studies;

- You can summarize vast sources of information and make comparisons across categories and over time; and,

- Personal bias can be avoided by keeping a 'distance' from participating subjects and using accepted computational techniques.

Babbie, Earl R. The Practice of Social Research. 12th ed. Belmont, CA: Wadsworth Cengage, 2010; Brians, Craig Leonard et al. Empirical Political Analysis: Quantitative and Qualitative Research Methods. 8th ed. Boston, MA: Longman, 2011; McNabb, David E. Research Methods in Public Administration and Nonprofit Management: Quantitative and Qualitative Approaches. 2nd ed. Armonk, NY: M.E. Sharpe, 2008; Singh, Kultar. Quantitative Social Research Methods. Los Angeles, CA: Sage, 2007.

Limitations of Using Quantitative Methods

Quantitative methods presume to have an objective approach to studying research problems, where data is controlled and measured, to address the accumulation of facts, and to determine the causes of behavior. As a consequence, the results of quantitative research may be statistically significant but are often humanly insignificant.

Some specific limitations associated with using quantitative methods to study research problems in the social sciences include:

- Quantitative data is more efficient and able to test hypotheses, but may miss contextual detail;

- Uses a static and rigid approach and so employs an inflexible process of discovery;

- The development of standard questions by researchers can lead to "structural bias" and false representation, where the data actually reflects the view of the researcher instead of the participating subject;

- Results provide less detail on behavior, attitudes, and motivation;

- Researcher may collect a much narrower and sometimes superficial dataset;

- Results are limited as they provide numerical descriptions rather than detailed narrative and generally provide less elaborate accounts of human perception;

- The research is often carried out in an unnatural, artificial environment so that a level of control can be applied to the exercise. This level of control might not normally be in place in the real world thus yielding "laboratory results" as opposed to "real world results"; and,

- Preset answers will not necessarily reflect how people really feel about a subject and, in some cases, might just be the closest match to the preconceived hypothesis.

Research Tip

Finding Examples of How to Apply Different Types of Research Methods

SAGE publications is a major publisher of studies about how to design and conduct research in the social and behavioral sciences. Their SAGE Research Methods Online and Cases database includes contents from books, articles, encyclopedias, handbooks, and videos covering social science research design and methods including the complete Little Green Book Series of Quantitative Applications in the Social Sciences and the Little Blue Book Series of Qualitative Research techniques. The database also includes case studies outlining the research methods used in real research projects. This is an excellent source for finding definitions of key terms and descriptions of research design and practice, techniques of data gathering, analysis, and reporting, and information about theories of research [e.g., grounded theory]. The database covers both qualitative and quantitative research methods as well as mixed methods approaches to conducting research.

SAGE Research Methods Online and Cases

- Last Updated: Aug 21, 2024 8:54 AM

- URL: https://libguides.usc.edu/writingguide

Qualitative Analysis of a Novel Numerical Method for Solving Non-linear Ordinary Differential Equations

- Original Paper

- Published: 17 April 2024

- Volume 10 , article number 99 , ( 2024 )

- Sonali Kaushik 1 &

- Rajesh Kumar 2

The dynamics of innumerable real-world phenomena are represented by non-linear ordinary differential equations (NODEs). There is a growing trend toward solving these equations with accurate, easy-to-implement methods. The goal of this research work is to create a numerical method for first-order NODEs (FNODEs) by coupling the well-known trapezoidal method with a newly developed semi-analytical technique called the Laplace optimized decomposition method (LODM). The novelty of this coupling lies in the improvement in the scheme's order of accuracy as the number of terms in the series solution increases. The article discusses the qualitative behavior of the new method, i.e., its consistency, stability, and convergence. Several numerical test cases of non-linear differential equations are considered to validate the findings.
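As a point of reference for the trapezoidal component named in the abstract, here is a minimal Python sketch of the plain implicit trapezoidal rule for y' = f(t, y). It does not implement the LODM coupling described by the authors. The function name `trapezoidal_solve`, the fixed-point iteration used to resolve each implicit step, and the test problem y' = -y^2, y(0) = 1 (exact solution 1/(1 + t)) are all assumptions chosen for illustration.

```python
from typing import Callable, List, Tuple


def trapezoidal_solve(
    f: Callable[[float, float], float],
    t0: float,
    y0: float,
    t_end: float,
    n_steps: int,
    tol: float = 1e-12,
    max_iter: int = 50,
) -> Tuple[List[float], List[float]]:
    """Integrate y' = f(t, y) with the implicit trapezoidal rule.

    Each step solves y_new = y + h/2 * (f(t, y) + f(t_new, y_new))
    by fixed-point iteration, started from an explicit Euler predictor.
    This is an illustrative choice; it assumes h is small enough for the
    iteration to contract (non-stiff problems).
    """
    h = (t_end - t0) / n_steps
    ts, ys = [t0], [y0]
    y = y0
    for i in range(n_steps):
        t = t0 + i * h
        t_new = t0 + (i + 1) * h
        f_old = f(t, y)
        y_new = y + h * f_old  # explicit Euler predictor
        for _ in range(max_iter):
            y_next = y + 0.5 * h * (f_old + f(t_new, y_new))
            converged = abs(y_next - y_new) < tol
            y_new = y_next
            if converged:
                break
        y = y_new
        ts.append(t_new)
        ys.append(y)
    return ts, ys


if __name__ == "__main__":
    # Hypothetical test problem: y' = -y^2, y(0) = 1, exact y(t) = 1 / (1 + t).
    for n in (50, 100, 200):
        _, ys = trapezoidal_solve(lambda t, y: -y * y, 0.0, 1.0, 1.0, n)
        print(n, abs(ys[-1] - 0.5))
```

Halving the step size should reduce the printed error by roughly a factor of four, which is the second-order behavior expected of the trapezoidal rule on a smooth problem.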

Data availability.

Enquiries about data availability should be directed to the authors.

Rajesh Kumar wishes to thank Science and Engineering Research Board (SERB), Department of Science and Technology (DST), India, for the funding through the project MTR/2021/000866.

Author information

Authors and affiliations.

Department of Mathematics, School of Advanced Sciences, VIT-AP University, Amaravati, 522237, India

Sonali Kaushik

Department of Mathematics, BITS Pilani, Pilani Campus, Pilani, Rajasthan, 333031, India

Rajesh Kumar

Corresponding author

Correspondence to Sonali Kaushik .

Ethics declarations

Competing interests.

The authors have not disclosed any competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Kaushik, S., Kumar, R. Qualitative Analysis of a Novel Numerical Method for Solving Non-linear Ordinary Differential Equations. Int. J. Appl. Comput. Math 10 , 99 (2024). https://doi.org/10.1007/s40819-024-01735-3

Accepted : 27 March 2024

Published : 17 April 2024

DOI : https://doi.org/10.1007/s40819-024-01735-3

- First-order non-linear differential equations

- Trapezoidal method

- Semi-analytical method

- Laplace transform

- Laplace optimized decomposition method

- Consistency

- Convergence

Mathematics Subject Classification

- Primary 45K05

- Secondary 34A34

Quantitative Research – Methods, Types and Analysis

Quantitative Research

Quantitative research is a type of research that collects and analyzes numerical data to test hypotheses and answer research questions. This research typically involves a large sample size and uses statistical analysis to make inferences about a population based on the data collected. It often involves the use of surveys, experiments, or other structured data collection methods to gather quantitative data.

Quantitative Research Methods

Quantitative Research Methods are as follows:

Descriptive Research Design

Descriptive research design is used to describe the characteristics of a population or phenomenon being studied. This research method is used to answer the questions of what, where, when, and how. Descriptive research designs use a variety of methods such as observation, case studies, and surveys to collect data. The data is then analyzed using statistical tools to identify patterns and relationships.

Correlational Research Design

Correlational research design is used to investigate the relationship between two or more variables. Researchers use correlational research to determine whether a relationship exists between variables and to what extent they are related. This research method involves collecting data from a sample and analyzing it using statistical tools such as correlation coefficients.
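To make the idea of a correlation coefficient concrete, the following sketch computes the Pearson correlation coefficient from two paired samples using only the standard library. The function name `pearson_r` and the sample data (hours studied and exam scores) are hypothetical and only illustrate the calculation.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Return the Pearson correlation coefficient of two paired samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical data, invented for illustration only.
    hours_studied = [1, 2, 3, 4, 5, 6]
    exam_score = [52, 55, 61, 60, 68, 71]
    print(round(pearson_r(hours_studied, exam_score), 3))
```

The coefficient ranges from -1 to 1. Values near zero indicate no linear relationship, and the sign gives the direction of the relationship, not its cause.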

Quasi-experimental Research Design

Quasi-experimental research design is used to investigate cause-and-effect relationships between variables. It resembles experimental research design, but participants are not randomly assigned to conditions and the researcher lacks full control over the independent variable. Researchers use quasi-experimental research designs when it is not feasible or ethical to manipulate the independent variable.

Experimental Research Design

Experimental research design is used to investigate cause-and-effect relationships between variables. This research method involves manipulating the independent variable and observing the effects on the dependent variable. Researchers use experimental research designs to test hypotheses and establish cause-and-effect relationships.

Survey Research

Survey research involves collecting data from a sample of individuals using a standardized questionnaire. This research method is used to gather information on attitudes, beliefs, and behaviors of individuals. Researchers use survey research to collect data quickly and efficiently from a large sample size. Survey research can be conducted through various methods such as online, phone, mail, or in-person interviews.

Quantitative Research Analysis Methods

Here are some commonly used quantitative research analysis methods:

Statistical Analysis

Statistical analysis is the most common quantitative research analysis method. It involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis can be used to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

Regression Analysis

Regression analysis is a statistical technique used to analyze the relationship between one dependent variable and one or more independent variables. Researchers use regression analysis to identify and quantify the impact of independent variables on the dependent variable.
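For the simplest case of one independent variable, the relationship can be quantified with an ordinary least-squares line. The sketch below is a minimal standard-library version. The function name `fit_line` and the data are assumptions for illustration; real analyses would normally use a statistics package and report uncertainty as well.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


if __name__ == "__main__":
    # Hypothetical data: advertising spend (x) against units sold (y).
    spend = [10, 20, 30, 40, 50]
    units = [25, 41, 62, 79, 103]
    slope, intercept = fit_line(spend, units)
    print(f"units = {slope:.2f} * spend + {intercept:.2f}")
```

The slope estimates how much the dependent variable changes per unit change in the independent variable, which is the "impact" the paragraph above refers to.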

Factor Analysis

Factor analysis is a statistical technique used to identify underlying factors that explain the correlations among a set of variables. Researchers use factor analysis to reduce a large number of variables to a smaller set of factors that capture the most important information.

Structural Equation Modeling

Structural equation modeling is a statistical technique used to test complex relationships between variables. It involves specifying a model that includes both observed and unobserved variables, and then using statistical methods to test the fit of the model to the data.

Time Series Analysis

Time series analysis is a statistical technique used to analyze data that is collected over time. It involves identifying patterns and trends in the data, as well as any seasonal or cyclical variations.
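One common first step in separating a trend from short-term variation is a moving average. The sketch below is a trailing moving average in plain Python. The function name `moving_average` and the monthly figures are hypothetical, and a moving average is only one of many time series techniques.

```python
from typing import List, Sequence


def moving_average(series: Sequence[float], window: int) -> List[float]:
    """Return the trailing moving average of `series` over `window` points.

    The result has len(series) - window + 1 entries, one for each full window.
    """
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    running = sum(series[:window])
    averages = [running / window]
    for i in range(window, len(series)):
        # Slide the window: add the newest point, drop the oldest.
        running += series[i] - series[i - window]
        averages.append(running / window)
    return averages


if __name__ == "__main__":
    # Hypothetical monthly sales with a repeating three-month pattern.
    sales = [12, 18, 15, 14, 20, 17, 16, 22, 19]
    print(moving_average(sales, window=3))
```

Choosing the window equal to the length of the seasonal cycle smooths out the seasonal pattern and leaves the underlying trend more visible.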

Multilevel Modeling

Multilevel modeling is a statistical technique used to analyze data that is nested within multiple levels. For example, researchers might use multilevel modeling to analyze data that is collected from individuals who are nested within groups, such as students nested within schools.

Applications of Quantitative Research

Quantitative research has many applications across a wide range of fields. Here are some common examples:

- Market Research: Quantitative research is used extensively in market research to understand consumer behavior, preferences, and trends. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform marketing strategies, product development, and pricing decisions.

- Health Research: Quantitative research is used in health research to study the effectiveness of medical treatments, identify risk factors for diseases, and track health outcomes over time. Researchers use statistical methods to analyze data from clinical trials, surveys, and other sources to inform medical practice and policy.

- Social Science Research: Quantitative research is used in social science research to study human behavior, attitudes, and social structures. Researchers use surveys, experiments, and other quantitative methods to collect data that can inform social policies, educational programs, and community interventions.

- Education Research: Quantitative research is used in education research to study the effectiveness of teaching methods, assess student learning outcomes, and identify factors that influence student success. Researchers use experimental and quasi-experimental designs, as well as surveys and other quantitative methods, to collect and analyze data.

- Environmental Research: Quantitative research is used in environmental research to study the impact of human activities on the environment, assess the effectiveness of conservation strategies, and identify ways to reduce environmental risks. Researchers use statistical methods to analyze data from field studies, experiments, and other sources.

Characteristics of Quantitative Research

Here are some key characteristics of quantitative research:

- Numerical data: Quantitative research involves collecting numerical data through standardized methods such as surveys, experiments, and observational studies. This data is analyzed using statistical methods to identify patterns and relationships.

- Large sample size: Quantitative research often involves collecting data from a large sample of individuals or groups in order to increase the reliability and generalizability of the findings.

- Objective approach: Quantitative research aims to be objective and impartial in its approach, focusing on the collection and analysis of data rather than personal beliefs, opinions, or experiences.

- Control over variables: Quantitative research often involves manipulating variables to test hypotheses and establish cause-and-effect relationships. Researchers aim to control for extraneous variables that may impact the results.

- Replicable: Quantitative research aims to be replicable, meaning that other researchers should be able to conduct similar studies and obtain similar results using the same methods.

- Statistical analysis: Quantitative research involves using statistical tools and techniques to analyze the numerical data collected during the research process. Statistical analysis allows researchers to identify patterns, trends, and relationships between variables, and to test hypotheses and theories.

- Generalizability: Quantitative research aims to produce findings that can be generalized to larger populations beyond the specific sample studied. This is achieved through the use of random sampling methods and statistical inference.

Examples of Quantitative Research

Here are some examples of quantitative research in different fields:

- Market Research: A company conducts a survey of 1000 consumers to determine their brand awareness and preferences. The data is analyzed using statistical methods to identify trends and patterns that can inform marketing strategies.

- Health Research: A researcher conducts a randomized controlled trial to test the effectiveness of a new drug for treating a particular medical condition. The study involves collecting data from a large sample of patients and analyzing the results using statistical methods.

- Social Science Research: A sociologist conducts a survey of 500 people to study attitudes toward immigration in a particular country. The data is analyzed using statistical methods to identify factors that influence these attitudes.

- Education Research: A researcher conducts an experiment to compare the effectiveness of two different teaching methods for improving student learning outcomes. The study involves randomly assigning students to different groups and collecting data on their performance on standardized tests.

- Environmental Research: A team of researchers conducts a study to investigate the impact of climate change on the distribution and abundance of a particular species of plant or animal. The study involves collecting data on environmental factors and population sizes over time and analyzing the results using statistical methods.

- Psychology: A researcher conducts a survey of 500 college students to investigate the relationship between social media use and mental health. The data is analyzed using statistical methods to identify correlations that may suggest, but cannot by themselves establish, causal relationships.

- Political Science: A team of researchers conducts a study to investigate voter behavior during an election. They use survey methods to collect data on voting patterns, demographics, and political attitudes, and analyze the results using statistical methods.

How to Conduct Quantitative Research

Here is a general overview of how to conduct quantitative research:

- Develop a research question: The first step in conducting quantitative research is to develop a clear and specific research question. This question should be based on a gap in existing knowledge, and should be answerable using quantitative methods.

- Develop a research design: Once you have a research question, you will need to develop a research design. This involves deciding on the appropriate methods to collect data, such as surveys, experiments, or observational studies. You will also need to determine the appropriate sample size, data collection instruments, and data analysis techniques.

- Collect data: The next step is to collect data. This may involve administering surveys or questionnaires, conducting experiments, or gathering data from existing sources. It is important to use standardized methods to ensure that the data is reliable and valid.

- Analyze data: Once the data has been collected, it is time to analyze it. This involves using statistical methods to identify patterns, trends, and relationships between variables. Common statistical techniques include correlation analysis, regression analysis, and hypothesis testing.

- Interpret results: After analyzing the data, you will need to interpret the results. This involves identifying the key findings, determining their significance, and drawing conclusions based on the data.

- Communicate findings: Finally, you will need to communicate your findings. This may involve writing a research report, presenting at a conference, or publishing in a peer-reviewed journal. It is important to clearly communicate the research question, methods, results, and conclusions to ensure that others can understand and replicate your research.
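The "analyze data" step above mentions hypothesis testing. As one small, self-contained illustration, the sketch below runs an exact two-sided permutation test for a difference in group means. The choice of a permutation test, the function name `permutation_p_value`, and the scores are assumptions for illustration; the source does not prescribe a particular test, and the exhaustive enumeration used here is only practical for small samples.

```python
from itertools import combinations
from typing import Sequence


def permutation_p_value(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Exact two-sided permutation test for a difference in means.

    Enumerates every way of relabelling the pooled observations into groups
    of the original sizes and returns the fraction of relabellings whose
    absolute mean difference is at least as large as the observed one.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a == 0 or n_b == 0:
        raise ValueError("both groups must be non-empty")
    pooled = list(group_a) + list(group_b)
    total = sum(pooled)

    def mean_diff(sum_a: float) -> float:
        return sum_a / n_a - (total - sum_a) / n_b

    observed = abs(mean_diff(sum(group_a)))
    n_splits = 0
    n_extreme = 0
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        split_sum = sum(pooled[i] for i in indices)
        # Small tolerance guards against floating-point ties.
        if abs(mean_diff(split_sum)) >= observed - 1e-12:
            n_extreme += 1
    return n_extreme / n_splits


if __name__ == "__main__":
    # Hypothetical test scores under two teaching methods.
    method_a = [78, 85, 90, 88, 84]
    method_b = [72, 75, 80, 79, 70]
    print(permutation_p_value(method_a, method_b))
```

A small p-value means that a difference this large would rarely arise if the group labels were irrelevant. Interpreting it still requires the judgment described in the "interpret results" step.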

When to Use Quantitative Research

Here are some situations when quantitative research can be appropriate:

- To test a hypothesis: Quantitative research is often used to test a hypothesis or a theory. It involves collecting numerical data and using statistical analysis to determine if the data supports or refutes the hypothesis.

- To generalize findings: If you want to generalize the findings of your study to a larger population, quantitative research can be useful. This is because it allows you to collect numerical data from a representative sample of the population and use statistical analysis to make inferences about the population as a whole.

- To measure relationships between variables: If you want to measure the relationship between two or more variables, such as the relationship between age and income, or between education level and job satisfaction, quantitative research can be useful. It allows you to collect numerical data on both variables and use statistical analysis to determine the strength and direction of the relationship.

- To identify patterns or trends: Quantitative research can be useful for identifying patterns or trends in data. For example, you can use quantitative research to identify trends in consumer behavior or to identify patterns in stock market data.

- To quantify attitudes or opinions: If you want to measure attitudes or opinions on a particular topic, quantitative research can be useful. It allows you to collect numerical data using surveys or questionnaires and analyze the data using statistical methods to determine the prevalence of certain attitudes or opinions.

Purpose of Quantitative Research

The purpose of quantitative research is to systematically investigate and measure the relationships between variables or phenomena using numerical data and statistical analysis. The main objectives of quantitative research include:

- Description: To provide a detailed and accurate description of a particular phenomenon or population.

- Explanation: To explain the reasons for the occurrence of a particular phenomenon, such as identifying the factors that influence a behavior or attitude.

- Prediction: To predict future trends or behaviors based on past patterns and relationships between variables.

- Control: To identify the best strategies for controlling or influencing a particular outcome or behavior.

Quantitative research is used in many different fields, including social sciences, business, engineering, and health sciences. It can be used to investigate a wide range of phenomena, from human behavior and attitudes to physical and biological processes. The purpose of quantitative research is to provide reliable and valid data that can be used to inform decision-making and improve understanding of the world around us.

Advantages of Quantitative Research

There are several advantages of quantitative research, including:

- Objectivity: Quantitative research is based on objective data and statistical analysis, which reduces the potential for bias or subjectivity in the research process.

- Reproducibility: Because quantitative research involves standardized methods and measurements, it is more likely to be reproducible and reliable.

- Generalizability: Quantitative research allows for generalizations to be made about a population based on a representative sample, which can inform decision-making and policy development.

- Precision: Quantitative research allows for precise measurement and analysis of data, which can provide a more accurate understanding of phenomena and relationships between variables.

- Efficiency: Quantitative research can be conducted relatively quickly and efficiently, especially when compared to qualitative research, which may involve lengthy data collection and analysis.

- Large sample sizes: Quantitative research can accommodate large sample sizes, which can increase the representativeness and generalizability of the results.

Limitations of Quantitative Research

There are several limitations of quantitative research, including:

- Limited understanding of context: Quantitative research typically focuses on numerical data and statistical analysis, which may not provide a comprehensive understanding of the context or underlying factors that influence a phenomenon.

- Simplification of complex phenomena: Quantitative research often involves simplifying complex phenomena into measurable variables, which may not capture the full complexity of the phenomenon being studied.

- Potential for researcher bias: Although quantitative research aims to be objective, there is still the potential for researcher bias in areas such as sampling, data collection, and data analysis.

- Limited ability to explore new ideas: Quantitative research is often based on pre-determined research questions and hypotheses, which may limit the ability to explore new ideas or unexpected findings.

- Limited ability to capture subjective experiences: Quantitative research is typically focused on objective data and may not capture the subjective experiences of individuals or groups being studied.

- Ethical concerns: Quantitative research may raise ethical concerns, such as invasion of privacy or the potential for harm to participants.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

CE 536 Introduction to Numerical Methods for Civil Engineers

3 Credit Hours

This is an entry-level graduate course intended to introduce widely used numerical methods through application to several civil and environmental engineering problems. The emphasis will be on the breadth of topics and applications; however, to the extent possible, the mathematical theory behind the numerical methods will also be presented. The course is expected to lay a foundation for students beginning to engage in research projects that involve numerical methods. Students will use MATLAB as a tool in the course. Experience with MATLAB is not required. The course will be taught in an interactive setting in a computer-equipped classroom.

Prerequisite

For graduate students in civil engineering, there are no formal prerequisites or co-requisites. Undergraduate students should have a GPA of 3.0 or better and Junior standing. Contact the instructor for clarification regarding these requirements.

Course Objectives

Upon completion of the course, the students will be able to:

- Use MATLAB as a programming language for engineering problem solving.

- Describe and apply basic numerical methods for civil engineering problem solving.

- Develop algorithms and programs for solving civil engineering problems involving: (i) multi-dimensional integration, (ii) multivariate differentiation, (iii) ordinary differential equations, (iv) partial differential equations, (v) optimization, and (vi) curve fitting or inverse problems.

Course Requirements

Typically 7 homework assignments, 1 mini-project report, and a take-home final exam. A weighted average grade will be calculated as follows:

- Assignments – 40%

- Online Quizzes – 10%

- Mini-Project – 15%

- Final exam – 35%

A +/- grading system will be used. MATLAB software is required (see below under computer requirements).
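The weighted average described above can be sketched as follows. The weights are taken from the list in this section. The function name `weighted_average`, the dictionary keys, the 0 to 100 score scale, and the sample scores are assumptions for illustration; the mapping from a numeric average to a +/- letter grade is not given in the source and is not modeled here.

```python
from typing import Dict

# Component weights from the course requirements (they sum to 1.0).
WEIGHTS: Dict[str, float] = {
    "assignments": 0.40,
    "online_quizzes": 0.10,
    "mini_project": 0.15,
    "final_exam": 0.35,
}


def weighted_average(scores: Dict[str, float],
                     weights: Dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of component scores.

    `scores` maps each component name to a score on a common scale
    (assumed here to be 0 to 100).
    """
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


if __name__ == "__main__":
    # Hypothetical student scores.
    example = {
        "assignments": 90.0,
        "online_quizzes": 80.0,
        "mini_project": 70.0,
        "final_exam": 60.0,
    }
    print(weighted_average(example))
```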

Course Organization

| Module | Topic | Lectures (75 min each) |
|---|---|---|
| 1.1 | General MATLAB commands and features | Lectures 1 – 2 |
| 1.2 | Simple civil engineering examples | Lecture 3 |
| 2.1 | Numerical integration techniques and civil engineering applications | Lectures 4 – 6 |
| 2.2 | Numerical differentiation with applications in groundwater flow | Lecture 7 |
| 3.1 | Runge-Kutta methods with applications in structural and environmental engineering | Lectures 8 – 9 |
| 3.2 | Stiff systems with applications in environmental engineering | Lecture 10 |
| 3.3 | Boundary value problems in structural and environmental engineering | Lectures 11 – 12 |
| 4.1 | Introduction to finite difference methods with civil engineering examples | Lectures 13 – 15 |
| 4.2 | Linear and non-linear system solution (direct and iterative methods) | Lecture 16 |
| 4.3 | Applications in groundwater flow and transport | Lecture 17 |
| 4.4 | MATLAB PDE Toolbox | Lecture 18 |
| 4.5 | Introduction to finite element methods with application to groundwater flow | Lecture 19 |
| 5.1 | Direct methods with applications in environmental and structural engineering | Lecture 20 |
| 5.2 | Gradient based methods with applications in water resources engineering | Lectures 21 – 23 |
| 5.3 | Heuristic methods with applications in structural and water resources engineering | Lectures 24 – 25 |
| 5.4 | Design applications in structural and environmental engineering | Lecture 26 |
| 6.1 | Linear and non-linear regression | Lecture 27 |
| 6.2 | Direct methods | Lecture 28 |
| 6.3 | Indirect methods | Lecture 28 |
| 6.4 | Applications in civil engineering | Lecture 29 |

Recommended Textbook

Hardcover book:

Chapra, S.C., and R.P. Canale. Numerical Methods for Engineers , 7th edition, McGraw Hill, 2015. (Optional)

Updated: 1/11/2021


Course info.

- Dr. Benjamin Seibold

Departments

- Mathematics

As Taught In

- Algorithms and Data Structures

- Numerical Simulation

- Differential Equations

- Mathematical Analysis


Numerical methods for partial differential equations, project description and schedule.

The course project counts for 50% of the overall course grade.

The project consists of

- a midterm report (20% of the project grade)

- a final report (60% of the project grade), and

- a presentation (20% of the project grade)

Throughout the term, each participant works on an extended problem related to the content of the course. You are allowed to choose a project that is related to your thesis; however, the following restrictions apply:

- The project must focus on computational aspects related to the lecture topics.

- It is not permitted to “reuse” a project from another course or from thesis work. Your 18.336 project must cover specific questions and goals, which must not be identical to the questions and goals of your thesis. For instance, you can pick a specific computational aspect of your work and investigate it more deeply than you would in your thesis work.

Of course, you can also choose a topic unrelated to your other work. For instance, you can consult the lecturer for interesting problems related to his research.

Any course project must be agreed on by, and is under the supervision of, the lecturer.

The following deadlines apply:

- By Ses #4: Submit project proposal

- By Ses #13: Submit midterm report on project

- By Ses #25: Submit final project report

- Last two sessions: Give short talk on project

Project Proposal

Your project proposal should include the following information:

- Project title

- Project background: Does it relate to your work in another field (e.g. your thesis)? If yes, briefly outline the questions and goals of your work in the other field.

- Questions and goals: Briefly describe the questions you wish to investigate in your project. What are your expectations?

- Plan: Which language do you plan to program in? Do you intend to use special software? Does your project work relate to the work of other people at MIT?

Project Abstracts

Project presentations take place on a Saturday, and are aimed at a general scientific audience, with focus on the numerical solution of physically arising equations.

The following are the abstracts of the projects chosen by the students during the Spring ’09 term.

Numerical Solution for Poisson Equation with Variable Coefficients

I will present a numerical technique to obtain the solution of a quasi-3-dimensional potential distribution due to a point source of current located on the surface of a semi-infinite medium containing an arbitrary 2-dimensional distribution of conductivity. I will show how two different boundary conditions (Neumann and mixed), chosen to simulate the “infinitely distant” edges of the lower half-space, affect the accuracy of the solution. The solutions on uniform and non-uniform grids will be compared. Some figures showing the potential field over simple structures (such as layered media, a vertical contact, and a square body) will be presented.
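To make the idea of a variable-coefficient Poisson problem concrete, here is a minimal one-dimensional sketch. It is not the quasi-3-dimensional method from the abstract. It assumes the model problem -(σ(x) u')' = f(x) on [0, 1] with Dirichlet values at both ends, samples the conductivity σ at cell faces, and solves the resulting tridiagonal system with the Thomas algorithm. The function name `solve_variable_poisson` and the two-layer conductivity are hypothetical.

```python
def solve_variable_poisson(sigma, f, n, u_left=0.0, u_right=0.0):
    """Solve -(sigma(x) u')' = f(x) on [0, 1] with Dirichlet ends.

    Uses n >= 2 uniform cells, second-order centered differences, and sigma
    sampled at cell faces. Returns (x, u) as lists of n + 1 nodal values.
    """
    h = 1.0 / n
    x = [i * h for i in range(n + 1)]
    # Conductivity at the faces x_{i+1/2}, i = 0..n-1.
    s = [sigma(x[i] + 0.5 * h) for i in range(n)]

    m = n - 1  # number of interior unknowns u_1..u_{n-1}
    lower = [-s[i - 1] for i in range(1, n)]   # couples u_i to u_{i-1}
    diag = [s[i - 1] + s[i] for i in range(1, n)]
    upper = [-s[i] for i in range(1, n)]       # couples u_i to u_{i+1}
    rhs = [h * h * f(x[i]) for i in range(1, n)]
    rhs[0] += s[0] * u_left         # known boundary values move to the RHS
    rhs[-1] += s[n - 1] * u_right

    # Thomas algorithm: forward elimination, then back substitution.
    for k in range(1, m):
        w = lower[k] / diag[k - 1]
        diag[k] -= w * upper[k - 1]
        rhs[k] -= w * rhs[k - 1]
    u_int = [0.0] * m
    u_int[-1] = rhs[-1] / diag[-1]
    for k in range(m - 2, -1, -1):
        u_int[k] = (rhs[k] - upper[k] * u_int[k + 1]) / diag[k]

    return x, [u_left] + u_int + [u_right]


def two_layer(x):
    """Hypothetical layered medium: conductivity jumps at x = 0.5."""
    return 1.0 if x < 0.5 else 4.0


x, u = solve_variable_poisson(two_layer, lambda x: 0.0, n=10, u_right=1.0)
print(u[5])  # potential at the layer interface
```

Sampling σ at the faces keeps the discrete flux σ u' continuous across a conductivity jump that falls on a grid node, which is why this form is often preferred for layered media.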

Finite Difference Elastic Wave Modeling with an Irregular Free Surface

The objective of my project is to model elastic wave propagation in a homogeneous medium with surface topography. Surface topography and the weathered zone (i.e., heterogeneity near the earth’s surface) have great effects on seismic surveys, as the recorded seismograms can be severely contaminated by scattering, diffractions, and ground roll that exhibit non-ray wave propagation phenomena. I implemented a 2-D staggered finite difference approximation of first-order PDEs that is second-order accurate in time and space (with a velocity-stress formulation), and I describe the results of the finite-difference scheme on various irregular free surface models.
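The velocity-stress formulation mentioned above can be illustrated with a much simpler one-dimensional analogue. The sketch below is not the 2-D scheme with an irregular free surface from the abstract. It assumes the system ρ v_t = τ_x, τ_t = μ v_x, places velocity at grid nodes and stress at the half-points between them, and holds the stress at zero at both ends as a flat stress-free boundary. The function name `simulate_1d_wave` and all parameter values are hypothetical.

```python
import math


def simulate_1d_wave(n=200, steps=150, rho=1.0, mu=1.0, dx=1.0, cfl=0.5):
    """Leapfrog velocity-stress scheme on a 1-D staggered grid.

    Velocity v lives at nodes 0..n-1; stress tau lives at the n + 1
    half-points between and just outside them. tau is held at zero at
    both ends, which models stress-free (free-surface) boundaries.
    """
    c = math.sqrt(mu / rho)   # wave speed
    dt = cfl * dx / c         # time step from the CFL number (needs cfl <= 1)
    # Initial condition: Gaussian velocity pulse in the middle, zero stress.
    v = [math.exp(-((i - n / 2) / 5.0) ** 2) for i in range(n)]
    tau = [0.0] * (n + 1)
    for _ in range(steps):
        # Update stress at interior half-points from the velocity gradient.
        for j in range(1, n):
            tau[j] += dt * mu * (v[j] - v[j - 1]) / dx
        # Update velocity at nodes from the stress gradient.
        for i in range(n):
            v[i] += dt * (tau[i + 1] - tau[i]) / (rho * dx)
    return v, tau


v, tau = simulate_1d_wave()
print(max(v), sum(v))
```

Because the end stresses stay at zero, the velocity updates telescope and the sum of `v` (total momentum, up to a constant factor) is preserved by the scheme, which gives a quick sanity check on an implementation.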

Simulation of the Onset of Baroclinic Instability

Many features of atmospheric dynamics can be demonstrated with a simple table-top rotating tank experiment. Here a numerical simulation of the incompressible Navier-Stokes equations and the heat equation is applied to a flow in a rotating annular tank. A finite difference method is used in an axisymmetric 2-D rotating reference frame. At low rotational rates a stable vertical velocity gradient is observed in the zonal flow, corresponding to the tropical Hadley cell flow. At high rotational rates a baroclinic instability is observed, corresponding to atmospheric dynamics seen in the upper latitudes.

Analysis of the Time-Dependent Coupling of Optical-Thermal Response of Laser-Irradiated Tissue Using Finite Difference Methods

Over the years, lasers have been extensively used for detection and treatment of diseases and abnormal health conditions, including cancerous tissues and arterial plaques. While such detection and treatment has numerous advantages over conventional techniques, including superior accuracy and unparalleled control, it is imperative to optimize light dosimetry, treatment parameters, and conditions of delivery a priori so that thermal damage due to laser irradiation is limited to the tissue area (volume) under consideration. In this work, a non-linear finite-difference program is developed to simulate the dynamic evolution of tissue coagulation, considering the dependence of the optical properties and blood perfusion on the instantaneous temperature and damage index. Using this coupled optical diffusion-bioheat equation model, we observe that significant changes arise as a function of the dynamic behavior of the optical properties and the perfusion. Specifically, the model reveals that the heat penetration in laser-irradiated tissue is much less than what would be expected from a static treatment of the biophysical parameters. Finally, we provide preliminary results on the optical-thermal response of the tissue to a pulsed laser.

Modeling and Simulation of Self-Sustained Combustions in Reactive Multi-Layers of Energetic Materials

It is known that forced mixing of energetic materials such as nickel and aluminum results in highly exothermic reactions. The reaction, herein called combustion, is initiated by thermal impulses and propagates through the materials in a self-sustained manner. In this project, self-sustained combustion and its propagation in a reactive Ni-Al nanolaminate is first modeled mathematically as two coupled partial differential equations for heat and atomic diffusion. Then, the coupled governing equations are discretized in time and space and solved numerically using finite difference schemes on the Ni-Al nanolaminate domain, with appropriate initial and boundary conditions for the temperature and atomic composition. The simulation results for heat and mass diffusion with combustion will be presented and compared with several experimental results. The effects of simulation conditions such as the initial temperature profile, ambient temperature, and Ni-Al premixed thickness will also be discussed. Finally, important features of the governing equations and the physical and chemical assumptions of the models will be addressed.

Simulation of an Airbag for Landing of Re-Entry Vehicles

This presentation will focus on the implementation of the Immersed Boundary Method to approximate the dynamics of an impacting airbag intended for use in Earth re-entry vehicle applications. Specifically, the numerical approach and its related implementation issues will be discussed, followed by a discussion and analysis of the obtained results.

Immersed Boundary Method (IBM) for the Navier Stokes Equations

Studying certain medical conditions, such as hypertension, requires accurate simulation of the blood flow in complex-shaped elastic arteries. In this work we present a 2-D fluid-structure interaction solver to accurately simulate blood flow in arteries with bends and bifurcations. Such blood flow is mathematically modeled using the incompressible Navier-Stokes equations. The arterial wall is modeled using a linear elasticity model. Our solver is based on the immersed boundary method (IBM). The numerical accuracy of our solver stems from using a staggered grid for the spatial discretization of the incompressible Navier-Stokes equations. The computational efficiency of our method stems from using Chorin’s projection method for the time stepping, coupled with the fast Fourier transform (FFT) to solve the intermediate Poisson equations. We have validated our results against reference results obtained from MERCK Research Laboratories for a straight vessel of length 10 cm and diameter 2 cm. Our results for pressure, flow, and radius variations are within 5% of those obtained from MERCK.

Finite Difference Modeling of Seismic Wave Propagation in Fractured Media (2D)

It is critical to detect and characterize fracture networks for production from oil reservoirs. Much effort has gone into the development of methods for identifying and understanding fracture systems from seismic data. Forward modeling of seismic wave propagation in fractured media plays a very important role in this field. In equivalent medium theory, a background medium plus fractures is equivalent to an anisotropic medium. In this paper, I will use this theory together with a staggered finite difference technique to model seismic wave propagation in fractured media.

Glacial Ice Streams

Two models of one-dimensional longitudinal glacial ice flow as a function of depth are presented. The system dynamics are governed by Stokes’ flow acting under the influence of a velocity-dependent viscosity coefficient. The domain of computation is discretized on a staggered one-dimensional grid to enhance the stability of the methods. The first model uses a centered finite difference scheme to treat the problem as a diffusion problem with spatially and temporally varying coefficients. The second model combines centered and upwinded finite difference schemes to treat the problem as an advection problem. These methods are compared for a range of basal boundary conditions representative of those found in large glacial ice sheets, with prescribed velocities, shear stresses, or shear stress limits.
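The upwinded differencing mentioned above can be sketched on the simplest advection model, u_t + a u_x = 0 with constant speed a. This is only an illustration of the technique, not the ice-flow model from the abstract. The function name `upwind_step`, the periodic boundaries, and the sample data are assumptions made to keep the example short.

```python
def upwind_step(u, a, dt, dx):
    """Advance u_t + a u_x = 0 by one explicit first-order upwind step.

    Periodic boundaries are an assumption made to keep the sketch short.
    """
    n = len(u)
    nu = a * dt / dx  # Courant number; stability needs |nu| <= 1
    new = [0.0] * n
    for i in range(n):
        if a >= 0:
            # Information travels left to right: difference toward i - 1.
            new[i] = u[i] - nu * (u[i] - u[i - 1])
        else:
            # Information travels right to left: difference toward i + 1.
            new[i] = u[i] - nu * (u[(i + 1) % n] - u[i])
    return new


# Hypothetical data: a single bump advected to the right for 10 steps.
u = [0.0] * 20
u[5] = 1.0
for _ in range(10):
    u = upwind_step(u, a=1.0, dt=0.5, dx=1.0)
print(max(u), sum(u))
```

The one-sided stencil is chosen from the sign of `a`, so each update is a weighted average of neighboring values whenever the Courant number is at most one in magnitude. That keeps the solution free of new oscillations, at the cost of the numerical smearing visible in the reduced peak.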

Solver for Axisymmetric Low Speed Inviscid Flow