10 Best Literature Review Tools for Researchers


Boost your research game with these Best Literature Review Tools for Researchers! Uncover hidden gems, organize your findings, and ace your next research paper!

Conducting literature reviews poses challenges for researchers due to the overwhelming volume of information available and the lack of efficient methods to manage and analyze it.

Researchers struggle to identify key sources, extract relevant information, and maintain accuracy while manually conducting literature reviews. This leads to inefficiency, errors, and difficulty in identifying gaps or trends in existing literature.

Advancements in technology have resulted in a variety of literature review tools. These tools streamline the process, offering features like automated searching, filtering, citation management, and research data extraction. They save time, improve accuracy, and provide valuable insights for researchers.

In this article, we present a curated list of the 10 best literature review tools, empowering researchers to make informed choices and revolutionize their systematic literature review process.


Top 10 Literature Review Tools for Researchers: In A Nutshell (2023)

#1. Semantic Scholar – A free, AI-powered research tool for scientific literature

Semantic Scholar is a cutting-edge literature review tool that researchers rely on for its comprehensive access to academic publications. With its advanced AI algorithms and extensive database, it simplifies the discovery of relevant research papers.

By employing semantic analysis, users can explore scholarly articles based on context and meaning, making it a go-to resource for scholars across disciplines.

Additionally, Semantic Scholar offers personalized recommendations and alerts, ensuring researchers stay updated with the latest developments. However, users should be cautious of potential limitations.

Not all scholarly content may be indexed, and occasional false positives or inaccurate associations can occur. Furthermore, the tool primarily focuses on computer science and related fields, potentially limiting coverage in other disciplines.

Researchers should be mindful of these considerations and supplement Semantic Scholar with other reputable resources for a comprehensive literature review. Despite these caveats, Semantic Scholar remains a valuable tool for streamlining research and staying informed.

#2. Elicit – Research assistant using language models like GPT-3

Elicit is a game-changing literature review tool that has gained popularity among researchers worldwide. With its user-friendly interface and extensive database of scholarly articles, it streamlines the research process, saving time and effort.

The tool employs advanced algorithms to provide personalized recommendations, ensuring researchers discover the most relevant studies for their field. Elicit also promotes collaboration by enabling users to create shared folders and annotate articles.

However, users should be cautious when using Elicit. It is important to verify the credibility and accuracy of the sources found through the tool, as the database encompasses a wide range of publications.

Additionally, occasional glitches in the search function have been reported, leading to incomplete or inaccurate results. While Elicit offers tremendous benefits, researchers should remain vigilant and cross-reference information to ensure a comprehensive literature review.

#3. Scite.Ai – Your personal research assistant

Scite.Ai is a popular literature review tool that revolutionizes the research process for scholars. With its innovative citation analysis feature, researchers can evaluate the credibility and impact of scientific articles, making informed decisions about their inclusion in their own work.

By assessing the context in which citations are used, Scite.Ai ensures that the sources selected are reliable and of high quality, enabling researchers to establish a strong foundation for their research.

However, while Scite.Ai offers numerous advantages, there are a few aspects to be cautious about. As with any data-driven tool, occasional errors or inaccuracies may arise, necessitating researchers to cross-reference and verify results with other reputable sources.

Moreover, Scite.Ai’s coverage may be limited in certain subject areas and languages, with a possibility of missing relevant studies, especially in niche fields or non-English publications.

Therefore, researchers should supplement the use of Scite.Ai with additional resources to ensure comprehensive literature coverage and avoid any potential gaps in their research.

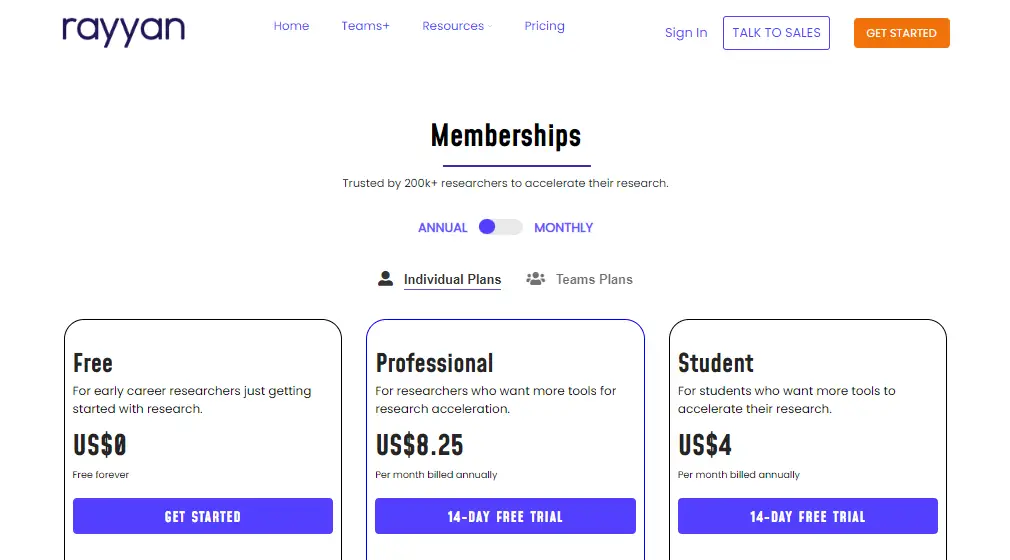

Scite.Ai offers the following paid plans:

- Monthly Plan: $20

- Yearly Plan: $12

#4. DistillerSR – Literature Review Software

DistillerSR is a powerful literature review tool trusted by researchers for its user-friendly interface and robust features. With its advanced search capabilities, researchers can quickly find relevant studies from multiple databases, saving time and effort.

The tool offers comprehensive screening and data extraction functionalities, streamlining the review process and improving the reliability of findings. Real-time collaboration features also facilitate seamless teamwork among researchers.

While DistillerSR offers numerous advantages, there are a few considerations. Users should invest time in understanding the tool’s features and functionalities to maximize its potential. Additionally, the pricing structure may be a factor for individual researchers or small teams with limited budgets.

Despite occasional technical glitches reported by some users, the developers actively address these issues through updates and improvements, ensuring a better user experience.

Overall, DistillerSR empowers researchers to navigate the vast sea of information, enhancing the quality and efficiency of literature reviews while fostering collaboration among research teams.

#5. Rayyan – AI Powered Tool for Systematic Literature Reviews

Rayyan is a powerful literature review tool that simplifies the research process for scholars and academics. With its user-friendly interface and efficient management features, Rayyan is highly regarded by researchers worldwide.

It allows users to import and organize large volumes of scholarly articles, making it easier to identify relevant studies for their research projects. The tool also facilitates seamless collaboration among team members, enhancing productivity and streamlining the research workflow.

However, it’s important to be aware of a few aspects. The free version of Rayyan has limitations, and upgrading to a premium subscription may be necessary for additional functionalities.

Users should also be mindful of occasional technical glitches and compatibility issues, promptly reporting any problems. Despite these considerations, Rayyan remains a valuable asset for researchers, providing an effective solution for literature review tasks.

Rayyan offers both free and paid plans:

- Professional: $8.25/month

- Student: $4/month

- Pro Team: $8.25/month

- Team+: $24.99/month

#6. Consensus – Use AI to find you answers in scientific research

Consensus is a cutting-edge literature review tool that has become a go-to choice for researchers worldwide. Its intuitive interface and powerful capabilities make it a preferred tool for navigating and analyzing scholarly articles.

With Consensus, researchers can save significant time by efficiently organizing and accessing relevant research material. Researchers choose Consensus for several reasons.

Its advanced search algorithms and filters help researchers sift through vast amounts of information, ensuring they focus on the most relevant articles. By streamlining the literature review process, Consensus allows researchers to extract valuable insights and accelerate their research progress.

However, there are a few factors to watch out for when using Consensus. As with any automated tool, researchers should exercise caution and independently verify the accuracy and relevance of the generated results. Complex or niche topics may present challenges, resulting in limited search results. Researchers should also supplement Consensus with manual searches to ensure comprehensive coverage of the literature.

Overall, Consensus is a valuable resource for researchers seeking to optimize their literature review process. By leveraging its features alongside critical thinking and manual searches, researchers can enhance the efficiency and effectiveness of their work, advancing their research endeavors to new heights.

Consensus offers both free and paid plans:

- Premium: $9.99/month

- Enterprise: Custom

#7. RAx – AI-powered reading assistant

RAx is a revolutionary literature review tool that has transformed the research process for scholars worldwide. With its user-friendly interface and advanced features, it offers a vast database of academic publications across various disciplines, providing access to relevant and up-to-date literature.

Using advanced algorithms and machine learning, RAx delivers personalized recommendations, saving researchers time and effort in their literature search.

However, researchers should be cautious of potential biases in the recommendation system and supplement their search with manual verification to ensure a comprehensive review.

Additionally, occasional inaccuracies in metadata have been reported, making it essential for users to cross-reference information with reliable sources. Despite these considerations, RAx remains an invaluable tool for enhancing the efficiency and quality of literature reviews.

RAx offers both free and paid plans. As of July 2023, the paid plans are discounted by 50%:

- Premium: $3/month (regular price $6/month)

- Premium with Copilot: $4/month (regular price $8/month)

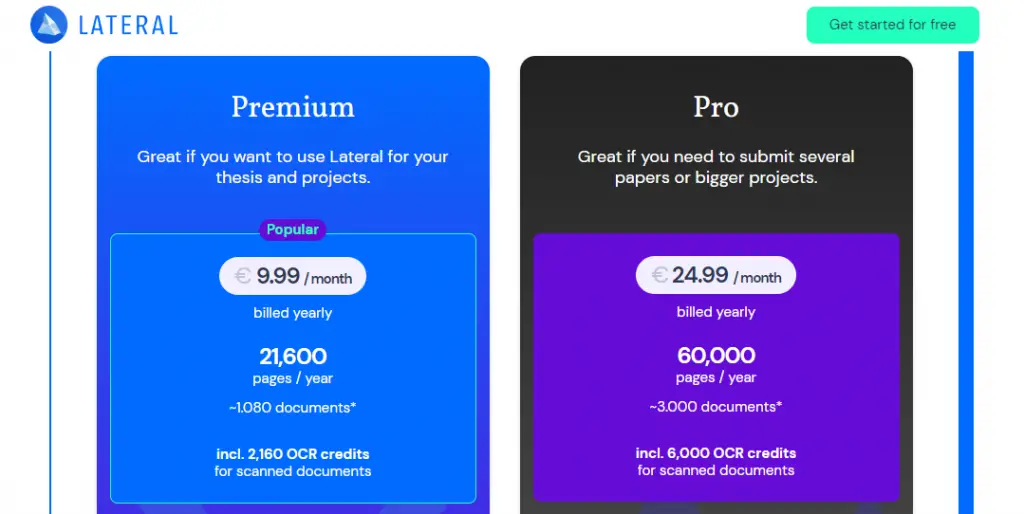

#8. Lateral – Advance your research with AI

“Lateral” is a revolutionary literature review tool trusted by researchers worldwide. With its user-friendly interface and powerful search capabilities, it simplifies the process of gathering and analyzing scholarly articles.

By leveraging advanced algorithms and machine learning, Lateral saves researchers precious time by retrieving relevant articles and uncovering new connections between them, fostering interdisciplinary exploration.

While Lateral provides numerous benefits, users should exercise caution. It is advisable to cross-reference its findings with other sources to ensure a comprehensive review.

Additionally, researchers must be mindful of potential biases introduced by the tool’s algorithms and should critically evaluate and interpret the results.

Despite these considerations, Lateral remains an indispensable resource, empowering researchers to delve deeper into their fields of study and make valuable contributions to the academic community.

Lateral offers both free and paid plans:

- Premium: $10.98

- Pro: $27.46

#9. Iris AI – Introducing the researcher workspace

Iris AI is an innovative literature review tool that has transformed the research process for academics and scholars. With its advanced artificial intelligence capabilities, Iris AI offers a seamless and efficient way to navigate through a vast array of academic papers and publications.

Researchers are drawn to this tool because it saves valuable time by automating the tedious task of literature review and provides comprehensive coverage across multiple disciplines.

Its intelligent recommendation system suggests related articles, enabling researchers to discover hidden connections and broaden their knowledge base. However, caution should be exercised while using Iris AI.

While the tool excels at surfacing relevant papers, researchers should independently evaluate the quality and validity of the sources to ensure the reliability of their work.

It’s important to note that Iris AI may occasionally miss niche or lesser-known publications, necessitating a supplementary search using traditional methods.

Additionally, being an algorithm-based tool, there is a possibility of false positives or missed relevant articles due to the inherent limitations of automated text analysis. Nevertheless, Iris AI remains an invaluable asset for researchers, enhancing the quality and efficiency of their research endeavors.

Iris AI offers different pricing plans to cater to various user needs:

- Basic: Free

- Premium: Monthly ($82.41), Quarterly ($222.49), and Annual ($791.07)

#10. Scholarcy – Summarize your literature through AI

Scholarcy is a powerful literature review tool that helps researchers streamline their work. By employing advanced algorithms and natural language processing, it efficiently analyzes and summarizes academic papers, saving researchers valuable time.

Scholarcy’s ability to extract key information and generate concise summaries makes it an attractive option for scholars looking to quickly grasp the main concepts and findings of multiple papers.

However, it is important to exercise caution when relying solely on Scholarcy. While it provides a useful starting point, engaging with the original research papers is crucial to ensure a comprehensive understanding.

Scholarcy’s automated summarization may not capture the nuanced interpretations or contextual information presented in the full text.

Researchers should also be aware that certain types of documents, particularly those with heavy mathematical or technical content, may pose challenges for the tool.

Despite these considerations, Scholarcy remains a valuable resource for researchers seeking to enhance their literature review process and improve overall efficiency.

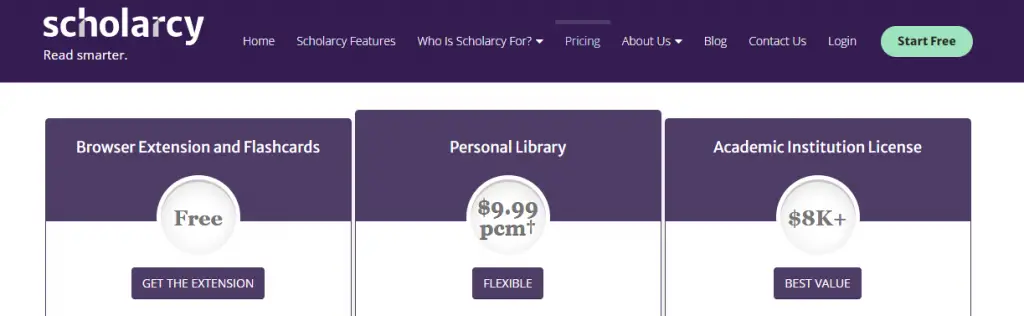

Scholarcy offers the following pricing plans:

- Browser Extension and Flashcards: Free

- Personal Library: $9.99

- Academic Institution License: $8K+

Final Thoughts

In conclusion, conducting a comprehensive literature review is a crucial aspect of any research project, and the availability of reliable and efficient tools can greatly facilitate this process for researchers. This article has explored the top 10 literature review tools that have gained popularity among researchers.

Moreover, the rise of AI-powered tools like Iris AI and Scite.Ai promises to revolutionize the literature review process by automating various tasks and enhancing research efficiency.

Ultimately, the choice of literature review tool depends on individual preferences and research needs, but the tools presented in this article serve as valuable resources to enhance the quality and productivity of research endeavors.

Researchers are encouraged to explore and utilize these tools to stay at the forefront of knowledge in their respective fields and contribute to the advancement of science and academia.

Q1. What are literature review tools for researchers?

Literature review tools for researchers are software or online platforms designed to assist researchers in efficiently conducting literature reviews. These tools help researchers find, organize, analyze, and synthesize relevant academic papers and other sources of information.

Q2. What criteria should researchers consider when choosing literature review tools?

When choosing literature review tools, researchers should consider factors such as the tool’s search capabilities, database coverage, user interface, collaboration features, citation management, annotation and highlighting options, integration with reference management software, and data extraction capabilities.

It’s also essential to consider the tool’s accessibility, cost, and technical support.

Q3. Are there any literature review tools specifically designed for systematic reviews or meta-analyses?

Yes, there are literature review tools that cater specifically to systematic reviews and meta-analyses, which involve a rigorous and structured approach to reviewing existing literature. These tools often provide features tailored to the specific needs of these methodologies, such as:

- Screening and eligibility assessment: Systematic review tools typically offer functionalities for screening and assessing the eligibility of studies based on predefined inclusion and exclusion criteria. This streamlines the process of selecting relevant studies for analysis.

- Data extraction and quality assessment: These tools often include templates and forms to facilitate data extraction from selected studies. Additionally, they may provide features for assessing the quality and risk of bias in individual studies.

- Meta-analysis support: Some literature review tools include statistical analysis features that assist in conducting meta-analyses. These features can help calculate effect sizes, perform statistical tests, and generate forest plots or other visual representations of the meta-analytic results.

- Reporting assistance: Many tools provide templates or frameworks for generating systematic review reports, ensuring compliance with established guidelines such as PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses).
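To make the meta-analysis support mentioned above more concrete, here is a minimal sketch of one common pooling method, the inverse-variance fixed-effect model. It is not taken from any tool in this list; the function name `pooled_fixed_effect` and the three example studies are made up for illustration, and real tools may use random-effects models or other estimators instead.

```python
import math


def pooled_fixed_effect(effects, std_errors):
    """Pool per-study effect sizes with inverse-variance (fixed-effect) weights.

    Returns the pooled effect, its standard error, and a 95% confidence interval.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")

    # Each study is weighted by the inverse of its variance (1 / SE^2),
    # so more precise studies contribute more to the pooled estimate.
    weights = [1.0 / (se ** 2) for se in std_errors]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)

    # 1.96 is the normal-approximation multiplier for a 95% interval.
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical effect sizes and standard errors, for illustration only.
example_effects = [0.30, 0.10, 0.25]
example_std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se, ci = pooled_fixed_effect(example_effects, example_std_errors)
print(f"pooled effect = {pooled:.3f}, SE = {pooled_se:.3f}, "
      f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

The pooled estimate and its interval are the numbers a forest plot would typically show in its summary row.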

Q4. Can literature review tools help with organizing and annotating collected references?

Yes, literature review tools often come equipped with features to help researchers organize and annotate collected references. Some common functionalities include:

- Reference management: These tools enable researchers to import references from various sources, such as databases or PDF files, and store them in a central library. They typically allow you to create folders or tags to organize references based on themes or categories.

- Annotation capabilities: Many tools provide options for adding annotations, comments, or tags to individual references or specific sections of research articles. This helps researchers keep track of important information, highlight key findings, or note potential connections between different sources.

- Full-text search: Literature review tools often offer full-text search functionality, allowing you to search within the content of imported articles or documents. This can be particularly useful when you need to locate specific information or keywords across multiple references.

- Integration with citation managers: Some literature review tools integrate with popular citation managers like Zotero, Mendeley, or EndNote, allowing seamless transfer of references and annotations between platforms.

By leveraging these features, researchers can streamline the organization and annotation of their collected references, making it easier to retrieve relevant information during the literature review process.
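As a rough illustration of how tagging, annotation, and full-text search fit together, the sketch below models a tiny in-memory reference library. The `Reference` and `Library` classes and the sample entries are hypothetical and do not correspond to the API of Zotero, Mendeley, EndNote, or any tool named in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Reference:
    """One imported reference with its text, tags, and free-form notes."""
    title: str
    full_text: str
    tags: set = field(default_factory=set)
    notes: list = field(default_factory=list)


class Library:
    """A central store of references, searchable by tag or keyword."""

    def __init__(self):
        self.references = []

    def add(self, ref):
        self.references.append(ref)
        return ref

    def by_tag(self, tag):
        # Tags play the role of folders or themes.
        return [r for r in self.references if tag in r.tags]

    def search(self, keyword):
        # Case-insensitive search over titles and full text.
        needle = keyword.lower()
        return [
            r for r in self.references
            if needle in r.title.lower() or needle in r.full_text.lower()
        ]


# Hypothetical entries, for illustration only.
library = Library()
first = library.add(Reference(
    title="Screening methods in systematic reviews",
    full_text="We compare manual and assisted screening of abstracts.",
    tags={"methods", "screening"},
))
library.add(Reference(
    title="Reporting guidelines overview",
    full_text="This overview covers checklists used when reporting reviews.",
    tags={"reporting"},
))
first.notes.append("Check how disagreements between screeners were resolved.")

print([r.title for r in library.by_tag("methods")])
print([r.title for r in library.search("checklists")])
```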


We maintain and update science journals and scientific metrics. Scientific metrics data are aggregated from publicly available sources. Please note that we do NOT publish research papers on this platform. We do NOT accept any manuscript.

2012-2024 © scijournal.org

Literature reviews

Evaluate the information you have found

When conducting your searches you may find many references that will not be suitable to use in your literature review.

- Skim through the resource. A quick read through the table of contents, the introductory paragraph or the abstract should indicate whether you need to read further or whether you can immediately discard the result.

- Evaluate the quality and reliability of the references you find. Our evaluating information page outlines what you need to consider when evaluating the books, journal articles, news and websites you find to ensure they are suitable for use in your literature review.

Critique the literature

Critiquing the literature involves looking at the strengths and weaknesses of the paper and evaluating the statements made by the author/s.

Books and resources on reading critically

- CASP Checklists Critical appraisal tools designed to be used when reading research. Includes tools for Qualitative studies, Systematic Reviews, Randomised Controlled Trials, Cohort Studies, Case Control Studies, Economic Evaluations, Diagnostic Studies and Clinical Prediction Rule.

- How to read critically - business and management From Postgraduate research in business - the aim of this chapter is to show you how to become a critical reader of typical academic literature in business and management.

- Learning to read critically in language and literacy Aims to develop skills of critical analysis and research design. It presents a series of examples of `best practice' in language and literacy education research.

- Critical appraisal in health sciences See tools for critically appraising health science research.


- Last Updated: Nov 20, 2024 5:10 PM

- URL: https://guides.library.uq.edu.au/research-techniques/literature-reviews


Critical Appraisal Toolkit (CAT) for assessing multiple types of evidence


Correspondence: [email protected]

Contributor: Jennifer Kruse, Public Health Agency of Canada – Conceptualization and project administration

Series information

Scientific writing

Collection date 2017 Sep 7.

Healthcare professionals are often expected to critically appraise research evidence in order to make recommendations for practice and policy development. Here we describe the Critical Appraisal Toolkit (CAT) currently used by the Public Health Agency of Canada. The CAT consists of: algorithms to identify the type of study design, three separate tools (for appraisal of analytic studies, descriptive studies and literature reviews), additional tools to support the appraisal process, and guidance for summarizing evidence and drawing conclusions about a body of evidence. Although the toolkit was created to assist in the development of national guidelines related to infection prevention and control, clinicians, policy makers and students can use it to guide appraisal of any health-related quantitative research. Participants in a pilot test completed a total of 101 critical appraisals and found that the CAT was user-friendly and helpful in the process of critical appraisal. Feedback from participants of the pilot test of the CAT informed further revisions prior to its release. The CAT adds to the arsenal of available tools and can be especially useful when the best available evidence comes from non-clinical trials and/or studies with weak designs, where other tools may not be easily applied.

Introduction

Healthcare professionals, researchers and policy makers are often involved in the development of public health policies or guidelines. The most valuable guidelines provide a basis for evidence-based practice with recommendations informed by current, high quality, peer-reviewed scientific evidence. To develop such guidelines, the available evidence needs to be critically appraised so that recommendations are based on the "best" evidence. The ability to critically appraise research is, therefore, an essential skill for health professionals serving on policy or guideline development working groups.

Our experience with working groups developing infection prevention and control guidelines was that the review of relevant evidence went smoothly while the critical appraisal of the evidence posed multiple challenges. Three main issues were identified. First, although working group members had strong expertise in infection prevention and control or other areas relevant to the guideline topic, they had varying levels of expertise in research methods and critical appraisal. Second, the critical appraisal tools in use at that time focused largely on analytic studies (such as clinical trials), and lacked definitions of key terms and explanations of the criteria used in the studies. As a result, the use of these tools by working group members did not result in a consistent way of appraising analytic studies nor did the tools provide a means of assessing descriptive studies and literature reviews. Third, working group members wanted guidance on how to progress from assessing individual studies to summarizing and assessing a body of evidence.

To address these issues, a review of existing critical appraisal tools was conducted. We found that the majority of existing tools were design-specific, with considerable variability in intent, criteria appraised and construction of the tools. A systematic review reported that fewer than half of existing tools had guidelines for use of the tool and interpretation of the items ( 1 ). The well-known Grading of Recommendations Assessment, Development and Evaluation (GRADE) rating-of-evidence system and the Cochrane tools for assessing risk of bias were considered for use ( 2 ), ( 3 ). At that time, the guidelines for using these tools were limited, and the tools were focused primarily on randomized controlled trials (RCTs) and non-randomized controlled trials. For feasibility and ethical reasons, clinical trials are rarely available for many common infection prevention and control issues ( 4 ), ( 5 ). For example, there are no intervention studies assessing which practice restrictions, if any, should be placed on healthcare workers who are infected with a blood-borne pathogen. Working group members were concerned that if they used GRADE, all evidence would be rated as very low or as low quality or certainty, and recommendations based on this evidence may be interpreted as unconvincing, even if they were based on the best or only available evidence.

The team therefore decided to develop its own critical appraisal toolkit. A small working group was convened, led by an epidemiologist with expertise in research, methodology and critical appraisal, with the goal of developing tools to critically appraise studies informing infection prevention and control recommendations. This article provides an overview of the Critical Appraisal Toolkit (CAT). The full document, entitled Infection Prevention and Control Guidelines Critical Appraisal Tool Kit, is available online ( 6 ).

Following a review of existing critical appraisal tools, studies informing infection prevention and control guidelines that were in development were reviewed to identify the types of studies that would need to be appraised using the CAT. A preliminary draft of the CAT was used by various guideline development working groups and iterative revisions were made over a two year period. A pilot test of the CAT was then conducted which led to the final version ( 6 ).

The toolkit is set up to guide reviewers through three major phases in the critical appraisal of a body of evidence: appraisal of individual studies; summarizing the results of the appraisals; and appraisal of the body of evidence.

Tools for critically appraising individual studies

The first step in the critical appraisal of an individual study is to identify the study design; this can be surprisingly problematic, since many published research studies are complex. An algorithm was developed to help identify whether a study was an analytic study, a descriptive study or a literature review (see text box for definitions). It is critical to establish the design of the study first, as the criteria for assessment differ depending on the type of study.

Definitions of the types of studies that can be analyzed with the Critical Appraisal Toolkit*

Analytic study: A study designed to identify or measure effects of specific exposures, interventions or risk factors. This design employs the use of an appropriate comparison group to test epidemiologic hypotheses, thus attempting to identify associations or causal relationships.

Descriptive study: A study that describes characteristics of a condition in relation to particular factors or exposure of interest. This design often provides the first important clues about possible determinants of disease and is useful for the formulation of hypotheses that can be subsequently tested using an analytic design.

Literature review: A study that analyzes critical points of a published body of knowledge. This is done through summary, classification and comparison of prior studies. With the exception of meta-analyses, which statistically re-analyze pooled data from several studies, these studies are secondary sources and do not report any new or experimental work.

* Public Health Agency of Canada. Infection Prevention and Control Guidelines Critical Appraisal Tool Kit ( 6 )
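The three definitions above can be read as a simple decision rule. The sketch below is only a simplification of those definitions, not the CAT's actual algorithm (which is given in the full toolkit document); the function name `classify_study` and its two yes/no inputs are assumptions made for illustration.

```python
def classify_study(summarizes_prior_studies: bool,
                   measures_effect_of_exposure: bool) -> str:
    """Assign a study to one of the three broad CAT categories.

    Assumed simplification of the text-box definitions:
    - a secondary source that summarizes, classifies or compares prior
      studies (including meta-analyses) is a literature review;
    - a study designed to identify or measure the effect of an exposure,
      intervention or risk factor is an analytic study;
    - anything else is treated as a descriptive study.
    """
    if summarizes_prior_studies:
        return "literature review"
    if measures_effect_of_exposure:
        return "analytic study"
    return "descriptive study"


# A trial testing an intervention against a comparison group.
print(classify_study(summarizes_prior_studies=False,
                     measures_effect_of_exposure=True))

# A case series describing characteristics of a condition.
print(classify_study(summarizes_prior_studies=False,
                     measures_effect_of_exposure=False))
```

In practice the published algorithms use more decision points than this, which is why identifying the design of a complex study can be difficult.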

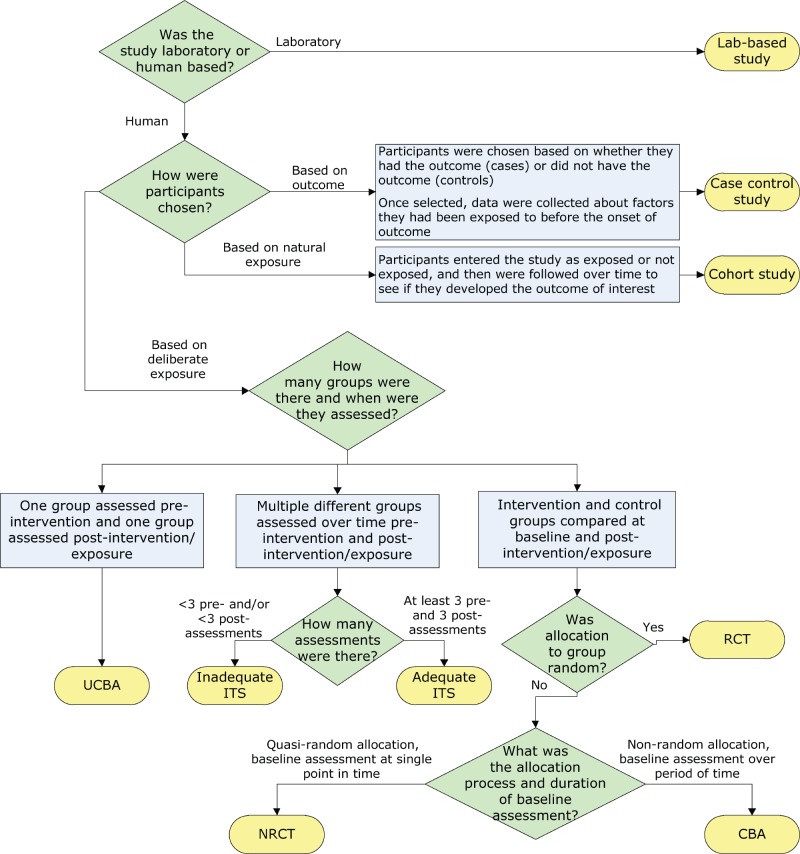

Separate algorithms were developed for analytic studies, descriptive studies and literature reviews to help reviewers identify specific designs within those categories. The algorithm below, for example, helps reviewers determine which study design was used within the analytic study category ( Figure 1 ). It is based on key decision points such as number of groups or allocation to group. The legends for the algorithms and supportive tools such as the glossary provide additional detail to further differentiate study designs, such as whether a cohort study was retrospective or prospective.

Figure 1. Algorithm for identifying the type of analytic study.

Abbreviations: CBA, controlled before-after; ITS, interrupted time series; NRCT, non-randomized controlled trial; RCT, randomized controlled trial; UCBA, uncontrolled before-after

Separate critical appraisal tools were developed for analytic studies, for descriptive studies and for literature reviews, with relevant criteria in each tool. For example, a summary of the items covered in the analytic study critical appraisal tool is shown in Table 1 . This tool is used to appraise trials, observational studies and laboratory-based experiments. A supportive tool for assessing statistical analysis was also provided that describes common statistical tests used in epidemiologic studies.

Table 1. Aspects appraised in analytic study critical appraisal tool.

The descriptive study critical appraisal tool assesses different aspects of sampling, data collection, statistical analysis, and ethical conduct. It is used to appraise cross-sectional studies, outbreak investigations, case series and case reports.

The literature review critical appraisal tool assesses the methodology, results and applicability of narrative reviews, systematic reviews and meta-analyses.

After appraisal of individual items in each type of study, each critical appraisal tool also contains instructions for drawing a conclusion about the overall quality of the evidence from a study, based on the per-item appraisal. Quality is rated as high, medium or low. While an RCT is a strong study design and a survey is a weak design, it is possible to have a poor quality RCT or a high quality survey. As a result, the quality of evidence from a study is distinguished from the strength of a study design when assessing the quality of the overall body of evidence. A definition of some terms used to evaluate evidence in the CAT is shown in Table 2 .

Table 2. Definition of terms used to evaluate evidence.

* Considered strong design if there are at least two control groups and two intervention groups. Considered moderate design if there is only one control and one intervention group

Tools for summarizing the evidence

The second phase in the critical appraisal process involves summarizing the results of the critical appraisal of individual studies. Reviewers are instructed to complete a template evidence summary table, with key details about each study and its ratings. Studies are listed in descending order of strength in the table. The table simplifies looking across all studies that make up the body of evidence informing a recommendation and allows for easy comparison of participants, sample size, methods, interventions, magnitude and consistency of results, outcome measures and individual study quality as determined by the critical appraisal. These evidence summary tables are reviewed by the working group to determine the rating for the quality of the overall body of evidence and to facilitate development of recommendations based on evidence.
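The ordering step described above can be sketched in a few lines of Python. This is only an illustration, not part of the toolkit: the `StudySummary` fields, the example studies, and the tie-break on study quality are assumptions made for the sketch, and the toolkit's own template defines the actual columns.

```python
from dataclasses import dataclass

# Assumed rank orderings for illustration; lower rank sorts first.
DESIGN_RANK = {"strong": 0, "moderate": 1, "weak": 2}
QUALITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class StudySummary:
    """One row of a hypothetical evidence summary table."""
    citation: str
    design: str
    design_strength: str  # "strong", "moderate" or "weak"
    quality: str          # "high", "medium" or "low"
    sample_size: int


def build_summary_table(studies):
    """List studies in descending order of design strength.

    Ties are broken by study quality, which is an assumption of this sketch.
    """
    return sorted(
        studies,
        key=lambda s: (DESIGN_RANK[s.design_strength], QUALITY_RANK[s.quality]),
    )


# Hypothetical studies, invented purely to show the ordering.
studies = [
    StudySummary("Study C", "survey", "weak", "high", 250),
    StudySummary("Study A", "RCT", "strong", "medium", 120),
    StudySummary("Study B", "cohort", "moderate", "high", 900),
]

table = build_summary_table(studies)
for row in table:
    print(f"{row.citation:8} {row.design:8} {row.design_strength:9} {row.quality:7} n={row.sample_size}")
```

Keeping design strength and study quality as separate columns mirrors the distinction the toolkit draws between the two.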

Rating the quality of the overall body of evidence

The third phase in the critical appraisal process is rating the quality of the overall body of evidence. The overall rating depends on the five items summarized in Table 2 : strength of study designs, quality of studies, number of studies, consistency of results and directness of the evidence. The various combinations of these factors lead to an overall rating of the strength of the body of evidence as strong, moderate or weak as summarized in Table 3 .

Table 3. Criteria for rating evidence on which recommendations are based.

A unique aspect of this toolkit is that recommendations are not graded but are formulated based on the graded body of evidence. Actions are either recommended or not recommended; it is the strength of the available evidence that varies, not the strength of the recommendation. The toolkit does highlight, however, the need to re-evaluate new evidence as it becomes available especially when recommendations are based on weak evidence.

Pilot test of the CAT

Of 34 individuals who indicated an interest in completing the pilot test, 17 completed it. Multiple peer-reviewed studies were selected representing analytic studies, descriptive studies and literature reviews. The same studies were assigned to participants with similar content expertise. Each participant was asked to appraise three analytic studies, two descriptive studies and one literature review, using the appropriate critical appraisal tool as identified by the participant. For each study appraised, one critical appraisal tool and the associated tool-specific feedback form were completed. Each participant also completed a single general feedback form. A total of 101 of 102 critical appraisals were conducted and returned, with 81 tool-specific feedback forms and 14 general feedback forms returned.
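The counts in this paragraph can be checked with simple arithmetic. The sketch below uses only the figures reported above; the variable names and the percentages it prints are derived here for illustration and are not quoted from the pilot report.

```python
# Figures taken from the pilot test description above.
interested = 34
participants = 17
appraisals_per_participant = 3 + 2 + 1  # analytic + descriptive + literature review

expected_appraisals = participants * appraisals_per_participant
returned_appraisals = 101
tool_specific_forms = 81
general_forms = 14


def pct(part, whole):
    """Percentage of `part` in `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


print("Expected appraisals:", expected_appraisals)
print("Completion among interested (%):", pct(participants, interested))
print("Appraisals returned (%):", pct(returned_appraisals, expected_appraisals))
print("Tool-specific forms per appraisal (%):", pct(tool_specific_forms, returned_appraisals))
print("General forms per participant (%):", pct(general_forms, participants))
```

The expected total of 102 matches the denominator reported in the text (17 participants, six appraisals each).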

The majority of participants (>85%) found the flow of each tool was logical and the length acceptable but noted they still had difficulty identifying the study designs ( Table 4 ).

Table 4. Pilot test feedback on user friendliness.

* Number of tool-specific forms returned for total number of critical appraisals conducted

The vast majority of the feedback forms (86–93%) indicated that the different tools facilitated the critical appraisal process. In the assessment of consistency, however, only four of the ten analytic studies appraised (40%) had complete agreement among participants on the rating of overall study quality; the other six had differences, noted as mismatches. Four of the six studies with mismatches were observational studies. The differences were minor: none of the mismatches involved a study that was rated as both high and low quality by different participants. Based on the comments provided by participants, most mismatches could likely have been resolved through discussion with peers. Mismatched ratings were not an issue for the descriptive studies and literature reviews. In summary, the pilot test provided useful feedback on different aspects of the toolkit. Revisions were made to address the issues identified from the pilot test and thus strengthen the CAT.

The Infection Prevention and Control Guidelines Critical Appraisal Tool Kit was developed in response to the needs of infection control professionals reviewing literature that generally did not include clinical trial evidence. The toolkit was designed to meet the identified needs for training in critical appraisal with extensive instructions and dictionaries, and tools applicable to all three types of studies (analytic studies, descriptive studies and literature reviews). The toolkit provided a method to progress from assessing individual studies to summarizing and assessing the strength of a body of evidence and assigning a grade. Recommendations are then developed based on the graded body of evidence. This grading system has been used by the Public Health Agency of Canada in the development of recent infection prevention and control guidelines ( 5 ), ( 7 ). The toolkit has also been used for conducting critical appraisal for other purposes, such as addressing a practice problem and serving as an educational tool ( 8 ), ( 9 ).

The CAT has a number of strengths. It is applicable to a wide variety of study designs. The criteria that are assessed allow for a comprehensive appraisal of individual studies and facilitate critical appraisal of a body of evidence. The dictionaries provide reviewers with a common language and criteria for discussion and decision making.

The CAT also has a number of limitations. The tools do not address all study designs (e.g., modelling studies) and the toolkit provides limited information on types of bias. Like the majority of critical appraisal tools ( 10 ), ( 11 ), these tools have not been tested for validity and reliability. Nonetheless, the criteria assessed are those indicated as important in textbooks and in the literature ( 12 ), ( 13 ). The grading scale used in this toolkit does not allow for comparison of evidence grading across organizations or internationally, but most reviewers do not need such comparability. It is more important that strong evidence be rated higher than weak evidence, and that reviewers provide rationales for their conclusions; the toolkit enables them to do so.

Overall, the pilot test reinforced that the CAT can help with critical appraisal training and can increase comfort levels for those with limited experience. Further evaluation of the toolkit could assess the effectiveness of revisions made and test its validity and reliability.

A frequent question regarding this toolkit is how it differs from GRADE as both distinguish stronger evidence from weaker evidence and use similar concepts and terminology. The main differences between GRADE and the CAT are presented in Table 5 . Key differences include the focus of the CAT on rating the quality of individual studies, and the detailed instructions and supporting tools that assist those with limited experience in critical appraisal. When clinical trials and well controlled intervention studies are or become available, GRADE and related tools from Cochrane would be more appropriate ( 2 ), ( 3 ). When descriptive studies are all that is available, the CAT is very useful.

Table 5. Comparison of features of the Critical Appraisal Toolkit (CAT) and GRADE.

Abbreviation: GRADE, Grading of Recommendations Assessment, Development and Evaluation

The Infection Prevention and Control Guidelines Critical Appraisal Tool Kit was developed in response to needs for training in critical appraisal, assessing evidence from a wide variety of research designs, and a method for going from assessing individual studies to characterizing the strength of a body of evidence. Clinician researchers, policy makers and students can use these tools for critical appraisal of studies whether they are trying to develop policies, find a potential solution to a practice problem or critique an article for a journal club. The toolkit adds to the arsenal of critical appraisal tools currently available and is especially useful in assessing evidence from a wide variety of research designs.

Authors’ Statement

DM – Conceptualization, methodology, investigation, data collection and curation and writing – original draft, review and editing

TO – Conceptualization, methodology, investigation, data collection and curation and writing – original draft, review and editing

KD – Conceptualization, review and editing, supervision and project administration

Acknowledgements

We thank the Infection Prevention and Control Expert Working Group of the Public Health Agency of Canada for feedback on the development of the toolkit, Lisa Marie Wasmund for data entry of the pilot test results, Katherine Defalco for review of data and cross-editing of content and technical terminology for the French version of the toolkit, Laurie O’Neil for review and feedback on early versions of the toolkit, Frédéric Bergeron for technical support with the algorithms in the toolkit and the Centre for Communicable Diseases and Infection Control of the Public Health Agency of Canada for review, feedback and ongoing use of the toolkit. We thank Dr. Patricia Huston, Canada Communicable Disease Report Editor-in-Chief, for a thorough review and constructive feedback on the draft manuscript.

Conflict of interest: None.

Funding: This work was supported by the Public Health Agency of Canada.

- 1. Katrak P, Bialocerkowski AE, Massy-Westropp N, Kumar S, Grimmer KA. A systematic review of the content of critical appraisal tools. BMC Med Res Methodol 2004 Sep;4:22. doi:10.1186/1471-2288-4-22

- 2. GRADE Working Group. Criteria for applying or using GRADE. www.gradeworkinggroup.org [Accessed July 25, 2017].

- 3. Higgins JP, Green S, editors. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0. The Cochrane Collaboration, 2011. http://handbook.cochrane.org

- 4. Khan AR, Khan S, Zimmerman V, Baddour LM, Tleyjeh IM. Quality and strength of evidence of the Infectious Diseases Society of America clinical practice guidelines. Clin Infect Dis 2010 Nov;51(10):1147–56. doi:10.1086/656735

- 5. Public Health Agency of Canada. Routine practices and additional precautions for preventing the transmission of infection in healthcare settings. http://www.phac-aspc.gc.ca/nois-sinp/guide/summary-sommaire/tihs-tims-eng.php [Accessed December 4, 2015].

- 6. Public Health Agency of Canada. Infection Prevention and Control Guidelines Critical Appraisal Tool Kit. http://publications.gc.ca/collections/collection_2014/aspc-phac/HP40-119-2014-eng.pdf [Accessed December 4, 2015].

- 7. Public Health Agency of Canada. Hand hygiene practices in healthcare settings. https://www.canada.ca/en/public-health/services/infectious-diseases/nosocomial-occupational-infections/hand-hygiene-practices-healthcare-settings.html [Accessed December 4, 2015].

- 8. Ha S, Paquette D, Tarasuk J, Dodds J, Gale-Rowe M, Brooks JI et al. A systematic review of HIV testing among Canadian populations. Can J Public Health 2014 Jan;105(1):e53–62. doi:10.17269/cjph.105.4128

- 9. Stevens LK, Ricketts ED, Bruneau JE. Critical appraisal through a new lens. Nurs Leadersh (Tor Ont) 2014 Jun;27(2):10–3. doi:10.12927/cjnl.2014.23843

- 10. Lohr KN. Rating the strength of scientific evidence: relevance for quality improvement programs. Int J Qual Health Care 2004 Feb;16(1):9–18. doi:10.1093/intqhc/mzh005

- 11. Crowe M, Sheppard L. A review of critical appraisal tools show they lack rigor: alternative tool structure is proposed. J Clin Epidemiol 2011 Jan;64(1):79–89. doi:10.1016/j.jclinepi.2010.02.008

- 12. Young JM, Solomon MJ. How to critically appraise an article. Nat Clin Pract Gastroenterol Hepatol 2009 Feb;6(2):82–91. doi:10.1038/ncpgasthep1331

- 13. Polit DF, Beck CT. Nursing Research: Generating and Assessing Evidence for Nursing Practice. 9th ed. Philadelphia, PA: Lippincott Williams & Wilkins; 2008. Chapter XX, Literature reviews: Finding and critiquing the evidence p. 94–125.

AI Literature Review Generator

- Academic Research: Create a literature review for your thesis, dissertation, or research paper.

- Professional Research: Conduct a literature review for a project, report, or proposal at work.

- Content Creation: Write a literature review for a blog post, article, or book.

- Personal Research: Conduct a literature review to deepen your understanding of a topic of interest.

About Systematic Reviews

Choosing the Best Systematic Review Critical Appraisal Tool

Automate every stage of your literature review to produce evidence-based research faster and more accurately.

What is a Critical Appraisal?

Critical appraisal involves the evaluation of the quality, reliability, and relevance of studies, which is assessed based on quality measures specific to the research question, its related topics, design, methodology, data analysis, and the reporting of different types of systematic reviews.

Planning a critical appraisal starts with identifying or developing checklists. There are several critical appraisal tools that can be used to guide the process, adapting evaluation measures to be relevant to the specific research. It is important to pilot test these checklists and ensure that they are comprehensive enough to tackle all aspects of your systematic review.

What is the Purpose of a Critical Appraisal?

A critical appraisal is an integral part of a systematic review because it helps determine which studies can support the research. Here are some additional reasons why critical appraisals are important.

Assessing Quality

Critical appraisals employ measures specific to the systematic review. Through these, researchers can assess the quality of the studies—their trustworthiness, value, and reliability. This helps weed out substandard reviews, saving researchers’ time that would have been wasted reading full texts.

Determining Relevance

By appraising studies, researchers can determine whether they are relevant to the systematic review, for example whether they are connected to the topic or whether their results support the research. The same relevance test helps answer the question “Can you include a systematic review in a scoping review?”

Identifying Flaws

Critical appraisals aim to identify methodological flaws in the literature, helping researchers and readers make informed decisions about the research evidence. They also help reduce the risk of bias when selecting studies.

What to Consider in a Critical Appraisal

Critical appraisals vary as they are specific to the topic, nature, and methodology of each systematic review. However, they generally have the same goal, trying to answer the following questions about the studies being considered:

- Is the study relevant to the research question?

- Is the study valid?

- Did the study use appropriate methods to address the research question?

- Does the study support the findings and evidence claims of the review?

- Are the valid results of the study important?

- Are the valid results of the study applicable to the research?

Critical Appraisal Tools

There are hundreds of tools and worksheets that can serve as a guide through the critical appraisal process. Here are just some of the most common ones to consider:

- AMSTAR – to appraise the methodological quality of systematic reviews of healthcare interventions.

- CASP – to appraise randomized controlled trials, systematic reviews, cohort studies, case-control studies, qualitative research, economic evaluations, diagnostic tests, and clinical prediction rules.

- Cochrane Risk of Bias Tool – to assess the risk of bias of randomized controlled trials (RCTs).

- GRADE – to grade the quality of evidence in healthcare research and policy.

- JBI Critical Appraisal Tools – to assess trustworthiness, relevance, and results of published papers.

- NOS – to assess the quality of non-randomized studies in meta-analyses.

- ROBIS – to assess the risk of bias in systematic reviews of interventions, diagnosis, prognosis, and etiology.

- STROBE – to guide the reporting of cohort, case-control, and cross-sectional studies.

What is the Best Critical Appraisal Tool?

There is no single best critical appraisal tool for any study design, nor is there a generic one that can be expected to consistently do well when used across different study types.

Critical appraisal tools vary considerably in intent, components, and construction, and the right one for your systematic review is the one that addresses the components that you need to tackle and ensures that your research results in comprehensive, unbiased, and valid findings.

All-in-one Literature Review Software

The #1 literature review software with the best AI integration.

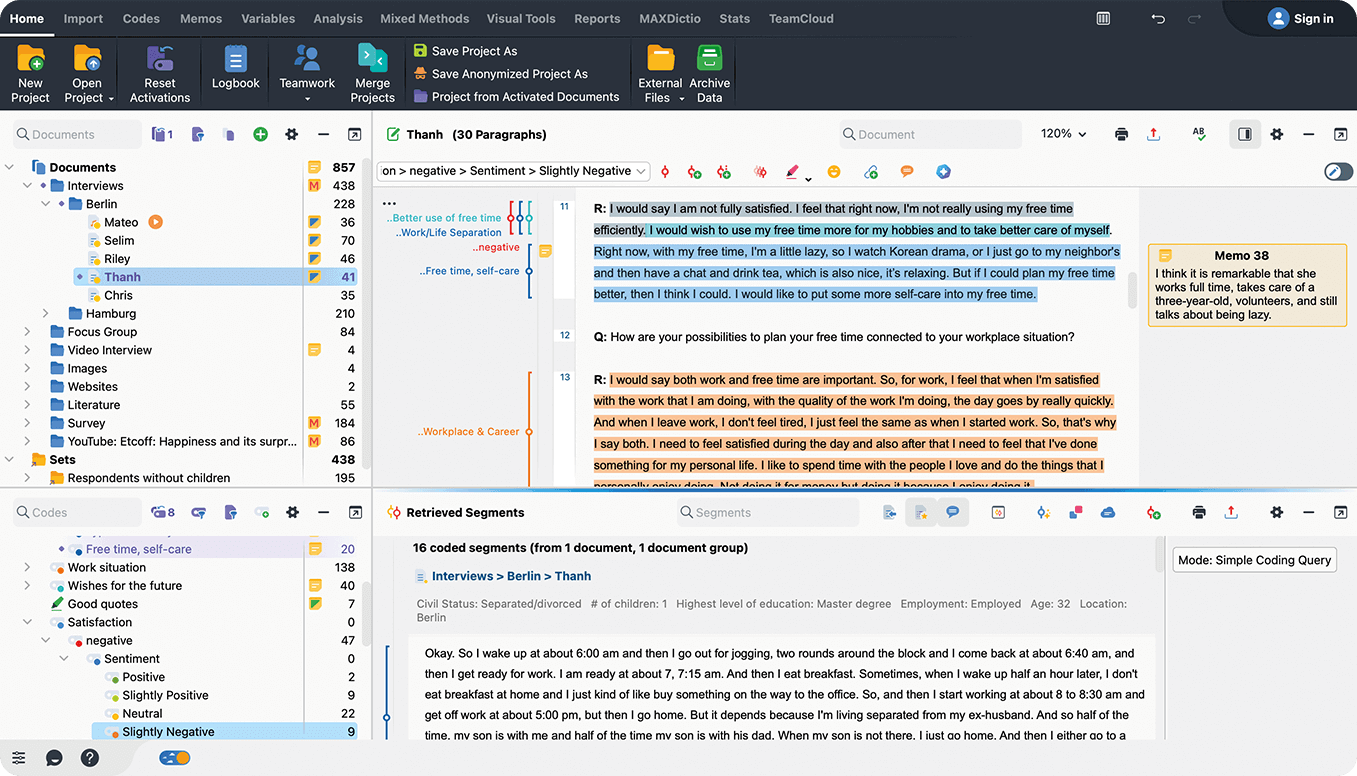

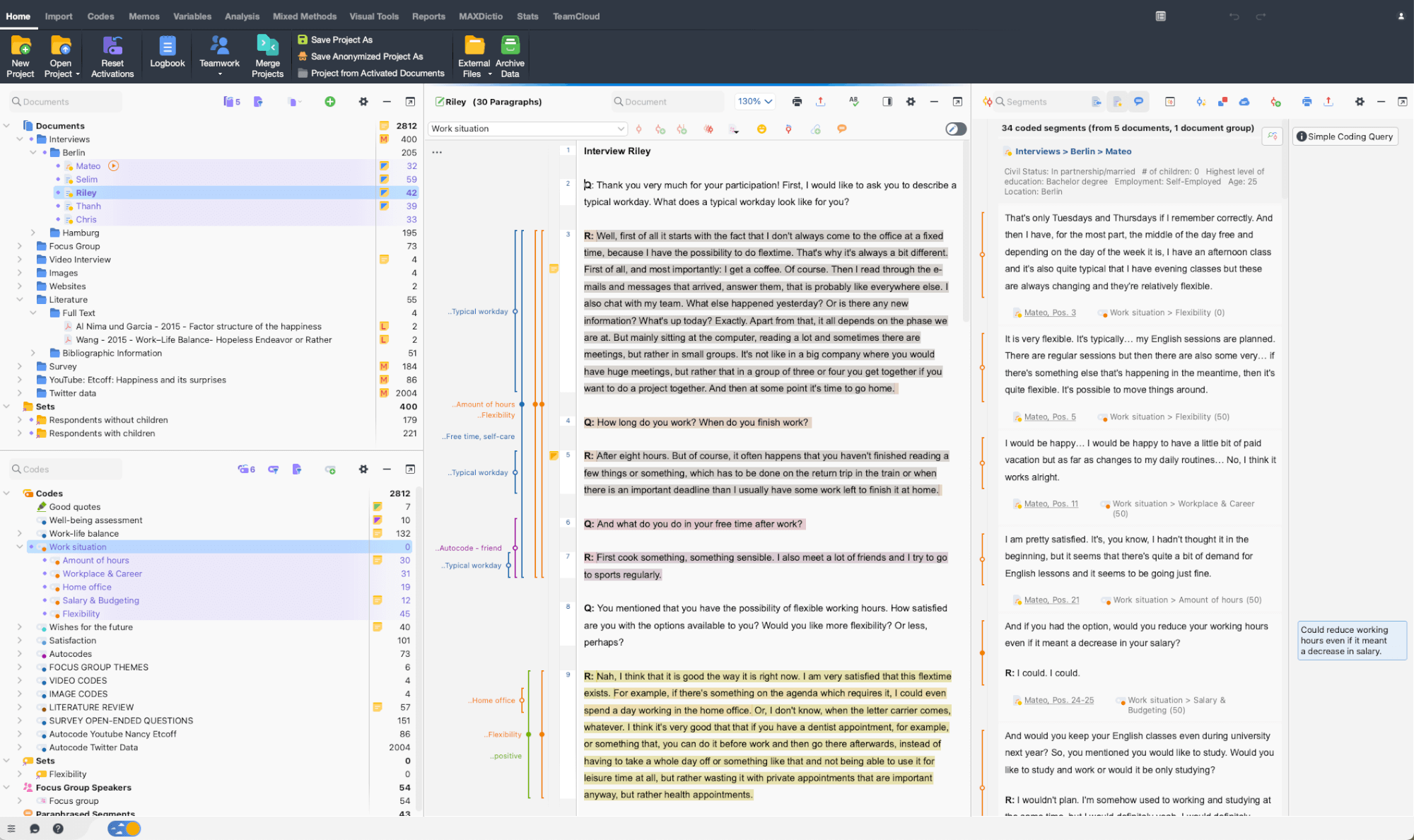

MAXQDA streamlines your literature review and reference management with powerful analysis tools, ease of use, and smart AI integration. Explore the possibilities now.

Literature Review with AI

Learn more about AI-powered literature review with MAXQDA.

Organize. Analyze. Visualize. Present.

One software, many solutions

Your trial will end automatically after 14 days and will not renew. There is no need for cancelation.

MAXQDA The All-in-one Literature Review Software

MAXQDA is the best choice for a comprehensive literature review. It works with a wide range of data types and offers powerful tools for literature review, including reference management and qualitative, vocabulary, and text analysis tools.

As your all-in-one literature review software, MAXQDA can be used to manage your entire research project. Easily import data from texts, interviews, focus groups, PDFs, web pages, spreadsheets, articles, e-books, and even social media data. Connect the reference management system of your choice with MAXQDA to easily import bibliographic data. Organize your data in groups, link relevant quotes to each other, keep track of your literature summaries, and share and compare work with your team members. Your project file stays flexible and you can expand and refine your category system as you go to suit your research.

Developed by and for researchers – since 1989

Having used several qualitative data analysis software programs, there is no doubt in my mind that MAXQDA has advantages over all the others. In addition to its remarkable analytical features for harnessing data, MAXQDA’s stellar customer service, online tutorials, and global learning community make it a user friendly and top-notch product.

Sally S. Cohen – NYU Rory Meyers College of Nursing

Literature Review is Faster and Smarter with MAXQDA

Easily import your literature review data

With literature review software like MAXQDA, you can easily import bibliographic data from reference management programs for your literature review. MAXQDA can work with all reference management programs that can export their databases in RIS format, which is a standard format for bibliographic information. Like MAXQDA, these reference managers use project files, containing all collected bibliographic information, such as author, title, links to websites, keywords, abstracts, and other information. In addition, you can easily import the corresponding full texts. Upon import, all documents will be automatically pre-coded to facilitate your literature review at a later stage.
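To make the RIS format mentioned above concrete, here is a minimal Python sketch that reads RIS-style records into dictionaries. It is not MAXQDA's importer and says nothing about how MAXQDA works internally; the `parse_ris` function is a hypothetical helper, and it handles only the basic "two-character tag, two spaces, hyphen, value" line layout with `ER` closing each record.

```python
import re
from collections import defaultdict

# A RIS line is a two-character tag, two spaces, a hyphen, then the value.
TAG_LINE = re.compile(r"^([A-Z][A-Z0-9])  -\s?(.*)$")


def parse_ris(text):
    """Parse RIS text into a list of {tag: [values]} dictionaries."""
    records = []
    current = defaultdict(list)
    for line in text.splitlines():
        match = TAG_LINE.match(line.rstrip())
        if not match:
            continue  # skip blank or non-tag lines in this simple sketch
        tag, value = match.groups()
        if tag == "ER":  # end-of-record marker
            if current:
                records.append(dict(current))
            current = defaultdict(list)
        else:
            current[tag].append(value.strip())
    if current:  # tolerate a final record that lacks an ER line
        records.append(dict(current))
    return records


# Sample record built from a journal article's basic bibliographic details.
SAMPLE = """TY  - JOUR
AU  - Katrak, P
AU  - Bialocerkowski, AE
TI  - A systematic review of the content of critical appraisal tools
JO  - BMC Med Res Methodol
PY  - 2004
DO  - 10.1186/1471-2288-4-22
ER  -
"""

records = parse_ris(SAMPLE)
for record in records:
    print(record["TI"][0], "by", "; ".join(record["AU"]))
```

Repeated tags such as `AU` are kept as lists, which is why every field maps to a list of values.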

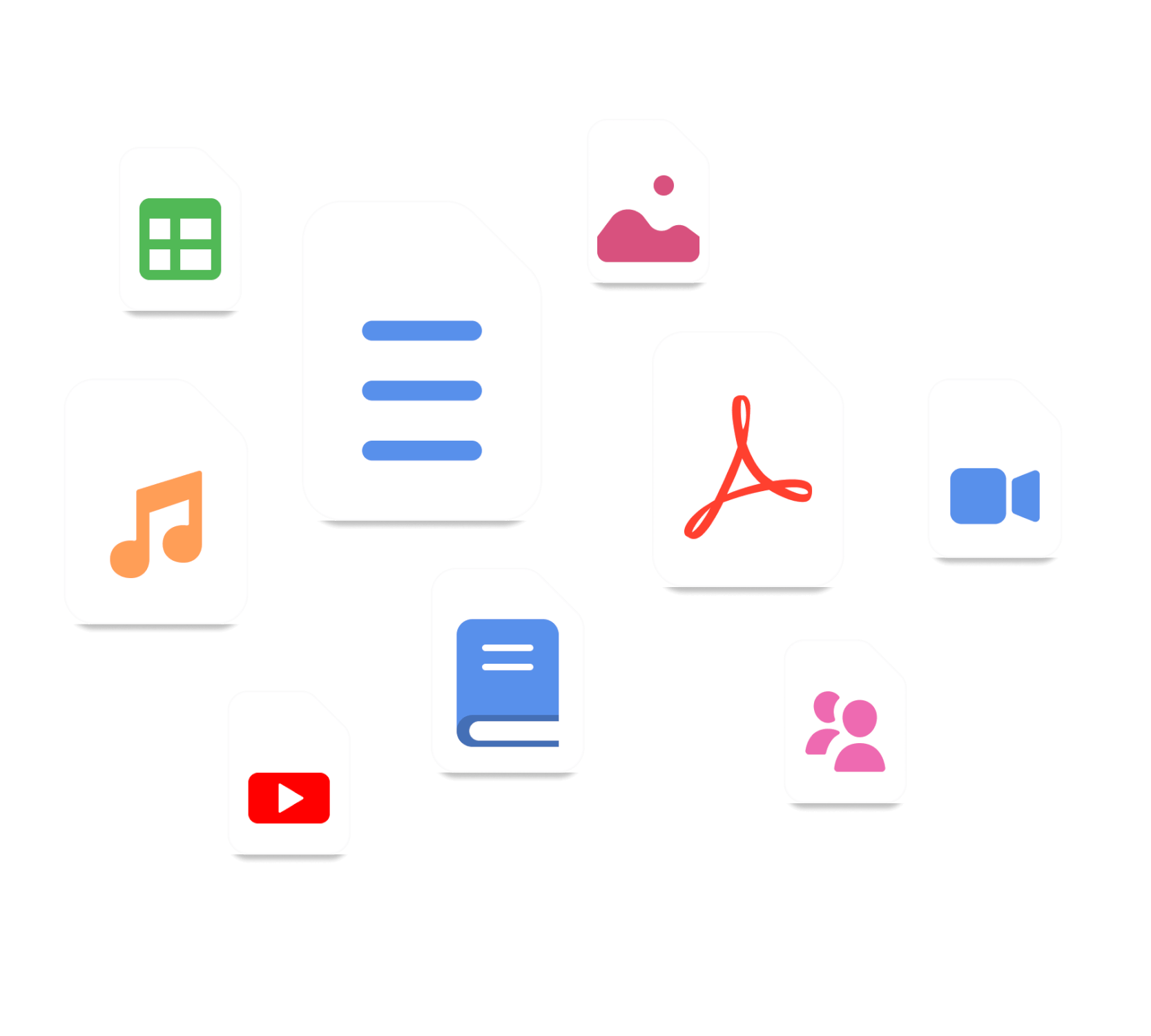

Capture your ideas while analyzing your literature

Great ideas will often occur to you while you’re doing your literature review. Using MAXQDA as your literature review software, you can create memos to store your ideas, such as research questions and objectives, or you can use memos for paraphrasing passages into your own words. By attaching memos like post-it notes to text passages, texts, document groups, images, audio/video clips, and of course codes, you can easily retrieve them at a later stage. Particularly useful for literature reviews are free memos written during the course of work from which passages can be copied and inserted into the final text.

Find concepts important to your generated literature review

When generating a literature review you might need to analyze a large amount of text. Luckily MAXQDA as the #1 literature review software offers Text Search tools that allow you to explore your documents without reading or coding them first. Automatically search for keywords (or dictionaries of keywords), such as important concepts for your literature review, and automatically code them with just a few clicks. Document variables that were automatically created during the import of your bibliographic information can be used for searching and retrieving certain text segments. MAXQDA’s powerful Coding Query allows you to analyze the combination of activated codes in different ways.
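The general idea of dictionary-based keyword coding can be sketched outside any particular tool. The Python below is a rough illustration only, not MAXQDA's Text Search or autocoding feature; the `autocode` function, the sample documents, and the keyword dictionary are all hypothetical.

```python
import re


def autocode(documents, dictionary):
    """Tag each sentence that contains a dictionary term with that term's code.

    Returns a list of (document name, code, matched term, sentence) tuples.
    """
    hits = []
    for name, text in documents.items():
        # Naive sentence split on end punctuation followed by whitespace.
        sentences = re.split(r"(?<=[.!?])\s+", text.strip())
        for sentence in sentences:
            for code, terms in dictionary.items():
                for term in terms:
                    pattern = r"\b" + re.escape(term) + r"\b"
                    if re.search(pattern, sentence, re.IGNORECASE):
                        hits.append((name, code, term, sentence))
    return hits


# Hypothetical documents and keyword dictionary, invented for the example.
documents = {
    "paper_a": "We ran a randomized controlled trial. Risk of bias was low.",
    "paper_b": "This cohort study found no effect.",
}
dictionary = {
    "bias": ["risk of bias", "selection bias"],
    "design": ["randomized controlled trial", "cohort"],
}

hits = autocode(documents, dictionary)
for name, code, term, sentence in hits:
    print(f"{name} [{code}] matched '{term}': {sentence}")
```

Matching on whole words (`\b`) avoids tagging a sentence because a term happens to appear inside a longer word.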

Aggregate your literature review

When conducting a literature review you can easily get lost. But with MAXQDA as your literature review software, you will never lose track of the bigger picture. Among other tools, MAXQDA’s overview and summary tables are especially useful for aggregating your literature review results. MAXQDA offers overview tables for almost everything, codes, memos, coded segments, links, and so on. With MAXQDA literature review tools you can create compressed summaries of sources that can be effectively compared and represented, and with just one click you can easily export your overview and summary tables and integrate them into your literature review report.

Powerful and easy-to-use literature review tools

Quantitative aspects can also be relevant when conducting a literature review analysis. Using MAXQDA as your literature review software enables you to employ a vast range of procedures for the quantitative evaluation of your material. You can sort sources according to document variables, compare amounts with frequency tables and charts, and much more. Make sure you don’t miss the word frequency tools of MAXQDA’s add-on module for quantitative content analysis. Included are tools for visual text exploration, content analysis, vocabulary analysis, dictionary-based analysis, and more that facilitate the quantitative analysis of terms and their semantic contexts.
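As a rough picture of what a word frequency analysis does, the following Python sketch counts terms in a short text. It is a generic illustration and does not reproduce MAXQDA's word frequency tools; the stop-word list, the length filter, and the sample text are assumptions chosen for the example.

```python
import re
from collections import Counter

# A tiny, assumed stop-word list; real analyses use much longer ones.
STOP_WORDS = {"the", "and", "for", "with", "that", "are", "was", "were"}


def word_frequencies(text, top_n=5):
    """Return the `top_n` most frequent words, ignoring stop words and short words."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return counts.most_common(top_n)


# Hypothetical sample text.
sample = (
    "Systematic reviews appraise studies. "
    "Reviews of studies vary in quality, and reviews differ."
)

top = word_frequencies(sample)
for word, count in top:
    print(f"{word}: {count}")
```

A frequency table like this is a starting point for spotting dominant terms, which can then be examined in context.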

Visualize your literature review

As an all-in-one literature review software, MAXQDA offers a variety of visual tools that are tailor-made for qualitative research and literature reviews. Create stunning visualizations to analyze your material. Of course, you can export your visualizations in various formats to enrich your literature review analysis report. Work with word clouds to explore the central themes of a text and key terms that are used, create charts to easily compare the occurrences of concepts and important keywords, or make use of the graphical representation possibilities of MAXMaps, which in particular permit the creation of concept maps. Thanks to the interactive connection between your visualizations with your MAXQDA data, you’ll never lose sight of the big picture.

AI Assist: literature review software meets AI

AI Assist – your virtual research assistant – supports your literature review with various tools. AI Assist simplifies your work by automatically analyzing and summarizing elements of your research project and by generating suggestions for subcodes. No matter which AI tool you use – you can customize your results to suit your needs.

Free tutorials and guides on literature review

MAXQDA offers a variety of free learning resources for literature review, making it easy for both beginners and advanced users to learn how to use the software. From free video tutorials and webinars to step-by-step guides and sample projects, these resources provide a wealth of information to help you understand the features and functionality of MAXQDA for literature review. For beginners, the software’s user-friendly interface and comprehensive help center make it easy to get started with your data analysis, while advanced users will appreciate the detailed guides and tutorials that cover more complex features and techniques. Whether you’re just starting out or are an experienced researcher, MAXQDA’s free learning resources will help you get the most out of your literature review.

FAQ: Literature Review Software

Literature review software is a tool designed to help researchers efficiently manage and analyze the existing body of literature relevant to their research topic. MAXQDA, a versatile qualitative data analysis tool, can be instrumental in this process.

Literature review software, like MAXQDA, typically includes features such as data import and organization, coding and categorization, advanced search capabilities, data visualization tools, and collaboration features. These features facilitate the systematic review and analysis of relevant literature.

Literature review software, including MAXQDA, can assist in qualitative data interpretation by enabling researchers to organize, code, and categorize relevant literature. This organized data can then be analyzed to identify trends, patterns, and themes, helping researchers draw meaningful insights from the literature they’ve reviewed.

Yes, literature review software like MAXQDA is suitable for researchers of all levels of experience. It offers user-friendly interfaces and extensive support resources, making it accessible to beginners while providing advanced features that cater to the needs of experienced researchers.

Getting started with literature review software, such as MAXQDA, typically involves downloading and installing the software, importing your relevant literature, and exploring the available features. Many software providers offer tutorials and documentation to help users get started quickly.

For students, MAXQDA can be an excellent literature review software choice. Its user-friendly interface, comprehensive feature set, and educational discounts make it a valuable tool for students conducting literature reviews as part of their academic research.

MAXQDA is available for both Windows and Mac users, making it a suitable choice for Mac users looking for literature review software. It offers a consistent and feature-rich experience on Mac operating systems.

When it comes to literature review software, MAXQDA is widely regarded as one of the best choices. Its robust feature set, user-friendly interface, and versatility make it a top pick for researchers conducting literature reviews.

Yes, literature reviews can be conducted without software. However, using literature review software like MAXQDA can significantly streamline and enhance the process by providing tools for efficient data management, analysis, and visualization.

- Open access

- Published: 08 June 2023

Guidance to best tools and practices for systematic reviews

Kat Kolaski, Lynne Romeiser Logan & John P. A. Ioannidis

Systematic Reviews volume 12, Article number: 96 (2023)

Data continue to accumulate indicating that many systematic reviews are methodologically flawed, biased, redundant, or uninformative. Some improvements have occurred in recent years based on empirical methods research and standardization of appraisal tools; however, many authors do not routinely or consistently apply these updated methods. In addition, guideline developers, peer reviewers, and journal editors often disregard current methodological standards. Although these issues are extensively acknowledged and explored in the methodological literature, most clinicians seem unaware of them and may automatically accept evidence syntheses (and clinical practice guidelines based on their conclusions) as trustworthy.

A plethora of methods and tools are recommended for the development and evaluation of evidence syntheses. It is important to understand what these are intended to do (and cannot do) and how they can be utilized. Our objective is to distill this sprawling information into a format that is understandable and readily accessible to authors, peer reviewers, and editors. In doing so, we aim to promote appreciation and understanding of the demanding science of evidence synthesis among stakeholders. We focus on well-documented deficiencies in key components of evidence syntheses to elucidate the rationale for current standards. The constructs underlying the tools developed to assess reporting, risk of bias, and methodological quality of evidence syntheses are distinguished from those involved in determining overall certainty of a body of evidence. Another important distinction is made between those tools used by authors to develop their syntheses as opposed to those used to ultimately judge their work.

Exemplar methods and research practices are described, complemented by novel pragmatic strategies to improve evidence syntheses. The latter include preferred terminology and a scheme to characterize types of research evidence. We organize best practice resources in a Concise Guide that can be widely adopted and adapted for routine implementation by authors and journals. Appropriate, informed use of these is encouraged, but we caution against their superficial application and emphasize their endorsement does not substitute for in-depth methodological training. By highlighting best practices with their rationale, we hope this guidance will inspire further evolution of methods and tools that can advance the field.

Part 1. The state of evidence synthesis

Evidence syntheses are commonly regarded as the foundation of evidence-based medicine (EBM). They are widely accredited for providing reliable evidence and, as such, they have significantly influenced medical research and clinical practice. Despite their uptake throughout health care and ubiquity in contemporary medical literature, some important aspects of evidence syntheses are generally overlooked or not well recognized. Evidence syntheses are mostly retrospective exercises, they often depend on weak or irreparably flawed data, and they may use tools that have acknowledged or yet unrecognized limitations. They are complicated and time-consuming undertakings prone to bias and errors. Production of a good evidence synthesis requires careful preparation and high levels of organization in order to limit potential pitfalls [ 1 ]. Many authors do not recognize the complexity of such an endeavor and the many methodological challenges they may encounter. Failure to do so is likely to result in research and resource waste.

Given their potential impact on people’s lives, it is crucial for evidence syntheses to correctly report on the current knowledge base. In order to be perceived as trustworthy, reliable demonstration of the accuracy of evidence syntheses is equally imperative [ 2 ]. Concerns about the trustworthiness of evidence syntheses are not recent developments. From the early years when EBM first began to gain traction until recent times when thousands of systematic reviews are published monthly [ 3 ] the rigor of evidence syntheses has always varied. Many systematic reviews and meta-analyses had obvious deficiencies because original methods and processes had gaps, lacked precision, and/or were not widely known. The situation has improved with empirical research concerning which methods to use and standardization of appraisal tools. However, given the geometrical increase in the number of evidence syntheses being published, a relatively larger pool of unreliable evidence syntheses is being published today.

Publication of methodological studies that critically appraise the methods used in evidence syntheses is increasing at a fast pace. This reflects the availability of tools specifically developed for this purpose [ 4 , 5 , 6 ]. Yet many clinical specialties report that alarming numbers of evidence syntheses fail on these assessments. The syntheses identified report on a broad range of common conditions including, but not limited to, cancer, [ 7 ] chronic obstructive pulmonary disease, [ 8 ] osteoporosis, [ 9 ] stroke, [ 10 ] cerebral palsy, [ 11 ] chronic low back pain, [ 12 ] refractive error, [ 13 ] major depression, [ 14 ] pain, [ 15 ] and obesity [ 16 , 17 ]. The situation is even more concerning with regard to evidence syntheses included in clinical practice guidelines (CPGs) [ 18 , 19 , 20 ]. Astonishingly, in a sample of CPGs published in 2017–18, more than half did not apply even basic systematic methods in the evidence syntheses used to inform their recommendations [ 21 ].

These reports, while not widely acknowledged, suggest there are pervasive problems not limited to evidence syntheses that evaluate specific kinds of interventions or include primary research of a particular study design (eg, randomized versus non-randomized) [ 22 ]. Similar concerns about the reliability of evidence syntheses have been expressed by proponents of EBM in highly circulated medical journals [ 23 , 24 , 25 , 26 ]. These publications have also raised awareness about redundancy, inadequate input of statistical expertise, and deficient reporting. These issues plague primary research as well; however, there is heightened concern for the impact of these deficiencies given the critical role of evidence syntheses in policy and clinical decision-making.

Methods and guidance to produce a reliable evidence synthesis

Several international consortiums of EBM experts and national health care organizations currently provide detailed guidance (Table 1 ). They draw criteria from the reporting and methodological standards of currently recommended appraisal tools, and regularly review and update their methods to reflect new information and changing needs. In addition, they endorse the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system for rating the overall quality of a body of evidence [ 27 ]. These groups typically certify or commission systematic reviews that are published in exclusive databases (eg, Cochrane, JBI) or are used to develop government or agency sponsored guidelines or health technology assessments (eg, National Institute for Health and Care Excellence [NICE], Scottish Intercollegiate Guidelines Network [SIGN], Agency for Healthcare Research and Quality [AHRQ]). They offer developers of evidence syntheses various levels of methodological advice, technical and administrative support, and editorial assistance. Use of specific protocols and checklists is required for development teams within these groups, but their online methodological resources are accessible to any potential author.

Notably, Cochrane is the largest single producer of evidence syntheses in biomedical research; however, these only account for 15% of the total [ 28 ]. The World Health Organization requires Cochrane standards be used to develop evidence syntheses that inform their CPGs [ 29 ]. Authors investigating questions of intervention effectiveness in syntheses developed for Cochrane follow the Methodological Expectations of Cochrane Intervention Reviews [ 30 ] and undergo multi-tiered peer review [ 31 , 32 ]. Several empirical evaluations have shown that Cochrane systematic reviews are of higher methodological quality compared with non-Cochrane reviews [ 4 , 7 , 9 , 11 , 14 , 32 , 33 , 34 , 35 ]. However, some of these assessments have biases: they may be conducted by Cochrane-affiliated authors, and they sometimes use scales and tools developed and used in the Cochrane environment and by its partners. In addition, evidence syntheses published in the Cochrane database are not subject to space or word restrictions, while non-Cochrane syntheses are often limited. As a result, information that may be relevant to the critical appraisal of non-Cochrane reviews is often removed or is relegated to online-only supplements that may not be readily or fully accessible [ 28 ].

Influences on the state of evidence synthesis

Many authors are familiar with the evidence syntheses produced by the leading EBM organizations but can be intimidated by the time and effort necessary to apply their standards. Instead of following their guidance, authors may employ methods that are discouraged or outdated [ 28 ]. Suboptimal methods described in the literature may then be taken up by others. For example, the Newcastle–Ottawa Scale (NOS) is a commonly used tool for appraising non-randomized studies [ 36 ]. Many authors justify their selection of this tool with reference to a publication that describes the unreliability of the NOS and recommends against its use [ 37 ]. Obviously, the authors who cite this report for that purpose have not read it. Authors and peer reviewers have a responsibility to use reliable and accurate methods and not copycat previous citations or substandard work [ 38 , 39 ]. Similar cautions may potentially extend to automation tools. These have concentrated on evidence searching [ 40 ] and selection given how demanding it is for humans to maintain truly up-to-date evidence [ 2 , 41 ]. Cochrane has deployed machine learning to identify randomized controlled trials (RCTs) and studies related to COVID-19, [ 2 , 42 ] but such tools are not yet commonly used [ 43 ]. The routine integration of automation tools in the development of future evidence syntheses should not displace the interpretive part of the process.

Editorials about unreliable or misleading systematic reviews highlight several of the intertwining factors that may contribute to continued publication of unreliable evidence syntheses: shortcomings and inconsistencies of the peer review process, lack of endorsement of current standards on the part of journal editors, the incentive structure of academia, industry influences, publication bias, and the lure of “predatory” journals [ 44 , 45 , 46 , 47 , 48 ]. At this juncture, clarification of the extent to which each of these factors contributes remains speculative, but their impact is likely to be synergistic.

Over time, the generalized acceptance of the conclusions of systematic reviews as incontrovertible has affected trends in the dissemination and uptake of evidence. Reporting of the results of evidence syntheses and recommendations of CPGs has shifted beyond medical journals to press releases and news headlines and, more recently, to the realm of social media and influencers. The lay public and policy makers may depend on these outlets for interpreting evidence syntheses and CPGs. Unfortunately, communication to the general public often reflects intentional or non-intentional misrepresentation or “spin” of the research findings [ 49 , 50 , 51 , 52 ]. News and social media outlets also tend to reduce conclusions on a body of evidence and recommendations for treatment to binary choices (eg, “do it” versus “don’t do it”) that may be assigned an actionable symbol (eg, red/green traffic lights, smiley/frowning face emoji).

Strategies for improvement

Many authors and peer reviewers are volunteer health care professionals or trainees who lack formal training in evidence synthesis [ 46 , 53 ]. Informing them about research methodology could increase the likelihood they will apply rigorous methods [ 25 , 33 , 45 ]. We tackle this challenge, from both a theoretical and a practical perspective, by offering guidance applicable to any specialty. It is based on recent methodological research that is extensively referenced to promote self-study. However, the information presented is not intended to be a substitute for committed training in evidence synthesis methodology; instead, we hope to inspire our target audience to seek such training. We also hope to inform a broader audience of clinicians and guideline developers influenced by evidence syntheses. Notably, these communities often include the same members who serve in different capacities.

In the following sections, we highlight methodological concepts and practices that may be unfamiliar, problematic, confusing, or controversial. In Part 2, we consider various types of evidence syntheses and the types of research evidence summarized by them. In Part 3, we examine some widely used (and misused) tools for the critical appraisal of systematic reviews and reporting guidelines for evidence syntheses. In Part 4, we discuss how to meet methodological conduct standards applicable to key components of systematic reviews. In Part 5, we describe the merits and caveats of rating the overall certainty of a body of evidence. Finally, in Part 6, we summarize suggested terminology, methods, and tools for development and evaluation of evidence syntheses that reflect current best practices.

Part 2. Types of syntheses and research evidence

A good foundation for the development of evidence syntheses requires an appreciation of their various methodologies and the ability to correctly identify the types of research potentially available for inclusion in the synthesis.

Types of evidence syntheses

Systematic reviews have historically focused on the benefits and harms of interventions; over time, various types of systematic reviews have emerged to address the diverse information needs of clinicians, patients, and policy makers [ 54 ]. Systematic reviews with traditional components have become defined by the different topics they assess (Table 2.1 ). In addition, other distinctive types of evidence syntheses have evolved, including overviews or umbrella reviews, scoping reviews, rapid reviews, and living reviews. The popularity of these has been increasing in recent years [ 55 , 56 , 57 , 58 ]. A summary of the development, methods, available guidance, and indications for these unique types of evidence syntheses is available in Additional File 2 A.

Both Cochrane [ 30 , 59 ] and JBI [ 60 ] provide methodologies for many types of evidence syntheses; they describe these with different terminology, but there is obvious overlap (Table 2.2 ). The majority of evidence syntheses published by Cochrane (96%) and JBI (62%) are categorized as intervention reviews. This reflects the earlier development and dissemination of their intervention review methodologies; these remain well-established [ 30 , 59 , 61 ] as both organizations continue to focus on topics related to treatment efficacy and harms. In contrast, intervention reviews represent only about half of the total published in the general medical literature, and several non-intervention review types contribute to a significant proportion of the other half.

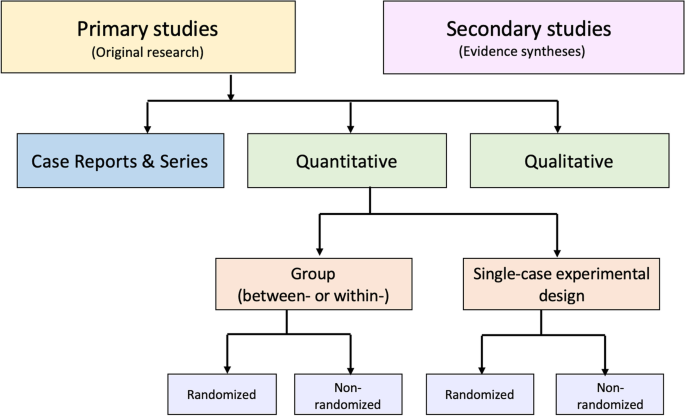

Types of research evidence

There is consensus on the importance of using multiple study designs in evidence syntheses; at the same time, there is a lack of agreement on methods to identify included study designs. Authors of evidence syntheses may use various taxonomies and associated algorithms to guide selection and/or classification of study designs. These tools differentiate categories of research and apply labels to individual study designs (eg, RCT, cross-sectional). A familiar example is the Design Tree endorsed by the Centre for Evidence-Based Medicine [ 70 ]. Such tools may not be helpful to authors of evidence syntheses for multiple reasons.

Suboptimal levels of agreement and accuracy even among trained methodologists reflect challenges with the application of such tools [ 71 , 72 ]. Problematic distinctions or decision points (eg, experimental or observational, controlled or uncontrolled, prospective or retrospective) and design labels (eg, cohort, case control, uncontrolled trial) have been reported [ 71 ]. The variable application of ambiguous study design labels to non-randomized studies is common, making them especially prone to misclassification [ 73 ]. In addition, study labels do not denote the unique design features that make different types of non-randomized studies susceptible to different biases, including those related to how the data are obtained (eg, clinical trials, disease registries, wearable devices). Given this limitation, it is important to be aware that design labels preclude the accurate assignment of non-randomized studies to a “level of evidence” in traditional hierarchies [ 74 ].

These concerns suggest that available tools and nomenclature used to distinguish types of research evidence may not uniformly apply to biomedical research and non-health fields that utilize evidence syntheses (eg, education, economics) [ 75 , 76 ]. Moreover, primary research reports often do not describe study design or do so incompletely or inaccurately; thus, indexing in PubMed and other databases does not address the potential for misclassification [ 77 ]. Yet proper identification of research evidence has implications for several key components of evidence syntheses. For example, search strategies limited by index terms using design labels or study selection based on labels applied by the authors of primary studies may cause inconsistent or unjustified study inclusions and/or exclusions [ 77 ]. In addition, because risk of bias (RoB) tools consider attributes specific to certain types of studies and study design features, results of these assessments may be invalidated if an inappropriate tool is used. Appropriate classification of studies is also relevant for the selection of a suitable method of synthesis and interpretation of those results.
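The point about label-based study selection can be illustrated with a minimal sketch. It is not a tool from the cited literature: the `Study` record, its three design-feature fields, and the example studies are all hypothetical, chosen only to show how selecting on an author-applied label and selecting on recorded design features can return different sets of studies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    """Hypothetical record; field names are illustrative assumptions."""
    study_id: str
    author_label: str                   # design label as reported by the primary authors
    has_comparison_group: bool
    allocation_randomized: bool
    data_collected_prospectively: bool


def select_by_label(studies, wanted_label):
    """Select studies whose author-applied label matches the wanted label."""
    return [s.study_id for s in studies
            if s.author_label.lower() == wanted_label.lower()]


def select_by_features(studies):
    """Select non-randomized, controlled, prospective studies by their features."""
    return [s.study_id for s in studies
            if s.has_comparison_group
            and not s.allocation_randomized
            and s.data_collected_prospectively]


# Invented example data: S2 has the features of interest but a different label;
# S3 carries the label but lacks a comparison group and prospective data.
STUDIES = [
    Study("S1", "cohort", True, False, True),
    Study("S2", "observational study", True, False, True),
    Study("S3", "cohort", False, False, False),
]

by_label = select_by_label(STUDIES, "cohort")
by_features = select_by_features(STUDIES)
print("Selected by label:   ", by_label)
print("Selected by features:", by_features)
```

In this invented example, the label-based rule excludes a study with the design features of interest and includes one without them, which is the kind of inconsistent inclusion and exclusion described above.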