Deep Learning IIT Ropar Week 8 Nptel Assignment Answers

Are you looking for the Deep Learning IIT Ropar Week 8 NPTEL Assignment Answers 2024 (July-Dec)? You’ve come to the right place! Access the most accurate and up-to-date solutions for your Week 8 assignment in the Deep Learning course offered by IIT Ropar.

Deep Learning IIT Ropar Week 8 Nptel Assignment Answers (July-Dec 2024)

- Which of the following activation functions is not zero-centered? A) Sigmoid B) Tanh C) ReLU D) Softmax

Answer: A) Sigmoid, C) ReLU, D) Softmax (only tanh, whose outputs lie in (−1, 1), is zero-centered)

- What is the gradient of the sigmoid function at saturation?

Answer: 0 (more precisely, the gradient approaches 0 as the output approaches 0 or 1)
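A minimal Python sketch of why the answer is 0. The sigmoid derivative is σ'(x) = σ(x)(1 − σ(x)), which peaks at 0.25 when x = 0 and shrinks toward zero as the output saturates near 0 or 1. The sample points are arbitrary.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Arbitrary sample points; |x| is kept small enough that math.exp cannot overflow.
for x in (0.0, 2.0, 5.0, 10.0, 20.0, -20.0):
    print(f"x={x:6.1f}  sigmoid={sigmoid(x):.10f}  gradient={sigmoid_grad(x):.3e}")
```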

- Given a neuron initialized with weights w1=1.5, w2=0.5, and inputs x1=0.2, x2=−0.5, calculate the output of a ReLU neuron.

Answer: 0.05
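The arithmetic behind this answer, as a short Python sketch. The question gives no bias term, so the sketch assumes a bias of 0; `relu_neuron` is a hypothetical helper name.

```python
def relu(z: float) -> float:
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def relu_neuron(weights, inputs, bias=0.0):
    """Weighted sum of the inputs plus a bias, passed through ReLU.

    The bias defaults to 0 because the question does not specify one (assumption).
    """
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(z)


# z = 1.5 * 0.2 + 0.5 * (-0.5) = 0.30 - 0.25 = 0.05, and ReLU(0.05) = 0.05
output = relu_neuron([1.5, 0.5], [0.2, -0.5])
print(round(output, 4))  # 0.05
```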

- How does pre-training prevent overfitting in deep networks? A) It adds regularization B) It initializes the weights near local minima C) It constrains the weights to a certain region D) It eliminates the need for fine-tuning

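Answer: A) It adds regularization, C) It constrains the weights to a certain region (pre-training is still followed by fine-tuning, so D is incorrect)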

- We train a feed-forward neural network and notice that all the weights for a particular neuron are equal. What could be the possible causes of this issue? A) Weights were initialized randomly B) Weights were initialized to high values C) Weights were initialized to equal values D) Weights were initialized to zero

Answer: C) Weights were initialized to equal values, D) Weights were initialized to zero
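A small Python sketch of the symmetry effect behind this question, assuming a hypothetical 2-2-1 network (sigmoid hidden units, linear output, squared-error loss, no biases) trained with plain gradient descent on made-up data. When both hidden units start with identical weights, including all zeros, they receive identical gradients and their weights never diverge; random initialization breaks the tie.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(W1, w2, data, lr=0.1, epochs=50):
    """Gradient descent on a 2-2-1 network (hypothetical setup).

    W1[j] holds the incoming weights of hidden unit j, w2[j] its outgoing weight.
    Hidden units use a sigmoid, the output is linear, the loss is
    0.5 * (y_hat - y)**2, and biases are omitted to keep the sketch short.
    """
    W1 = [row[:] for row in W1]
    w2 = w2[:]
    for _ in range(epochs):
        for x, y in data:
            # Forward pass
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W1]
            y_hat = sum(v * hj for v, hj in zip(w2, h))
            err = y_hat - y  # dL/dy_hat
            # Backward pass: compute every gradient before updating anything
            grad_w2 = [err * hj for hj in h]
            grad_W1 = [
                [err * w2[j] * h[j] * (1.0 - h[j]) * xi for xi in x]
                for j in range(len(W1))
            ]
            w2 = [v - lr * g for v, g in zip(w2, grad_w2)]
            W1 = [
                [w - lr * g for w, g in zip(row, grow)]
                for row, grow in zip(W1, grad_W1)
            ]
    return W1, w2


# Made-up training data: (inputs, target)
data = [([0.2, -0.5], 1.0), ([1.0, 0.3], 0.0), ([-0.4, 0.8], 1.0)]

equal_W1, _ = train([[0.5, 0.5], [0.5, 0.5]], [0.5, 0.5], data)
zero_W1, _ = train([[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0], data)

rng = random.Random(0)
rand_W1, _ = train(
    [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
    [rng.uniform(-1, 1) for _ in range(2)],
    data,
)

print("equal init :", equal_W1)  # both hidden units stay identical
print("zero init  :", zero_W1)   # both hidden units stay identical
print("random init:", rand_W1)   # hidden units differ
```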

- Which of the following best describes the concept of saturation in deep learning? A) When the activation function output approaches either 0 or 1 and the gradient is close to zero. B) When the activation function output is very small and the gradient is close to zero. C) When the activation function output is very large and the gradient is close to zero. D) None of the above.

Answer: A) When the activation function output approaches either 0 or 1 and the gradient is close to zero.

- Which of the following is true about the role of unsupervised pre-training in deep learning? A) It is used to replace the need for labeled data B) It is used to initialize the weights of a deep neural network C) It is used to fine-tune a pre-trained model D) It is only useful for small datasets

Answer: B) It is used to initialize the weights of a deep neural network

- Which of the following is an advantage of unsupervised pre-training in deep learning? A) It helps in reducing overfitting B) Pre-trained models converge faster C) It improves the accuracy of the model D) It requires fewer computational resources

Answer: B) Pre-trained models converge faster

- What is the main cause of the Dead ReLU problem in deep learning? A) High variance B) High negative bias C) Overfitting D) Underfitting

Answer: B) High negative bias
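A minimal Python sketch of how a large negative bias kills a ReLU unit. The weights, the bias of −10 and the inputs are hypothetical values chosen for illustration: the pre-activation is negative for every input, so both the output and the local gradient are 0, and gradient descent never updates the unit's weights.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    """Derivative of ReLU (taken as 0 at z = 0)."""
    return 1.0 if z > 0 else 0.0


weights = [0.8, -0.3]  # hypothetical weights
bias = -10.0           # hypothetical large negative bias
inputs = [[0.2, -0.5], [1.0, 0.3], [-0.4, 0.8], [2.0, 1.5]]

for x in inputs:
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    # Each weight gradient is upstream_grad * relu_grad(z) * x_i, so it is 0 when z <= 0
    print(f"z={z:7.2f}  output={relu(z)}  local gradient={relu_grad(z)}")
```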

- What is the main cause of the symmetry breaking problem in deep learning? A) High variance B) High bias C) Overfitting D) Equal initialization of weights

Answer: D) Equal initialization of weights

Deep Learning Week 8 Nptel Assignment Answers (Apr-Jun 2023)

Q1. Which of the following functions can be used as an activation function in the output layer if we wish to predict the probabilities of n classes such that the sum of p over all n equals 1? a. Softmax b. ReLU c. Sigmoid d. Tanh

Answer: a. Softmax
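A short Python sketch of softmax showing why it fits this question: every output is positive and the outputs sum to 1, whereas a sigmoid applied to each unit independently gives no such guarantee. The logits are arbitrary example values.

```python
import math


def softmax(logits):
    """Numerically stable softmax: exp(z_i - max z) / sum_j exp(z_j - max z)."""
    m = max(logits)  # subtracting the max leaves the result unchanged but avoids overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # arbitrary scores for n = 3 classes
probs = softmax(logits)
print(probs, sum(probs))  # the probabilities sum to 1 (up to rounding)

# For contrast: independent sigmoids do not sum to 1
independent = [1.0 / (1.0 + math.exp(-z)) for z in logits]
print(independent, sum(independent))
```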

Q2. The input image has been converted into a matrix of size 256 × 256 and convolved with a kernel/filter of size 5×5 with a stride of 1 and no padding. What will be the size of the convolved matrix? a. 252×252 b. 3×3 c. 254×254 d. 256×256

Answer: a. 252×252
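The answer follows from the standard output-size relation for a convolution along one spatial dimension, O = ⌊(N + 2P − F) / S⌋ + 1, where N is the input size, F the filter size, P the padding and S the stride. A small Python sketch (`conv_output_size` is a hypothetical helper name):

```python
def conv_output_size(n: int, f: int, stride: int = 1, padding: int = 0) -> int:
    """Output size along one spatial dimension: floor((n + 2*padding - f) / stride) + 1."""
    if stride < 1:
        raise ValueError("stride must be a positive integer")
    return (n + 2 * padding - f) // stride + 1


# 256 x 256 input, 5 x 5 kernel, stride 1, no padding
side = conv_output_size(256, 5)
print(f"{side} x {side}")  # 252 x 252
```

With padding P = 2 the same call returns 256, which is why "same" padding for a 5×5 kernel uses two pixels on each side.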

Q3. What will be the range of the output if we apply a ReLU non-linearity followed by a sigmoid non-linearity after a convolution layer? a. [1,1] b. [0,1] c. [0.5,1] d. [1,-0.5]

Answer: c. [0.5,1]
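A quick numerical check in Python. ReLU maps any pre-activation to [0, ∞), and the sigmoid maps [0, ∞) to [0.5, 1): the lower end is σ(0) = 0.5, and the upper end 1 is approached but never reached mathematically (in floating point, very large inputs can round to exactly 1.0). The sample pre-activations are arbitrary.

```python
import math


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Arbitrary pre-activation values, such as a convolution layer might produce
pre_activations = [-100.0, -3.0, -0.5, 0.0, 0.5, 3.0, 30.0]
outputs = [sigmoid(relu(z)) for z in pre_activations]
print(min(outputs), max(outputs))  # the minimum is exactly 0.5
```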

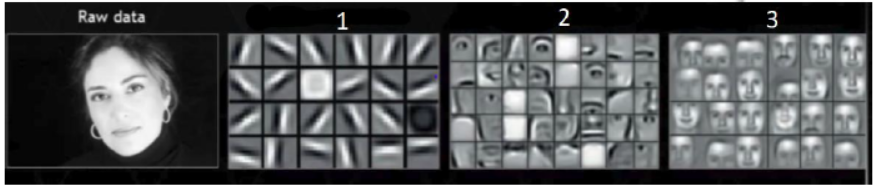

Q4. The figure below shows image of a face which is input to a convolutional neural net and the other three images shows different levels of features extracted from the network. Can you identify from the following options which one is correct?

a. Label 3: Low-level features, Label 2: High-level features, Label 1: Mid-level features b. Label 1: Low-level features, Label 3: High-level features, Label 2: Mid-level features c. Label 2: Low-level features, Label 1: High-level features, Label 3: Mid-level features d. Label 3: Low-level features, Label 1: High-level features, Label 2: Mid-level features

Answer: b. Label 1: Low-level features, Label 3: High-level features, Label 2: Mid-level features

Q5. Suppose you have 8 convolutional kernels of size 5 × 5 with no padding and stride 1 in the first layer of a convolutional neural network. You pass an input of dimension 228 × 228 × 3 through this layer. What are the dimensions of the data that the next layer will receive? a. 224x224x3 b. 224x224x8 c. 226x226x8 d. 225x225x3

Answer: b. 224x224x8
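A Python sketch of the shape bookkeeping. Each 5 × 5 kernel spans all 3 input channels (so it is effectively 5 × 5 × 3) and produces a single 2-D feature map, so the output depth equals the number of kernels rather than the number of input channels. The parameter count assumes one bias per kernel; both helper names are hypothetical.

```python
def conv_layer_output_shape(height, width, num_kernels, kernel_size, stride=1, padding=0):
    """(out_h, out_w, out_depth) of a conv layer; the depth equals the number of kernels."""
    out_h = (height + 2 * padding - kernel_size) // stride + 1
    out_w = (width + 2 * padding - kernel_size) // stride + 1
    return (out_h, out_w, num_kernels)


def conv_layer_param_count(in_channels, num_kernels, kernel_size):
    """kernel_size * kernel_size * in_channels weights per kernel, plus one bias (assumption)."""
    return num_kernels * (kernel_size * kernel_size * in_channels + 1)


shape = conv_layer_output_shape(228, 228, num_kernels=8, kernel_size=5)
print(shape)  # (224, 224, 8)
print(conv_layer_param_count(in_channels=3, num_kernels=8, kernel_size=5))  # 608
```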

Q6. What is the mathematical form of the Leaky ReLU layer? a. f(x)=max(0,x) b. f(x)=min(0,x) c. f(x)=min(0, ax), where a is a small constant d. f(x)=1(x<0)(ax)+1(x>=0)(x), where a is a small constant

Answer: d. f(x)=1(x<0)(ax)+1(x>=0)(x), where a is a small constant
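Option d written as Python, as a minimal sketch; the slope a = 0.01 is a common choice but an assumption here. Unlike plain ReLU, the gradient for negative inputs is a rather than 0, which is why Leaky ReLU is often suggested as a remedy for dead ReLU units. Option c, min(0, ax), would instead output 0 for every positive input.

```python
def leaky_relu(x: float, a: float = 0.01) -> float:
    """f(x) = a*x if x < 0 else x, where a is a small constant."""
    return a * x if x < 0 else x


def leaky_relu_grad(x: float, a: float = 0.01) -> float:
    """Derivative: a for x < 0, 1 for x > 0 (1 is used at x = 0 here)."""
    return a if x < 0 else 1.0


for x in (-5.0, -0.5, 0.0, 2.0):
    print(x, leaky_relu(x), leaky_relu_grad(x))
```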

Q7. The input image has been converted into a matrix of size 224 x 224 and convolved with a kernel/filter of size FxF with a stride of s and padding P to produce a feature map of dimension 222×222. Which among the following is true? a. F=3×3,s=1,P=1 b. F=3×3,s=0, P=1 c. F=3×3,s=1,P=0 d. F=2×2,s=0, P=0

Answer: c. F=3×3,s=1,P=0
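Checking each option against O = (N + 2P − F) / S + 1 with N = 224 and a target of O = 222, as a Python sketch. Options b and d use a stride of 0, which is not a valid convolution, so the hypothetical helper returns None for them.

```python
def conv_output_size(n, f, stride, padding):
    """Output size along one dimension, or None for an invalid stride."""
    if stride < 1:
        return None  # a stride of 0 does not define a convolution
    return (n + 2 * padding - f) // stride + 1


# (filter size F, stride s, padding P) for each answer option
options = {
    "a": (3, 1, 1),
    "b": (3, 0, 1),
    "c": (3, 1, 0),
    "d": (2, 0, 0),
}
matches = [name for name, (f, s, p) in options.items()
           if conv_output_size(224, f, s, p) == 222]
print(matches)  # ['c']
```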

Q8. Statement 1: For a transfer learning task, lower layers are more generally transferred to another task. Statement 2: For a transfer learning task, the last few layers are more generally transferred to another task. Which of the following options is correct? a. Statement 1 is correct and Statement 2 is incorrect b. Statement 1 is incorrect and Statement 2 is correct c. Both Statement 1 and Statement 2 are correct d. Both Statement 1 and Statement 2 are incorrect

Answer: a. Statement 1 is correct and Statement 2 is incorrect

Q9. Statement 1: Adding more hidden layers will solve the vanishing gradient problem for a 2-layer neural network. Statement 2: Making the network deeper will increase the chance of vanishing gradients. a. Statement 1 is correct b. Statement 2 is correct c. Neither Statement 1 nor Statement 2 is correct d. The vanishing gradient problem is independent of the number of hidden layers of the neural network.

Answer: b. Statement 2 is correct
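A rough Python illustration of Statement 2 under a deliberately simplified, hypothetical setup: a chain of scalar sigmoid units with every weight equal to 1 and no biases. By the chain rule, the gradient of the output with respect to the input is a product of one σ'(·) factor per layer, and since σ'(z) ≤ 0.25 the product shrinks at least as fast as 0.25^depth.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def chain_gradient(depth: int, x: float = 0.0, weight: float = 1.0) -> float:
    """d(output)/d(input) for `depth` stacked units a_k = sigmoid(weight * a_{k-1})."""
    grad = 1.0
    a = x
    for _ in range(depth):
        s = sigmoid(weight * a)
        grad *= weight * s * (1.0 - s)  # chain rule: multiply by this layer's local derivative
        a = s
    return grad


for depth in (1, 2, 5, 10, 20):
    print(depth, chain_gradient(depth), 0.25 ** depth)
```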

Q10. How many convolution layers are there in a LeNet-5 architecture? a. 2 b. 3 c. 4 d. 5

Answer: a. 2

More Nptel Courses: https://progiez.com/nptel

greyhatguy007/deep-learning-NPTEL

Contains My Progress, Solutions for Assignments, Quizzes in the Deep Learning Course By IIT Ropar on NPTEL

Credits : IIT Ropar & IIT Madras

- Python 100.0%

Deep Learning - IIT Ropar

Prof. Sudarshan Iyengar

Prof. Padmavati

- Computer Science and Engineering

- NOC:Deep Learning- Part 1 (Video)

- Co-ordinated by : IIT Ropar

- Available from : 2018-04-25

- Intro Video

- Biological Neuron

- From Spring to Winter of AI

- The Deep Revival

- From Cats to Convolutional Neural Networks

- Faster, higher, stronger

- The Curious Case of Sequences

- Beating humans at their own games (literally)

- The Madness (2013-)

- (Need for) Sanity

- Motivation from Biological Neurons

- McCulloch Pitts Neuron, Thresholding Logic

- Perceptrons

- Error and Error Surfaces

- Perceptron Learning Algorithm

- Proof of Convergence of Perceptron Learning Algorithm

- Deep Learning(CS7015): Linearly Separable Boolean Functions

- Deep Learning(CS7015): Representation Power of a Network of Perceptrons

- Deep Learning(CS7015): Sigmoid Neuron

- Deep Learning(CS7015): A typical Supervised Machine Learning Setup

- Deep Learning(CS7015): Learning Parameters: (Infeasible) guess work

- Deep Learning(CS7015): Learning Parameters: Gradient Descent

- Deep Learning(CS7015): Representation Power of Multilayer Network of Sigmoid Neurons

- Feedforward Neural Networks (a.k.a multilayered network of neurons)

- Learning Parameters of Feedforward Neural Networks (Intuition)

- Output functions and Loss functions

- Backpropagation (Intuition)

- Backpropagation: Computing Gradients w.r.t. the Output Units

- Backpropagation: Computing Gradients w.r.t. Hidden Units

- Backpropagation: Computing Gradients w.r.t. Parameters

- Backpropagation: Pseudo code

- Derivative of the activation function

- Information content, Entropy & cross entropy

- Recap: Learning Parameters: Guess Work, Gradient Descent

- Contour Maps

- Momentum based Gradient Descent

- Nesterov Accelerated Gradient Descent

- Stochastic And Mini-Batch Gradient Descent

- Tips for Adjusting Learning Rate and Momentum

- Line Search

- Gradient Descent with Adaptive Learning Rate

- Bias Correction in Adam

- Eigenvalues and Eigenvectors

- Linear Algebra : Basic Definitions

- Eigenvalue Decomposition

- Principal Component Analysis and its Interpretations

- PCA : Interpretation 2

- PCA : Interpretation 3

- PCA : Interpretation 3 (Contd.)

- PCA : Practical Example

- Singular Value Decomposition

- Introduction to Autoencoders

- Link between PCA and Autoencoders

- Regularization in autoencoders (Motivation)

- Denoising Autoencoders

- Sparse Autoencoders

- Contractive Autoencoders

- Bias and Variance

- Train error vs Test error

- Train error vs Test error (Recap)

- True error and Model complexity

- L2 regularization

- Dataset augmentation

- Parameter sharing and tying

- Adding Noise to the inputs

- Adding Noise to the outputs

- Early stopping

- Ensemble Methods

- A quick recap of training deep neural networks

- Unsupervised pre-training

- Better activation functions

- Better initialization strategies

- Batch Normalization

- One-hot representations of words

- Distributed Representations of words

- SVD for learning word representations

- SVD for learning word representations (Contd.)

- Continuous bag of words model

- Skip-gram model

- Skip-gram model (Contd.)

- Contrastive estimation

- Hierarchical softmax

- GloVe representations

- Evaluating word representations

- Relation between SVD and Word2Vec

- The convolution operation

- Relation between input size, output size and filter size

- Convolutional Neural Networks

- Convolutional Neural Networks (Contd.)

- CNNs (success stories on ImageNet)

- CNNs (success stories on ImageNet) (Contd.)

- Image Classification continued (GoogLeNet and ResNet)

- Visualizing patches which maximally activate a neuron

- Visualizing filters of a CNN

- Occlusion experiments

- Finding influence of input pixels using backpropagation

- Guided Backpropagation

- Optimization over images

- Create images from embeddings

- Fooling Deep Convolutional Neural Networks

- Sequence Learning Problems

- Recurrent Neural Networks

- Backpropagation through time

- The problem of Exploding and Vanishing Gradients

- Some Gory Details

- Selective Read, Selective Write, Selective Forget - The Whiteboard Analogy

- Long Short Term Memory (LSTM) and Gated Recurrent Units (GRUs)

- How LSTMs avoid the problem of vanishing gradients

- How LSTMs avoid the problem of vanishing gradients (Contd.)

- Introduction to Encoder Decoder Models

- Applications of Encoder Decoder models

- Attention Mechanism

- Attention Mechanism (Contd.)

- Attention over images

- Hierarchical Attention

- Live Session 10-04-2021

NPTEL-Deep Learning (IIT Ropar) - Assignment 11 Solution (2024). Assignment 11 for Week 11 can be accessed from the following link: https://onlinecourses.npt...

Deep Learning - IIT Ropar Week 12 Assignment 12 Answers || July 2023 NPTEL 1. https://youtu.be/NUZ8N9nldr0?si=-GTf9o7F1vftx5GA 2. Join telegram Channel -- https...

Deep Learning - IIT Ropar week 5 assignment solution. In case of any change, see the pinned comment. #deeplearning #assignment #week5 #iitropar #nptel

Deep Learning solutions for week-wise assignments (GitHub repository: DataSenseiAryan/NPTELDeepLearningAssingnment).

By following these steps, you can easily locate and use the assignment answers and solutions for the NPTEL courses provided in this repository. We hope this resource assists you in your studies!

Students can submit answers multiple times before the due date of August 10, 2022. The document is an assessment for Week 1 of the Deep Learning course offered by IIT Ropar on the NPTEL online learning platform.

In this course we will learn about the building blocks used in these Deep Learning based solutions. Specifically, we will learn about feedforward neural networks, convolutional neural networks, recurrent neural networks and attention mechanisms.
